Why the primary epochs matter essentially the most…

To grasp vital studying durations inside deep studying, it’s useful to first have a look at a associated analogy to organic methods. Inside people and animals, vital durations are outlined as instances of early post-natal (i.e., after start) improvement, throughout which impairments to studying (e.g., sensory deficits) can result in everlasting impairment of 1’s abilities [5]. For instance, imaginative and prescient impairments at a younger age — a vital interval for the event of 1’s eyesight — typically result in issues like amblyopia in grownup people.

Though I’m removed from a organic skilled (in truth, I haven’t taken a biology class since highschool), this idea of vital studying durations remains to be curiously related to deep studying, as the identical habits is exhibited inside the studying course of for neural networks. If a neural community is subjected to some impairment (e.g., solely proven blurry photographs or not regularized correctly) through the early part of studying, the ensuing community (after coaching is absolutely full) will generalize extra poorly relative to a community that by no means obtained such an early studying impairment, even given a limiteless coaching funds. Recovering from this early studying impairment shouldn’t be attainable.

In analyzing this curious habits, researchers have discovered that neural community coaching appears to progress in two phases. Throughout the first part — the vital interval that’s delicate to studying deficits — the community memorizes the information and passes by means of a bottleneck within the optimization panorama, finally discovering a extra well-behaved area inside which convergence could be achieved. From right here, the community goes by means of a forgetting course of and learns generalizable options moderately than memorizing the information. On this part, the community exists inside a area of the loss panorama during which many equally-performant native optima exist, and finally converges to one among these options.

Vital studying durations are elementary to our understanding of deep studying as a complete. Inside this overview, I’ll embrace the basic nature of the subject by first overviewing fundamental parts of the neural community studying course of. Given this background, my hope is that the ensuing overview of vital studying durations will present a extra nuanced perspective that reveals the true complexity of coaching deep networks.

Inside this part, I’ll overview elementary ideas inside the coaching of deep networks. Such fundamental concepts are pivotal to understanding each the final studying course of for neural networks and significant durations throughout studying. The overviews inside this part are fairly broad and will take time to really grasp, so I present additional hyperlinks for individuals who want extra depth.

Neural Community Coaching

Neural community coaching is a elementary facet of deep studying. Masking the complete depth of this subject is past the scope of this overview. Nonetheless, to grasp vital studying durations, one will need to have at the very least a fundamental grasp of the coaching process for neural networks.

The aim of neural community coaching is — ranging from a neural community with randomly-initialized weights — to be taught a set of parameters that permit the neural community to precisely produce a desired output given some enter. Such enter and output can take many varieties — key factors predicted on a picture, a classification of textual content, object detections in a video, and extra. Moreover, the neural community structure oftentimes adjustments relying on the kind of enter information and downside being solved. Regardless of the variance in neural community definitions and functions, nonetheless, the essential ideas of mannequin coaching stay (roughly) the identical.

To be taught this map between enter and desired output, we want a (ideally massive) coaching dataset of input-output pairs. Why? In order that we are able to:

- Make predictions on the information

- See how the mannequin’s predictions evaluate to the specified output

- Replace the mannequin’s parameters to make predictions higher

This strategy of updating the mannequin’s parameters over coaching information to raised match recognized labels is the crux of the educational course of. For deep networks, this studying course of is carried out for a number of epochs, outlined as full passes by means of the coaching dataset.

To find out the standard of mannequin predictions, we outline a loss operate. The aim of coaching is to reduce this loss operate, thus maximizing the standard of mannequin predictions over coaching information. As a result of the loss operate is usually chosen such that it’s differentiable, we are able to differentiate the loss with respect to every parameter within the mannequin and use stochastic gradient descent (SGD) to generate updates to mannequin parameters. At a excessive degree, SGD merely:

- Computes the gradient of the loss operate

- Makes use of the chain rule of calculus to compute the gradient of the loss with respect to each parameter inside the mannequin

- Subtracts the gradient, scaled by a studying charge, from every parameter

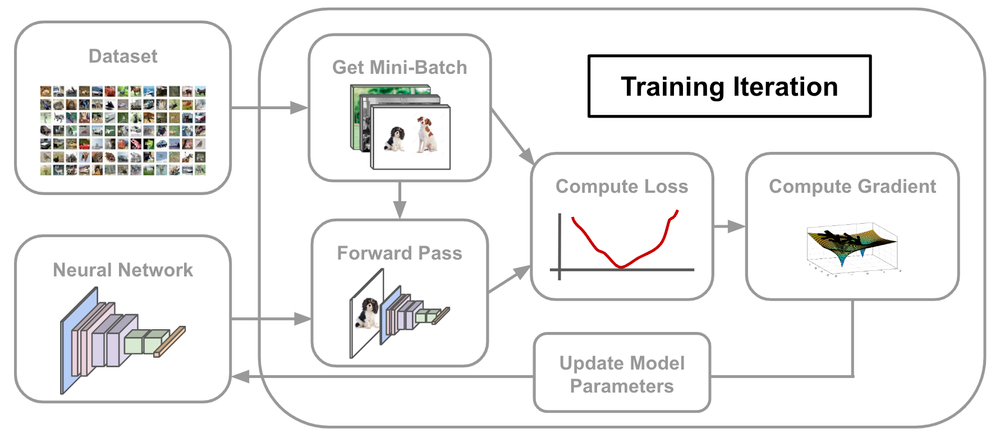

Though a bit sophisticated to grasp intimately, SGD at an intuitive degree is sort of easy — every iteration simply determines the course that mannequin parameters ought to be up to date to lower the loss and takes a small step on this course. We carry out optimization over the community’s parameters to reduce coaching loss. See beneath for a schematic depiction of this course of, the place the educational charge setting controls the dimensions of every SGD step.

In abstract, the neural community coaching course of proceeds for a number of epochs, every of which performs many iterations of SGD — usually utilizing a mini-batch of a number of information examples at a time — over the coaching information. Over the course of coaching, the neural community’s loss over the coaching dataset turns into smaller and smaller, leading to a mannequin that matches the coaching information effectively and (hopefully) generalizes — which means that it additionally performs effectively — to unseen testing information.

See the determine above for a high-level depiction of the neural community coaching course of. There are a lot of extra particulars that go into neural community coaching, however the goal of this overview is to grasp vital studying durations, to not take a deep dive into neural community coaching. Thus, I present beneath some hyperlinks to helpful articles that can be utilized to grasp key neural community coaching ideas in higher element for the reader.

- Neural Community Coaching Overview [blog] [video]

- Understanding Backpropagation [blog] [video]

- SGD (and Different Optimization Algorithms) [blog]

- Fundamental Neural Community Coaching in PyTorch [notebook]

- What’s generalization? [blog]

Regularization

Neural community coaching performs updates that reduce the loss over a coaching dataset. Nonetheless, our aim in coaching this neural community is not only to attain good efficiency over the coaching set. We additionally need the community to carry out effectively on unseen testing information when it’s deployed into the actual world. A mannequin that performs effectively on such unseen information is alleged to generalize effectively.

Minimizing loss on the coaching information doesn’t assure {that a} mannequin will generalize. For instance, a mannequin might simply “memorize” every coaching instance, thus stopping it from studying generalizable patterns that may be utilized to unseen information. To make sure good generalization, deep studying practitioners usually make the most of regularization methods. Many such methods exist, however essentially the most related for the needs of this publish are weight decay and information augmentation.

Weight decay is a method that’s generally utilized to the coaching of machine studying fashions (even past neural networks). The thought is easy. Throughout coaching, regulate your loss operate to penalize the mannequin for studying parameters with massive magnitude. Then, optimizing the loss operate turns into a joint aim of (i) minimizing loss over the coaching set and (ii) making community parameters low in magnitude. The energy of weight decay throughout coaching could be adjusted to seek out completely different tradeoffs between these two targets — it’s one other hyperparameter of the educational course of that may be tweaked/modified (much like the educational charge). To be taught extra, I recommend studying this text.

Knowledge augmentation takes many various varieties relying on the area and setting during which it’s being utilized. However, the basic concept behind information augmentation stays fixed — every time your mannequin encounters some information throughout coaching, one ought to randomly change the information slightly bit in a means that also preserves the information’s output label. Thus, your mannequin by no means sees the identical information instance twice. Quite, the information is at all times barely perturbed, stopping the mannequin from merely memorizing examples from the coaching set. Though information augmentation can take many various varieties, quite a few survey papers and explanations exist that can be utilized to raised perceive these methods.

- Knowledge Augmentation for Laptop Imaginative and prescient [blog] [survey]

- Knowledge Augmentation for Pure Language Processing [blog] [survey]

Coaching, Pre-Coaching, and Effective-Tuning

Past the essential neural community coaching framework offered inside this part, one may also regularly encounter the concepts of pre-training and fine-tuning for deep networks. All of those strategies comply with the identical studying course of outlined above — pre-training and fine-tuning are simply phrases that discuss with a selected, slightly-modified setup for a similar coaching course of.

Pre-training usually refers to coaching a mannequin from scratch (i.e., random initialization) over a really massive dataset. Though such coaching over massive pre-training datasets is computationally costly, mannequin weights realized from pre-training could be very helpful, as they comprise patterns which have been realized from raining over lots of information that will generalize elsewhere (e.g., studying how you can detect edges, understanding shapes/textures, and so on.).

Pre-trained mannequin parameters are sometimes used as a “heat begin” for performing coaching on different datasets, sometimes called the downstream or goal dataset. As an alternative of initializing mannequin parameters randomly when performing downstream coaching, we are able to set mannequin parameters equal to the pre-trained weights and fine-tune — or additional practice — these weights on the downstream dataset; see the determine above. If the pre-training dataset is sufficiently massive, such an method yields improved efficiency, because the mannequin learns ideas when pre-trained on the bigger dataset that can’t be realized utilizing the goal dataset alone.

Throughout the following overviews, I’ll talk about a number of papers that exhibit the existence of vital studying durations inside deep neural networks. The primary paper research the impression of knowledge blurring on the educational course of, whereas the next papers research studying habits with respect to mannequin regularization and information distributions throughout coaching. Regardless of taking completely different approaches, every of those works comply with an analogous method of:

- Making use of some impairment to a portion of the educational course of

- Analyzing how such a deficit impacts mannequin efficiency after coaching

Vital Studying Durations in Deep Networks [1]

Foremost Thought. This research, carried out by a combination of deep studying and neuroscience consultants, explores the connection between vital studying durations in organic and synthetic neural networks. Particularly, authors discover that introducing a deficit (e.g., blurring of photographs) to the coaching of deep neural networks, even for less than a brief time frame, can lead to degraded efficiency. Going additional, the extent of the injury to efficiency will depend on when and the way lengthy the impairment is implied — a discovering that mirrors the habits of organic methods.

For instance, if the impairment is utilized firstly of coaching, there exists a ample variety of impaired studying epochs, past which the deep community’s efficiency won’t ever recuperate. Organic neural networks exhibit comparable properties with respect to early impairments to studying. Particularly, experiencing an impairment to studying for too lengthy throughout early levels of improvement can have everlasting penalties (e.g., amblyopia). The determine above demonstrates the impression of vital studying durations in each synthetic and organic methods.

At a excessive degree, the invention inside this paper could be merely acknowledged as follows:

If one impairs a deep community’s coaching course of in a sustained trend through the early epochs of coaching, the community’s efficiency can not recuperate from this impairment

To higher perceive this phenomenon, authors quantitatively research the connectivity of the community’s weight matrices, discovering that studying is comprised of a two-step strategy of “memorizing”, then “forgetting”. Extra particularly, the community memorizes information through the early studying interval, then reorganizes/forgets such information because it begins to be taught extra environment friendly, generalizable patterns. Throughout the early memorization interval, the community navigates a bottleneck within the loss panorama — the community is sort of delicate to studying impairments because it traverses this slim panorama. Ultimately, nonetheless, the community escapes this bottleneck to find a wider valley that accommodates many high-performing options — the community is extra sturdy to studying impairments inside this area.

Methodology. Inside this work, authors practice a convolutional neural community structure on the CIFAR-10 dataset. To imitate a studying impairment, photographs inside the dataset are blurred for various numbers of epochs at completely different factors through the studying course of. Then, the impression of this impairment is measured by way of the mannequin’s take a look at accuracy after the complete coaching course of has been accomplished. Notably, the educational impairment is usually solely utilized throughout a small portion of the complete studying course of. By finding out the impression of such impairments on community efficiency, the authors uncover that:

- If the impairment shouldn’t be eliminated sufficiently early throughout coaching, then community efficiency will probably be completely broken.

- Sensitivity to such studying impairments peaks through the early interval of studying (i.e., the primary 20% of epochs).

To additional discover the properties of vital studying durations in deep networks, authors measure the Fisher info inside the mannequin’s parameters, which quantitatively describes the connectivity between community layers, or the quantity of “helpful info” contained inside community weights.

Fisher info is discovered to extend quickly throughout early coaching levels, then decay all through the rest of coaching. Such a pattern reveals that the mannequin first memorizes info through the early studying part, then slowly reorganizes or reduces this info — at the same time as classification efficiency improves — by eradicating redundancy and establishing robustness to non-relevant variability within the information. When an impairment is utilized, the Fisher Data grows and stays a lot greater than regular, even after the deficit is eliminated, revealing that the community is much less able to studying generalizable information representations on this case. See the determine beneath for an illustration of this pattern.

Findings.

- Community efficiency is most delicate to impairments through the early stage of coaching. If picture blurring shouldn’t be eliminated inside the first 25–40% of coaching epochs (i.e., the precise ratio will depend on community structure and coaching hyperparameters) for the deep community, then community efficiency will probably be completely broken.

- Excessive-level adjustments to information (e..g, vertical flipping of photographs, permutation of output labels) wouldn’t have any impression of community efficiency. Moreover, performing impaired coaching with white noise doesn’t injury community efficiency — fully sensory deprivation (i.e., this parallels darkish rearing in organic methods) shouldn’t be problematic to studying.

- Pre-training could also be detrimental to community efficiency if carried out poorly (e.g., utilizing photographs which can be too blurry).

- Fisher info is usually highest within the intermediate community layers, the place low and mid-level picture options could be most effectively processed. Impairments to the educational course of result in a focus of Fisher info within the ultimate community layer, which accommodates no decrease or mid-level options, until the deficit is eliminated sufficiently early in coaching.

Time Issues in Regularizing Deep Networks: Weight Decay and Knowledge Augmentation Have an effect on Early Studying Dynamics, Matter Little Close to Convergence [2]

Foremost Thought. The everyday view of regularization (e.g., by way of weight decay or information augmentation) posits that regularization merely alters a community’s loss panorama as to bias the educational course of in direction of ultimate options with low curvature. The ultimate, vital level of studying is clean/flat within the loss panorama, which is (arguably) indicative of fine generalization efficiency. Whether or not such an instinct is appropriate is a topic of sizzling debate — one can learn a number of fascinating articles in regards to the connection between native curvature and generalization on-line.

This paper proposes another perspective of regularization, going past these fundamental intuitions. The authors discover that eradicating regularization (i.e., weight decay and information augmentation) after the early epochs of coaching doesn’t alter community efficiency. Alternatively, if regularization is barely utilized through the later levels of coaching, it doesn’t profit community efficiency — the community performs simply as poorly as if regularization have been by no means utilized. Such outcomes collectively exhibit the existence of a vital interval for regularizing deep networks that’s indicative of ultimate efficiency; see the determine above.

Such a end result reveals that regularization doesn’t merely bias community optimization in direction of ultimate options that generalize effectively. If this instinct have been appropriate, eradicating regularization through the later coaching durations — when the community begins to converge to its ultimate answer — can be problematic. Quite, regularization is discovered to have an effect on the early studying transient, biasing the community optimization course of in direction of areas of the loss panorama that comprise quite a few options with good generalization to be explored later in coaching.

Methodology. Equally to earlier work, the impression of regularization on community efficiency is studied utilizing convolutional neural community architectures on the CIFAR-10 dataset. In every experiment, the authors apply regularization (i.e., weight decay and information augmentation) to the educational course of for the primary t epochs, then proceed coaching with out regularization. When evaluating the generalization efficiency of networks with regularization utilized for various durations firstly of coaching, authors discover that good generalization could be achieved by solely performing regularization through the earlier part of coaching.

Past these preliminary experiments, the authors carry out experiments during which regularization is barely utilized for various durations at completely different factors in coaching. Such experiments exhibit the existence of a vital interval for regularization. Particularly, if regularization is utilized solely after some later epoch in coaching, then it yields no profit by way of ultimate generalization efficiency. Such a end result mirrors the findings in [1], as the shortage of regularization imposed could be seen as a type of studying deficit that impairs community efficiency.

Findings.

- The impact of regularization on ultimate efficiency is maximal through the preliminary, “vital” coaching epochs.

- The vital interval habits of weight decay is extra pronounced than that of knowledge augmentation. Knowledge augmentation impacts community efficiency equally all through coaching, whereas weight decay is simplest when utilized throughout earlier epochs of coaching.

- Performing regularization for your complete length of coaching yields networks that obtain comparable generalization efficiency to those who solely obtain regularization through the early studying transient (i.e., first 50% of coaching epochs).

- Utilizing regularization or not throughout later coaching durations leads to completely different factors of convergence (i.e., the ultimate answer shouldn’t be equivalent), however the ensuing generalization efficiency is identical. Such a end result reveals that regularization “directs” coaching through the early interval towards areas with a number of, completely different options that carry out equally effectively.

On Heat-Beginning Neural Community Coaching [3]

Foremost Thought. In real-world machine studying methods, it’s common for brand spanking new information to reach in an incremental trend. Usually, one will start with some aggregated dataset, then over time, as new information turns into obtainable, this dataset grows and evolves. In such a case, sequences of deep studying fashions are skilled over every model of the dataset, the place every mannequin takes benefit of all the information that’s obtainable up to now. Given such a setup, nonetheless, one could start to wonder if a “heat begin” may very well be formulated, such that every mannequin on this sequence begins coaching with the parameters of the earlier mannequin, mimicking a type of pre-training that enables mannequin coaching to be extra environment friendly and high-performing.

In [3], the authors discover that merely initializing mannequin parameters with the parameters of a previously-trained mannequin shouldn’t be ample to attain good generalization efficiency. Though ultimate coaching losses are comparable, fashions that first pre-trained over a smaller subset of knowledge, then fine-tuned on the complete dataset obtain degraded take a look at accuracy compared to fashions which can be randomly initialized and skilled utilizing the complete dataset. Such a discovering mimics the habits of vital studying durations outlined in [1, 2] — the early part of coaching is totally targeted upon a smaller information subset (i.e., the model of the dataset earlier than the arrival of latest information), which leads to degraded efficiency as soon as the mannequin is uncovered to the complete dataset. Nonetheless, the authors suggest a easy warm-starting approach that can be utilized to keep away from such deteriorations in take a look at accuracy.

Methodology. Think about a setup the place new information arrives right into a system as soon as every day. In such a system, one would ideally re-train their mannequin when this new information arrives every day. Then, to reduce coaching time, a naive heat beginning method may very well be applied by initializing the brand new mannequin’s parameters with the parameters of the earlier days’ mannequin previous to coaching/fine-tuning. Apparently, nonetheless, such a heat beginning method is discovered to yield fashions that generalize poorly, revealing that pre-training over an incomplete subset of knowledge is a type of studying impairment when utilized throughout a vital interval.

To beat the impression of this impairment, the authors suggest a easy approach known as Shrink, Perturb, Repeat that:

- Shrinks mannequin weights in direction of zero.

- Provides a small quantity of noise to mannequin weights.

If such a process is utilized to the weights of a earlier mannequin skilled over an incomplete subset of knowledge, then the parameters of this mannequin can be utilized to heat begin coaching over the complete dataset with out inflicting any deterioration in generalization efficiency. Though the quantity of shrinking and scale of the noise introduce new hyperparameters to the coaching course of, this easy trick yields outstanding computational financial savings — as a result of skill to heat begin, and thus pace up, mannequin coaching — with no deterioration to community efficiency.

To elucidate the efficacy of this method, authors clarify {that a} naive heat begin methodology experiences vital imbalances between the gradients of latest and outdated information. Such imbalances are recognized to negatively impression the educational course of [4]. Nonetheless, shrinking and noising mannequin parameters previous to coaching each (i) preserves community predictions and (ii) balances the gradient contributions of latest and outdated information, thus hanging a steadiness between leveraging previously-learned info and adapting to newly-arriving information.

Findings.

- Though vital studying durations related to incomplete datasets are demonstrated in deep networks, less complicated fashions (e.g., logistic regression) don’t expertise such an impact (i.e., seemingly as a result of coaching is convex).

- The degradation in take a look at accuracy as a result of naive heat beginning can’t be alleviated by way of tuning of hyperparameters like batch dimension or studying charge.

- Solely a small quantity of coaching (i.e., a number of epochs) over an incomplete subset of knowledge is critical to break the take a look at accuracy of a mannequin skilled over the complete dataset, additional revealing that coaching over incomplete information is a type of studying impairment with connections to vital studying durations.

- Leveraging the Shrink, Perturb, Repeat technique fully eliminates the generalization hole between randomly-initialized and warm-started fashions, enabling vital computational financial savings.

Is deep studying principle lacking the mark?

The existence of vital studying durations offers start to an fascinating perspective of the educational course of for deep neural networks. Particularly, the truth that such networks can not recuperate from impairments utilized through the early epochs of coaching reveals that studying progresses in two, distinct phases, every of which have fascinating properties and habits.

- Vital Studying Interval: the memorization interval. The community should navigate a slim/bottlenecked area of the loss panorama.

- Converging to a Ultimate Resolution: the forgetting interval. After traversing a bottlenecked area of the loss panorama, the community enters a large valley of many equally-performant options to which it will possibly converge.

The vital studying interval through the early studying transient performs a key position in figuring out ultimate community efficiency. Later adjustments to the educational course of can not alleviate errors throughout this early interval.

Apparently, most theoretical work within the area of deep studying is asymptotic in nature. Put merely, which means such strategies of study focus upon the properties of the ultimate, converged answer after many iterations of coaching. No notion of vital studying durations or completely different phases of studying seem. The convincing empirical outcomes that define the existence of vital studying durations inside deep networks trace that there’s extra to deep studying than is revealed by present, asymptotic evaluation. Theoretical evaluation that actually captures the complexity of studying inside deep networks is but to return.

The takeaways from the overview could be acknowledged fairly merely:

- Neural community coaching appears to proceed in two main phases — memorization and forgetting.

- Impairing the educational course of through the first, early part shouldn’t be good.

To be a bit extra particular, studying impairments through the first part aren’t simply unhealthy… they’re seemingly catastrophic. One can not recuperate from these impairments through the second part, and the ensuing community is doomed to poor efficiency generally. The work overviewed right here has demonstrated this property in quite a few domains, displaying that the next impairments utilized through the first part of studying can degrade community generalization:

- Sufficiently blurred photographs

- Lack of regularization (i.e., information augmentation or weight decay)

- Lack of ample information

Vital studying durations present a novel perspective on neural community coaching that makes even seasoned researchers query their intuitions. This two-phase view of neural community coaching defies commonly-held beliefs and isn’t mirrored inside a lot of the theoretical evaluation of deep networks, revealing that rather more work is to be performed if we’re to collectively arrive at a extra nuanced understanding of deep studying. With this in thoughts, one could start to wonder if essentially the most elementary breakthroughs in our understanding of deep networks are but to return.

Additional Studying

- Linear Mode Connectivity and the LTH

- Pleasant Coaching: Neural Networks Can Adapt Knowledge To Make Studying Simpler

- Catastrophic Fisher Explosion: Early Part Fisher Matrix Impacts Generalization

Conclusion

Thanks a lot for studying this text. I hope that you simply loved it and realized one thing new. I’m Cameron R. Wolfe, a analysis scientist at Alegion and PhD scholar at Rice College finding out the empirical and theoretical foundations of deep studying. Should you preferred this publish, please comply with my Deep (Studying) Focus e-newsletter, the place I decide a single, bi-weekly subject in deep studying analysis, present an understanding of related background info, then overview a handful of widespread papers on the subject. You may as well try my different writings!

Bibliography

[1] Achille, Alessandro, Matteo Rovere, and Stefano Soatto. “Vital studying durations in deep networks.” Worldwide Convention on Studying Representations. 2018.

[2] Golatkar, Aditya Sharad, Alessandro Achille, and Stefano Soatto. “Time issues in regularizing deep networks: Weight decay and information augmentation have an effect on early studying dynamics, matter little close to convergence.” Advances in Neural Data Processing Methods 32 (2019).

[3] Ash, Jordan, and Ryan P. Adams. “On warm-starting neural community coaching.” Advances in Neural Data Processing Methods 33 (2020): 3884–3894.

[4] Yu, Tianhe, et al. “Gradient surgical procedure for multi-task studying.” Advances in Neural Data Processing Methods 33 (2020): 5824–5836.

[5] Eric R Kandel, James H Schwartz, Thomas M Jessell, Steven A Siegelbaum, and A James Hudspeth. Ideas of Neural Science. McGraw-Hill, New York, NY, fifth version, 2013.