Personalised generated pictures with customized kinds or objects

On 22 Aug 2022, Stability.AI introduced the public launch of Secure Diffusion, a strong latent text-to-image diffusion mannequin. The mannequin is able to producing completely different variants of pictures given any textual content or picture as enter.

Please word that the

… mannequin is being launched beneath a Artistic ML OpenRAIL-M license. The license permits for industrial and non-commercial utilization. It’s the accountability of builders to make use of the mannequin ethically. This contains the derivatives of the mannequin.

This tutorial focuses on learn how to fine-tune the embedding to create customized pictures based mostly on customized kinds or objects. As an alternative of re-training the mannequin, we will symbolize the customized type or object as new phrases within the embedding area of the mannequin. In consequence, the brand new phrase will information the creation of recent pictures in an intuitive manner.

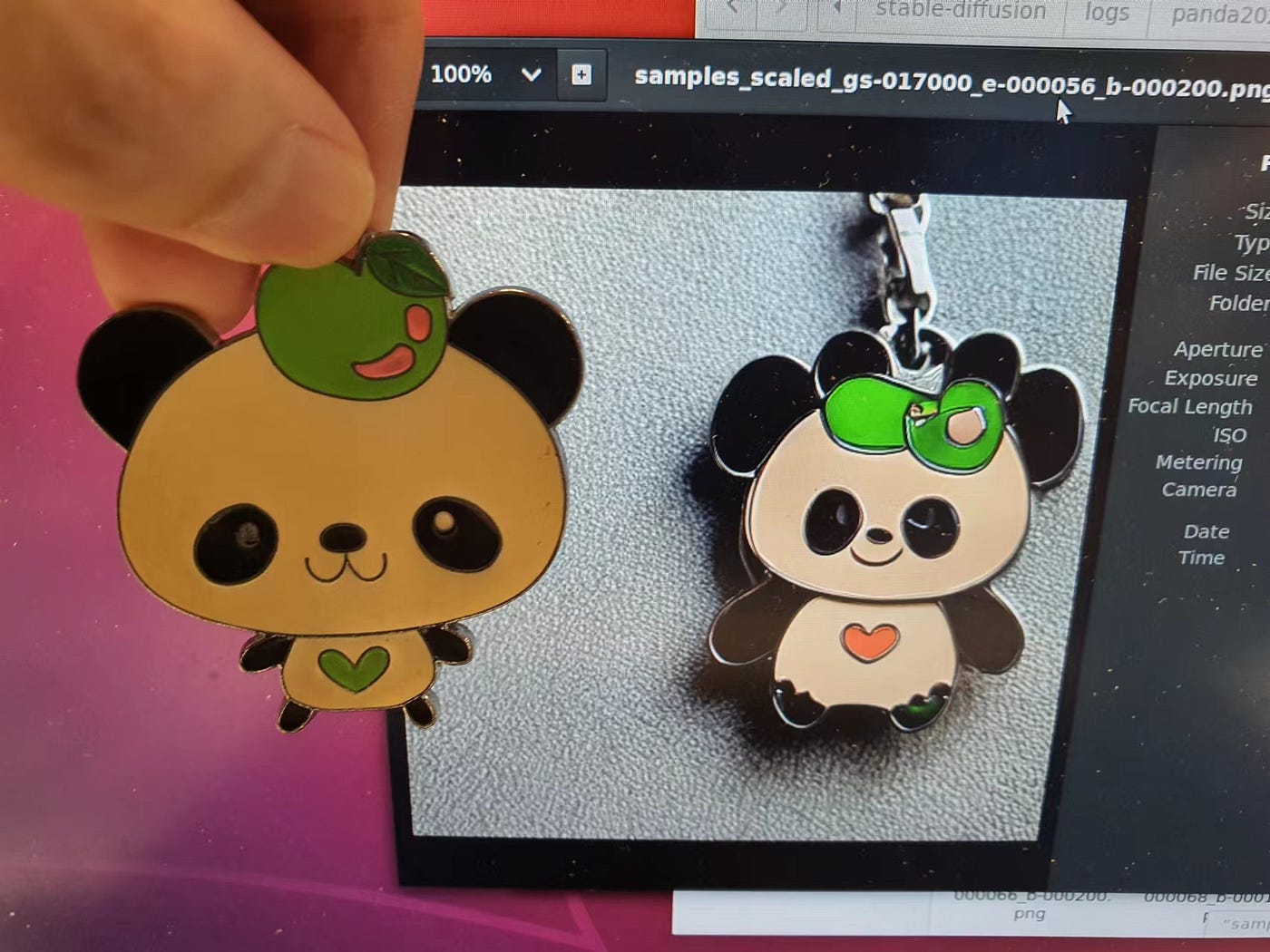

Take a look on the following comparability between an actual life object and a generated picture utilizing secure diffusion mannequin and fine-tuned embedding:

Let’s proceed to the following part for setup and set up.

At first, create a folder referred to as training_data within the root listing (stable-diffusion). We’re going to place all our coaching pictures inside it. You possibly can title them something you want however it will need to have the next properties:

- picture dimension of 512 x 512

- pictures must be upright

- about 3 to five pictures for greatest outcomes (mannequin might not converge when you use too many variety of pictures)

- pictures ought to have related contextual data. For style-based fine-tuning, the colour scheme and artwork type must be constant. For object-based fine-tuning, pictures must be of the identical object from completely different perspective (suggest to keep up the identical going through for objects that differs between back and front).

For demonstration functions, I’m going to make use of my private keychain as coaching knowledge for object-based fine-tuning.

The photographs above are taken with my cellphone digital camera. Therefore, I’ve to resize them to 512 x 512. You should use the next script as reference on learn how to resize the coaching pictures:

Head over to <root>/configs/stable-diffusion/ folder and it is best to see the next recordsdata:

- v1-finetune.yaml

- v1-finetune_style.yaml

- v1-inference.yaml

The v1-finetune.yaml file is supposed for object-based fine-tuning. For style-based fine-tuning, it is best to use v1-finetune_style.yaml because the config file.

Suggest to create a backup of the config recordsdata in case you tousled the configuration.

The default configuration requires at the least 20GB VRAM for coaching. We are able to cut back the reminiscence requirement by decreasing the batch dimension and variety of employees. Modify the next traces

knowledge:

goal: essential.DataModuleFromConfig

params:

batch_size: 2

num_workers: 16...lightning:

callbacks:

image_logger:

goal: essential.ImageLogger

params:

batch_frequency: 500

max_images: 8

to the next

orkers. Modify the next tracesknowledge:

goal: essential.DataModuleFromConfig

params:

batch_size: 1

num_workers: 8...lightning:

callbacks:

image_logger:

goal: essential.ImageLogger

params:

batch_frequency: 500

max_images: 1

batch_sizefrom 2 to 1num_workersfrom 16 to eightmax_imagesfrom 8 to 1

I’ve examined the brand new configuration to be engaged on GeForce RTX 2080.

As well as, you will have to vary the initializer_words as a part of enter to embeddings. It accepts a listing of strings:

# finetuning on a toy picture

initializer_words: ["toy"]# finetuning on a artstyle

initializer_words: ["futuristic", "painting"]

In case you encounter the next error:

<string> maps to greater than a single token. Please use one other string

You possibly can both change the string or remark out the following line of code beneath ldm/modules/embedding_manager.py.

As soon as you might be executed with it, run the next command:

Specify--no-test within the command line to disregard testing throughout fine-tuning.

On the time of this writing, you may solely do single GPU coaching and it’s good to embody a comma character on the finish of the quantity when specifying GPUs.

If every thing is setup correctly, the coaching will start and a logs folder shall be created. Inside it, you will discover a folder for every coaching that you’ve performed with the next folders:

checkpoints— comprises the output embedding and coaching checkpointsconfigs— configuration recordsdata in YAML formatpictures— pattern immediate textual content, immediate picture and generated picturestesttube— recordsdata associated to testing

The coaching will run indefinitely and it is best to examine pictures which are prefix with sample_scaled_ to determine the efficiency of the embedding. You possibly can cease the coaching as soon as it reached round 5000 to 7000 steps.

Contained in the checkpoints folder, it is best to see fairly plenty of recordsdata:

embeddings_gs-5699.pt— the embedding file at 5699 stepepoch=000010.ckpt— the coaching checkpoint at 10 epochsfinal.ckpt— the coaching checkpoint of the final epochembeddings.pt— the embedding file of the final step

The ckpt recordsdata are used to renew coaching. The pt recordsdata are the embedding recordsdata that must be used along with the secure diffusion mannequin. Merely copy the specified embedding file and place it at a comfort location for inference.

Interactive Terminal

For command line utilization, merely specify the embedding_path flag when working ./scripts/dream.py.

python ./scripts/dream.py --embedding_path /path/to/embeddings.pt

Then, use the next immediate within the interactive terminal for text2image:

dream> "a photograph of *"

Apart from that, you may carry out image2image as properly by setting the init_img flag:

dream> "waterfall within the type of *" --init_img=./path/to/drawing.png --strength=0.7

Simplified API

Alternatively, you should utilize the ldm.simplet2i module to run inference programmatically. It comes with prompt2image perform as a single endpoint for text2image and image2image duties.

Be aware that outdated model comprises the next capabilities which have been deprecated however will nonetheless work:

Kindly consult with the simplet2i script for extra data on the enter arguments.

You’ll find the whole inference code on the following gist for reference:

Be aware that outcomes will not be good more often than not. The thought is to generate giant variety of pictures and use the one that’s appropriate to your use circumstances. Let take a look on the consequence that I bought after fine-tuning the embedding.

Furthermore, I’ve examined image2image duties and procure the next output:

The discharge of secure diffusion gives nice potentials and alternatives to builders around the globe. It’s undoubtedly going to have a big effect on many of the current industries on what may be achieved with the ability of AI.

Inside a span of every week, I’ve seen builders around the globe working collectively in constructing their very own open-sourced tasks, starting from internet UI to animation.

I’m wanting ahead to extra nice instruments and state-of-the-art fashions from the open-source communities.

Thanks for studying this piece. Have an incredible day forward!