The favored framework has built-in assist for this nice approach that improves generalization, accuracy, and inference pace

I all the time thought that you just’d want separate modules for pruning however I not too long ago found that PyTorch has built-in assist for it. The documentation is a bit missing although … so I made a decision to pen this text and present you some suggestions and tips!

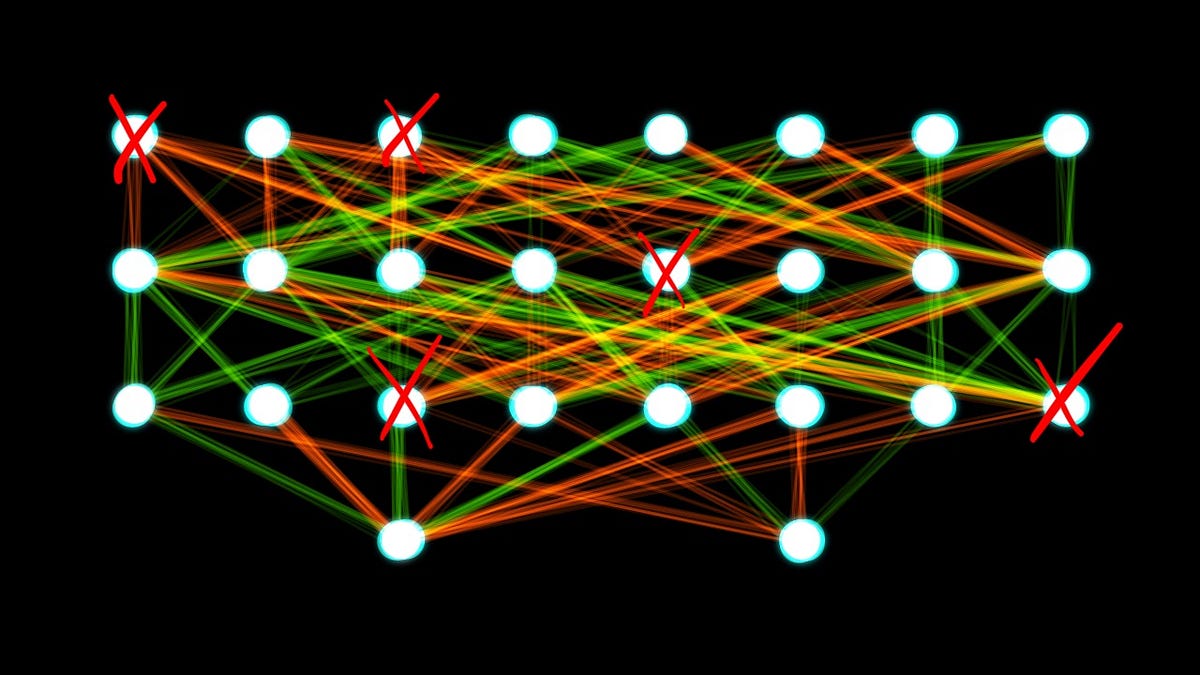

Pruning is a method that removes weights or biases (parameters) from a neural community. If executed proper, this reduces the reminiscence footprint of the mannequin, improves generalization, speeds-up inference, and permits coaching/fine-tuning with fewer samples. After all, you can’t simply randomly take away parameters out of your community and anticipate it to carry out higher — however you’ll be able to decide which parameters are pointless in your goal and take away these. Evidently, you must also watch what number of parameters you take away: should you take away too many your community will carry out a lot worse or could develop into completely defunct should you block the gradient movement (e.g. by pruning all parameters from a connecting layer).

Notice that it’s fairly widespread to prune after coaching, typically, additionally it is potential to use pruning earlier than or throughout coaching.

Within the earlier paragraph, I deliberately used the phrase “pointless” to confer with prunable parameters. However what makes a parameter pointless? That is fairly an advanced query and remains to be a analysis discipline at this time. Amongst the most well-liked strategies for locating prunable weights (pruning criterion) are:

- Random*: Merely prune random parameters.

- Magnitude*: Prune the parameters with the least weight (e.g. their L2 norm).

- Gradient: Prune parameters primarily based on the amassed gradient (requires a backward go and subsequently knowledge).

- Info: Leverage different data reminiscent of high-order curvature data for pruning.

- Realized: After all, we will additionally prepare our community to prune itself (very costly, requires coaching)!

*PyTorch has built-in assist for random-, magnitude-based pruning. Each strategies are surprisingly efficient given how simple it’s to compute them, and that they are often computed with none knowledge.

Unstructured Pruning

Unstructured Puning refers to pruning particular person atoms of parameters. E.g. particular person weights in linear layers, particular person filter pixels in convolution layers, some scaling floats in your customized layer, and so forth. The purpose is you prune parameters with out their respective construction, therefore the identify unstructured pruning.

Structured Pruning

As an alternative choice to unstructured pruning, structured pruning removes whole buildings of parameters. This doesn’t imply that it needs to be a whole parameter, however you transcend eradicating particular person atoms e.g. in linear weights you’d drop whole rows or columns, or, in convolution layers whole filters (I level the reader to [1] the place we’ve proven that many publicly obtainable fashions comprise a bunch of degenerated filters that ought to be prunable).

In apply, you’ll be able to obtain a lot larger pruning ratios with unstructured pruning, however it in all probability received’t pace up your mannequin, as you continue to need to do all computations. Structured pruning can e.g. prune whole convolution channels and subsequently considerably decrease the variety of matrix multiplications you want. At present, there’s a development to assist sparse tensors in each soft- and {hardware}, so sooner or later unstructured pruning could develop into extremely related.

Native vs. International Pruning

Pruning can occur per layer (native) or over all a number of/all layers (international).

How does pruning work in PyTorch?

Pruning is applied in torch.nn.utils.prune.

Curiously, PyTorch goes past merely setting pruned parameters to zero. PyTorch copies the parameter <param> right into a parameter referred to as <param>_original and creates a buffer that shops the pruning masks <param>_mask. It additionally creates a module-level forward_pre_hook (a callback that’s invoked earlier than a ahead go) that applies the pruning masks to the unique weight.

This has the next penalties: Printing <param> will print the parameter with the utilized masks, however itemizing it through <module>.parameters() or <module>.named_parameters() will present the unique, unpruned parameter.

This has the next benefits: It’s potential to find out if a module has been pruned, and unique parameters are accessible which permits experimentation with varied pruning strategies. But, it comes at value of some reminiscence overhead.

Which PyTorch variations are supported?

You’re good you probably have model 1.4.0 or later.

The supported choices are a bit complicated and the API is barely inconsistent so I made this overview, that can hopefully clear issues up:

Native Unstructured Pruning

The next capabilities can be found for native unstructured pruning:

torch.nn.utils.prune.random_unstructured(module, identify, quantity)

torch.nn.utils.prune.l1_unstructured(module, identify, quantity, importance_scores=None)

Simply name the capabilities above and go your layer/module as module and the identify of the parameter to prune for identify. Usually this will probably be weight or bias. The quantity parameter specifies how a lot to prune. You’ll be able to go a float between 0 and 1 for a ratio, or an integer to outline an absolute variety of parameters. Bear in mind that these instructions might be utilized iteratively and the quantity is all the time relative to the variety of remaining (i.e. not pruned) parameters. So, should you iteratively prune a parameter with 12 entries with quantity=0.5 you’ll find yourself with 6 parameters after the primary spherical, then 3, …

Right here is an instance that prunes 40% of a convolution layer weight. Notice how 4 parameters are set to zero.

>>> import torch.nn.utils.prune as prune

>>> conv = torch.nn.Conv2d(1, 1, 3)

>>> prune.random_unstructured(conv, identify="weight", quantity=4)

>>> conv.weight

tensor([[[[-0.0000, 0.0000, 0.2603],

[-0.3278, 0.0000, 0.0280],

[-0.0361, 0.1409, 0.0000]]]], grad_fn=<MulBackward0>)

Different norms than L1 aren’t supported since we function on atoms.

International Unstructured Pruning

In order for you international unstructured pruning the command is barely completely different:

torch.nn.utils.prune.global_unstructured(parameters, pruning_method, importance_scores=None, **kwargs)

Right here we have to go parameters as a listing of tuples that maintain the module and their parameter identify to prune. pruning_method=prune.L1Unstucturedappears to be the one supported choice. Right here is an instance from the PyTorch docs:

mannequin = ...parameters = (

(mannequin.conv1, "weight"),

(mannequin.conv2, "weight"),

(mannequin.fc1, "weight"),

(mannequin.fc2, "weight"),

(mannequin.fc3, "weight"),

)prune.global_unstructured(

parameters,

pruning_method=prune.L1Unstructured,

quantity=0.2,

)

If you wish to prune all weights of a particular layer sort (e.g. a convolution layer), you’ll be able to routinely accumulate them as follows:

mannequin = ...parameters_to_prune = [

(module, "weight") for module in filter(lambda m: type(m) == torch.nn.Conv2d, model.modules())

]prune.global_unstructured(

parameters_to_prune,

pruning_method=prune.L1Unstructured,

quantity=0.2,

)

After all, you’ll be able to modify the filter to your wants.

Native Structured Pruning

PyTorch solely helps native structured pruning:

torch.nn.utils.prune.ln_structured(module, identify, quantity, n, dim, importance_scores=None)

torch.nn.utils.prune.random_structured(module, identify, quantity, dim)

The instructions are pretty much like the native unstructured ones with the one distinction that you’ll have to outline dim parameter. This may outline the axis of your construction. Here’s a helper for the related dimensions:

For torch.nn.Linear

- Disconnect all connections to 1 enter:

1 - Disconnect one neuron:

0

For torch.nn.Conv2d:

- Channels (stack of kernels that output one feature-map):

0 - Neurons (stack of kernels that course of the identical enter feature-map in numerous channels):

1 - Filter kernels: not supported (would require multi-axis [2, 3] or prior reshape, which isn’t that simple both)

Notice that opposite to unstructured pruning you’ll be able to truly outline what norm to make use of with the n parameter. You’ll find a listing of supported ones right here: https://pytorch.org/docs/secure/generated/torch.norm.html#torch.norm.

Right here is an instance that prunes a whole channel(this corresponds to 2 kernels in our instance) primarily based on the L2-norm:

>>> conv = torch.nn.Conv2d(2, 3, 3)

>>> prune.ln_structured(conv, identify="weight", quantity=1, n=2, dim=0)

>>> conv.weight

tensor([[[[ 0.0000, 0.0000, 0.0000],

[ 0.0000, 0.0000, 0.0000],

[ 0.0000, -0.0000, -0.0000]], [[ 0.0000, -0.0000, 0.0000],

[ 0.0000, 0.0000, 0.0000],

[ 0.0000, 0.0000, -0.0000]]], [[[ 0.2284, 0.1574, -0.0215],

[-0.1096, 0.0952, -0.2251],

[-0.0805, -0.0173, 0.1648]], [[-0.1104, 0.2012, -0.2088],

[-0.1687, 0.0815, 0.1644],

[-0.1963, 0.0762, -0.0722]]], [[[-0.1055, -0.1729, 0.2109],

[ 0.1997, 0.0158, -0.2311],

[-0.1218, -0.1244, 0.2313]], [[-0.0159, -0.0298, 0.1097],

[ 0.0617, -0.0955, 0.1564],

[ 0.2337, 0.1703, 0.0744]]]], grad_fn=<MulBackward0>)

Notice how the output adjustments if we prune a neuron as an alternative:

>>> conv = torch.nn.Conv2d(2, 3, 3)

>>> prune.ln_structured(conv, identify="weight", quantity=1, n=2, dim=1)

>>> conv.weight

tensor([[[[ 0.0000, -0.0000, -0.0000],

[-0.0000, -0.0000, 0.0000],

[-0.0000, 0.0000, 0.0000]], [[-0.1013, 0.1255, 0.0151],

[-0.1110, 0.2281, 0.0783],

[-0.0215, 0.1412, -0.1201]]], [[[ 0.0000, -0.0000, 0.0000],

[ 0.0000, -0.0000, 0.0000],

[ 0.0000, -0.0000, 0.0000]], [[ 0.0878, 0.2104, 0.0414],

[ 0.0724, -0.1888, 0.1855],

[ 0.2354, 0.1313, -0.1799]]], [[[-0.0000, -0.0000, -0.0000],

[-0.0000, -0.0000, 0.0000],

[ 0.0000, -0.0000, 0.0000]], [[ 0.1891, 0.0992, 0.1736],

[ 0.0451, 0.0173, 0.0677],

[ 0.2121, 0.1194, -0.1031]]]], grad_fn=<MulBackward0>)

Customized importance-based Pruning

You might have seen that among the earlier capabilities assist the importance_score argument:

torch.nn.utils.prune.l1_unstructured(module, identify, quantity, importance_scores=None)

torch.nn.utils.prune.ln_structured(module, identify, quantity, n, dim, importance_scores=None)

torch.nn.utils.prune.global_unstructured(parameters, pruning_method, importance_scores=None, **kwargs)

You’ll be able to go a tensor (or checklist of tensors for global_unstructured) to these capabilities of the identical form as your parameter together with your customized entries of pruning data. This serves as a alternative for the magnitude and gives you an choice to switch it with any customized scoring.

For instance, let’s implement a easy pruning method that eliminates the primary 5 entries in a linear layers weight tensor:

>>> linear = torch.nn.Linear(3, 3)

>>> prune.l1_unstructured(linear, identify="weight", quantity=5, importance_scores=torch.arange(9).view(3, 3))

>>> linear.weight

tensor([[-0.0000, 0.0000, -0.0000],

[ 0.0000, -0.0000, -0.1293],

[ 0.1886, 0.4086, -0.1588]], grad_fn=<MulBackward0>)

Helper capabilities

PyTorch additionally affords a few helper capabilities. The primary I wish to present is:

torch.nn.utils.prune.is_pruned(module)

As you could have guessed, this operate means that you can examine if any parameter in a module has been pruned. It returns True if a module was pruned. Nevertheless, you can’t specify which parameter to verify.

The final operate I wish to present you is:

torch.nn.utils.prune.take away(module, identify)

Naively, chances are you’ll suppose that this undoes the pruning however it does fairly the alternative: It applies the pruning by eradicating the masks, the unique parameter, and the ahead hook. Lastly, it writes the pruned tensor into the parameter. Consequently, calling torch.nn.utils.prune.is_pruned(module) on such a module would return False.

Conclusion

PyTorch affords a built-in approach to apply unstructured or structured pruning to tensors randomly, by magnitude, or by a customized metric. Nevertheless, the API is a bit complicated and the documentation might be improved.