Learning how to use Lambda functions written in Rust is a particularly useful skill for developers right now. AWS Lambda offers an on-demand computing service with no need to deploy or maintain a long-running server, eliminating the need to install and upgrade an operating system or patch security vulnerabilities.

In this article, we’ll learn how to create and deploy a Lambda function written in Rust. All of the code for this project is available on my GitHub for your convenience.

Cold starts and Rust

One issue that has persisted with Lambda over the years is the cold start problem.

A function cannot spin up instantly; it usually requires a couple of seconds or longer to return a response. Different programming languages have different performance characteristics. Some languages, like Java, have much longer cold start times because of dependencies such as the JVM.

Rust, on the other hand, has extremely small cold starts and has become a programming language of choice for developers looking for significant performance gains by avoiding longer cold starts.

Let’s jump in.

Getting started

To begin, we’ll need to install cargo with rustup, and then cargo-lambda. I’ll be using Homebrew because I’m on a Mac, but you can find installation instructions for your own machine in the rustup and cargo-lambda documentation.

curl https://sh.rustup.rs -sSf | sh
brew tap cargo-lambda/cargo-lambda
brew install cargo-lambda

Generate boilerplate with the new command

First, let’s create a new Rust package with the new subcommand. If you include the --http flag, it will automatically generate a project compatible with Lambda function URLs.

cargo lambda new --http rustrocket
cd rustrocket

This generates a basic skeleton to start writing AWS Lambda functions with Rust. Our project structure contains the following files:

.
├── Cargo.toml
└── src
    └── main.rs

main.rs under the src directory holds our Lambda code written in Rust.

We’ll look at that code in a moment, but let’s first look at the only other file, Cargo.toml.

Cargo manifest file

Cargo.toml is the Rust manifest file: a configuration file, written in TOML, for your package.

# Cargo.toml

[package]
name = "rustrocket"
version = "0.1.0"
edition = "2021"

[dependencies]
lambda_http = "0.6.1"
lambda_runtime = "0.6.1"
tokio = { version = "1", features = ["macros"] }
tracing = { version = "0.1", features = ["log"] }
tracing-subscriber = { version = "0.3", default-features = false, features = ["fmt"] }

- The first section in all Cargo.toml files defines a package. The name and version fields are the only required pieces of information; an extensive list of additional fields can be found in the official package documentation
- The second section specifies our dependencies, along with the version to install

We’ll only need to add one extra dependency (serde) for the example we’ll create in this article.

Serde is a framework for serializing and deserializing Rust data structures. The current version is 1.0.145 as of the time of writing this article, but you can install the latest version with the following command:

cargo add serde

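Now our [dependencies] section includes serde = "1.0.145". The handler we write later uses serde’s derive macros; if the compiler cannot find them in your setup, enabling the feature explicitly with cargo add serde --features derive should resolve it.

Start a development server with the watch command

Now, let’s boot up a development server with the watch subcommand to emulate interactions with the AWS Lambda control plane.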

cargo lambda watch

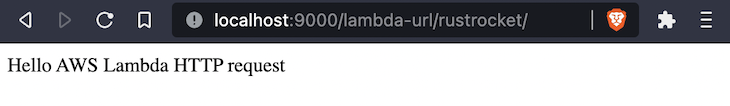

Since the emulator server includes support for Lambda function URLs out of the box, you can open localhost:9000/lambda-url/rustrocket to invoke your function and view it in your browser.

Open main.rs in the src directory to see the code for this function.

// src/main.rs

use lambda_http::{run, service_fn, Body, Error, Request, RequestExt, Response};

async fn function_handler(event: Request) -> Result<Response<Body>, Error> {
    // Build a 200 response with an HTML content type and a short message body
    let resp = Response::builder()
        .status(200)
        .header("content-type", "text/html")
        .body("Hello AWS Lambda HTTP request".into())
        .map_err(Box::new)?;

    Ok(resp)
}

#[tokio::main]
async fn main() -> Result<(), Error> {
    // Log at INFO level, without the module target or timestamps
    tracing_subscriber::fmt()
        .with_max_level(tracing::Level::INFO)
        .with_target(false)
        .without_time()
        .init();

    // Hand the handler to the Lambda runtime
    run(service_fn(function_handler)).await
}

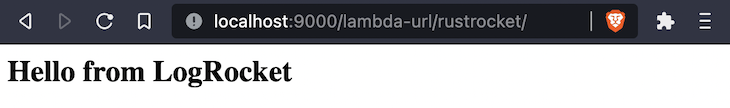

Now, we’ll make a change to the text in the body by including a new message. Since the header is already set to text/html, we can wrap our message in HTML tags.

Our terminal has also displayed a few warnings, which can be fixed with the following changes:

- Rename event to _event
- Remove the unused RequestExt from imports

// src/main.rs

use lambda_http::{run, service_fn, Body, Error, Request, Response};

async fn function_handler(_event: Request) -> Result<Response<Body>, Error> {
    // The leading underscore marks the request as intentionally unused
    let resp = Response::builder()
        .status(200)
        .header("content-type", "text/html")
        .body("<h2>Hello from LogRocket</h2>".into())
        .map_err(Box::new)?;

    Ok(resp)
}

The main() function will be unaltered. Return to your browser to see the change.
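Because the body is served as text/html, any text that does not come from a fixed string should be escaped before it is wrapped in tags. The following is a minimal, standard-library-only sketch of that idea; escape_html and heading are hypothetical helper names, not part of lambda_http.

```rust
// Hypothetical helper: replace the characters that have special meaning in HTML.
fn escape_html(text: &str) -> String {
    let mut out = String::with_capacity(text.len());
    for c in text.chars() {
        match c {
            '&' => out.push_str("&amp;"),
            '<' => out.push_str("&lt;"),
            '>' => out.push_str("&gt;"),
            '"' => out.push_str("&quot;"),
            '\'' => out.push_str("&#39;"),
            other => out.push(other),
        }
    }
    out
}

// Hypothetical helper: wrap an escaped message in the <h2> tags used above.
fn heading(message: &str) -> String {
    format!("<h2>{}</h2>", escape_html(message))
}

fn main() {
    // The resulting string could be passed to .body(...) in the handler.
    println!("{}", heading("Hello from LogRocket"));
}
```

For the fixed message used in this article the escaping changes nothing; it only matters once the message contains user-supplied text.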

Include additional information in our project

Right now, we have a single function that can be invoked to receive a response, but this Lambda is not able to take specific input from a user, and our project cannot be used to deploy and invoke multiple functions.

To do this, we’ll create a new file, hey.rs, inside a new directory called bin.

mkdir src/bin
echo > src/bin/hey.rs

The bin directory gives us the ability to include multiple function handlers within a single project and invoke them individually.

We also need to add a bin section at the bottom of our Cargo.toml file containing the name of our new handler, like so:

# Cargo.toml
[[bin]]
name = "hey"
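The name hey matters because it is what we pass to the invoke and deploy subcommands later. As a rough sketch of Cargo’s default naming convention, src/main.rs builds a binary named after the package and each src/bin/<name>.rs builds a binary called <name>. The function binary_name below is a hypothetical illustration, not a Cargo API, and it ignores other layouts Cargo supports, such as src/bin/<name>/main.rs and explicit path settings in [[bin]].

```rust
use std::path::Path;

// Hypothetical helper: map a source file to the binary name Cargo would
// infer for it under the default conventions described above.
fn binary_name(package: &str, source: &str) -> Option<String> {
    let path = Path::new(source);

    // src/main.rs is the package's default binary
    if path == Path::new("src/main.rs") {
        return Some(package.to_string());
    }

    // src/bin/<name>.rs becomes a binary called <name>
    let is_rust_file = path.extension().and_then(|ext| ext.to_str()) == Some("rs");
    if path.parent()? == Path::new("src/bin") && is_rust_file {
        return Some(path.file_stem()?.to_str()?.to_string());
    }

    None
}

fn main() {
    for source in ["src/main.rs", "src/bin/hey.rs"] {
        println!("{} -> {:?}", source, binary_name("rustrocket", source));
    }
}
```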

Include the following code in hey.rs. The handler transforms the event payload into a Response, which it returns wrapped in Ok() to the runtime started in main():

// src/bin/hey.rs

use lambda_runtime::{service_fn, Error, LambdaEvent};
use serde::{Deserialize, Serialize};

// Shape of the JSON payload sent to the function
#[derive(Deserialize)]
struct Request {
    command: String,
}

// Shape of the JSON returned by the function
#[derive(Serialize)]
struct Response {
    req_id: String,
    msg: String,
}

pub(crate) async fn my_handler(event: LambdaEvent<Request>) -> Result<Response, Error> {
    // Echo the command back along with the request ID from the invocation context
    let command = event.payload.command;

    let resp = Response {
        req_id: event.context.request_id,
        msg: command,
    };

    Ok(resp)
}

#[tokio::main]
async fn main() -> Result<(), Error> {
    tracing_subscriber::fmt()
        .with_max_level(tracing::Level::INFO)
        .without_time()
        .init();

    let func = service_fn(my_handler);
    lambda_runtime::run(func).await?;

    Ok(())
}
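One way to check the handler’s logic without starting the runtime is to keep the transformation in a plain function. The following is a minimal sketch that compiles with only the standard library, so the serde derives are left out; build_response is a hypothetical helper name that does not appear in the project above.

```rust
// Plain structs mirroring the shapes used by the handler. The real handler
// derives serde's Deserialize and Serialize on them; that is omitted here.
#[derive(Debug, PartialEq)]
struct Request {
    command: String,
}

#[derive(Debug, PartialEq)]
struct Response {
    req_id: String,
    msg: String,
}

// Hypothetical helper: the pure part of the handler. It takes the payload and
// the request ID from the invocation context and builds the response.
fn build_response(request: Request, request_id: &str) -> Response {
    Response {
        req_id: request_id.to_string(),
        msg: request.command,
    }
}

fn main() {
    let request = Request {
        command: "hey from logrocket".to_string(),
    };
    // "example-request-id" is a placeholder; Lambda supplies the real value.
    let response = build_response(request, "example-request-id");
    println!("{:?}", response);
}
```

With this split, my_handler would only need to unpack the LambdaEvent and call the helper, and the helper can be covered by ordinary unit tests.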

Send a request with the invoke command

Send requests to the control plane emulator with the invoke subcommand.

cargo lambda invoke hey \
  --data-ascii '{"command": "hey from logrocket"}'

Your terminal will respond with this:

{
  "req_id":"64d4b99a-1775-41d2-afc4-fbdb36c4502c",
  "msg":"hey from logrocket"
}
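When the command text comes from a script rather than being typed by hand, the payload passed to --data-ascii still has to be valid JSON. The following standard-library-only sketch shows one way to build it; escape_json and command_payload are hypothetical helper names, and in a real project a JSON library would normally do this work.

```rust
// Hypothetical helper: escape a string for use inside a JSON string literal.
fn escape_json(input: &str) -> String {
    let mut out = String::with_capacity(input.len());
    for c in input.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            '\n' => out.push_str("\\n"),
            '\r' => out.push_str("\\r"),
            '\t' => out.push_str("\\t"),
            // Remaining control characters must be written as \u escapes
            c if (c as u32) < 0x20 => out.push_str(&format!("\\u{:04x}", c as u32)),
            c => out.push(c),
        }
    }
    out
}

// Hypothetical helper: build the payload shape expected by the Request struct.
fn command_payload(command: &str) -> String {
    format!("{{\"command\": \"{}\"}}", escape_json(command))
}

fn main() {
    println!("{}", command_payload("hey from logrocket"));
}
```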

Deploy the project to production

Up to this point, we’ve only been running our Lambda handler locally on our own machines. To get this handler on AWS, we’ll need to build and deploy the project’s artifacts.

Bundle function artifacts with the build command

Compile your function natively with the build subcommand. This produces artifacts that can be uploaded to AWS Lambda.

cargo lambda build

Create IAM role

AWS IAM (Identity and Access Management) is a service for creating, applying, and managing roles and permissions on AWS resources.

Its complexity and scope of features have motivated teams such as Amplify to develop a large collection of tools around IAM. This includes libraries and SDKs with new abstractions built for the express purpose of simplifying the developer experience of working with services like IAM and Cognito.

In this example, we only need to create a single, read-only role, which we’ll put in a file called rust-role.json.

echo > rust-role.json

We’ll use the AWS CLI and send a JSON definition of the following role. Include the following code in rust-role.json:

{
  "Version": "2012-10-17",
  "Statement": [{
    "Effect": "Allow",
    "Principal": {
      "Service": "lambda.amazonaws.com"
    },
    "Action": "sts:AssumeRole"
  }]
}

To do this, first install the AWS CLI, which provides an extensive list of commands for working with IAM. The only command we’ll need is the create-role command, along with two options:

- Include the IAM policy for the --assume-role-policy-document option
- Include the name rust-role for the --role-name option

aws iam create-role --role-name rust-role --assume-role-policy-document file://rust-role.json

This will output the following JSON:

{
  "Role": {
    "Path": "/",
    "RoleName": "rust-role",
    "RoleId": "AROARZ5VR5ZCOYN4Z7TLJ",
    "Arn": "arn:aws:iam::124397940292:role/rust-role",
    "CreateDate": "2022-09-15T22:15:24+00:00",
    "AssumeRolePolicyDocument": {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {
            "Service": "lambda.amazonaws.com"
          },
          "Action": "sts:AssumeRole"
        }
      ]
    }
  }
}

Copy the value for Arn, which in my case is arn:aws:iam::124397940292:role/rust-role, and include it in the next command for the --iam-role flag.
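An ARN is a colon-separated string of the form arn:partition:service:region:account-id:resource. If you script this step, a quick sanity check on the copied value can catch a truncated paste. The following is a minimal sketch using only the standard library; the Arn struct and parse_arn function are hypothetical names, not part of any AWS SDK.

```rust
// Hypothetical type holding the six colon-separated parts of an ARN.
#[derive(Debug, PartialEq)]
struct Arn<'a> {
    partition: &'a str,
    service: &'a str,
    region: &'a str,
    account_id: &'a str,
    resource: &'a str,
}

// Hypothetical helper: split an ARN into its parts, or return None if it
// does not start with "arn" or has too few fields.
fn parse_arn(arn: &str) -> Option<Arn<'_>> {
    // Limit to 6 pieces so that colons inside the resource part are preserved
    let mut parts = arn.splitn(6, ':');
    if parts.next()? != "arn" {
        return None;
    }
    Some(Arn {
        partition: parts.next()?,
        service: parts.next()?,
        region: parts.next()?,
        account_id: parts.next()?,
        resource: parts.next()?,
    })
}

fn main() {
    let arn = "arn:aws:iam::124397940292:role/rust-role";
    match parse_arn(arn) {
        // IAM is a global service, so the region field of a role ARN is empty
        Some(parsed) => println!("service={} resource={}", parsed.service, parsed.resource),
        None => println!("not a valid ARN: {}", arn),
    }
}
```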

Upload to AWS with the deploy command

Upload your functions to AWS Lambda with the deploy subcommand. Cargo Lambda will try to create an execution role with Lambda’s default service role policy, AWSLambdaBasicExecutionRole.

If these commands fail and display permissions errors, then you will need to include the AWS IAM role from the previous section by adding --iam-role FULL_ROLE_ARN.

cargo lambda deploy --enable-function-url rustrocket
cargo lambda deploy hey

If everything worked, you’ll get an Arn and URL for the rustrocket function and an Arn for the hey function, as shown below.

function arn: arn:aws:lambda:us-east-1:124397940292:function:rustrocket
function url: https://bxzpdr7e3cvanutvreecyvlvfu0grvsk.lambda-url.us-east-1.on.aws/

Open the function URL to see your function.

For the hey handler, we’ll use the invoke command again, but this time with the --remote flag for remote functions.

cargo lambda invoke \
  --remote \
  --data-ascii '{"command": "hey from logrocket"}' \
  arn:aws:lambda:us-east-1:124397940292:function:hey

Other deployment options

Up to this point, we’ve only used Cargo Lambda to work with our Lambda functions, but there are at least a dozen different ways to deploy functions to AWS Lambda!

These numerous methods vary widely in approach, but can be roughly categorized into one of three groups:

- Other open source projects like Cargo Lambda, maintained by individuals or groups of people, potentially AWS employees themselves or AWS consultants working with clients looking to build on AWS

- Tools built by companies who want to compete with AWS by offering a nicer, more streamlined developer experience

- Services created and promoted by teams internally at AWS who are building new products that seek to improve the lives of current AWS developers while also bringing in newer and less experienced engineers

For instructions on using tools from the second and third categories, see the section called Deploying the Binary to AWS Lambda in the aws-lambda-rust-runtime GitHub repository.

Final thoughts

The future of Rust is bright at AWS. They are investing heavily in the team and foundation supporting the project. There are also various AWS services that are now starting to incorporate Rust.

To learn more about running Rust on AWS in general, check out the AWS SDK for Rust. To go deeper into using Rust on Lambda, visit the Rust Runtime repository on GitHub.

I hope this article served as a useful introduction to running Rust on Lambda. Please leave your comments below about your own experiences!
