Since the official launch of .NET 6, rumors began about .NET 7 and the new features it would bring. Today .NET 7 is (almost) a reality, and Microsoft now seems to be confirming what many of those rumors said: we are looking at the fastest .NET version ever, .NET 7. Let's take a look at the latest performance improvements introduced by Microsoft.

Improved System.Reflection Performance - 75% Faster

Starting with the performance improvements among the new .NET 7 features, the first one is the improvement of the System.Reflection namespace. The System.Reflection namespace contains types that read metadata to retrieve information about assemblies, modules, members and more.

These types are mostly used to inspect and manipulate instances of loaded types, and together with System.Reflection.Emit they also let you create types dynamically in a simple way.

With this update, the overhead of invoking a member using reflection has been reduced considerably in .NET 7. In numbers, according to the latest benchmark provided by Microsoft (made with the BenchmarkDotNet package), invocation can be up to 3–4x faster.

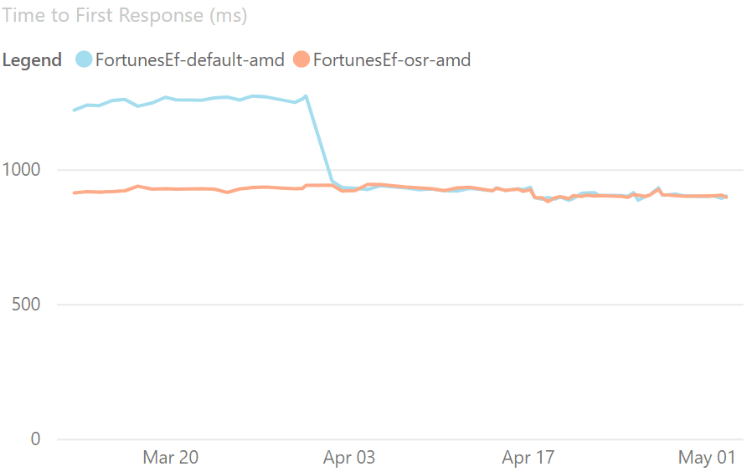
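To make “invoking a member using reflection” concrete, here is a rough C# sketch that calls Math.Abs(int) directly and then through MethodInfo.Invoke. It uses a plain Stopwatch rather than BenchmarkDotNet, with no warm-up or statistical analysis, so the timings are only indicative. The iteration count is an arbitrary choice, and the 3–4x figure above comes from Microsoft's benchmark, not from this code.

```csharp
using System;
using System.Diagnostics;
using System.Reflection;

// Look up Math.Abs(int) through reflection metadata instead of binding to it at compile time.
MethodInfo absMethod = typeof(Math).GetMethod(nameof(Math.Abs), new[] { typeof(int) })!;

const int Iterations = 1_000_000; // arbitrary, for illustration only
object[] invokeArgs = { 0 };

// Baseline: a direct, statically bound call.
var sw = Stopwatch.StartNew();
long directTotal = 0;
for (int i = 0; i < Iterations; i++)
    directTotal += Math.Abs(-i);
sw.Stop();
Console.WriteLine($"Direct:     {sw.ElapsedMilliseconds} ms (checksum {directTotal})");

// Reflection: each call goes through MethodInfo.Invoke, which boxes the argument
// and the return value. This per-call overhead is what .NET 7 reduced.
sw.Restart();
long reflectedTotal = 0;
for (int i = 0; i < Iterations; i++)
{
    invokeArgs[0] = -i;
    reflectedTotal += (int)absMethod.Invoke(null, invokeArgs)!;
}
sw.Stop();
Console.WriteLine($"Reflection: {sw.ElapsedMilliseconds} ms (checksum {reflectedTotal})");
```

Running the same program on .NET 6 and .NET 7 is a quick way to see the difference, although a BenchmarkDotNet project is the better tool for numbers you want to publish.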

On Stack Replacement (OSR) - 30% Faster

OSR (On Stack Replacement) is a great complement to tiered compilation. It allows the runtime, in the middle of a method's execution, to switch from the code currently running to a more optimized version of that same method.

“OSR allows long-running methods to switch to more optimized versions mid-execution, so the runtime can JIT all methods quickly at first and then transition to more optimized versions when those methods are called frequently (via tiered compilation) or have long-running loops (via OSR).”
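As an illustration of the kind of method OSR targets, here is a small C# sketch: a method that is called only once but spends a long time in a loop. We assume .NET 7 on x64 or Arm64, where such a method starts in quickly jitted tier-0 code and OSR can move it to optimized code while the loop is still running. The name SumUpTo and the loop bound are made up for the example, and nothing in the code forces OSR to happen; that decision belongs to the runtime.

```csharp
using System;
using System.Diagnostics;

// Called only once, so call counting alone would never promote it to
// optimized (tier-1) code. OSR can instead switch to optimized code
// while the loop below is still running.
static long SumUpTo(long n)
{
    long total = 0;
    for (long i = 0; i < n; i++)
        total += i; // hot loop: the kind of code OSR is aimed at
    return total;
}

var sw = Stopwatch.StartNew();
long result = SumUpTo(500_000_000);
sw.Stop();
Console.WriteLine($"Sum = {result}, elapsed = {sw.ElapsedMilliseconds} ms");

// Assumed experiment for comparison: run with DOTNET_TC_QuickJitForLoops=0 so that
// methods with loops skip tier 0 (and therefore OSR) and are optimized up front.
```

Comparing elapsed time and time-to-first-output with and without that setting is one informal way to observe the start-up trade-off the quote describes.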

With OSR, we can get up to 25% extra speed at start-up (Avalonia IL Spy test), and in the TechEmpower benchmarks, improvements range from 10% to 30%.

Performance Impact (Source: Microsoft)

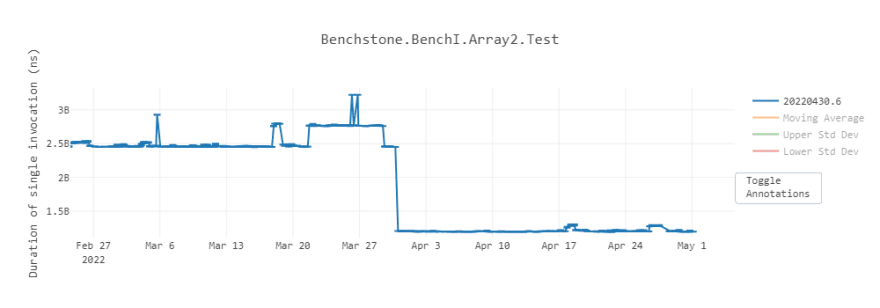

Another advantage of OSR is that applications (especially with dynamic PGO) gain performance thanks to better optimization. One of the biggest improvements was seen in the Array2 microbenchmark:

Array2 microbenchmark performance (Source: Microsoft)

📚 If you want to know more about how OSR works, please refer to: OSR Doc

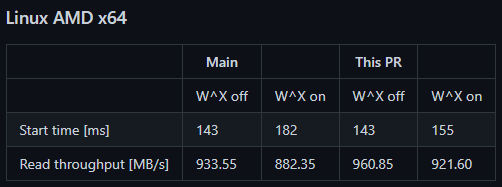
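The link with dynamic PGO can be sketched the same way. As we understand it, because methods with loops now start in tier-0 code thanks to OSR, they can also be instrumented, and the collected profile can then guide optimizations such as guarded devirtualization. The sketch below assumes .NET 7, where dynamic PGO is opt-in (DOTNET_TieredPGO=1, or <TieredPGO>true</TieredPGO> in the project file). The Sum method and the workload sizes are hypothetical, and whether the JIT actually devirtualizes these particular calls is up to the runtime.

```csharp
using System;
using System.Collections.Generic;

// Assumption: .NET 7, with dynamic PGO enabled via DOTNET_TieredPGO=1
// or <TieredPGO>true</TieredPGO> in the .csproj.

// xs.Count and xs[i] are interface calls through IList<int>. With dynamic PGO,
// tier-0 code records which concrete type actually shows up here. If it is
// always List<int>, optimized code can test for that type and call the
// List<int> members directly (guarded devirtualization), keeping the
// interface call only as a fallback.
static long Sum(IList<int> xs)
{
    long total = 0;
    for (int i = 0; i < xs.Count; i++)
        total += xs[i];
    return total;
}

var numbers = new List<int>();
for (int i = 0; i < 1_000; i++)
    numbers.Add(i);

// Call Sum many times so the runtime has a reason to re-optimize it.
long grandTotal = 0;
for (int round = 0; round < 100_000; round++)
    grandTotal += Sum(numbers);

Console.WriteLine(grandTotal);
```

Running it once with DOTNET_TieredPGO=1 and once without is a simple, informal way to compare; the result is the same either way, only the generated code can differ.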

Reduced Start-up Time (Write-Xor-Execute) - 15% Faster

As we saw at the beginning, Microsoft has decided to focus primarily on performance improvements, and this one is no exception. With “Reimplement stubs to improve performance”, startup time has improved by up to 10–15%, according to Microsoft.

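This is mainly due to a large reduction in the number of code modifications made after the code is created at runtime. That matters because, with W^X enabled, a memory page holding code is never writable and executable at the same time, so each such modification is costly.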

Let's check the benchmarks:

Native AOT Improvements - 75% Faster

Native AOT (Ahead-of-Time) is another of the new improvements that Microsoft brings in .NET 7. Native AOT can be considered an improved version of ReadyToRun (R2R).

To explain it quickly, AOT on its own is the technology responsible for generating code at compile time (not at run time). R2R, on the other hand, is basically the same idea, but focused and specialized on scenarios where the main architecture is client-server. There is also Mono AOT (the same idea, but for mobile applications).

Native AOT, in turn, is the evolution of plain AOT. Building on the above, Native AOT is the Microsoft technology that generates code at compile time, and that code is native (hence the name “Native” AOT).

This native code generation at compile time has some advantages:

- Memory usage is limited

- Disk space usage is lower

- Startup time is reduced
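For a feel of what this means in practice, below is a minimal C# console app of the kind that compiles cleanly ahead of time (no reflection emit, no runtime code generation). The publishing steps in the comments assume the .NET 7 SDK plus the platform's native toolchain, and linux-x64 is only an example runtime identifier. The Fibonacci function is a hypothetical stand-in for real work. RuntimeFeature.IsDynamicCodeSupported should report False in the Native AOT build and True when the same code runs on the regular JIT-based runtime.

```csharp
using System;
using System.Runtime.CompilerServices;

// Assumed setup: add <PublishAot>true</PublishAot> to the .csproj, then run
//   dotnet publish -c Release -r linux-x64
// (replace linux-x64 with your runtime identifier, e.g. win-x64).

// With Native AOT, all code is compiled ahead of time and the app cannot
// generate new code at runtime, so this flag is expected to be false there.
Console.WriteLine($"Dynamic code supported: {RuntimeFeature.IsDynamicCodeSupported}");

// Hypothetical workload: plain computation, no reflection, fully AOT-friendly.
static int Fibonacci(int n)
{
    int a = 0, b = 1;
    for (int i = 0; i < n; i++)
        (a, b) = (b, a + b);
    return a;
}

Console.WriteLine($"Fibonacci(30) = {Fibonacci(30)}");
```

Comparing the published native executable with a regular `dotnet run` of the same project is an easy way to see the startup and size differences listed above.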

Microsoft explains how Native AOT works:

“Applications start running the moment the operating system pages them into memory. The data structures are optimized for running AOT-generated code, not for compiling new code at runtime. This is similar to how languages like Go, Swift, and Rust compile. Native AOT is best suited for environments where startup time matters the most.”

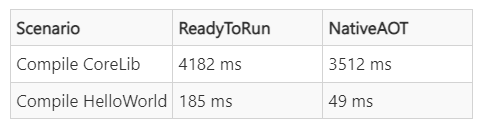

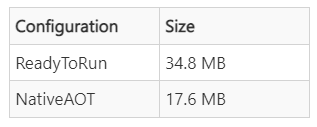

In addition, they have published a benchmark comparing Native AOT against ReadyToRun, in which the compile time is up to 73% faster and the output is almost half the size:

Loop Optimizations - 21% Faster

This improvement is not very spectacular, but it still manages to improve performance noticeably. This time Microsoft has decided to go ahead with removing the initialization condition for loop cloning variables:

According to Bruce Forstall in his PR:

“Assume that any pre-existing initialization is appropriate”

“Check condition against zero if necessary. Const inits remain as before”

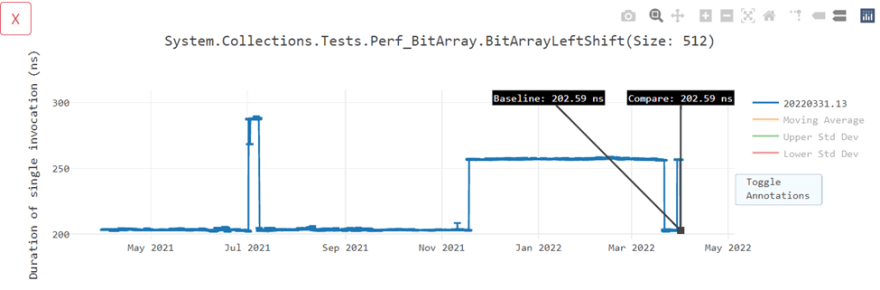
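Loop cloning means the JIT duplicates a loop: a fast copy guarded by one up-front check that proves every array access is in bounds, plus the original copy as a fallback. The hand-written C# sketch below imitates that transformation. The names SumRange and SumRangeCloned are hypothetical, and the fast copy uses MemoryMarshal.GetArrayDataReference and Unsafe.Add only to stand in for the bounds-check-free code the JIT would emit. Our reading of the PR summary is that a loop starting at a non-constant value like start needs the extra check against zero, while a constant start such as 0 does not.

```csharp
using System;
using System.Runtime.CompilerServices;
using System.Runtime.InteropServices;

// What you write: every a[i] carries an implicit bounds check.
static int SumRange(int[] a, int start, int end)
{
    int sum = 0;
    for (int i = start; i < end; i++)
        sum += a[i];
    return sum;
}

// Roughly what loop cloning produces, written out by hand for illustration.
static int SumRangeCloned(int[] a, int start, int end)
{
    int sum = 0;
    // Cloning condition, evaluated once before the loop. The loop starts at a
    // non-constant 'start', so it must be checked against zero; with i = 0
    // that part would not be needed.
    if (a is not null && start >= 0 && end <= a.Length)
    {
        // Fast clone: every index in [start, end) is known to be valid,
        // so no per-iteration bounds check (simulated with unchecked access).
        ref int first = ref MemoryMarshal.GetArrayDataReference(a);
        for (int i = start; i < end; i++)
            sum += Unsafe.Add(ref first, i);
    }
    else
    {
        // Slow clone: the original loop with its bounds checks, so an
        // invalid index still throws IndexOutOfRangeException.
        for (int i = start; i < end; i++)
            sum += a[i];
    }
    return sum;
}

int[] data = { 1, 2, 3, 4, 5 };
Console.WriteLine(SumRange(data, 1, 4));       // prints 9 (2 + 3 + 4)
Console.WriteLine(SumRangeCloned(data, 1, 4)); // prints 9
```

The point of the transformation is that the check runs once per loop instead of once per iteration, while the slow copy preserves the original behavior for out-of-range inputs.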

These improvements give a 21% performance boost in System.Collections.Tests.Perf_BitArrayLeftShift (Size: 512). Let's see the benchmark provided by Microsoft:
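The benchmark named above exercises BitArray.LeftShift on a 512-bit array. A rough C# approximation of that workload is sketched below; the iteration count and the Stopwatch timing are our own choices, not how Microsoft's benchmark is written, so this code will not reproduce the 21% figure on its own.

```csharp
using System;
using System.Collections;
using System.Diagnostics;

// A 512-bit BitArray, matching the Size: 512 parameter of the benchmark.
var bits = new BitArray(512);
bits[0] = true;

const int Iterations = 1_000_000; // arbitrary, for illustration only
var sw = Stopwatch.StartNew();
for (int i = 0; i < Iterations; i++)
    bits.LeftShift(1); // shifts in place; bits moved past index 511 are discarded
sw.Stop();

Console.WriteLine($"{Iterations:N0} shifts of a 512-bit BitArray: {sw.ElapsedMilliseconds} ms");
```

LeftShift runs a loop over the array's internal storage on every call, which is the kind of code the loop optimizations described above can speed up.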

It seems that we are looking at the next fastest .NET. What do you think? Do you think Microsoft will keep its focus on speed and performance? Or what would you like to see implemented in .NET 7?

From Dotnetsafer, we want to thank you for taking the time to read this article.

And remember that you can try our .NET obfuscator for free. You can also protect your applications directly from Visual Studio with the .NET Obfuscator Visual Studio Extension.