TL;DR: As strange as it may sound, seeing a few false positives reported by a security scanner is probably a good sign, and certainly better than seeing none. Let’s explain why.

Introduction

False positives have made a rather unexpected appearance in our lives in recent years. I am, of course, referring to the COVID-19 pandemic, which required massive testing campaigns to control the spread of the virus. For the record, a false positive is a result that appears positive (for COVID-19 in our case) when it is actually negative (the person is not infected). More generally, we speak of false alarms.

In computer security, we are also often confronted with false positives. Ask the security team behind any SIEM what their biggest operational challenge is, and chances are that false positives will be mentioned. A recent report estimates that as many as 20% of all the alerts received by security professionals are false positives, making them a major source of fatigue.

Yet the story behind false positives is not as simple as it might seem at first. In this article, we will argue that when evaluating an analysis tool, seeing a moderate rate of false positives is a rather good sign of efficiency.

What are we talking about exactly?

With static analysis in application security, our primary concern is to catch all the true vulnerabilities by analyzing source code.

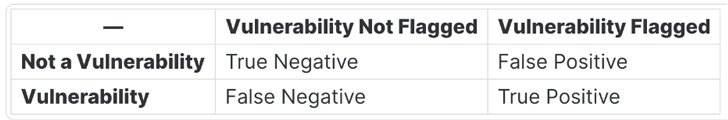

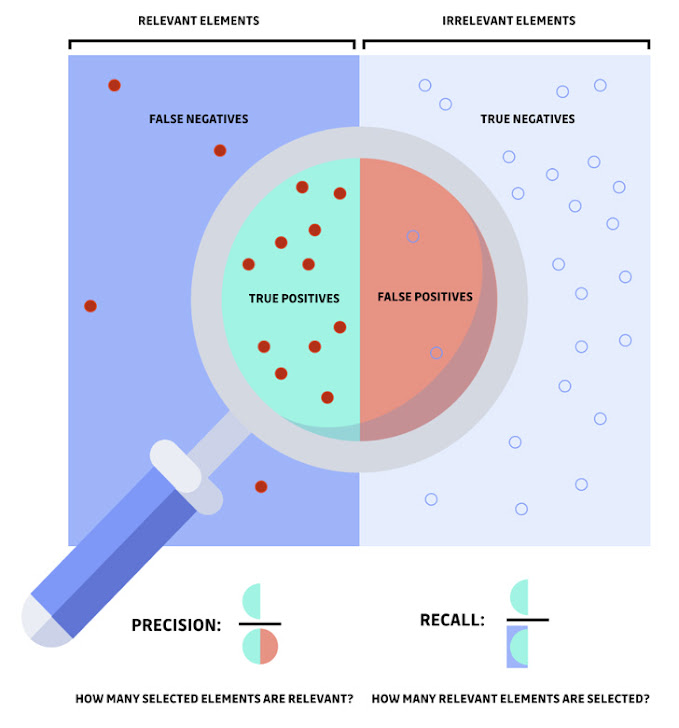

Here is a visualization to better grasp the distinction between two fundamental concepts of static analysis: precision and recall. The magnifying glass represents the sample that was identified or selected by the detection tool. You can learn more about how to assess the performance of a statistical process here.

Let’s see what that means from an engineering perspective:

- by reducing false positives, we improve precision (the vulnerabilities detected actually represent security issues).

- by reducing false negatives, we improve recall (the vulnerabilities present are correctly identified).

- at 100% recall, the detection tool would never miss a vulnerability.

- at 100% precision, the detection tool would never raise a false alert.
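To make these definitions concrete, here is a minimal Python sketch that treats the magnifying glass and the left rectangle of the visualization as two sets of findings. The finding identifiers are hypothetical, and returning 1.0 when a set is empty is a convention chosen for this sketch (precision is otherwise undefined when a tool raises no alert at all).

```python
def precision_recall(selected: set[str], relevant: set[str]) -> tuple[float, float]:
    """Compute precision and recall for one scan.

    selected: findings reported by the tool (the magnifying glass)
    relevant: real vulnerabilities in the code (the left rectangle)
    """
    true_positives = len(selected & relevant)
    # Convention for this sketch: no alerts means no false alerts.
    precision = true_positives / len(selected) if selected else 1.0
    # Convention for this sketch: nothing to find means nothing was missed.
    recall = true_positives / len(relevant) if relevant else 1.0
    return precision, recall


# Hypothetical findings, identified as "file:line".
relevant = {"app.py:12", "db.py:40", "auth.py:7", "api.py:88"}
selected = {"app.py:12", "db.py:40", "utils.py:3"}

p, r = precision_recall(selected, relevant)
print(f"precision={p:.2f} recall={r:.2f}")  # precision=0.67 recall=0.50
```

Here `utils.py:3` is a false positive (it lowers precision), while `auth.py:7` and `api.py:88` are false negatives (they lower recall).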

Put another way, a vulnerability scanner’s objective is to fit the circle (in the magnifying glass) as closely as possible to the left rectangle (the relevant elements).

The problem is that the two goals pull in opposite directions, which means trade-offs have to be made.

So, what is more desirable: maximizing precision or recall?

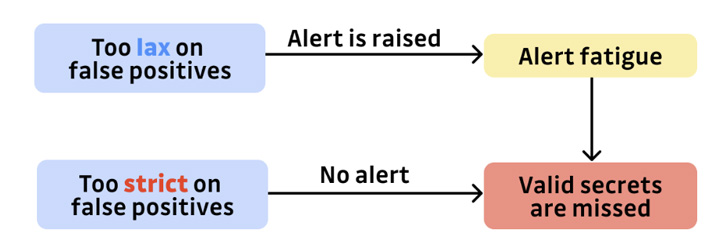

Which one is worse, too many false positives or too many false negatives?

To find out, let’s take both extremes: imagine that a detection tool only alerts its users when the probability that a given piece of code contains a vulnerability is greater than 99.999%. With such a high threshold, you can be almost certain that an alert is indeed a true positive. But how many security problems are going to go unnoticed because of the scanner’s selectiveness? A lot.

Now, conversely, what would happen if the tool were tuned to never miss a vulnerability (maximizing recall)? You guessed it: you would soon be faced with hundreds or even thousands of false alerts. And therein lies a greater danger.
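Both extremes can be reproduced with a toy model. The sketch below assumes, hypothetically, that the tool assigns each candidate finding a confidence score and only alerts when the score reaches a threshold; the scores and labels are invented for illustration and are not measurements of any real scanner.

```python
# Hypothetical candidates: (confidence score, is it really a vulnerability?)
CANDIDATES = [
    (0.99, True), (0.95, True), (0.90, True), (0.80, False), (0.60, True),
    (0.55, False), (0.30, False), (0.20, True), (0.10, False),
]


def evaluate(threshold: float) -> tuple[float, float]:
    """Return (precision, recall) when alerting on scores >= threshold."""
    alerts = [is_real for score, is_real in CANDIDATES if score >= threshold]
    true_positives = sum(alerts)  # True counts as 1
    total_real = sum(is_real for _, is_real in CANDIDATES)
    # Convention for this sketch: with no alerts at all, precision is reported as 1.0.
    precision = true_positives / len(alerts) if alerts else 1.0
    recall = true_positives / total_real
    return precision, recall


for threshold in (0.99999, 0.9, 0.5, 0.0):
    p, r = evaluate(threshold)
    print(f"threshold={threshold}: precision={p:.2f} recall={r:.2f}")
```

With these made-up numbers, the 99.999% threshold raises no alert and catches nothing, while the 0.0 threshold catches every real vulnerability at the cost of four false alerts out of nine. Real tools rarely expose a single knob like this, but the direction of the trade-off is the same.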

As Aesop warned us in his fable The Boy Who Cried Wolf, anyone who keeps repeating false claims will end up not being listened to. In our modern world, that disbelief materializes as a simple click to deactivate security notifications and restore peace, or as simply ignoring them if deactivation is not allowed. But the consequences could be at least as dramatic as they are in the fable.

It is fair to say that alert fatigue is probably the main reason static analysis fails so often. Not only can false alarms cause entire application security programs to fail, but they also cause much more serious damage, such as burnout and staff turnover.

And yet, despite all the evils attributed to them, you would be mistaken to think that a tool that reports no false positives must be the definitive answer to this problem.

How to learn to accept false positives

To accept false positives, we have to go against the basic instinct that often pushes us toward hasty conclusions. Another thought experiment can help illustrate this.

Imagine that you are tasked with evaluating the performance of two security scanners, A and B.

After running both tools on your benchmark, you get the following results: scanner A detected only valid vulnerabilities, while scanner B reported both valid and invalid ones. At this point, who wouldn’t be tempted to draw a hasty conclusion? It takes a wise observer to ask for more data before deciding. That data would probably reveal that some valid vulnerabilities reported by B were silently missed by A.
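Asking for more data amounts to scoring each scanner against a ground truth. Here is a minimal sketch with an entirely hypothetical benchmark (the identifiers and results are invented for illustration): scanner A never raises a false alert, yet the comparison exposes what it silently skipped.

```python
# Hypothetical benchmark: the vulnerabilities actually present in the test corpus.
GROUND_TRUTH = {"V1", "V2", "V3", "V4", "V5", "V6"}

# Hypothetical scanner outputs; "X1" is not in the ground truth (a false positive).
SCANNER_A = {"V1", "V2"}
SCANNER_B = {"V1", "V2", "V3", "V4", "V5", "X1"}


def score(findings: set[str]) -> tuple[float, float]:
    """Return (precision, recall) of a scanner's findings against the ground truth."""
    true_positives = len(findings & GROUND_TRUTH)
    precision = true_positives / len(findings) if findings else 1.0
    recall = true_positives / len(GROUND_TRUTH)
    return precision, recall


for name, findings in (("A", SCANNER_A), ("B", SCANNER_B)):
    p, r = score(findings)
    print(f"scanner {name}: precision={p:.2f} recall={r:.2f}")

# Valid vulnerabilities that B reported but A silently skipped.
skipped_by_a = (SCANNER_B & GROUND_TRUTH) - SCANNER_A
print(sorted(skipped_by_a))  # ['V3', 'V4', 'V5']
```

Looking only at false positives, A wins; looking at the full picture, A missed two thirds of the real problems in this made-up benchmark.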

You can now see the basic idea behind this article: any tool, process, or company claiming to be completely free from false positives should sound suspicious. If that were truly the case, chances are very high that some relevant elements were silently skipped.

Finding the balance between precision and recall is a subtle matter that requires a lot of tuning effort (you can read how GitGuardian engineers are improving the model precision). Moreover, it is completely normal for this tuning to fail occasionally. That is why you should be more worried about seeing no false positives than about seeing a few.
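One common way to put a single number on that balance, borrowed from statistics rather than prescribed by this article, is the F-score: a weighted harmonic mean of precision and recall, where the `beta` parameter states how much more recall matters than precision. A minimal sketch, with made-up inputs:

```python
def f_beta(precision: float, recall: float, beta: float = 1.0) -> float:
    """Weighted harmonic mean of precision and recall.

    beta > 1 weighs recall more heavily, beta < 1 favors precision,
    and beta = 1 gives the usual F1 score.
    """
    if precision == 0 and recall == 0:
        return 0.0  # avoid division by zero when the tool found nothing useful
    b2 = beta ** 2
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Made-up example: a tool that is never wrong but misses 75% of the issues,
# versus a broader tool where one alert in five is false.
timid = (1.0, 0.25)
broad = (0.8, 0.8)

for beta in (1.0, 2.0):
    print(f"beta={beta}: timid={f_beta(*timid, beta=beta):.2f} "
          f"broad={f_beta(*broad, beta=beta):.2f}")
```

Under both weightings, the broader tool scores higher despite its false positives; choosing `beta` is one explicit way of writing down how much a missed vulnerability costs compared with a false alert.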

But there is also another reason why false positives might in fact be an interesting signal: security is never “all white or all black”. There is always a margin where “we don’t know”, and where human scrutiny and triage become essential.

“Due to the nature of the software we write, sometimes we get false positives. When that happens, our developers can fill out a form and say, ‘Hey, this is a false positive. This is part of a test case. You can ignore this.’” (Source)

This points to a deeper truth: there will always be a gray zone that only human judgment can resolve. In other words, it is not just about raw numbers; it is also about how they will be used. False positives are useful from that perspective: they help improve the tools and refine the algorithms so that context is better understood and taken into account. But as with an asymptote, absolute zero can never be reached.

There is one necessary condition to turn what seems like a curse into a virtuous circle, though: you have to make sure that end users can flag false positives and feed them back into the detection algorithm as easily as possible. One of the most common ways to achieve that is simply to offer the possibility to exclude files, directories, or repositories from the scanned perimeter.
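Excluding parts of the scanned perimeter can be as simple as matching file paths against a list of patterns before scanning. The sketch below is hypothetical: the patterns and function names are illustrative and are not the configuration format of any particular tool.

```python
from fnmatch import fnmatchcase

# Hypothetical patterns a team might add after triaging recurring false positives.
EXCLUDED_PATTERNS = ("tests/*", "docs/*", "*.example")


def in_perimeter(path: str, excluded: tuple[str, ...] = EXCLUDED_PATTERNS) -> bool:
    """Return True if the path should still be scanned."""
    # fnmatchcase is case-sensitive on every platform; note that '*' also matches '/'.
    return not any(fnmatchcase(path, pattern) for pattern in excluded)


def files_to_scan(paths: list[str]) -> list[str]:
    """Keep only the paths that fall inside the scanned perimeter."""
    return [path for path in paths if in_perimeter(path)]


repo_files = ["src/app.py", "tests/test_app.py", "docs/setup.md", "config/.env.example"]
print(files_to_scan(repo_files))  # ['src/app.py']
```

Coarse exclusions like these trade recall for precision: anything inside an excluded path, including a real vulnerability, is no longer reported, so the list deserves the same review as any other security setting.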

At GitGuardian, we specialize in secrets detection. We pushed the idea further by enriching every finding with as much context as possible, which leads to much faster feedback cycles and saves as much work as possible.

If a developer tries to commit a secret while the client-side ggshield is installed as a pre-commit hook, the commit will be blocked unless the developer flags the secret as one to ignore. From then on, the secret is considered a false positive and will no longer trigger an alert, but only on that developer’s local workstation. Only a security team member with access to the GitGuardian dashboard can flag a false positive for the entire team (global ignore).
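The difference between a local ignore and a global ignore can be modeled with two lists consulted by a pre-commit check. This is a hypothetical model written for illustration, not ggshield’s actual implementation or storage format; identifying secrets by a SHA-256 hash is an assumption made so that the sketch never stores them in clear text.

```python
import hashlib
from dataclasses import dataclass, field


def fingerprint(secret: str) -> str:
    # Hypothetical: refer to a secret by its hash rather than its value.
    return hashlib.sha256(secret.encode()).hexdigest()


@dataclass
class Workstation:
    team_ignores: set[str]  # shared across the team; only the security team edits it
    local_ignores: set[str] = field(default_factory=set)  # this developer's own ignores

    def ignore_locally(self, secret: str) -> None:
        self.local_ignores.add(fingerprint(secret))

    def pre_commit_allows(self, detected: list[str]) -> bool:
        """Return True if the commit may proceed (every detected secret is ignored)."""
        ignored = self.team_ignores | self.local_ignores
        return all(fingerprint(secret) in ignored for secret in detected)


team_ignores: set[str] = set()
alice, bob = Workstation(team_ignores), Workstation(team_ignores)

alice.ignore_locally("test-fixture-token")
print(alice.pre_commit_allows(["test-fixture-token"]))  # True: ignored on Alice's machine
print(bob.pre_commit_allows(["test-fixture-token"]))    # False: Bob is still blocked

team_ignores.add(fingerprint("test-fixture-token"))     # global ignore by the security team
print(bob.pre_commit_allows(["test-fixture-token"]))    # True
```

Keeping the shared list editable only by the security team is what prevents one developer’s local judgment call from silencing an alert for everyone.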

When a leaked secret is reported, we provide tools to help the security team dispatch it quickly. For example, the auto-healing playbook automatically sends an email to the developer who committed the secret. Depending on the playbook configuration, developers can be allowed to resolve or ignore the incident themselves, reducing the amount of work left to the security team.

These are just a few examples of how we learned to tailor the detection and remediation processes around false positives, rather than obsessing over eliminating them. In statistics, the result of this obsession even has a name: overfitting, which means that a model is too closely tailored to a particular set of data. Lacking exposure to real-world inputs, such a model would not be useful in a production setting.

Conclusion

False positives cause alert fatigue and derail security programs so often that they are now widely considered pure evil. It is true that when considering a detection tool, you want the best precision possible, and too many false positives can cause more problems than not using any tool in the first place. That being said, never forget the recall rate.

At GitGuardian, we designed a large arsenal of generic detection filters to improve our secrets detection engine’s recall rate.

From a purely statistical perspective, having a low (but nonzero) rate of false positives is a rather good sign: it suggests the net is cast wide enough that few defects slip through.

When kept under control, false positives are not that bad. They can even work to your advantage, since they indicate where improvements can be made, on both the analysis side and the remediation side.

Understanding why something was considered “valid” by the system, and having a way to adapt to it, is key to improving your application security. We are also convinced it is one of the areas where collaboration between security and development teams really shines.

As a final note, remember: if a detection tool does not report any false positives, run. You are in for big trouble.

Note: This article was written and contributed by Thomas Segura, technical content writer at GitGuardian.