Studying and inference unified right into a steady, asynchronous, and parallel course of

On this publish, I current the framework for inference and studying in a ahead cross, known as the Sign Propagation framework. It is a framework for utilizing solely ahead passes to be taught any sort of knowledge and on any sort of community. I show it really works properly for discrete networks, steady networks, and spiking networks, all with out modification to the community structure. In different phrases, the model of community used for inference is identical because the model used for studying. In distinction, backpropagation and former works have extra construction and algorithm components for the coaching model of the community than for the inference model of the community, that are known as studying constraints.

Sign Propagation is a least constrained technique for studying, and but has higher efficiency, effectivity, and compatibility than earlier options to backpropagation. It additionally has higher effectivity and compatibility than backpropagation. This framework is launched in https://arxiv.org/abs/2204.01723 (2022) by Adam Kohan, Ed Rietman, and Hava Siegelmann. The origin of ahead studying is in our work https://arxiv.org/abs/1808.03357 (2018).

This publish is a concise tutorial for studying in a ahead cross. By the top of the tutorial, you’ll perceive the idea, and know easy methods to apply this type of studying in your work. The tutorial offers explanations for freshmen, and detailed steps for specialists.

Desk of Contents

- Introduction

1.1. Earlier Approaches to Studying

1.2. A New Framework for Studying

1.3. The Drawback with Studying Constraints - The Two Parts of Studying

- Studying in a Ahead Go

3.1. The Strategy to Study

3.2. The Steps to Study

3.3. Overview of Full Process

3.4. Spiking Networks - Works on Ahead Studying

4.1 Error Ahead Propagation

4.2. Ahead Ahead - Studying Materials

- Appendix: Studying on Credit score Project

6.1. Spatial Credit score Project

6.2. Temporal Credit score Project

1.1. Earlier Approaches to Studying

Studying is the lively ingredient in making synthetic neural networks work. Backpropagation is acknowledged as the very best performing studying algorithm, powering the success of synthetic neural networks. Nonetheless, it’s a extremely constrained studying algorithm. And, it’s these constraints which are seen as obligatory for its excessive efficiency. It’s properly accepted that decreasing even a few of these constraints lowers efficiency. Nonetheless, as a result of these identical constraints, backpropagation has issues with effectivity and compatibility. It isn’t environment friendly with time, reminiscence, and power. It has low compatibility with organic fashions of studying, neuromorphic chips, and edge gadgets. So, one might imagine to deal with this drawback by decreasing completely different subsets of constraints in an try to extend effectivity and compatibility with out closely decreasing efficiency.

For instance, two constraints of backpropagation on the coaching community are: (1) the addition of suggestions weights which are symmetric with the feedforward weights; and (2) the requirement of getting these suggestions weights for each neuron. The inference community by no means makes use of the suggestions weights, that’s the reason we consult with them as studying constraints. Subsets of those constraints embrace: not including any suggestions weights, solely including suggestions weights for one or two layers in a 5 layer community, not having the suggestions weights be symmetric, or any mixture of those. This implies constraints will be added or eliminated partly or totally to type subsets of constraints to cut back. One could preserve attempting to cut back completely different subsets of those constraints, in an try to extend effectivity and compatibility, and hope to not closely influence efficiency.

Earlier various studying algorithms to backpropagation have tried enjoyable constraints, with out success. They scale back subsets of constraints on studying to enhance effectivity and compatibility. They preserve different constraints, with the expectation of retaining efficiency much like the efficiency discovered by protecting all of the constraints (which is backpropagation). So, this suggests there’s a spectrum for studying constraints, from extremely constrained, reminiscent of backpropagation, to no constraints, reminiscent of Sign Propagation, the framework I’m introducing right here.

1.2. A New Framework for Studying

Now, I show a shift away from earlier works. The outcomes introduced right here present help that the least constrained studying technique, Sign Propagation, has higher efficiency, effectivity, and compatibility than options to backpropagation that selectively scale back constraints on studying. This contains properly established and extremely impactful strategies reminiscent of random suggestions alignment, direct suggestions alignment, and native studying (all with out backpropagation). It is a fascinating perception into studying throughout fields from neuroscience to pc science. It advantages areas from organic studying (e.g. within the mind) to synthetic studying (e.g. in neural networks, {hardware}, neuromorphic chips).

Sign Propagation additionally considerably informs the route of future analysis in studying algorithms the place backpropagation is the usual of comparability. On the spectrum of studying constraints, opposite to the extremely constrained backpropagation, Sign Propagation is the least constrained technique to check with and to start out from for creating studying algorithms. With solely backpropagation as a finest performing comparability, studying algorithms didn’t have a place to begin, solely an finish purpose. Now, I’m introducing Sign Propagation as the brand new baseline for studying algorithms to evaluate their effectivity, compatibility, and efficiency.

1.3. The Drawback with Studying Constraints

What are the constraints discovered below backpropagation?

Why are they a problem?

Studying constraints below backpropagation are troublesome to reconcile with studying within the mind. Beneath, I present the primary constraints:

- A whole ahead cross by way of the community is required earlier than sequentially delivering suggestions in reverse order throughout a backward cross.

- The coaching community wants the addition of complete suggestions connectivity for each neuron.

- There are two completely different computations for studying and for inference. In different phrases, the suggestions algorithm is a definite kind of computation, separate from feedforward exercise.

- The suggestions weights should be symmetric with the feedforward weights.

These constraints additionally hinder environment friendly implementations of studying algorithms on {hardware} for the next causes:

- weight symmetry is incompatible with elementary computing items which aren’t bidirectional.

- transportation of non native weight and error info requires particular communication channels.

These studying constraints prohibit parallelization of computations throughout studying, and enhance reminiscence and compute for the next causes:

- The ahead cross wants to finish earlier than the backward cross can start (Time, Sequential)

- Activations of hidden layers should be saved in the course of the ahead cross for the backward cross (Reminiscence)

- Backward cross requires particular suggestions connectivity (Construction)

- Parameters are up to date in reverse order of the ahead cross (Time, Synchronous)

How does studying operate in neural networks?

The quick reply: Spatial and Temporal Credit score Project

There are two major types of knowledge: particular person inputs, and a number of linked inputs that are sequentially or temporally linked. A picture of a canine is a person enter because the community makes a prediction primarily based solely on that picture. On this case, the community is given a single picture to foretell if the picture is of a canine or turtle.

A video of a turtle strolling is a number of linked inputs as movies are made up of a number of photos, and the community makes a prediction after seeing all of those photos. On this case, the community is given a number of photos to foretell if the turtle is strolling or hiding.

Backpropagation (BP) is used for particular person inputs; Backpropagation By means of Time (BPT) is used for a number of linked inputs.

BP offers studying for:

- Each neuron (spatial credit score project)

BPT offers studying for:

- Each neuron (spatial credit score project)

- A number of linked inputs (temporal credit score project)

Offering studying for each neuron is named the spatial credit score project drawback. Spatial credit score project refers back to the placement of neurons within the community, reminiscent of organized into layers of neurons. For instance, in a 5 layer community, the backpropagation studying sign travels from the fifth layer sequentially all the way in which right down to the primary layer of neurons. In part 3, I’ll present how the sign propagation studying sign travels from the primary layer to the fifth layer, the identical as inference.

Offering studying for a number of linked inputs is named the temporal credit score project drawback. Temporal credit score project refers to shifting by way of the a number of linked inputs. For instance, every picture within the video is fed into the community, producing a brand new response from the identical neurons. Every neuron response is particular to every of the photographs/inputs. So, the backpropagation studying sign travels by way of every of those neuron responses, ranging from the neuron response for the final picture within the video to the neuron response for the primary picture. In part 3, it’ll develop into clear that the sign propagation studying sign travels from the neuron response to the primary picture to the neuron response for the final picture, the identical as inference.

Word, the inside drawback of temporal credit score project is spatial credit score project. Temporal credit score project takes the training sign by way of every of the photographs making up the video. For every picture, spatial credit score project takes the training sign to every neuron. Sign propagation gracefully addresses the outer drawback by addressing the inside drawback — a ahead cross, by building of the inference community, traverses by way of each issues.

BP does spatial credit score project. BPT extends BP to do each spatial and temporal credit score project. (Confer with Part 6 for an entire studying on spatial and temporal credit score project.)

The Sign Propagation Framework (SP)

I current right here, the Framework for Studying and Inference in a Ahead Go, known as Sign Propagation (SP). It’s a satisfyingly easy answer to temporal and spatial credit score project. SP is a least constrained technique for studying, and but has higher efficiency, effectivity, and compatibility than earlier options to backpropagation. It additionally has higher effectivity and compatibility than backpropagation. SP offers an inexpensive efficiency tradeoff for effectivity and compatibility. That is notably interesting, contemplating it’s compatibility for goal primarily based deep studying (e.g. supervised and reinforcement) with new {hardware} and long-standing organic fashions, whereas earlier works will not be. (Normally, backpropagation is the very best performing algorithm.)

SP is freed from constraints for studying to happen, with:

- solely a ahead cross, no backward cross

- no suggestions connectivity or symmetric weights

- just one kind of computation for studying and inference.

- a studying sign which travels with the enter within the ahead cross

- updates to parameters as soon as the neuron/layer is reached by the ahead cross

An attention-grabbing perception, SP offers a proof for a way neurons within the mind with out error suggestions connections obtain world studying indicators.

Because of this, Sign Propagation is:

- Suitable with fashions of studying within the mind and in {hardware}.

- Extra environment friendly in studying, with decrease time and reminiscence, and no extra construction.

- A low complexity algorithm for studying.

3.1. The way to Study in a Ahead Go?

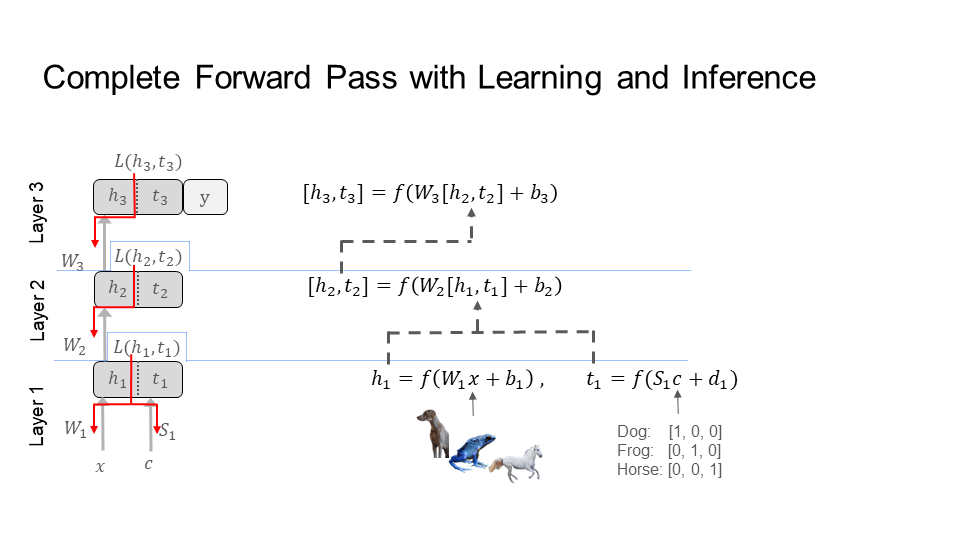

Sign Propagation treats the goal as a further enter (determine under). With this method, SP feeds the goal ahead by way of the community, as if it had been an enter.

SP strikes ahead by way of the community (determine under), bringing the goal and the enter nearer and nearer collectively, ranging from the primary layer (prime left) all the way in which to the final layer (backside proper). Discover that by the final step/layer, the picture of the canine is near its goal [1,0,0], and the picture of the frog is near its goal [0, 1, 0]. Nonetheless, the picture and goal of the canine is much away from the frog. This operation takes place within the representational house of the neurons at every layer. As an illustration, the neurons at layer 1 take within the canine image (the enter x) and canine label (the goal c) and output activations h_1_dog and t_1_dog, respectively. The identical occurs for the frog producing h_1_frog and t_1_frog. On this activation house of the neurons, SP trains the community to carry an enter and its goal nearer collectively, however farther away from different inputs and their respective targets.

3.2. The Steps to Ahead Study

Beneath is the full image for an instance three layer community. Every layer has its personal loss operate, which is used to replace weights within the community. So, SP executes the loss operate and updates the weights as quickly because the goal and label attain a layer. Since SP feeds the goal and enter collectively (alternating), layer/neuron weights are up to date instantly. For spatial credit score project, SP updates weights with out ready for the enter to succeed in the final layer from the primary layer. For temporal credit score project, SP offers a studying sign for (every time step) every of the a number of linked inputs (e.g. photos in a video), with out ready for the final enter to be fed into the community.

Beneath, we’ll go step-by-step, layer by layer, doing studying and inference (i.e. producing a solution/prediction) in ahead passes. Word, within the information under, the goal and enter are batch concatenated right into a ahead cross, making it simpler to observe.

Step 1) Layer 1

Step 2) Layer 2

Step 3) Layer 3

Step 4) Prediction

On the output layer, there are three selections for outputting a prediction. The primary and second choices present extra flexibility and observe naturally from the process to coach utilizing a ahead cross. The primary choice is to take an h_3 for a category and examine it with every t_3 for each class. For instance, SP inputs the picture of a canine and will get h_3_dog , then inputs the labels for all of the lessons and will get t_3_i = { t_3_dog, t_3_frog, and t_3_horse}, lastly it compares h_3_dog with every of the t_3_i; the closes t_3_i is the proper class.

The second choice is an adaptive model of the primary choice. It’s adaptive since SP now not compares h_3_dog with each t_3_i, as a substitute finds a subset of closest t_3_i. For instance, we keep a tree the place t_3_frog is nearer within the tree to t_3_dog than t_3_horse. So, we first examine h_3_dog to t_3_frog, then to t_3_dog, and cease. We by no means examine with t_3_horse as it’s too distant and never in our subset of closest t_3_i.

The third choice: the classical and intuitive alternative is to coach a prediction output layer. This selection can be extra easy for regression and generative duties. For instance, a classification layer, which has has one output per class. So, layer 3 can be a classification layer. Word, that in inference t_3 shouldn’t be longer used. As well as, discover that t_3_i is equal to having a classification layer. To see this, merely concatenate t_3_i collectively to type the burden matrix of a classification (prediction) layer that’s taken with h_3 (e.g. h_3_dog, h_3_horse, …). Which means that this third choice is a particular case of the primary choice, and is usually a particular case of the second choice.

3.3. Overview of the Full Process

3.4. Spiking Networks

Spiking neural networks are much like organic neural networks. They’re utilized in fashions of studying within the mind. They’re additionally used for neuromorphic chips. There are two issues for studying in spiking neural networks. First, the training constraints below backpropagation are troublesome to reconcile with studying within the mind, and hinders environment friendly implementations of studying algorithms on {hardware} (mentioned above). Second, coaching spiking networks leads to the useless neuron drawback (see under).

A reference determine is supplied under. The neurons in these networks reply to inputs by both activating (spiking) to convey info to a different neuron or by doing nothing (top-left determine). Generally, these networks have an issue the place neurons by no means activate, which implies they by no means spike (bottom-left determine). Thereby, whatever the enter, the neurons response is to at all times do nothing. That is known as the useless neuron drawback.

The most well-liked method to resolve this drawback makes use of a surrogate operate to interchange the spiking conduct of the neurons. The community makes use of the surrogate solely throughout studying, when the training sign is shipped to the neurons. The surrogate operate (blue) offers a price for the neuron even when it doesn’t spike (top-right determine). So, the neuron learns even when it doesn’t spike to convey info to a different neuron (bottom-right determine). This helps cease the neuron from dying. Nonetheless, surrogates are troublesome to implement for studying in {hardware}, reminiscent of neuromorphic chips. Moreover, surrogates don’t match fashions of studying within the mind.

Sign Propagation offers two options which are suitable with fashions of studying within the mind and in {hardware}.

Beneath is a visualization of the training sign (coloured in pink) going by way of a spiking neuron (proven as S), previous the voltage or membrane potential (U), to replace the weights (W). Backpropagation, with the useless neuron drawback, is on the left. Backpropagation, with a surrogate operate (f), is second from the left. The training sign for backpropagation is world (L_G) and comes from the final layer of the community; the dotted bins are higher neurons/layers.

The opposite photos on the precise present the 2 options Sign Propagation (SP) offers. First, SP can use a surrogate as properly, however the studying sign doesn’t undergo the spiking equation (S). As a substitute, the training sign is earlier than the spiking equation (S), straight connected to the surrogate operate (f). Because of this, SP is extra suitable with studying within the mind, reminiscent of in a multi compartment mannequin of a organic neuron. Second, SP can be taught utilizing solely the voltage or membrane potential (U). On this case, the training sign is straight connected to U. This requires no surrogate or change to the neuron. Thereby, SP offers compatibility with studying in {hardware}.

A listing of works on ahead studying – utilizing the ahead cross for studying. Works are ordered by date.

A repository webpage is maintained for the neighborhood to doc Ahead Studying strategies, situated right here https://amassivek.github.io/sigprop . The code library is obtainable at https://github.com/amassivek/signalpropagation .

4.1. Error Ahead Propagation Algorithm (2018)

The error ahead propagation algorithm is an implementation of the sign propagation framework for studying and inference in a ahead cross (determine under). Beneath sign propagation, S is the rework of the context c, which for supervised studying is the goal.

In error ahead propagation, S is the projection of the error from the output to the entrance of the community, as proven within the determine under.

Error Ahead-Propagation: Reusing Feedforward Connections to Propagate Errors in Deep Studying

https://arxiv.org/abs/1808.03357

4.2. Ahead Ahead Algorithm (2022)

The ahead ahead algorithm is an implementation of the sign propagation framework for studying and inference in a ahead cross (determine under). Beneath sign propagation, S is the rework of the context c, which for supervised studying is the goal.

In ahead ahead, S is a concatenation of the goal c with the enter x, as proven within the determine under.

Ahead Ahead Algorithm

https://www.cs.toronto.edu/~hinton/FFA13.pdf

Sign Propagation: The Framework for Studying and Inference In a Ahead Go

https://arxiv.org/abs/2204.01723 (2022)

Ahead Ahead Algorithm

https://www.cs.toronto.edu/~hinton/FFA13.pdf (2022)

Error Ahead-Propagation: Reusing Feedforward Connections to Propagate Errors in Deep Studying

https://arxiv.org/abs/1808.03357 (2018)

5.1 Different Materials

A properly written information on spatial and temporal credit score project. I referenced it to assist write the “Appendix: Studying on Credit score Project”.

Coaching Spiking Neural Networks utilizing classes from deep studying

https://arxiv.org/abs/2109.12894 (2021)

A repository webpage is maintained for the neighborhood to doc Ahead Studying strategies, situated right here https://amassivek.github.io/sigprop .

The code library is obtainable at https://github.com/amassivek/signalpropagation.

With Because of: Alexandra Marmarinos for her enhancing work and steerage.

6.1. Spatial Credit score Project

Spatial Locality of Credit score Project is the query: How does the training sign attain each neuron?

On the left of the determine under, is a 3 layer community. Normally, studying takes place over two phases: the inference part and the training part. Within the first part, known as the inference part, the enter is fed by way of the community from the primary layer as much as the final layer. For the reason that enter is fed ahead by way of the community, the inference part takes place in the course of the “ahead cross” by way of the community. Within the second part, known as the training part, the training sign (coloured in pink) wants to succeed in each neuron on this community.

Totally different studying algorithms have completely different options to the training part. In backpropagation, the training sign goes backward by way of the community, so the training part takes place in the course of the “backward cross” by way of the community. As we’ll see with Sign Propagation, studying can happen in the course of the ahead cross as properly.

Broadly, there are two approaches to the training part. The primary method computes a worldwide studying sign (left center determine) after which sends this studying sign to each neuron. The second method computes a neighborhood studying sign (proper determine) at every neuron (or layer). The primary method has the issue of getting to coordinate sending this sign to each neuron in a exact means. That is pricey in time, reminiscence, and compatibility. The second method doesn’t encounter this drawback, however has worse efficiency.

6.2. Temporal Credit score Project

Temporal Locality of Credit score Project is the query: How does the worldwide studying sign attain a number of linked inputs (aka each time step)?

A single picture requires solely that the training sign attain each neuron. Nonetheless, a video is a collection of linked photos. So, now the training sign must journey by way of a number of linked inputs (aka time), ranging from the final picture within the video all the way in which to the primary picture within the video. This idea applies to any sequential or time collection knowledge. So, how does the worldwide studying sign attain each time step? There are two standard strategies to reply this query: Backpropagation by way of time, and ahead mode differentiation.

6.2.1. Backpropagation By means of Time (BPT)

The first reply to the query posed above follows, and takes place in two phases. First, enter all the photographs that make up the video, one after the other, into the community. That is the inference part the place the a number of linked inputs are despatched ahead by way of the community (a ahead cross). Second, go backwards from the final picture to the primary picture propagating the training sign. That is the training part the place the training sign goes backward (a backward cross) by way of the a number of linked inputs (aka time); thus the identify backpropagation by way of time.

Step 1: Inference

Within the determine under, BPT feeds every picture X[i] (e.g. of the turtle strolling), which makes up the video, by way of the community. BPT begins with the first picture X[0] (backside left of the primary determine), which is time step 1 (time is proven on the prime of the determine). Subsequent, BPT feeds in picture X[1], which is time step 2. Lastly, we finish with the final picture X[2] at time step 3 — this demonstration is for a really quick video, or gif. Each time BPT feeds a picture to the community discover that the center layer within the community connects every picture to the following picture by way of time.

Step 2: Studying Backward by way of time

BPT feeds the training sign, coloured in pink, backward by way of the photographs (time), making up the video of the turtle strolling. The training sign is fashioned from the loss operate (prime proper of determine). It travels in the wrong way of how we fed within the photos X[i]. First a gradient/replace is calculated for picture X[2] at time 3, then picture X[1] at time 2, and eventually picture X[0] at time 1. Because of this it’s known as backpropagation by way of time. Once more, discover that the center layer within the community connects the training sign from the final picture X[2] to the primary picture X[0].

6.2.2. Ahead Mode Differentiation (FMD)

Beneath FMD, the conduct of the inference (step 1) and studying (step 2) phases are comparable to one another. Because of this, FMD does step 1 (inference) and step 2 (studying) collectively (alternating). How? In step 2, FMD propagates the training sign ahead by way of the photographs (time), a lot the identical as inference does in step 1. So, the training sign now not must journey from the final picture X[3] within the video again to the primary X[0]. The outcome: FMD has a studying sign that begins with X[0], as a substitute of getting to attend for X[3].

Why FMD vs BPT? Above, I mentioned the training constraints below backpropagation and the issues it has with effectivity and compatibility. FMD makes an attempt to enhance effectivity. Significantly, BPT feeds the entire photos, making up the video, into the community earlier than studying. FMD doesn’t, so it’s extra environment friendly in time than BPT. Nonetheless, FMD is considerably extra pricey than BPT, notably in reminiscence and computation. Word that FMD addresses time. Nonetheless, it doesn’t assist with the training constraints on spatial credit score project discovered below backpropagation, which exist in FMD as properly.

All photos except in any other case famous are by the writer.