An End-to-End Pipeline including model building, hyperparameter tuning and deployment

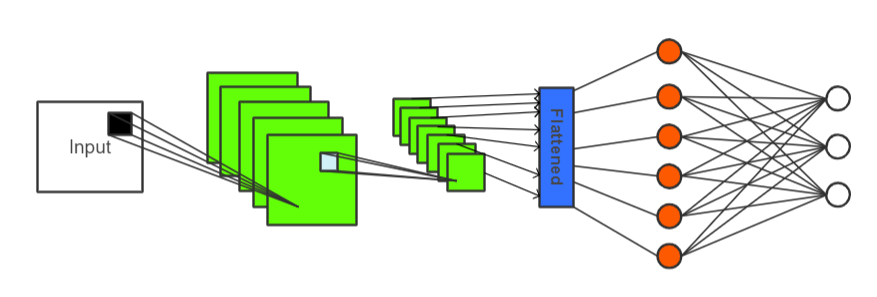

Try out the model that you’re going to build.

For more details about the code or models used in this article, refer to this GitHub Repo.

Okay! Now let’s dive into building the Convolutional Neural Network model that converts sign language to the English alphabet.

The Problem Definition

Converting sign language to text can be broken down into the task of predicting the English letter corresponding to the representation in the sign language.

Data

We’re going to use the Sign Language MNIST dataset on Kaggle, which is licensed under CC0: Public Domain.

Some facts about the dataset

- No cases for the letters J & Z (Reason: J & Z require motion)

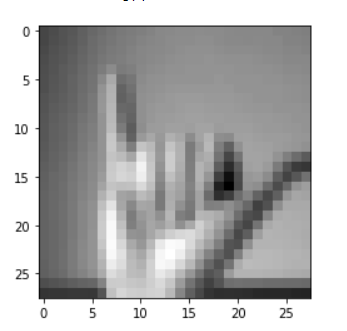

- Grayscale images

- Pixel values ranging from 0 to 255

- Each image contains 784 pixels

- Labels are numerically encoded, ranging from 0 to 25 for A to Z (9 and 25 never occur, since J and Z are excluded)

- The data comes with train and test sets, with each set containing the 784 pixel values along with the label representing each image
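To make the label encoding concrete, here is a minimal sketch in plain Python. It assumes labels follow alphabetical position (0 for A through 25 for Z), as described above; the helper name `label_to_letter` is hypothetical and not taken from the repository.

```python
import string

# Labels follow alphabetical positions: 0 -> A, 1 -> B, ..., 25 -> Z.
# J (9) and Z (25) have no samples because those signs require motion.
MISSING_LABELS = {9, 25}


def label_to_letter(label: int) -> str:
    """Map a numeric dataset label to its English letter."""
    if not 0 <= label <= 25:
        raise ValueError(f"label out of range: {label}")
    if label in MISSING_LABELS:
        raise ValueError(f"label {label} has no samples in the dataset")
    return string.ascii_uppercase[label]


# The 24 letters that actually appear in the data.
present = [label_to_letter(i) for i in range(26) if i not in MISSING_LABELS]
print(len(present), "".join(present))
```

Because two labels are missing, the classifier effectively has 24 output classes even though the label values span 0 to 25 (with 25 itself unused).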

Evaluation Metric

We’re going to use accuracy as the evaluation metric. Accuracy is the ratio of correctly classified samples to the total number of samples.
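As a quick illustration of the definition above, accuracy can be computed by hand in a few lines. This is a plain-Python sketch for intuition only; in practice the framework's built-in metric would be used.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Three of the four predictions match the true labels.
score = accuracy([0, 1, 2, 2], [0, 1, 1, 2])
print(score)
```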

Modelling

- Convolutional Neural Networks (CNNs) are the go-to choice for an image classification problem.

- A Convolutional Neural Network is an artificial neural network consisting of convolutional layers.

- CNNs work well with image data. The main thing that differentiates a convolutional layer from a dense layer is that in the former, every neuron connects only to a particular set of neurons in the previous layer.

- Each convolutional layer contains a set of filters (kernels), each producing a feature map, which help identify the different patterns in the image.

- Convolutional Neural Networks can find more complicated patterns as the image passes through the deeper layers.

- Another advantage of using a CNN, unlike a typical dense-layered ANN, is that once it learns a pattern in one location, it can identify the pattern at any other location.

Importing the required modules and packages

Preparing the data

Reading the CSV file (sign_mnist_train.csv) using pandas and shuffling the entire training data.

Separating the image pixels and labels allows us to apply feature-specific preprocessing techniques.

Normalization and Batching

Normalizing the input data is crucial, especially when using gradient descent, which is more likely to converge faster when the data is normalized.

Grouping training data into batches decreases the time required to train the model.
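The two steps above can be sketched in plain Python. This is an illustration under stated assumptions, not the article's actual preprocessing code: it assumes normalization means dividing by 255 and that each 784-value row is reshaped to 28×28; the helper names are hypothetical.

```python
def normalize(pixels):
    """Scale 0-255 pixel values into the 0.0-1.0 range."""
    return [p / 255.0 for p in pixels]


def to_image(pixels, side=28):
    """Reshape a flat list of side*side pixels into a list of rows."""
    if len(pixels) != side * side:
        raise ValueError(f"expected {side * side} pixels, got {len(pixels)}")
    return [pixels[r * side:(r + 1) * side] for r in range(side)]


def batches(samples, batch_size):
    """Yield consecutive groups of at most batch_size samples."""
    for start in range(0, len(samples), batch_size):
        yield samples[start:start + batch_size]


row = [0, 255] * 392                      # one fake 784-pixel row
image = to_image(normalize(row))          # 28 rows of 28 floats in [0, 1]
groups = list(batches(list(range(10)), batch_size=4))
print(len(image), len(image[0]), groups)
```

The last batch may be smaller than the others when the number of samples is not a multiple of the batch size.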

An image from the train data after applying the preprocessing

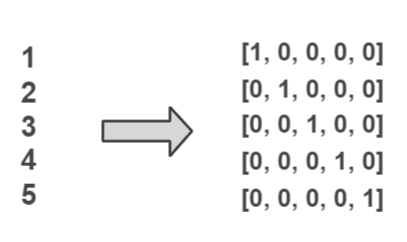

Binarizing the labels

The LabelBinarizer from the Scikit-Learn library binarizes the labels in a one-vs-all fashion and returns the one-hot encoded vectors.
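To show what one-vs-all binarization produces, here is a plain-Python stand-in. It is not the scikit-learn implementation, only a sketch of the same idea for the multiclass case; `fit_classes` and `one_hot` are hypothetical helper names.

```python
def fit_classes(labels):
    """Collect the distinct labels in sorted order."""
    return sorted(set(labels))


def one_hot(labels, classes):
    """Encode each label as a vector with a single 1 at its class index."""
    index = {c: i for i, c in enumerate(classes)}
    return [
        [1 if index[label] == i else 0 for i in range(len(classes))]
        for label in labels
    ]


labels = [3, 0, 24, 3]
classes = fit_classes(labels)      # [0, 3, 24]
encoded = one_hot(labels, classes)
print(encoded)
```

Note that the vector length equals the number of distinct labels seen, so on this dataset the encoded vectors have 24 entries rather than 26.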

Separating the Validation data

The validation data helps in choosing the best model. If we used the test data here, we would select a model that is too optimistic on the test data.

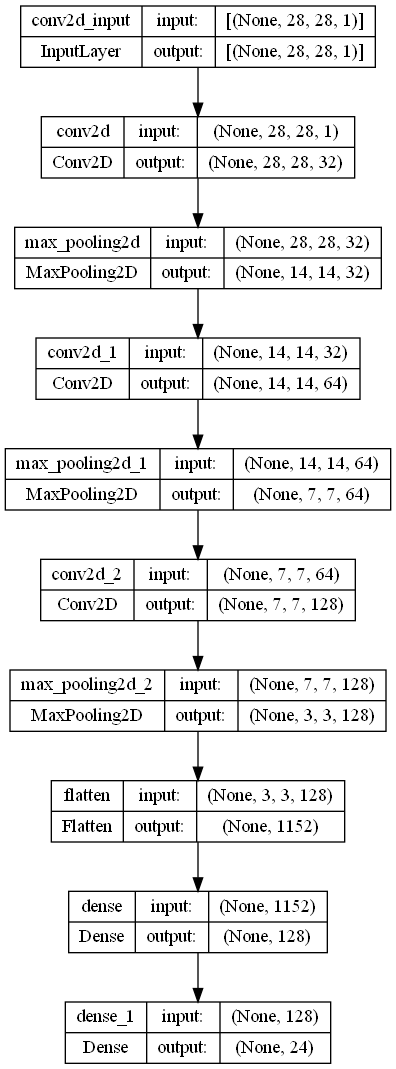
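A hold-out split like the one described can be sketched as follows. The 20% validation fraction and the fixed seed are assumptions for illustration; the article does not state which values were used.

```python
import random


def train_validation_split(samples, validation_fraction=0.2, seed=42):
    """Shuffle the samples and hold out a fraction for validation."""
    if not 0.0 < validation_fraction < 1.0:
        raise ValueError("validation_fraction must be between 0 and 1")
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)   # seeded for reproducibility
    n_val = round(len(shuffled) * validation_fraction)
    return shuffled[n_val:], shuffled[:n_val]


train, val = train_validation_split(range(100))
print(len(train), len(val))
```

The test set stays untouched until the final evaluation, so the reported test accuracy is not biased by model selection.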

Building the model

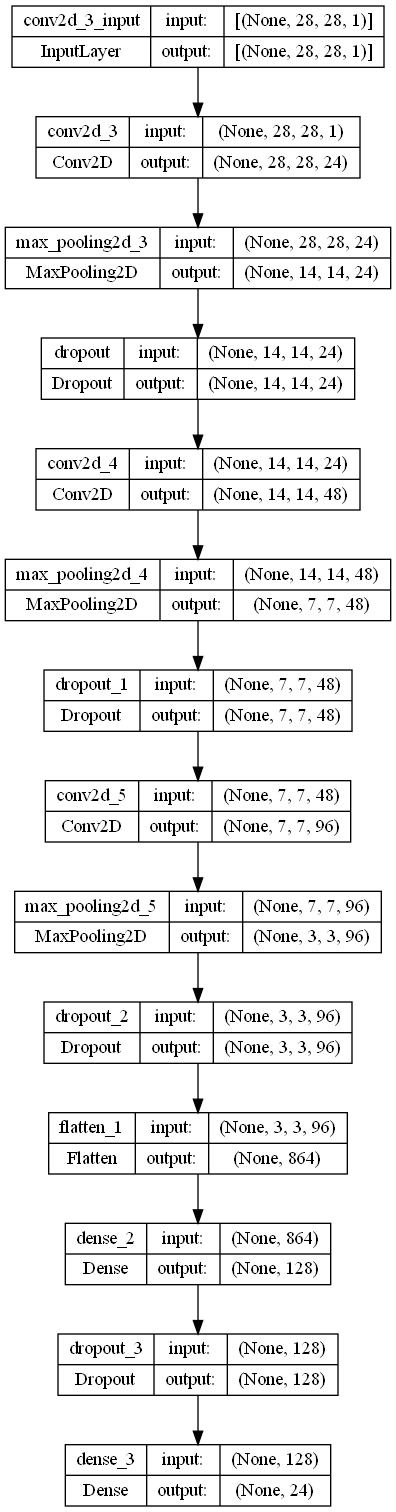

The CNN model can be defined as below

General choices while building the CNN are

- Choose a set of Convolutional-Pooling layers

- Increase the number of neurons in the deeper convolutional layers

- Add a set of dense layers after the Convolutional-Pooling layers

Now come the crucial decisions of model building

loss: Specifies the loss function that we are trying to minimize. As our labels are one-hot encoded, we can choose categorical cross-entropy as the loss function.

optimizer: This algorithm finds the best weights that minimize the loss function. Adam is one such algorithm which works well in most cases.
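For intuition about the loss, categorical cross-entropy for a single sample can be computed by hand. This is a simplified sketch; framework implementations add batching and numerical safeguards beyond the small `eps` clamp assumed here.

```python
import math


def categorical_cross_entropy(one_hot_label, predicted_probs, eps=1e-12):
    """Cross-entropy between a one-hot label and predicted probabilities."""
    return -sum(
        t * math.log(max(p, eps))           # clamp to avoid log(0)
        for t, p in zip(one_hot_label, predicted_probs)
    )


label = [0, 1, 0]
confident = categorical_cross_entropy(label, [0.05, 0.90, 0.05])
unsure = categorical_cross_entropy(label, [0.40, 0.30, 0.30])
print(confident, unsure)
```

With a one-hot label, only the probability assigned to the true class contributes, so the loss shrinks as the model becomes more confident in the correct letter.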

While building the model, we can also specify metrics to evaluate it; we chose accuracy.

Checkpoints

ModelCheckpoint: Saves the best model found during training by assessing the model’s performance on the validation data at each epoch.

EarlyStopping: Interrupts training when there is no progress for a specified number of epochs.
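The logic behind these two callbacks can be sketched without any framework. The function below replays a list of per-epoch validation losses and reports which epoch would have been checkpointed and where training would have stopped; the function name, the sample losses and the patience of 3 are assumptions for illustration.

```python
def train_with_callbacks(val_losses, patience=3):
    """Return (best_epoch, stopped_epoch), both 0-based.

    best_epoch mimics a save-best-only checkpoint; stopped_epoch mimics
    early stopping after `patience` epochs without improvement.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch   # "save" this model
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch          # stop training here
    return best_epoch, len(val_losses) - 1


best_epoch, stopped_epoch = train_with_callbacks(
    [0.9, 0.6, 0.5, 0.55, 0.52, 0.51, 0.4], patience=3
)
print(best_epoch, stopped_epoch)
```

In this run the lower loss at the final epoch is never reached, which shows the trade-off in choosing the patience value.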

Discovering patterns

Reviewing the model training

The History object contains the loss and specified metrics details obtained during the model’s training. This information can be used to obtain the learning curves and assess the training process and the model’s performance at each epoch.

For more details about the learning curves obtained during the training, refer to this jupyter notebook.

The best model

We retrieve the best model obtained during training, because the model obtained at the end of training need not be the best one.

Performance on the Test Set

Accuracy: 94%

You can find the code and the resulting models under hyperparameter tuning here.

When it comes to hyperparameter tuning, there is a plethora of choices we can tune in a given CNN. Some of the most essential and common hyperparameters that need to be tuned include

Number of Convolution and Max Pooling Pairs

This represents the number of Conv2D and MaxPooling2D pairs we stack together, building the CNN.

As we stack more pairs, the network gets deeper and deeper, increasing the model’s ability to identify complex image patterns.

But stacking too many layers would negatively impact the model’s performance (the feature map size shrinks rapidly deeper into the CNN) and also increase the training time as the number of trainable parameters of the model drastically increases.
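How quickly the spatial size shrinks can be checked with a little arithmetic. The sketch below assumes 3×3 convolutions without padding and 2×2 max pooling, which may differ from the settings used in the article's model.

```python
def conv_output_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def sizes_after_pairs(input_size, n_pairs, kernel=3, pool=2):
    """Track the feature-map side length after each conv + pool pair."""
    sizes = [input_size]
    size = input_size
    for _ in range(n_pairs):
        if size < kernel:
            raise ValueError(f"feature map of size {size} is too small")
        size = conv_output_size(size, kernel)   # unpadded convolution
        size = size // pool                     # max pooling, floor division
        sizes.append(size)
    return sizes


sizes = sizes_after_pairs(28, 3)
print(sizes)
```

Under these assumptions a 28×28 input is reduced to a single pixel after three pairs, so a fourth pair is not possible without padding.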

Filters

Filters determine the number of output feature maps. A filter acts as a pattern and will be able to find similarities when convolved across an image. Increasing the number of filters in the successive layers works well in most cases.

Filter Size

It’s a convention to take the filter size as an odd number, giving us a central position. One of the main problems with even-sized filters is that they would require asymmetric padding.

Instead of using a single convolution layer consisting of filters with larger sizes like (7×7, 9×9), we can use multiple convolutional layers with smaller filter sizes, which will more likely improve the model’s performance as deeper networks can detect complex patterns.
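A short calculation shows a further benefit of stacking small filters: the same receptive field with fewer weights. The sketch assumes stride 1, equal input and output channel counts, and ignores biases; the channel count of 32 is an arbitrary example.

```python
def receptive_field(n_layers, kernel):
    """Receptive field of n stacked stride-1 convolutions."""
    return 1 + n_layers * (kernel - 1)


def conv_weights(kernel, channels, n_layers=1):
    """Weight count (biases ignored) with `channels` in and out per layer."""
    return n_layers * kernel * kernel * channels * channels


channels = 32
single_7x7 = conv_weights(7, channels)
three_3x3 = conv_weights(3, channels, n_layers=3)
print(receptive_field(1, 7), receptive_field(3, 3))
print(single_7x7, three_3x3)
```

Three stacked 3×3 layers see the same 7×7 region as one 7×7 layer while using roughly half the weights, and they add extra non-linearities in between.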

Dropout

Dropout acts as a regularizer and helps prevent the model from overfitting. The dropout layer nullifies the contribution of some neurons toward the next layer and leaves others unmodified. The dropout rate determines the probability of a particular neuron’s contribution being cancelled.

In the initial epochs, we might find that the training loss is greater than the validation loss, as some neurons might be dropped during the training, but the complete network with all the neurons is used in the validation.
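The difference between training and validation behaviour can be seen in a small sketch of inverted dropout, the variant in which kept activations are scaled by 1 / (1 - rate) during training. This is an illustration in plain Python, not the framework's implementation.

```python
import random


def dropout(activations, rate, training, rng=None):
    """Zero each activation with probability `rate` while training."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)            # full network at inference
    rng = rng or random.Random()
    keep_scale = 1.0 / (1.0 - rate)         # keeps the expected sum unchanged
    return [
        0.0 if rng.random() < rate else a * keep_scale
        for a in activations
    ]


activations = [1.0] * 8
train_out = dropout(activations, rate=0.5, training=True, rng=random.Random(0))
eval_out = dropout(activations, rate=0.5, training=False)
print(train_out, eval_out)
```

During training each value is either dropped or scaled up, while at validation time every activation passes through unchanged.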

Data Augmentation

With Data Augmentation, we can generate slightly modified copies of the available images and use them for training the model. These images of different orientations help the model identify objects in different orientations.

For example, we might introduce a small rotation, zoom, and translation to the images.
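As one concrete example of such a modification, a translation simply shifts the pixels and fills the vacated area. The sketch below works on a tiny list-of-rows image; a real pipeline would typically rely on the framework's augmentation utilities, and the `translate` helper is hypothetical.

```python
def translate(image, dx, dy, fill=0):
    """Shift a 2D image (list of rows) by dx columns and dy rows."""
    height, width = len(image), len(image[0])
    shifted = [[fill] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            new_x, new_y = x + dx, y + dy
            # Pixels moved outside the frame are discarded.
            if 0 <= new_x < width and 0 <= new_y < height:
                shifted[new_y][new_x] = image[y][x]
    return shifted


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
moved = translate(image, dx=1, dy=0)
print(moved)
```

The label of the shifted copy stays the same, which is what lets augmented images serve as extra training samples.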

Other Hyperparameters to try

- Batch Normalization: It normalizes the layer inputs

- Deeper networks work well: Replacing the single convolution layer of filter size (5×5) with two successive convolution layers of filter size (3×3)

- Number of units in the dense layer and number of dense layers

- Replacing the MaxPooling Layer with a convolution layer having a stride > 1

- Optimizers

- Learning rate of the optimizer

Evaluation of the final model

Accuracy: 96%

Streamlit is a fantastic platform that removes all the hassle required in a manual deployment.

With Streamlit, all we need is a GitHub repository containing a Python script that specifies the flow of the app.

Setup

Install the Streamlit library using the below command

pip install streamlit

To run the Streamlit application use streamlit run <script_name>.py

Building the app

You can find the complete Streamlit app flow here.

Getting the best model obtained after the hyperparameter tuning and the LabelBinarizer, which is required to convert the model’s output back to the corresponding labels.

The @st.cache decorator runs the function only once, preventing unnecessary rework while redisplaying the page.
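The effect of caching can be illustrated with the standard library's `functools.lru_cache`, used here purely as a stand-in for the Streamlit decorator. The loader function and the file name are hypothetical; newer Streamlit releases also offer `st.cache_resource` and `st.cache_data` for this purpose.

```python
from functools import lru_cache

load_calls = []   # records every real (uncached) load


@lru_cache(maxsize=None)
def load_model_stub(path):
    """Pretend to load an expensive model; runs once per distinct path."""
    load_calls.append(path)
    return {"path": path}


first = load_model_stub("best_model.h5")    # hypothetical file name
second = load_model_stub("best_model.h5")   # served from the cache
print(len(load_calls), first is second)
```

The second call returns the very same object without re-running the function body, which is what keeps page reruns fast.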

Model’s Prediction

We should resize the uploaded image to 28×28 as it is our model’s input shape. We must also preserve the aspect ratio of the uploaded image.
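One common way to reach a fixed 28×28 input without distorting the image is to scale the longer side to 28 and pad the shorter side. The article does not say which approach the app uses, so the sketch below is only an assumption, and it computes the sizes rather than touching any pixels.

```python
def fit_with_padding(width, height, target=28):
    """Return the scaled size and (left, top, right, bottom) padding."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    scale = target / max(width, height)
    new_w = min(target, max(1, round(width * scale)))
    new_h = min(target, max(1, round(height * scale)))
    pad_x, pad_y = target - new_w, target - new_h
    left, top = pad_x // 2, pad_y // 2       # centre the scaled image
    return (new_w, new_h), (left, top, pad_x - left, pad_y - top)


size, padding = fit_with_padding(200, 100)
print(size, padding)
```

A 200×100 upload would be scaled to 28×14 and padded with 7 rows above and below to fill the square.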

Then we can use the preprocessed image as the model input and get the respective prediction, which can be transformed back to the label representing the English letter using the label_binarizer.
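Converting the model output back to a letter amounts to picking the most probable class and looking up its label. The plain-Python sketch below mirrors that idea; it assumes 24 output classes ordered by label value, and `decode_prediction` is a hypothetical helper.

```python
import string


def decode_prediction(probabilities, classes):
    """Return the letter for the class with the highest probability."""
    if len(probabilities) != len(classes):
        raise ValueError("one probability per class is required")
    best_index = max(range(len(probabilities)), key=probabilities.__getitem__)
    return string.ascii_uppercase[classes[best_index]]


# Labels present in the dataset: 0..24 without 9 (J); 25 (Z) never occurs.
classes = [i for i in range(26) if i not in (9, 25)]
probabilities = [0.01] * 24
probabilities[9] = 0.77                      # tenth output unit wins
letter = decode_prediction(probabilities, classes)
print(letter)
```

Because J is skipped, the tenth output unit corresponds to label 10, so the lookup through the class list is needed to avoid an off-by-one error.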

Deploying the app to the Streamlit Cloud

- Sign up for a Streamlit account here.

- Connect your GitHub account with your Streamlit account by giving all the necessary permissions to access your repositories.

- Ensure the repository contains a requirements.txt file specifying all the app’s dependencies.

- Click on the New App button available here.

- Give the repository, branch name, and the Python script name, which contains the flow of our app.

- Click on the Deploy button.

Now your app will be deployed to the web and will get updated whenever you update the repository.

In this tutorial, we have understood the following,

- The pipeline for developing a solution using deep learning for a problem

- Preprocessing the image data

- Training a Convolutional Neural Network

- Evaluation of the model

- Hyperparameter Tuning

- Deployment

Note that this tutorial only briefly introduces the complete end-to-end pipeline of developing a solution using Deep Learning techniques. These pipelines consist of extensive training, searching a much more comprehensive range of hyperparameters and evaluation metrics specific to the use cases. A deeper understanding of the model, data and wider image preprocessing techniques is required to build a pipeline for solving complex problems.

Try out the model.

For more details about the code or models used in this article, refer to this GitHub Repo.

Thanks for Reading!

I hope you find this tutorial helpful in building your next fantastic machine learning project. If you find any details incorrect in the article, please let me know in the comments section. I’d love to have your suggestions and improvements to the repository.