[Ed. note: While we take some time to rest up over the holidays and prepare for next year, we are re-publishing our top ten posts for the year. Please enjoy our favorite work this year and we’ll see you in 2024.]

One of the more fascinating aspects of large language models is their ability to improve their output through self-reflection. Feed the model its own response back, then ask it to improve the response or identify errors, and it has a much better chance of producing something factually accurate or pleasing to its users. Ask it to solve a problem by showing its work, step by step, and these systems are more accurate than those tuned just to find the correct final answer.

While the field is still developing fast, and factual errors, known as hallucinations, remain a problem for many LLM-powered chatbots, a growing body of research indicates that a more guided, auto-regressive approach can lead to better outcomes.

This gets really interesting when applied to the world of software development and CI/CD. Most developers are already familiar with processes that help automate the creation of code, detection of bugs, testing of solutions, and documentation of ideas. Several have written in the past on the idea of self-healing code. Head over to Stack Overflow’s CI/CD Collective and you’ll find numerous examples of technologists putting these ideas into practice.

When code fails, it often gives an error message. If your software is any good, that error message will say exactly what was wrong and point you in the direction of a fix. Previous self-healing code programs are clever automations that reduce errors, allow for graceful fallbacks, and manage alerts. Maybe you want to add a little disk space or delete some files when you get a warning that utilization is at 90%. Or hey, have you tried turning it off and then back on again?
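The disk-space case above can be sketched in a few lines of Python. This is a minimal illustration rather than a production tool: the 90% threshold comes from the example in the text, while `utilization`, `check_and_heal`, and the `cleanup` hook are hypothetical names introduced here. Only `shutil.disk_usage` is a real standard-library call.

```python
import shutil
from typing import Callable

THRESHOLD = 0.90  # assumed alert level, taken from the 90% example above


def utilization(used: int, total: int) -> float:
    """Return the fraction of the disk in use (0.0 to 1.0)."""
    if total <= 0:
        raise ValueError("total must be positive")
    return used / total


def check_and_heal(path: str, cleanup: Callable[[str], None],
                   threshold: float = THRESHOLD) -> bool:
    """Run cleanup(path) if utilization is at or above threshold.

    `cleanup` is a hypothetical hook: it might delete temp files or
    request more storage. Returns True when the hook was triggered.
    """
    usage = shutil.disk_usage(path)  # named tuple: total, used, free
    if utilization(usage.used, usage.total) >= threshold:
        cleanup(path)
        return True
    return False
```

Passing the remediation in as a callable keeps the check separate from the fix, so the same trigger could page a human, prune a cache, or do nothing but log.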

Developers love automating solutions to their problems, and with the rise of generative AI, this concept is likely to be applied to the creation, maintenance, and improvement of code at an entirely new level.

More code requires more quality control

The ability of LLMs to quickly produce large chunks of code may mean that developers, and even non-developers, will be adding more to the company codebase than in the past. This poses its own set of challenges.

“One of the things that I'm hearing a lot from software engineers is that they’re saying, ‘Well, I mean, anybody can generate some code now with some of these tools, but we’re concerned about maybe the quality of what's being generated,’” says Forrest Brazeal, head of developer media at Google Cloud. The pace and volume at which these systems can output code can feel overwhelming. “I mean, think about reviewing a 7,000 line pull request that somebody on your team wrote. It’s very, very difficult to do that and have meaningful feedback. It's not getting any easier when AI generates this huge amount of code. So we’re rapidly entering a world where we’re going to have to come up with software engineering best practices to make sure that we’re using GenAI effectively.”

“People have talked about technical debt for a long time, and now we have a brand new credit card here that is going to allow us to accumulate technical debt in ways we were never able to do before,” said Armando Solar-Lezama, a professor at the Massachusetts Institute of Technology’s Computer Science & Artificial Intelligence Laboratory, in an interview with the Wall Street Journal. “I think there is a risk of accumulating lots of very shoddy code written by a machine,” he said, adding that companies will have to rethink methodologies around how they can work in tandem with the new tools’ capabilities to avoid that.

We recently had a conversation with some folks from Google who helped to build and test the new AI models powering code suggestions in tools like Bard. Paige Bailey is the PM in charge of generative models at Google, working across the newly combined unit that brought together DeepMind and Google Brain. Think of code produced by an AI as something made by an “L3 SWE helper that's at your bidding,” says Bailey, “and that you should really carefully look over.”

Still, Bailey believes that some of the work of checking the code over for accuracy, security, and speed will eventually fall to AI as well. “Over time, I do have the expectation that large language models will start kind of recursively applying themselves to the code outputs. So there’s already been research done from Google Brain showing that you can kind of recursively apply LLMs such that if there’s generated code, you say, ‘Hey, make sure that there are no bugs. Make sure that it's performant, make sure that it's fast, and then give me that code,’ and then that's what’s finally displayed to the user. So hopefully this will improve over time.”

What are people building and experimenting with today?

Google is already using this technology to help speed up the process of resolving code review comments. The authors of a recent paper on this approach write that, “As of today, code-change authors at Google address a substantial amount of reviewer comments by applying an ML-suggested edit. We expect that to reduce time spent on code reviews by hundreds of thousands of hours annually at Google scale. Unsolicited, very positive feedback highlights that the impact of ML-suggested code edits increases Googlers’ productivity and allows them to focus on more creative and complex tasks.”

“In many cases when you go through a code review process, your reviewer may say, please fix this, or please refactor this for readability,” says Marcos Grappeggia, the PM on Google’s Duet coding assistant. He thinks of an AI agent that can respond to this as a sort of advanced linter for vetting comments. “That's something we saw as being promising in terms of reducing the time for this fix getting done.” The suggested fix doesn’t replace a person, “but it helps, it gives kind of say a starting point for you to think from.”

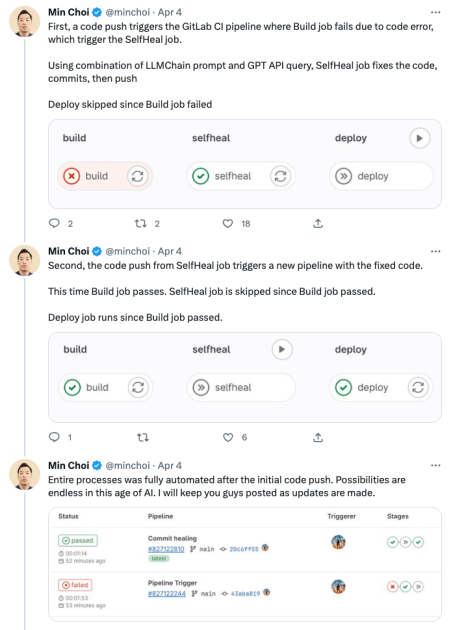

Recently, we’ve seen some intriguing experiments that apply this review capability to code you’re trying to deploy. Say a code push triggers an alert on a build failure in your CI pipeline. A plugin triggers a GitHub action that automatically sends the code to a sandbox where an AI can review the code and the error, then commit a fix. That new code is run through the pipeline again, and if it passes the test, is moved to deploy.

“We made several improvements in the mechanism for the retry loop so that you don’t end up in a weird situation, but that’s the essential mechanics of it,” explains Calvin Hoenes, who created the plugin. To make the agent more accurate, he added documentation about his code into a vector database he spun up with Pinecone. This allows it to learn things the base model might not have access to and to be regularly updated as needed.
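The fail, fix, and rerun cycle described above might look something like the following sketch. It is not Hoenes’s plugin: `self_heal`, `run_pipeline`, `propose_fix`, and `max_runs` are hypothetical names, and the toy stand-ins at the bottom replace a real CI run and a real model call. The cap on runs is one simple way to keep a retry loop from spinning forever.

```python
from typing import Callable, Optional, Tuple

# Hypothetical signatures: a pipeline returns (passed, error_message),
# and a fixer returns revised code given the code and the error.
Pipeline = Callable[[str], Tuple[bool, str]]
Fixer = Callable[[str, str], str]


def self_heal(code: str, run_pipeline: Pipeline, propose_fix: Fixer,
              max_runs: int = 3) -> Optional[str]:
    """Run the pipeline up to max_runs times, asking for a fix between runs.

    Returns the first version of the code that passes, or None if every
    run fails, at which point a human should take over.
    """
    for attempt in range(1, max_runs + 1):
        passed, error = run_pipeline(code)
        if passed:
            return code
        if attempt < max_runs:  # no point fixing code we will never rerun
            code = propose_fix(code, error)
    return None


# Toy stand-ins so the sketch runs without a CI system or a model.
def toy_pipeline(code: str) -> Tuple[bool, str]:
    return ("bug" not in code, "found 'bug' in code")


def toy_fix(code: str, error: str) -> str:
    return code.replace("bug", "fix", 1)  # repairs one problem per pass
```

Returning `None` instead of deploying the last attempt keeps a person in the loop whenever the automated fixes run out.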

Right now his work happens in the CI/CD pipeline, but he dreams of a world where these kinds of agents can help fix errors that arise from code that’s already live in the world. “What’s very fascinating is when you actually have in production code running and producing an error, could it heal itself on the fly?” asks Hoenes. “So you have your Kubernetes cluster. If one part detects a failure, it runs into a healing flow.”

One pod is removed for repairs, another takes its place, and when the original pod is ready, it’s put back into action. For now, says Hoenes, we need humans in the loop. Will there come a time when computer programs are expected to autonomously heal themselves as they are crafted and grown? “I mean, if you have great test coverage, right, if you have one hundred percent test coverage, you have a very clean, clean codebase, I can see that happening. For the medium, foreseeable future, we probably better off with the humans in the loop.”

Pay it forward: linters, maintainers, and the never-ending battle with technical debt

Finding problems during CI/CD or addressing bugs as they arise is great, but let’s take things a step further. You work at a company with a large, ever-growing code base. It’s fair to assume you’ve got some level of technical debt. What if you had an AI agent that reviewed old code and suggested changes it thinks will make your code run more efficiently? It might alert you to fresh updates in a library that will benefit your architecture. Or it might have read about some new tricks for improving certain functions in a recent blog or documentation release. The AI’s advice arrives each morning as pull requests for a human to review.

Itamar Friedman, CEO of CodiumAI, currently approaches the problem while code is being written. His company has an AI bot that works as a pair programmer alongside developers, prompting them with tests that fail, pointing out edge cases, and generally poking holes in their code as they write, aiming to ensure that the finished product is as bug free as possible. He says a lot of tools for measuring code quality focus on aspects like performance, readability, and avoiding repetition.

Codium works on tools that allow for testing of the underlying logic, what Friedman sees as a narrower definition of functional code quality. With that approach, he believes automated improvement of code is now possible, and will soon be fairly ubiquitous. “If you’re able to verify code logic, then probably you can also help, for example, with automation of pull requests and verifying that these are done according to best practices.”

Friedman, who has contributed to AutoGPT and has given talks with its creator, sees a future in which humans guide AI, and vice versa. “A machine would go over your entire repository and tell you all of the best (and so-so) practices that it identified. Then a few tech leads can go over this and say, oh my gosh, this is how we wanted to do it, or didn't want to do it. This is our best practice for testing, this is our best practice for calling APIs, this is how we like to do the queuing, this is how we like to do caching, etc. It’s going to be configurable. Like the rules will actually be a mix of AI suggestion and human definition that will then be used by an AI bot to assist developers. That's the amazing thing.”

How is Stack Overflow experimenting with GenAI?

As our CEO recently announced, Stack Overflow now has an internal team dedicated to exploring how AI, both the latest wave of generative AI and the field more broadly, can improve our platforms and products. We’re aiming to build in public so we can bring feedback into our process. In that spirit, we shared an experiment that helped users to craft a good title for their question. The goal here is to make life easier for both the question asker and the reviewers, encouraging everyone to participate in the exchange of knowledge that happens on our public site.

It’s easy to imagine a more iterative process that would tap into the power of multi-step prompting and chain-of-thought reasoning, techniques that research has shown can vastly improve the quality and accuracy of an LLM’s output.

An AI system might review a question, suggest tweaks to the title for legibility, and offer ideas for how to better format code in the body of the question, plus a few extra tags at the end to improve categorization. Another system, the reviewer, would take a look at the updated question and assign it a score. If it passes a certain threshold, it can be returned to the user for review. If it doesn’t, the system takes another pass, improving on its earlier suggestions and then resubmitting its output for approval.
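One way to picture that two-system loop is the sketch below. It is an illustration under stated assumptions, not a description of anything Stack Overflow has built: `refine`, `improve`, `review`, the 0.8 threshold, and the pass limit are all hypothetical, and the toy reviewer simply checks for three placeholder markers where a real system would call a model.

```python
from typing import Callable, Tuple


def refine(draft: str,
           improve: Callable[[str, float], str],
           review: Callable[[str], float],
           threshold: float = 0.8,
           max_passes: int = 5) -> Tuple[str, float, bool]:
    """Alternate between an improver and a reviewer until the score passes.

    Returns (final_draft, final_score, accepted). The pass limit stops the
    loop if the reviewer is never satisfied.
    """
    score = review(draft)
    passes = 0
    while score < threshold and passes < max_passes:
        draft = improve(draft, score)  # improver sees the last score
        score = review(draft)
        passes += 1
    return draft, score, score >= threshold


# Toy stand-ins: the "reviewer" scores a draft by how many of three
# placeholder markers it contains; the "improver" adds one per pass.
CHECKS = ("[title]", "[code]", "[tags]")


def toy_review(draft: str) -> float:
    return sum(check in draft for check in CHECKS) / len(CHECKS)


def toy_improve(draft: str, score: float) -> str:
    for check in CHECKS:
        if check not in draft:
            return f"{draft} {check}"
    return draft
```

Keeping the reviewer separate from the improver means the acceptance bar can be tuned, or handed to a human, without touching the part that generates suggestions.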

We’re lucky to be able to work with colleagues at Prosus, many of whom have decades of experience in the field of machine learning. I chatted recently with Zulkuf Genc, Head of Data Science at Prosus AI. He has focused on Natural Language Processing (NLP) in the past, co-developing an LLM-based model to analyze financial sentiment, FinBert, that remains one of the most popular models at HuggingFace in its category.

“I had tried using autonomous agents in the past for my academic research, but they never worked very well, and had to be guided by more rules-based heuristics, so not truly autonomous,” he told me in an interview this month. The latest LLMs have changed all that. We’re at the point now, he explained, where you can ask agents to perform autonomously and get good results, especially if the task is specified well. “In the case of Stack Overflow, there is an excellent guide to what quality output should look like, because there are clear definitions of what makes a good question or answer.”

What about you?

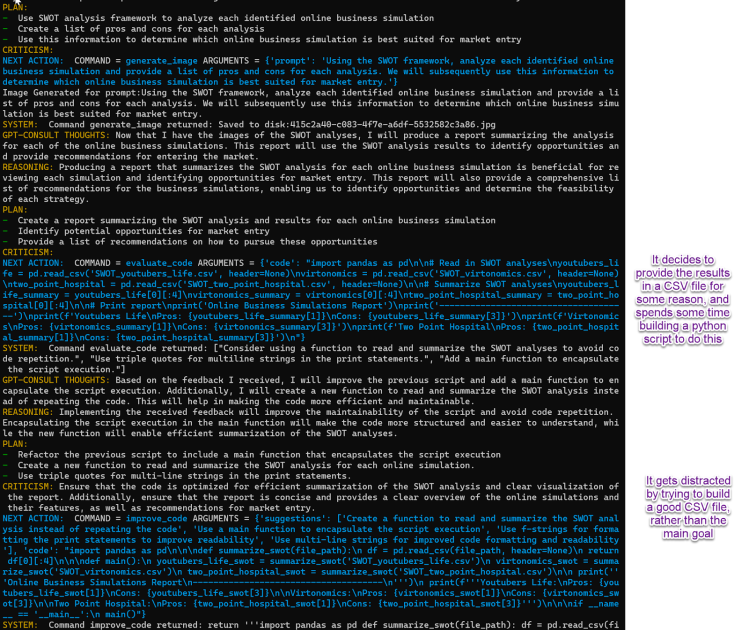

Developers are right to wonder, and worry, about the impact this kind of automation will have on the industry. For now, however, these tools augment and enhance existing skills, but fall far short of replacing actual humans. It appears some of the bots have already learned to automate themselves into a loop and out of a job. Tireless agents that are always working to keep your code clean. I guess we’re lucky that so far they seem to be as easily distracted by time-consuming detours as the average human developer?

Technology marches on, but procrastination remains unbeaten.

We’re compiling the results from our Developer Survey and have tons of interesting data to share on how developers view these tools and the degree to which they are already adopting them into their workflows.

If you’ve been playing around with ideas like this, from self-healing code to Roboblogs, leave us a comment and we’ll try to work your experience into our next post. And if you want to learn more about what Stack Overflow is doing with AI, check out some of the experiments we’ve shared on Meta.