Overview

All of the code used in this tutorial can be found on my GitHub project: docker-github-runner-linux.

Welcome to Part 5 of my series: Self Hosted GitHub Runner containers on Azure.

In the previous part of this series, we looked at how we can use Azure-CLI or CI/CD workflows in GitHub using GitHub Actions to run self hosted GitHub runner docker containers as Azure Container Instances (ACI) in Azure from a remote container registry also hosted in Azure (ACR).

One of the drawbacks of self hosted runners is that if no GitHub Action Workflows or Jobs are running, the runner just sits idle and incurs cost, whether that runner is an ACI or a docker container hosted on a VM.

So following on from the previous part, we will look at how we can use Azure Container Apps (ACA) to run images from the remote registry instead, and also demonstrate how we can automatically scale our self hosted GitHub runners up and down from 0 based on load/demand, using Kubernetes Event-driven Autoscaling (KEDA).

This will allow us to save on costs and provision self hosted GitHub runners only when needed.

NOTE: At the time of this writing Azure Container Apps supports:

- Any Linux-based x86-64 (linux/amd64) container image.

- Containers from any public or private container registry.

- There are no available KEDA scalers for GitHub runners at the time of this writing.

Proof of Concept

Because there are no available KEDA scalers for GitHub runners at the time of this writing, we will use an Azure Storage Queue to control the scaling and provisioning of our self hosted GitHub runners.

We will create the Container App Environment and Azure Queue, then create an Azure Queue KEDA Scale Rule that will have a minimum of 0 and a maximum of 3 self hosted runner containers. (It is possible to scale up to a max of 30).

We will use the Azure Queue to associate GitHub workflows with queue messages. An external GitHub workflow Job adds a message to the queue, which signals KEDA to provision a self hosted runner on the fly for use by any subsequent workflow Jobs inside the GitHub Workflow.

After all subsequent workflow Jobs have finished running, the queue message associated with the workflow is removed from the queue and KEDA scales down/destroys the self hosted runner container, essentially scaling back down to 0 if there are no other GitHub workflows running.
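The relationship between queue messages and runner count can be sketched roughly as follows. This is a simplified model, not KEDA's actual implementation: it assumes the desired replica count is the queue length divided by the rule's target queue length (rounded up), clamped between the minimum and maximum replicas. The function name `desired_replicas` is hypothetical, and real scaling also depends on polling intervals and cool-down periods.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of the tutorial's scripts).
# Assumption: desired = ceil(messages / target), clamped to [min, max].
desired_replicas() {
  local messages="$1"
  local target="$2"
  local min="$3"
  local max="$4"
  # Integer ceiling division: (a + b - 1) / b
  local desired=$(( (messages + target - 1) / target ))
  if [ "$desired" -lt "$min" ]; then desired=$min; fi
  if [ "$desired" -gt "$max" ]; then desired=$max; fi
  echo "$desired"
}

# Queue length target of 1, min 0 and max 3 replicas, as used in this post.
for n in 0 1 2 5; do
  echo "messages=$n -> replicas=$(desired_replicas "$n" 1 0 3)"
done
```

With a target queue length of 1, each queued workflow maps to one runner until the maximum of 3 is reached, and an empty queue maps to 0 runners.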

Pre-Requisites

Things we will need to implement this container app proof of concept:

- Azure Container Apps deployment Resource Group (Optional).

- Azure Container Registry (ACR) – See Part3 of this blog series. (Admin account needs to be enabled).

- GitHub Service Principal linked with Azure – See Part3 of this blog series.

- Log Analytics Workspace to link with Azure Container Apps.

- Azure storage account and queue to be used for scaling with KEDA.

- Azure Container Apps environment.

- Container App from docker image (self hosted GitHub runner) stored in ACR.

For this step I will use a PowerShell script, Deploy-ACA.ps1, running Azure-CLI, to create the entire environment and a Container App linked with a target GitHub Repo where we will scale runners using KEDA.

#Log into Azure
#az login

#Add container app extension to Azure-CLI
az extension add --name containerapp

#Variables (ACA)
$randomInt = Get-Random -Maximum 9999
$area = "uksouth"
$acaResourceGroupName = "Demo-ACA-GitHub-Runners-RG" #Resource group created to deploy ACAs
$acaStorageName = "aca2keda2scaler$randomInt" #Storage account that will be used to scale runners/KEDA queue scaling
$acaEnvironment = "gh-runner-aca-env-$randomInt" #Azure Container Apps Environment Name
$acaLaws = "$acaEnvironment-laws" #Log Analytics Workspace to link to Container App Environment
$acaName = "myghprojectpool" #Azure Container App Name

#Variables (ACR) - ACR Admin account needs to be enabled
$acrLoginServer = "registryname.azurecr.io" #The login server name of the ACR (all lowercase). Example: myregistry.azurecr.io
$acrUsername = "acrAdminUser" #The Admin Account `Username` on the ACR
$acrPassword = "acrAdminPassword" #The Admin Account `Password` on the ACR
$acrImage = "$acrLoginServer/pwd9000-github-runner-lin:2.293.0" #Image reference to pull

#Variables (GitHub)
$pat = "ghPatToken" #GitHub PAT token
$githubOrg = "Pwd9000-ML" #GitHub Owner/Org
$githubRepo = "docker-github-runner-linux" #Target GitHub repository to register self hosted runners against
$appName = "GitHub-ACI-Deploy" #Previously created Service Principal linked to GitHub Repo (See part 3 of blog series)

# Create a resource group to deploy ACA
az group create --name "$acaResourceGroupName" --location "$area"
$acaRGId = az group show --name "$acaResourceGroupName" --query id --output tsv

# Create an azure storage account and queue to be used for scaling with KEDA
az storage account create `
    --name "$acaStorageName" `
    --location "$area" `
    --resource-group "$acaResourceGroupName" `
    --sku "Standard_LRS" `
    --kind "StorageV2" `
    --https-only true `
    --min-tls-version "TLS1_2"
$storageConnection = az storage account show-connection-string --resource-group "$acaResourceGroupName" --name "$acaStorageName" --output tsv
$storageId = az storage account show --name "$acaStorageName" --query id --output tsv

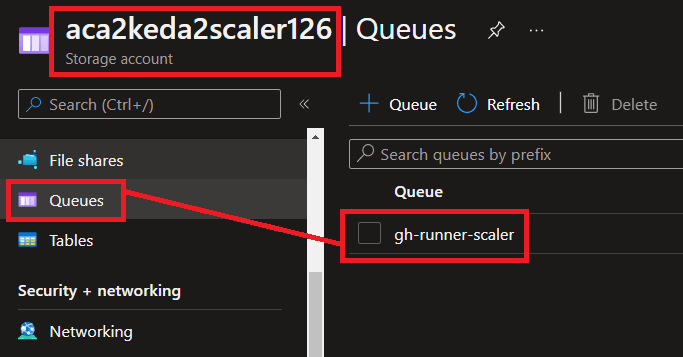

az storage queue create `
    --name "gh-runner-scaler" `
    --account-name "$acaStorageName" `
    --connection-string "$storageConnection"

#Create Log Analytics Workspace for ACA
az monitor log-analytics workspace create --resource-group "$acaResourceGroupName" --workspace-name "$acaLaws"
$acaLawsId = az monitor log-analytics workspace show -g $acaResourceGroupName -n $acaLaws --query customerId --output tsv
$acaLawsKey = az monitor log-analytics workspace get-shared-keys -g $acaResourceGroupName -n $acaLaws --query primarySharedKey --output tsv

#Create ACA Environment
az containerapp env create --name "$acaEnvironment" `
    --resource-group "$acaResourceGroupName" `
    --logs-workspace-id "$acaLawsId" `
    --logs-workspace-key "$acaLawsKey" `
    --location "$area"

# Grant AAD App and Service Principal Contributor to ACA deployment RG + `Storage Queue Data Contributor` on Storage account
az ad sp list --display-name $appName --query "[].appId" -o tsv | ForEach-Object {
    az role assignment create --assignee "$_" `
        --role "Contributor" `
        --scope "$acaRGId"
    az role assignment create --assignee "$_" `
        --role "Storage Queue Data Contributor" `
        --scope "$storageId"
}

#Create Container App from docker image (self hosted GitHub runner) stored in ACR
az containerapp create --resource-group "$acaResourceGroupName" `
    --name "$acaName" `
    --image "$acrImage" `
    --environment "$acaEnvironment" `
    --registry-server "$acrLoginServer" `
    --registry-username "$acrUsername" `
    --registry-password "$acrPassword" `
    --secrets gh-token="$pat" storage-connection-string="$storageConnection" `
    --env-vars GH_OWNER="$githubOrg" GH_REPOSITORY="$githubRepo" GH_TOKEN=secretref:gh-token `
    --cpu "1.75" --memory "3.5Gi" `
    --min-replicas 0 `
    --max-replicas 3

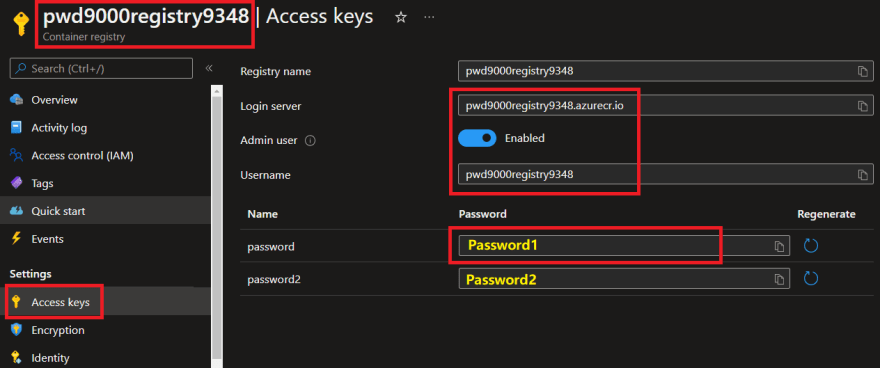

NOTES: Before running the above PowerShell script you will need to enable the Admin Account on the Azure Container Registry and make a note of the Username and Password, as well as the LoginServer and Image reference, as these need to be passed as variables in the script:

#Variables (ACR) - ACR Admin account needs to be enabled
$acrLoginServer = "registryname.azurecr.io" #The login server name of the ACR (all lowercase). Example: myregistry.azurecr.io
$acrUsername = "acrAdminUser" #The Admin Account `Username` on the ACR
$acrPassword = "acrAdminPassword" #The Admin Account `Password` on the ACR
$acrImage = "$acrLoginServer/pwd9000-github-runner-lin:2.293.0" #Image reference to pull

You will also need to provide variables for the GitHub Service Principal/AppName we created in Part3 of the blog series, which is linked with Azure, a GitHub PAT token, and specify the Owner and Repository to link with the Container App:

#Variables (GitHub)
$pat = "ghPatToken" #GitHub PAT token
$githubOrg = "Pwd9000-ML" #GitHub Owner/Org
$githubRepo = "docker-github-runner-linux" #Target GitHub repository to register self hosted runners against
$appName = "GitHub-ACI-Deploy" #Previously created Service Principal linked to GitHub Repo (See part 3 of blog series)

See creating a personal access token on how to create a GitHub PAT token. PAT tokens are only displayed once and are sensitive, so ensure they are kept safe.

The minimum permission scopes required on the PAT token to register a self hosted runner are: "repo", "read:org".

Let's look at what this script created step-by-step
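As a quick sanity check before running the deployment script, the scopes on a token can be compared against the two required ones. The sketch below is only an illustration: the helper name `has_required_scopes` and the `GH_PAT` variable are hypothetical, and it assumes a classic PAT, for which the GitHub API reports granted scopes in the `X-OAuth-Scopes` response header.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeeds only if every required scope appears in a
# comma-separated scope list such as the X-OAuth-Scopes header value.
has_required_scopes() {
  # Strip spaces and pad with commas so "repo" cannot match "repo:status".
  granted=",$(printf '%s' "$1" | tr -d ' '),"
  shift
  for scope in "$@"; do
    case "$granted" in
      *",$scope,"*) ;;
      *) return 1 ;;
    esac
  done
  return 0
}

# To read the scopes of a real classic PAT (needs network access; GH_PAT is
# an assumed variable holding the token):
#   curl -sI -H "Authorization: token $GH_PAT" https://api.github.com/user | grep -i '^x-oauth-scopes:'
if has_required_scopes "repo, read:org" repo read:org; then
  echo "token scopes look sufficient"
fi
```

The check only parses a scope string, so it can be tried offline with sample values before pointing it at a real token.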

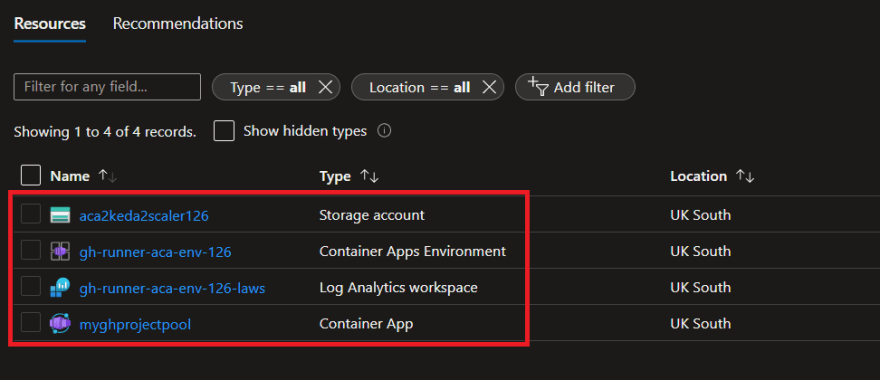

It created a resource group called: Demo-ACA-GitHub-Runners-RG, containing the Azure Container Apps Environment linked with a Log Analytics Workspace, an Azure Storage Account and a Container App based on a GitHub runner image pulled from our Azure Container Registry.

In addition, the GitHub service principal created in Part3 of this series has also been granted Contributor access on the Resource Group and Storage Queue Data Contributor on the storage account we will use for scaling runners.

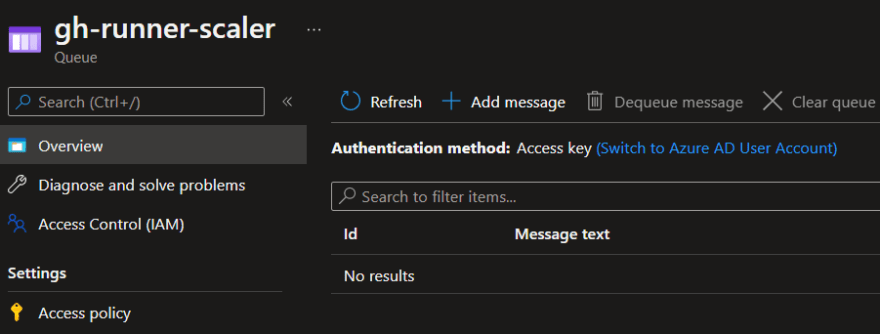

It also created an empty queue for us (gh-runner-scaler), which we will use to associate running GitHub Workflows with queue messages once we start running and scaling GitHub Action Workflows.

Container App

Let's take a deeper look at the created container app itself:

#Create Container App from docker image (self hosted GitHub runner) stored in ACR
az containerapp create --resource-group "$acaResourceGroupName" `
    --name "$acaName" `
    --image "$acrImage" `
    --environment "$acaEnvironment" `
    --registry-server "$acrLoginServer" `
    --registry-username "$acrUsername" `
    --registry-password "$acrPassword" `
    --secrets gh-token="$pat" storage-connection-string="$storageConnection" `
    --env-vars GH_OWNER="$githubOrg" GH_REPOSITORY="$githubRepo" GH_TOKEN=secretref:gh-token `
    --cpu "1.75" --memory "3.5Gi" `
    --min-replicas 0 `
    --max-replicas 3

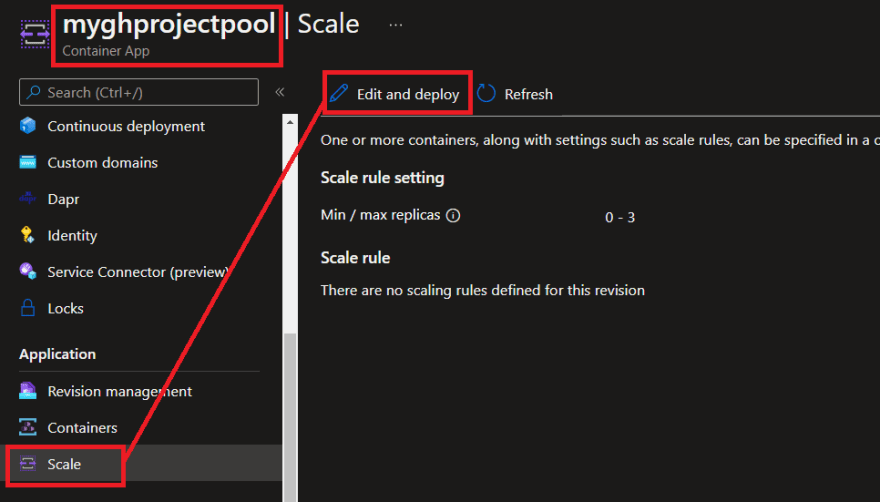

As you can see, the Container App created is scaled at 0-3, and we do not yet have a scale rule configured:

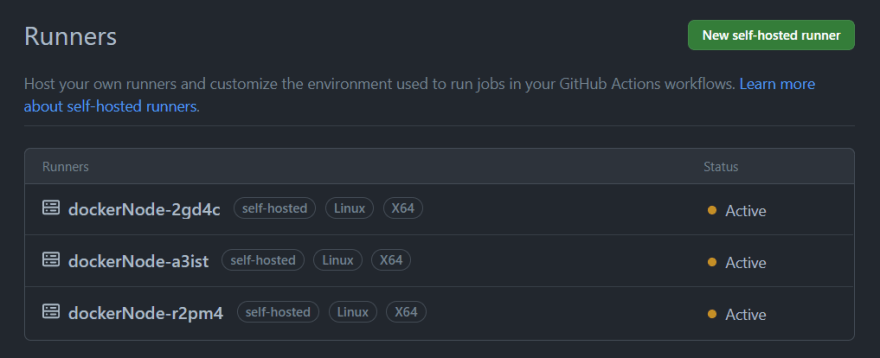

You will also notice that the GitHub repository we configured as the target to deploy runners to has no runners yet, because our scaling is set to 0:

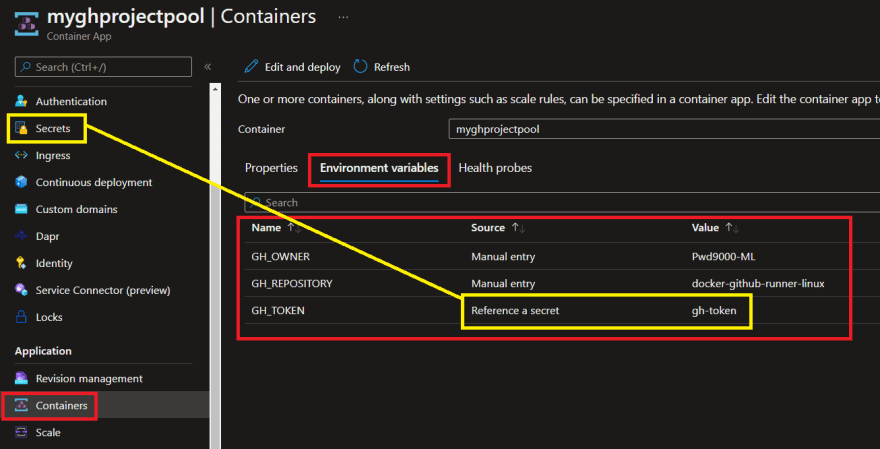

If you have been following along with this blog series you will know that when we provisioned a self hosted GitHub runner using the image we created, through docker or as an ACI, we had to pass in some environment variables such as GH_OWNER, GH_REPOSITORY and GH_TOKEN to specify which repo the runners should be registered on.

You will notice that these variables are stored in the Container App configuration.

Notice that the GH_TOKEN is actually referenced by a secret.

The script also sets the Azure Queue Storage Account Connection String as a secret, because we will need this to set up our KEDA scale rule next.

Create a scale rule

Next we will create a KEDA scaling rule. In the Azure portal navigate to the Container App. Go to 'Scale' and click on 'Edit and deploy'.

Then click on the 'Scale' tab and select '+ Add':

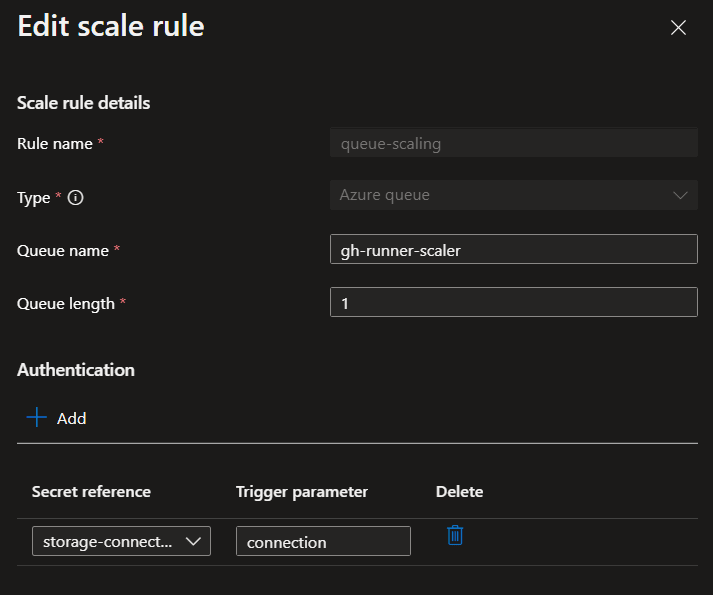

This will bring up the scaling rule configuration pane. Fill out the following:

| Key | Value | Description |
|---|---|---|
| Rule Name | 'queue-scaling' | Name for scale rule |
| Type | 'Azure queue' | Type of scaler to use |
| Queue name | 'gh-runner-scaler' | Azure storage queue name created by script |
| Queue length | '1' | Trigger threshold (Each workflow run) |

Then click on '+ Add' in the Authentication section.

Under 'Secret reference' you will see a drop down to select the 'storage-connection-string' secret we created earlier. For the 'Trigger parameter' type 'connection'.

Then click on 'Add' and 'Create'. After a minute you will see that the new scale rule has been created.

NOTE: When you create the scale rule for the first time, while the container app is being provisioned, you will notice a short lived runner appear on the GitHub repo. The reason for this is that the provisioning process will provision at least 1x instance momentarily and then scale down to 0 after about 5 minutes.
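The same rule could probably also be applied from the command line instead of the portal. The sketch below is an assumption rather than something this walkthrough used: it assumes the installed containerapp extension supports the `--scale-rule-*` flags on `az containerapp update`, and the wrapper name `add_queue_scale_rule` and the `DRY_RUN` switch are hypothetical. By default it only prints the command so it can be reviewed first.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper (not part of Deploy-ACA.ps1). By default it only prints
# the command; set DRY_RUN=0 to actually execute it.
# Assumption: the installed containerapp extension supports --scale-rule-* flags.
add_queue_scale_rule() {
  app="$1"; rg="$2"; queue="$3"
  # Build the argument list; values mirror the portal table above.
  set -- az containerapp update \
    --name "$app" \
    --resource-group "$rg" \
    --min-replicas 0 \
    --max-replicas 3 \
    --scale-rule-name queue-scaling \
    --scale-rule-type azure-queue \
    --scale-rule-metadata "queueName=$queue" "queueLength=1" \
    --scale-rule-auth "connection=storage-connection-string"
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

add_queue_scale_rule "myghprojectpool" "Demo-ACA-GitHub-Runners-RG" "gh-runner-scaler"
```

The `connection=storage-connection-string` pair corresponds to the 'Trigger parameter' and 'Secret reference' fields filled in through the portal.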

Running and Scaling Workflows

Next we will create a GitHub workflow that uses an external Job to associate our workflow run with an Azure Queue message, which will automatically trigger KEDA to provision a self hosted runner in our repo for any subsequent workflow Jobs.

As we saw earlier, we currently do not have any self hosted runners on our GitHub repository.

You can use the following example workflow: kedaScaleTest.yml

name: KEDA Scale self hosted

on:
  workflow_dispatch:

env:
  AZ_STORAGE_ACCOUNT: aca2keda2scaler126
  AZ_QUEUE_NAME: gh-runner-scaler

jobs:
  #External Job to create and associate workflow with a unique QueueId on Azure queue to scale up KEDA
  scale-keda-queue-up:
    runs-on: ubuntu-latest
    steps:
      - name: 'Login via Azure CLI'
        uses: azure/login@v1
        with:
          creds: ${{ secrets.AZURE_CREDENTIALS }}
      - name: scale up self hosted
        id: scaleJob
        run: |
          OUTPUT=$(az storage message put --queue-name "${{ env.AZ_QUEUE_NAME }}" --content "${{ github.run_id }}" --account-name "${{ env.AZ_STORAGE_ACCOUNT }}")
          echo "::set-output name=scaleJobId::$(echo "$OUTPUT" | grep "id" | sed 's/^.*: //' | sed 's/,*$//g')"
          echo "::set-output name=scaleJobPop::$(echo "$OUTPUT" | grep "popReceipt" | sed 's/^.*: //' | sed 's/,*$//g')"
    outputs:
      scaleJobId: ${{ steps.scaleJob.outputs.scaleJobId }}
      scaleJobPop: ${{ steps.scaleJob.outputs.scaleJobPop }}

  #Subsequent Jobs runs-on [self-hosted]. Job1, Job2, JobN etc etc
  testRunner:
    needs: scale-keda-queue-up
    runs-on: [self-hosted]
    steps:
      - uses: actions/checkout@v3
      - name: Install Terraform
        uses: hashicorp/setup-terraform@v2
      - name: Display Terraform Version
        run: terraform --version
      - name: Display Azure-CLI Version
        run: az --version
      - name: Delay runner finish (5min)
        run: sleep 5m
      #Remove unique QueueId on Azure queue associated with workflow as final step to scale down KEDA
      - name: 'Login via Azure CLI'
        uses: azure/login@v1
        with:
          creds: ${{ secrets.AZURE_CREDENTIALS }}
      - name: scale down self hosted
        run: |
          az storage message delete --id "${{ needs.scale-keda-queue-up.outputs.scaleJobId }}" --pop-receipt "${{ needs.scale-keda-queue-up.outputs.scaleJobPop }}" --queue-name "${{ env.AZ_QUEUE_NAME }}" --account-name "${{ env.AZ_STORAGE_ACCOUNT }}"

NOTE: In the above GitHub workflow, replace the environment variables with your Azure Storage Account and Queue name:

env:
  AZ_STORAGE_ACCOUNT: aca2keda2scaler126
  AZ_QUEUE_NAME: gh-runner-scaler

Let's take a look at what this workflow does step-by-step

Job 1

jobs:
  #External Job to create and associate workflow with a unique QueueId on Azure queue to scale up KEDA
  scale-keda-queue-up:
    runs-on: ubuntu-latest
    steps:
      - name: 'Login via Azure CLI'
        uses: azure/login@v1
        with:
          creds: ${{ secrets.AZURE_CREDENTIALS }}
      - name: scale up self hosted
        id: scaleJob
        run: |
          OUTPUT=$(az storage message put --queue-name "${{ env.AZ_QUEUE_NAME }}" --content "${{ github.run_id }}" --account-name "${{ env.AZ_STORAGE_ACCOUNT }}")
          echo "::set-output name=scaleJobId::$(echo "$OUTPUT" | grep "id" | sed 's/^.*: //' | sed 's/,*$//g')"
          echo "::set-output name=scaleJobPop::$(echo "$OUTPUT" | grep "popReceipt" | sed 's/^.*: //' | sed 's/,*$//g')"
    outputs:
      scaleJobId: ${{ steps.scaleJob.outputs.scaleJobId }}
      scaleJobPop: ${{ steps.scaleJob.outputs.scaleJobPop }}

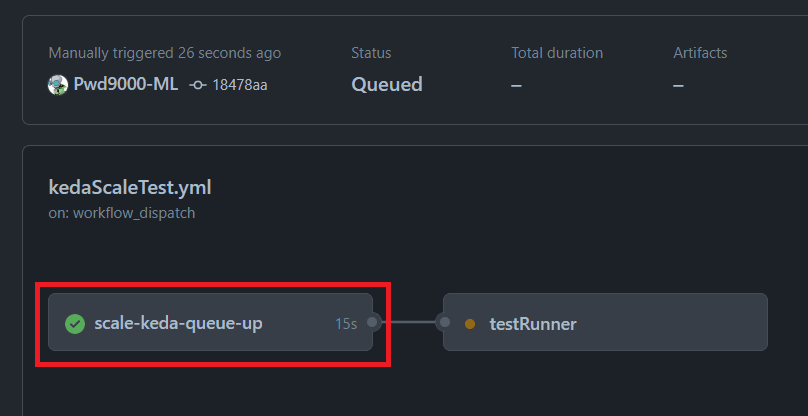

The first Job, called scale-keda-queue-up, uses an external (GitHub hosted) runner to send a queue message to the Azure Queue and then saves the unique Queue Id and Queue popReceipt as outputs that we can later reference in other Jobs. This unique queue message represents the workflow run.
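To make the grep/sed extraction in that step easier to follow, here is a small standalone sketch that can be run without Azure. The sample JSON is only assumed to resemble the output of `az storage message put`, all values in it are made up, and the helper name `json_field` is hypothetical. Unlike the workflow step, the helper matches the quoted key and strips the surrounding quotes from the value.

```bash
#!/usr/bin/env bash
# Sample output assumed to be shaped like the JSON returned by
# `az storage message put`. All values are made up for illustration.
SAMPLE_OUTPUT='{
  "content": "2735493028",
  "dequeueCount": null,
  "expirationTime": "2022-08-01T10:00:00+00:00",
  "id": "11111111-2222-3333-4444-555555555555",
  "insertionTime": "2022-07-25T10:00:00+00:00",
  "popReceipt": "AgAAAAMAAAAAAAAA",
  "timeNextVisible": "2022-07-25T10:00:00+00:00"
}'

# Hypothetical helper: print the value of a top-level string field, unquoted.
json_field() {
  # 1) keep the line holding the key, 2) drop everything up to ": ",
  # 3) drop a trailing comma, 4) drop the surrounding double quotes.
  echo "$1" | grep "\"$2\":" | sed 's/^.*: //' | sed 's/,*$//' | tr -d '"'
}

echo "id=$(json_field "$SAMPLE_OUTPUT" id)"
echo "popReceipt=$(json_field "$SAMPLE_OUTPUT" popReceipt)"
```

Both values are needed later because deleting a queue message requires its id together with a valid pop receipt.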

KEDA will see this queue message based on the scale rule we created earlier and then automatically provision a GitHub self hosted runner for this repo while the queue has messages:

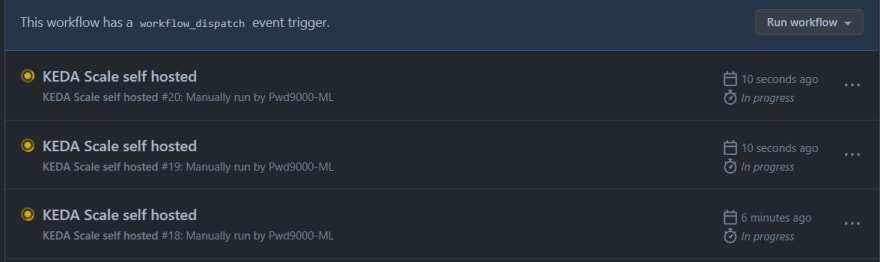

Notice that if we run a few more workflows using the same technique while the current workflow is running, more messages will appear on the azure queue.

These queue messages, which represent our workflows, will automatically cause KEDA to scale up and create more self hosted runners on our repo.

Job 2 <-> Job n

Any subsequent jobs in the workflow can use the self hosted runner, as we can see from the following Job, because it is set to 'runs-on: [self-hosted]':

  #Subsequent Jobs runs-on [self-hosted]. Job1, Job2, JobN etc etc
  testRunner:
    needs: scale-keda-queue-up
    runs-on: [self-hosted]
    steps:
      - uses: actions/checkout@v3
      - name: Install Terraform
        uses: hashicorp/setup-terraform@v2
      - name: Display Terraform Version
        run: terraform --version
      - name: Display Azure-CLI Version
        run: az --version
      - name: Delay runner finish (5min)
        run: sleep 5m
      #Remove unique QueueId on Azure queue associated with workflow as final step to scale down KEDA
      - name: 'Login via Azure CLI'
        uses: azure/login@v1
        with:
          creds: ${{ secrets.AZURE_CREDENTIALS }}
      - name: scale down self hosted
        run: |
          az storage message delete --id "${{ needs.scale-keda-queue-up.outputs.scaleJobId }}" --pop-receipt "${{ needs.scale-keda-queue-up.outputs.scaleJobPop }}" --queue-name "${{ env.AZ_QUEUE_NAME }}" --account-name "${{ env.AZ_STORAGE_ACCOUNT }}"

This second Job in our workflow runs on the self hosted GitHub runner that KEDA scaled up on Azure Container Apps. It installs Terraform and displays the versions of Terraform and Azure-CLI.

The last step in the workflow, called scale down self hosted, removes the unique queue message from the azure queue to signal that the workflow has completed. It references the outputs of the first Job through 'needs.scale-keda-queue-up.outputs'. This will cause KEDA to scale back down to 0 if there are no more workflows running (all messages are cleared from the azure queue):

      - name: scale down self hosted
        run: |
          az storage message delete --id "${{ needs.scale-keda-queue-up.outputs.scaleJobId }}" --pop-receipt "${{ needs.scale-keda-queue-up.outputs.scaleJobPop }}" --queue-name "${{ env.AZ_QUEUE_NAME }}" --account-name "${{ env.AZ_STORAGE_ACCOUNT }}"

Once the workflow is finished and the Azure Queue is cleared, you will notice that KEDA has scaled back down and our self hosted runners have also been cleaned up and removed from the repo.

Conclusion

As you can see, it was fairly easy to provision an Azure Container Apps environment and create a Container App and KEDA scale rule using azure queues to automatically provision self hosted GitHub runners onto a repository of our choice that starts with no runners at all.

There are a few caveats and pain points I would like to highlight in this proof of concept implementation, and hopefully these will be remediated soon. Once they have been fixed or improved, I will create another part to this blog series.

Issue 1: Azure Container Apps does not yet fully allow us to use the Container App's system assigned managed identity to pull images from Azure Container Registry. This means that we have to enable the ACR's Admin Account in order to provision images from the Azure Container Registry. You can follow this GitHub issue regarding this bug.

Issue 2: As mentioned earlier, there are no available KEDA scalers for GitHub runners at the time of this writing. This means that we have to utilise an Azure Storage Queue linked with our GitHub workflow to provision/scale runners with KEDA. An external GitHub workflow Job sends a queue message for our workflow, which signals KEDA to provision a self hosted runner on the fly for us to use in any subsequent GitHub Workflow Jobs.

One benefit of this method, however, is that we can have a minimum container count of 0, which means we will never have idle runners incurring unnecessary costs, essentially only paying for self hosted runners when they are actually running. This method only creates self hosted GitHub runners based on the Azure Queue length, essentially associating each workflow run with a queue item.

Once the GitHub Workflow finishes it will remove the queue item and KEDA will scale back down to 0 if there are no GitHub workflows running.

That concludes this five part series where we took a deep dive into how to implement Self Hosted GitHub Runner containers on Azure.

I hope you have enjoyed this post and have learned something new. You can find the code samples used in this blog post on my GitHub project: docker-github-runner-linux. ❤️

Author

Like, share, follow me on: 🐙 GitHub | 🐧 Twitter | 👾 LinkedIn