Generative AI technologies are powerful, but they’re limited by what they know. While an LLM like ChatGPT can perform many tasks, every LLM’s baseline knowledge has gaps based on its training data. If you ask an LLM to write something about a recent trend or event, the LLM won’t have any idea what you’re talking about, and the responses will be mixed at best and problematic at worst.

LLMs’ knowledge-based problems come down to two key issues:

- LLM training data tends to be hopelessly out-of-date (as of writing, ChatGPT’s knowledge of the world ends at January 2022, excluding functionality like Browse with Bing and GPT-4V that provide additional context).

- LLMs extrapolate when facts aren’t available, so they confidently make false but plausible-sounding statements when there’s a gap in their knowledge (called hallucination).

Retrieval augmented generation (RAG) is a strategy that helps address both of these issues, pairing information retrieval with a set of carefully designed system prompts to anchor LLMs on precise, up-to-date, and pertinent information retrieved from an external knowledge store. Prompting LLMs with this contextual knowledge makes it possible to create domain-specific applications that require a deep and evolving understanding of facts, despite LLM training data remaining static.

You could ask an LLM, “What is RAG?”, and you might get very different responses depending on whether or not the LLM itself uses RAG:

“What is RAG?” response with RAG

RAG, or retrieval augmented generation, is a method introduced by Meta AI researchers that combines an information retrieval component with a text generator model to address knowledge-intensive tasks. Its internal knowledge can be modified efficiently without needing to retrain the entire model. Would you like more details on this?

“What is RAG?” response without RAG

RAG can refer to several different things depending on the context. Here are a few common meanings:

1. Red, Amber, Green (RAG): In project management and reporting, RAG is a color-coding system used to quickly convey the status or health of a project or task.

2. RAG Analysis: This is a method used in risk assessment or decision-making. It involves categorizing risks or options as Red, Amber, or Green based on their level of severity, impact, or desirability. It helps prioritize actions or choices.

3. Random Access Generator (RAG): In some technical contexts, RAG might refer to a system or algorithm that generates random access patterns or data, often used in computer science or information retrieval.

4. Resource Allocation Graph (RAG): In the context of operating systems and computer science, a Resource Allocation Graph is used for deadlock detection. It's a directed graph that represents resource allocation and request relationships among processes in a system.

If you have a specific context or domain in mind, please provide more details, and I can give you a more precise explanation of “RAG” in that context.

There’s a big difference between answering this question with and without RAG. Especially in cases involving domain-specific knowledge (like acronyms), RAG can drastically improve the accuracy of an LLM’s responses.

If you’ve interacted with a chatbot that knows about recent events, is aware of user-specific information, or has a deeper understanding of a subject than is normal, you’ve likely interacted with RAG without realizing it. If you’ve seen tutorials about “chatting over your documents,” that’s the simplest version of RAG around. Frameworks like LangChain and LlamaIndex have democratized RAG by making it possible to create simple knowledge-aware applications quickly.

I’ve developed and implemented LLM applications internal to Skyflow, including systems that use RAG. With RAG being used nearly everywhere—and not leaving anytime soon—it’s important to understand both the basics of RAG and how to move beyond those basics when you want to move your code into production. I invite you to learn from my experience on this RAG journey so you don’t have to learn the hard way.

An overly simplified example

LangChain has an example of RAG in its smallest (but not simplest) form:

from langchain.document_loaders import WebBaseLoader

from langchain.indexes import VectorstoreIndexCreator

loader = WebBaseLoader("https://www.promptingguide.ai/strategies/rag")

index = VectorstoreIndexCreator().from_loaders([loader])

index.query("What is RAG?")

With these five lines, we get a description of RAG, but the code is heavily abstracted, so it’s difficult to understand what’s actually happening:

- We fetch the contents of a web page (our knowledge base for this example).

- We process the source contents and store them in a knowledge base (in this case, a vector database).

- We input a prompt, LangChain finds bits of information from the knowledge base, and passes both the prompt and the knowledge base results to the LLM.

While this script is helpful for prototyping and understanding the main beats of using RAG, it’s not all that useful for moving beyond that stage because you don’t have much control. Let’s discuss what actually goes into implementation.

Basic architecture

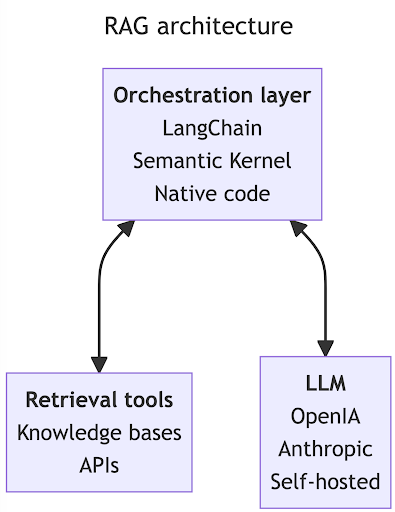

Because a full LLM application architecture would be fairly large, we’ll consider just the components that enable RAG:

- The orchestration layer receives the user’s input and any associated metadata (like conversation history), interacts with all of the related tooling, ships the prompt off to the LLM, and returns the result. Orchestration layers are typically composed of tools like LangChain, Semantic Kernel, and others with some native code (often Python) knitting it all together.

- Retrieval tools are a group of utilities that return context that informs and grounds responses to the user prompt. This group encompasses both knowledge bases and API-based retrieval systems.

- The LLM is the large language model that you’re sending prompts to. It might be hosted by a third party like OpenAI or run internally in your own infrastructure. For the purposes of this article, the exact model you’re using doesn’t matter.

In a typical LLM application, your inference processing script connects to retrieval tools as necessary. If you’re building an LLM agent-based application, each retrieval utility is exposed to your agent as a tool. From here on, we’ll only discuss typical script-based usage.

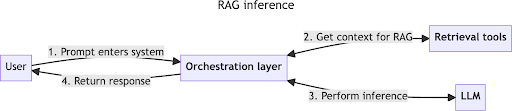

When users trigger your inference flow, the orchestration layer knits together the necessary tools and LLMs to gather context from your retrieval tools and generate contextually relevant, informed responses. The orchestration layer handles all your API calls and RAG-specific prompting strategies (which we’ll touch on shortly). It also performs validations, like making sure you don’t go over your LLM’s token limit, which could cause the LLM to reject your request because you stuffed too much text into your prompt.
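As a rough illustration of that flow, here’s a minimal sketch of an orchestration function. Everything in it is hypothetical: the `answer` function, the retrieval tools passed in as plain callables, the `llm` callable standing in for a hosted or local model, and the character-based budget check standing in for a real token count.

```python
from typing import Callable, List

# Hypothetical types: a retrieval tool maps a query to context snippets,
# and an LLM maps a prompt string to a response string.
RetrievalTool = Callable[[str], List[str]]
LLM = Callable[[str], str]


def answer(request: str, history: List[str], tools: List[RetrievalTool],
           llm: LLM, max_prompt_chars: int = 4000) -> str:
    """Gather context from every retrieval tool, build a prompt, call the LLM."""
    context: List[str] = []
    for tool in tools:
        context.extend(tool(request))

    prompt = (
        "Use History and Context to inform your answer.\n"
        f"History: {history}\n"
        f"Context: {context}\n"
        f"User: {request}"
    )

    # Stand-in validation: a real orchestration layer would count tokens
    # with the tokenizer that matches its model, not characters.
    if len(prompt) > max_prompt_chars:
        raise ValueError("prompt exceeds the configured budget")

    return llm(prompt)


# Usage with a stub tool and a stub LLM that reports the size of its prompt.
def docs_tool(query: str) -> List[str]:
    return ["RAG pairs information retrieval with text generation."]


def echo_llm(prompt: str) -> str:
    return f"received {len(prompt.splitlines())} prompt lines"


reply = answer("What is RAG?", [], [docs_tool], echo_llm)
```

Passing the tools and the model in as callables keeps the sketch independent of any particular framework; in practice a library like LangChain or Semantic Kernel would fill those roles.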

Knowledge base retrieval

To query your data, you not only need your data, you need it in a format that’s accessible to your application. For LLM-based applications, this usually involves a vector store, a database that can query based on textual similarity rather than exact matches.

Getting your data from its source format into a vector store requires an ETL (extract, transform, load) pipeline.

- Aggregate source documents. Anything you want available to your application, you need to collect. For my work on Skyflow’s private LLM, this included our product documentation, white papers, and blog posts, but this could easily extend to internal records, planning documents, etc.

- Clean the document content. If there’s anything that shouldn’t be visible to the LLM provider or to the end users of your application, now’s your opportunity to remove it. At this stage in the process, remove personally identifiable information (PII), confidential information, and in-development content. Anything that remains after this step will be visible to all stages of the training and inference processes that follow. Skyflow GPT Privacy Vault can help you de-identify document contents to keep your training data clear of sensitive information.

- Load document contents into memory. Tools like Unstructured, LlamaIndex, and LangChain’s Document loaders make it possible to load all sorts of document types into your applications, particularly unstructured content. Whether it’s a text document, a spreadsheet, a web page, a PDF, a Git repo, or hundreds of other things, there’s likely a loader for it.

Be careful, though, because not all loaders are created equal, and some load more content or context than others. For example, one loader might load content from multiple sheets in a spreadsheet, while another loads content from only the first sheet.

- Split the content into chunks. When you split your content, you break it into small, bite-sized pieces that can fit into an LLM prompt while maintaining meaning. There are multiple ways to split your content.

Both LangChain and LlamaIndex have text splitters available, with defaults that split recursively by whitespace characters and by sentence, but you need to use whatever works best for you and your content. For my project, I found that my documentation, written in Markdown, lost too much context even with LangChain’s Markdown splitter, so I wrote my own splitter that chunked content based on Markdown’s heading and code block markup.

- Create embeddings for text chunks. Embeddings store vectors: numerical representations of one text chunk’s relative position and relationship to other nearby text chunks. While that’s difficult to visualize, creating embeddings is thankfully easy. OpenAI offers an embeddings model, LangChain and LlamaIndex offer a variety of hosted or self-hosted embedding options, or you can do it yourself with an embedding model like SentenceTransformers.

- Store embeddings in a vector store. Once you have your embeddings, you can add them to a vector store such as Pinecone, Weaviate, FAISS, Chroma, or a multitude of other options.
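To make the splitting step more concrete, here’s a minimal sketch of a heading-aware Markdown splitter. It is not the splitter described above, just one plausible way to approach the same idea: start a new chunk at each heading, and treat `#` lines inside fenced code blocks as code rather than headings. The `split_markdown` name and the sample document are made up for illustration.

```python
from typing import List

FENCE = "`" * 3  # Markdown code fence marker, built indirectly to keep this listing tidy


def split_markdown(text: str) -> List[str]:
    """Start a new chunk at each Markdown heading, ignoring '#' lines
    that appear inside fenced code blocks."""
    chunks: List[str] = []
    current: List[str] = []
    in_code = False
    for line in text.splitlines():
        if line.lstrip().startswith(FENCE):
            in_code = not in_code  # toggle on every fence line
        is_heading = not in_code and line.startswith("#")
        if is_heading and current:
            chunks.append("\n".join(current).strip())
            current = []
        current.append(line)
    if current:
        chunks.append("\n".join(current).strip())
    return [c for c in chunks if c]


doc = "\n".join([
    "# Install",
    "Run the installer.",
    "## Configure",
    FENCE + "sh",
    "# this comment is not a heading",
    "export KEY=value",
    FENCE,
    "## Use",
    "Call the API.",
])
chunks = split_markdown(doc)  # three chunks, one per heading
```

Keeping each code block attached to the heading above it is the point of the exercise: a chunk that contains a command without the sentence explaining it tends to retrieve poorly.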

Once the vectors are stored, you can query the vector store and find the content that’s most similar to your query. If you need to update or add to your source documents, most vector stores allow updating the store. This means you can also remove content from your vector store, something you can’t do when you fine-tune a model.
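The store, query, update, and delete operations can be sketched with a toy in-memory store. This is only an illustration under loud assumptions: `embed` counts words instead of calling an embedding model, and `ToyVectorStore` is a hypothetical class, not the API of Pinecone, Weaviate, FAISS, or Chroma.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    def __init__(self) -> None:
        self._items: Dict[str, Tuple[str, Counter]] = {}

    def upsert(self, doc_id: str, text: str) -> None:
        """Add a chunk, or replace the chunk stored under the same ID."""
        self._items[doc_id] = (text, embed(text))

    def delete(self, doc_id: str) -> None:
        """Remove a chunk so it can no longer be retrieved."""
        self._items.pop(doc_id, None)

    def query(self, text: str, k: int = 1) -> List[str]:
        """Return the IDs of the k chunks most similar to the query text."""
        q = embed(text)
        ranked = sorted(self._items.items(),
                        key=lambda item: cosine(q, item[1][1]), reverse=True)
        return [doc_id for doc_id, _ in ranked[:k]]


store = ToyVectorStore()
store.upsert("rag", "RAG combines retrieval with text generation")
store.upsert("etl", "An ETL pipeline extracts, transforms, and loads data")
top = store.query("how does retrieval help generation")
store.delete("rag")
after_delete = store.query("how does retrieval help generation")
```

After the `delete` call, the removed chunk can no longer surface in results, which is the "forgetting" property that a fine-tuned model lacks.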

You can run the pipeline again to recreate the whole knowledge base, and while that would be less expensive than re-training a model, it would still prove time-consuming and inefficient. If you expect to update your source documents regularly, consider creating a document indexing process so you only process the new and recently updated documents in your pipeline.
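One way to approach that kind of indexing process is to record a content hash for each document and compare hashes on the next run. The sketch below assumes documents are available as an ID-to-text mapping; `plan_reindex` and the file names are hypothetical.

```python
import hashlib
from typing import Dict, List


def content_hash(text: str) -> str:
    """Stable fingerprint of a document's contents."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_reindex(documents: Dict[str, str], seen: Dict[str, str]) -> Dict[str, List[str]]:
    """Compare current documents to previously indexed hashes.

    `documents` maps a document ID to its current text; `seen` maps a
    document ID to the hash recorded the last time it was indexed.
    """
    changed = sorted(doc_id for doc_id, text in documents.items()
                     if seen.get(doc_id) != content_hash(text))
    removed = sorted(doc_id for doc_id in seen if doc_id not in documents)
    return {"process": changed, "delete": removed}


seen = {"intro.md": content_hash("Welcome"), "old.md": content_hash("Deprecated")}
docs = {"intro.md": "Welcome", "guide.md": "New guide"}
plan = plan_reindex(docs, seen)
```

Only the documents listed under `process` need to go back through splitting and embedding, and the ones under `delete` can be removed from the vector store.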

API-based retrieval

Retrieval from a vector store isn’t the only kind of retrieval. If you have any data sources that allow programmatic access (customer records databases, an internal ticketing system, etc.), consider making them accessible to your orchestration layer. At run time, your orchestration layer can query your API-based retrieval systems to provide additional context pertinent to your current request.
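As a small illustration, an API-based retrieval tool can be as simple as a function that turns records into short context strings. The in-memory `TICKETS` dictionary below is a hypothetical stand-in for a real ticketing system’s API, and `fetch_open_tickets` is an invented name.

```python
from typing import Dict, List

# Hypothetical stand-in for an internal ticketing system's API.
TICKETS: Dict[str, List[Dict[str, str]]] = {
    "cust-42": [
        {"id": "T-1", "status": "open", "summary": "Cannot log in"},
        {"id": "T-2", "status": "closed", "summary": "Billing question"},
    ]
}


def fetch_open_tickets(customer_id: str) -> List[str]:
    """Return open tickets for a customer as short context strings."""
    tickets = TICKETS.get(customer_id, [])
    return [f"Ticket {t['id']}: {t['summary']}"
            for t in tickets if t["status"] == "open"]


context = fetch_open_tickets("cust-42")
```

Returning plain strings keeps the tool’s output easy to drop into a prompt alongside knowledge base results.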

Prompting with RAG

After your retrieval tools are set up, it’s time for a bit of orchestration-layer magic to knit it all together.

First, we’ll start with the prompt template. A prompt template includes placeholders for all the information you want to pass to the LLM as part of the prompt. The system (or base) prompt tells the LLM how to behave and how to process the user’s request. A simple prompt template might look like this:

System: You are a friendly chatbot assistant that responds in a conversational

manner to users' questions. Respond in 1-2 complete sentences, unless specifically

asked by the user to elaborate on something. Use History and Context to inform your answers.

---

History: {history}

---

Context: {context}

---

User: {request}

When your end user submits a request, you get to start filling in the variables. If the user submitted “What is RAG?”, you could fill in the request variable. You’d likely get the conversation history along with the request, so you could fill in that variable, too:

System: You are a friendly chatbot assistant that responds in a conversational

manner to user's questions. Respond in short but complete answers unless specifically

asked by the user to elaborate on something. Use History and Context to inform your answers.

---

History: [{"role": "assistant", "message": "Hi! How can I help you?"}]

---

Context: {context}

---

User: What is RAG?

Next, call your retrieval tools, whether they’re vector stores or other APIs. Once you have your context (knowledge base results, customer records, etc.), you can update the context variable to inform and ground the LLM during inference.

Note: If you have multiple kinds of data included in your RAG implementation, make sure to label them to help the LLM differentiate between them.

System: You are a friendly chatbot assistant that responds in a conversational

manner to user's questions. Respond in short but complete answers unless specifically

asked by the user to elaborate on something. Use History and Context to inform your answers.

---

History: [{"role": "assistant", "message": "Hi! How can I help you?"}]

---

Context: [Document(page_content='Meta AI researchers introduced a method called [Retrieval Augmented Generation](https://ai.fb.com/weblog/retrieval-augmented-generation-streamlining-the-creation-of-intelligent-natural-language-processing-models/) (RAG) to address such knowledge-intensive tasks. RAG combines an information retrieval component with a text generator model. RAG can be fine-tuned and its internal knowledge can be modified in an efficient manner and without needing retraining of the entire model.', metadata={'source': 'https://www.promptingguide.ai/strategies/rag', 'title': 'Retrieval Augmented Generation (RAG)'})]

---

User: What is RAG?

With your context in place, your prompt template is filled out, but you still have two post-processing tasks to complete:

- Much like you cleaned your source data, you need to clean your prompt as well. Users can input PII in their request even when your data is clean, and you don’t want your users’ information ending up in an LLM operator’s logs or data stores. This also serves as a safeguard in case you didn’t clean your source data. In addition to de-identifying data sets and prompts, Skyflow GPT Privacy Vault can also re-identify sensitive values in LLM responses before you send responses to users.

- Make sure you don’t exceed your LLM’s token limits. If you do, your inference attempts will fail. Before you attempt inference, use token calculators (like tiktoken) to make sure you’re within LLM-specific limits. You may have to reduce the amount of context you include to fit within your token limit.
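Both post-processing tasks can be sketched in a few lines. The assumptions here are significant: `redact_emails` is a naive regex that only masks email addresses and is no substitute for a real de-identification tool, and `rough_token_count` counts whitespace-separated words instead of using a tokenizer such as tiktoken. All function names are hypothetical.

```python
import re
from typing import Callable, List

EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def redact_emails(text: str) -> str:
    """Naive stand-in for de-identification: masks email addresses only."""
    return EMAIL.sub("[EMAIL]", text)


def rough_token_count(text: str) -> int:
    """Crude estimate (whitespace-separated words), not a real tokenizer."""
    return len(text.split())


def fit_context(chunks: List[str], budget: int,
                count: Callable[[str], int] = rough_token_count) -> List[str]:
    """Keep the highest-ranked chunks (earliest in the list) that fit the budget."""
    kept: List[str] = []
    used = 0
    for chunk in chunks:
        cost = count(chunk)
        if used + cost > budget:
            break  # stop at the first chunk that would overflow the budget
        kept.append(chunk)
        used += cost
    return kept


clean = redact_emails("Contact me at jane.doe@example.com about RAG.")
kept = fit_context(["one two three", "four five", "six seven eight nine"], budget=5)
```

Because `fit_context` accepts any `count` callable, a model-specific tokenizer can be swapped in without changing the trimming logic.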

Once your prompt is cleaned and within token limits, you can finally perform inference by sending the prompt to your LLM of choice. When you get the response, it should be informed by the context you provided, and you can send it back to the user.
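The template-filling steps in this section can be sketched with plain string formatting. This is an illustrative assumption rather than how any particular framework does it: `TEMPLATE` and `build_prompt` are hypothetical names, and the bracketed labels are just one way to help the LLM tell different kinds of context apart.

```python
import json
from typing import Dict, List

TEMPLATE = (
    "System: {system}\n---\n"
    "History: {history}\n---\n"
    "Context: {context}\n---\n"
    "User: {request}"
)


def build_prompt(system: str, history: List[Dict[str, str]],
                 context: Dict[str, List[str]], request: str) -> str:
    """Fill the template, labeling each kind of context by its source."""
    labeled = "\n".join(
        f"[{label}] {item}" for label, items in context.items() for item in items
    )
    return TEMPLATE.format(system=system, history=json.dumps(history),
                           context=labeled, request=request)


prompt = build_prompt(
    system="You are a friendly chatbot assistant.",
    history=[{"role": "assistant", "message": "Hi! How can I help you?"}],
    context={
        "Documentation": ["RAG combines retrieval with a text generator."],
        "Customer record": ["Plan: Enterprise"],
    },
    request="What is RAG?",
)
```

The resulting string is what you would clean, check against your token limit, and then send to the LLM.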

Improving performance

Now that you have RAG working in your application, you may want to move to production immediately. Don’t do it yet! Alternatively, you may not be all that thrilled with the results you have. Never fear, here are a handful of things you can do to improve your RAG performance and get production-ready:

- Garbage in, garbage out. The higher the quality of the context you provide, the higher the quality of the result you’ll receive. Clean up your source data to make sure your data pipeline is maintaining adequate content (like capturing spreadsheet column headers), and make sure unnecessary markup is removed so it doesn’t interfere with the LLM’s understanding of the text.

- Tune your splitting strategy. Experiment with different text chunk sizes to make sure your RAG-enabled inference maintains adequate context. Each data set is different. Create differently split vector stores and see which one performs best with your architecture.

- Tune your system prompt. If the LLM isn’t paying enough attention to your context, update your system prompt with expectations of how to process and use the provided information.

- Filter your vector store results. If there are particular kinds of content you do or don’t want to return, filter your vector store results against metadata element values. For example, if you want a process, you might filter against a docType metadata value to make sure your results are from a how-to document.

- Try different embedding models (and fine-tune your own). Different embedding models have different ways of encoding and comparing vectors of your data. Experiment to see which one performs best for your application. You can check out the current best-performing open source embedding models on the MTEB leaderboard.

If you’re adventurous, you can also fine-tune your own embedding models so your LLM becomes more aware of domain-specific terminology, and therefore gives you better query results. And yes, you can absolutely use your cleaned and processed knowledge base dataset to fine-tune your model.
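To make the metadata filtering tip above more concrete, here’s a minimal sketch that filters already-retrieved results in application code. The `Result` shape and `filter_results` helper are hypothetical; many vector stores also accept metadata filters as part of the query itself, which avoids retrieving results you then discard.

```python
from typing import Dict, List, TypedDict


class Result(TypedDict):
    text: str
    score: float
    metadata: Dict[str, str]


def filter_results(results: List[Result], **required: str) -> List[Result]:
    """Keep results whose metadata matches every required key/value pair."""
    return [r for r in results
            if all(r["metadata"].get(k) == v for k, v in required.items())]


results: List[Result] = [
    {"text": "Step 1: create a vault.", "score": 0.91,
     "metadata": {"docType": "how-to"}},
    {"text": "Vaults isolate sensitive data.", "score": 0.88,
     "metadata": {"docType": "concept"}},
]
how_tos = filter_results(results, docType="how-to")
```

Here only the how-to result survives the filter, even though the concept document scored nearly as well on similarity.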

What about fine-tuning?

Before we wrap up, let’s address another LLM-related buzzword: fine-tuning.

Fine-tuning and RAG provide two different ways to optimize LLMs. While RAG and fine-tuning both leverage source data, they each have unique advantages and challenges to consider.

Fine-tuning is a process that continues training a model on additional data to make it perform better on the specific tasks and domains that the data set details. However, a single model can’t be the best at everything, and tasks unrelated to the fine-tuned tasks often degrade in performance with additional training. For example, fine-tuning is how code-specific LLMs are created. Google’s Codey is fine-tuned on a curated dataset of code examples in a variety of languages. This makes Codey perform substantially better at coding tasks than Google’s Duet or PaLM 2 models, but at the expense of general chat performance.

Conversely, RAG augments LLMs with relevant and current information retrieved from external knowledge bases. This dynamic augmentation lets LLMs overcome the limitations of static knowledge and generate responses that are more informed, accurate, and contextually relevant. However, the integration of external knowledge introduces increased computational complexity, latency, and prompt complexity, potentially leading to longer inference times, higher resource utilization, and longer development cycles.

RAG does beat fine-tuning in one specific area: forgetting. When you fine-tune a model, the training data becomes a part of the model itself. You can’t isolate or remove a specific part of the model. LLMs can’t forget. However, vector stores let you add, update, and delete their contents, so you can easily remove inaccurate or out-of-date information whenever you want.

Fine-tuning and RAG together can create LLM-powered applications that are specialized to specific tasks or domains and are capable of using contextual knowledge. Consider GitHub Copilot: It’s a fine-tuned model that specializes in coding and uses your code and coding environment as a knowledge base to provide context to your prompts. As a result, Copilot tends to be adaptable, accurate, and relevant to your code. On the flip side, Copilot’s chat-based responses can anecdotally take longer to process than other models, and Copilot can’t assist with tasks that aren’t coding-related.

Wrap-up

RAG represents a practical solution to enhance the capabilities of LLMs. By integrating real-time, external knowledge into LLM responses, RAG addresses the challenge of static training data, making sure that the information provided remains current and contextually relevant.

Going forward, integrating RAG into various applications holds the potential to significantly improve user experiences and information accuracy. In a world where staying up-to-date is crucial, RAG offers a reliable means to keep LLMs informed and effective. Embracing RAG’s utility enables us to navigate the complexities of modern AI applications with confidence and precision.