This publish goals to offer inspiration and concepts for analysis instructions to junior researchers and people attempting to get into analysis.

Desk of contents:

It may be arduous to search out compelling matters to work on and know what questions are attention-grabbing to ask if you end up simply beginning as a researcher in a brand new area. Machine studying analysis particularly strikes so quick as of late that it’s troublesome to search out a gap.

This publish goals to offer inspiration and concepts for analysis instructions to junior researchers and people attempting to get into analysis. It gathers a set of analysis matters which are attention-grabbing to me, with a give attention to NLP and switch studying. As such, they could clearly not be of curiosity to everybody. If you’re keen on Reinforcement Studying, OpenAI supplies a choice of attention-grabbing RL-focused analysis matters. In case you’d wish to collaborate with others or are keen on a broader vary of matters, take a look on the Synthetic Intelligence Open Community.

Most of those matters usually are not completely thought out but; in lots of circumstances, the overall description is kind of imprecise and subjective and plenty of instructions are attainable. As well as, most of those are not low-hanging fruit, so critical effort is important to provide you with an answer. I’m glad to offer suggestions with regard to any of those, however won’t have time to offer extra detailed steerage until you could have a working proof-of-concept. I’ll replace this publish periodically with new analysis instructions and advances in already listed ones. Be aware that this assortment doesn’t try and evaluation the in depth literature however solely goals to present a glimpse of a subject; consequently, the references will not be complete.

I hope that this assortment will pique your curiosity and function inspiration in your personal analysis agenda.

Activity-independent knowledge augmentation for NLP

Knowledge augmentation goals to create further coaching knowledge by producing variations of current coaching examples via transformations, which might mirror these encountered in the true world. In Laptop Imaginative and prescient (CV), frequent augmentation strategies are mirroring, random cropping, shearing, and many others. Knowledge augmentation is tremendous helpful in CV. As an illustration, it has been used to nice impact in AlexNet (Krizhevsky et al., 2012) to fight overfitting and in most state-of-the-art fashions since. As well as, knowledge augmentation makes intuitive sense because it makes the coaching knowledge extra numerous and may thus enhance a mannequin’s generalization capacity.

Nonetheless, in NLP, knowledge augmentation is just not extensively used. In my thoughts, that is for 2 causes:

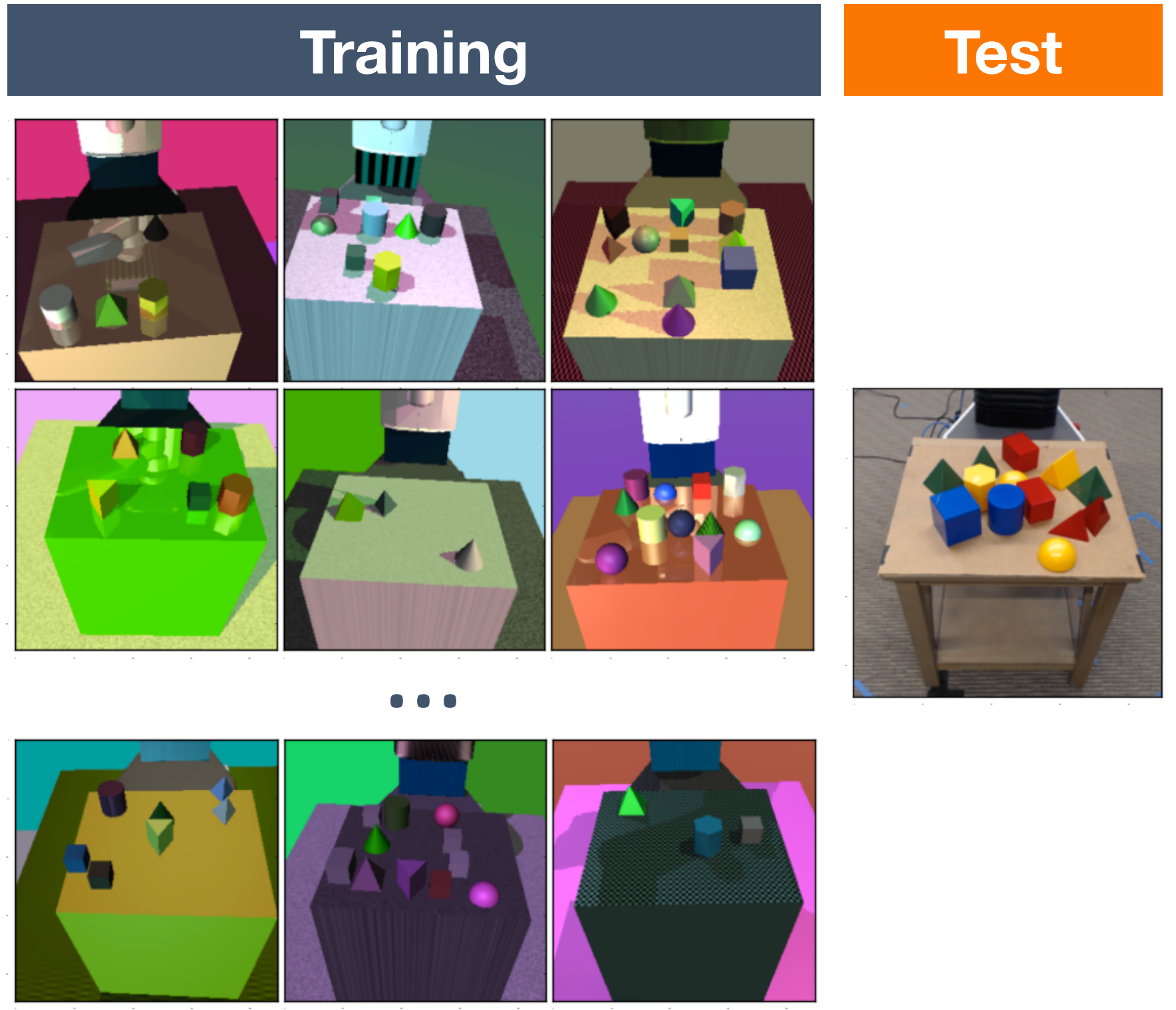

- Knowledge in NLP is discrete. This prevents us from making use of easy transformations on to the enter knowledge. Most just lately proposed augmentation strategies in CV give attention to such transformations, e.g. area randomization (Tobin et al., 2017) .

- Small perturbations could change the that means. Deleting a negation could change a sentence’s sentiment, whereas modifying a phrase in a paragraph may inadvertently change the reply to a query about that paragraph. This isn’t the case in CV the place perturbing particular person pixels doesn’t change whether or not a picture is a cat or canine and even stark adjustments corresponding to interpolation of various photos might be helpful (Zhang et al., 2017) .

Present approaches that I’m conscious of are both rule-based (Li et al., 2017) or task-specific, e.g. for parsing (Wang and Eisner, 2016) or zero-pronoun decision (Liu et al., 2017) . Xie et al. (2017) substitute phrases with samples from completely different distributions for language modelling and Machine Translation. Latest work focuses on creating adversarial examples both by changing phrases or characters (Samanta and Mehta, 2017; Ebrahimi et al., 2017) , concatenation (Jia and Liang, 2017) , or including adversarial perturbations (Yasunaga et al., 2017) . An adversarial setup can also be utilized by Li et al. (2017) who prepare a system to provide sequences which are indistinguishable from human-generated dialogue utterances.

Again-translation (Sennrich et al., 2015; Sennrich et al., 2016) is a standard knowledge augmentation methodology in Machine Translation (MT) that permits us to include monolingual coaching knowledge. As an illustration, when coaching a EN(rightarrow)FR system, monolingual French textual content is translated to English utilizing an FR(rightarrow)EN system; the artificial parallel knowledge can then be used for coaching. Again-translation may also be used for paraphrasing (Mallinson et al., 2017) . Paraphrasing has been used for knowledge augmentation for QA (Dong et al., 2017) , however I’m not conscious of its use for different duties.

One other methodology that’s near paraphrasing is producing sentences from a steady house utilizing a variational autoencoder (Bowman et al., 2016; Guu et al., 2017) . If the representations are disentangled as in (Hu et al., 2017) , then we’re additionally not too removed from type switch (Shen et al., 2017) .

There are just a few analysis instructions that may be attention-grabbing to pursue:

- Analysis examine: Consider a variety of current knowledge augmentation strategies in addition to strategies that haven’t been extensively used for augmentation corresponding to paraphrasing and elegance switch on a various vary of duties together with textual content classification and sequence labelling. Establish what forms of knowledge augmentation are strong throughout activity and that are task-specific. This could possibly be packaged as a software program library to make future benchmarking simpler (assume CleverHans for NLP).

- Knowledge augmentation with type switch: Examine if type switch can be utilized to switch numerous attributes of coaching examples for extra strong studying.

- Study the augmentation: Much like Dong et al. (2017) we may be taught both to paraphrase or to generate transformations for a selected activity.

- Study a phrase embedding house for knowledge augmentation: A typical phrase embedding house clusters synonyms and antonyms collectively; utilizing nearest neighbours on this house for substitute is thus infeasible. Impressed by latest work (Mrkšić et al., 2017) , we may specialize the phrase embedding house to make it extra appropriate for knowledge augmentation.

- Adversarial knowledge augmentation: Associated to latest work in interpretability (Ribeiro et al., 2016) , we may change probably the most salient phrases in an instance, i.e. people who a mannequin will depend on for a prediction. This nonetheless requires a semantics-preserving substitute methodology, nevertheless.

Few-shot studying for NLP

Zero-shot, one-shot and few-shot studying are one of the attention-grabbing latest analysis instructions IMO. Following the important thing perception from Vinyals et al. (2016) {that a} few-shot studying mannequin ought to be explicitly educated to carry out few-shot studying, we have now seen a number of latest advances (Ravi and Larochelle, 2017; Snell et al., 2017) .

Studying from few labeled samples is among the hardest issues IMO and one of many core capabilities that separates the present era of ML fashions from extra typically relevant techniques. Zero-shot studying has solely been investigated within the context of studying phrase embeddings for unknown phrases AFAIK. Dataless classification (Music and Roth, 2014; Music et al., 2016) is an attention-grabbing associated route that embeds labels and paperwork in a joint house, however requires interpretable labels with good descriptions.

Potential analysis instructions are the next:

- Standardized benchmarks: Create standardized benchmarks for few-shot studying for NLP. Vinyals et al. (2016) introduce a one-shot language modelling activity for the Penn Treebank. The duty, whereas helpful, is dwarfed by the in depth analysis on CV benchmarks and has not seen a lot use AFAIK. A number of-shot studying benchmark for NLP ought to comprise numerous lessons and supply a standardized break up for reproducibility. Good candidate duties can be subject classification or fine-grained entity recognition.

- Analysis examine: After creating such a benchmark, the following step can be to guage how properly current few-shot studying fashions from CV carry out for NLP.

- Novel strategies for NLP: Given a dataset for benchmarking and an empirical analysis examine, we may then begin creating novel strategies that may carry out few-shot studying for NLP.

Switch studying for NLP

Switch studying has had a big influence on laptop imaginative and prescient (CV) and has tremendously lowered the entry threshold for folks wanting to use CV algorithms to their very own issues. CV practicioners are not required to carry out in depth feature-engineering for each new activity, however can merely fine-tune a mannequin pretrained on a big dataset with a small variety of examples.

In NLP, nevertheless, we have now up to now solely been pretraining the primary layer of our fashions by way of pretrained embeddings. Latest approaches (Peters et al., 2017, 2018) add pretrained language mannequin embedddings, however these nonetheless require customized architectures for each activity. For my part, with a view to unlock the true potential of switch studying for NLP, we have to pretrain your entire mannequin and fine-tune it on the goal activity, akin to fine-tuning ImageNet fashions. Language modelling, as an example, is a superb activity for pretraining and could possibly be to NLP what ImageNet classification is to CV (Howard and Ruder, 2018) .

Listed below are some potential analysis instructions on this context:

- Establish helpful pretraining duties: The selection of the pretraining activity is essential as even fine-tuning a mannequin on a associated activity may solely present restricted success (Mou et al., 2016) . Different duties corresponding to these explored in latest work on studying general-purpose sentence embeddings (Conneau et al., 2017; Subramanian et al., 2018; Nie et al., 2017) may be complementary to language mannequin pretraining or appropriate for different goal duties.

- Superb-tuning of complicated architectures: Pretraining is most helpful when a mannequin might be utilized to many goal duties. Nonetheless, it’s nonetheless unclear tips on how to pretrain extra complicated architectures, corresponding to these used for pairwise classification duties (Augenstein et al., 2018) or reasoning duties corresponding to QA or studying comprehension.

Multi-task studying

Multi-task studying (MTL) has turn into extra generally utilized in NLP. See right here for a basic overview of multi-task studying and right here for MTL goals for NLP. Nonetheless, there may be nonetheless a lot we do not perceive about multi-task studying basically.

The primary questions relating to MTL give rise to many attention-grabbing analysis instructions:

- Establish efficient auxiliary duties: One of many foremost questions is which duties are helpful for multi-task studying. Label entropy has been proven to be a predictor of MTL success (Alonso and Plank, 2017) , however this doesn’t inform the entire story. In latest work (Augenstein et al., 2018) , we have now discovered that auxiliary duties with extra knowledge and extra fine-grained labels are extra helpful. It might be helpful if future MTL papers wouldn’t solely suggest a brand new mannequin or auxiliary activity, but in addition attempt to perceive why a sure auxiliary activity may be higher than one other carefully associated one.

- Options to arduous parameter sharing: Laborious parameter sharing continues to be the default modus operandi for MTL, however locations a powerful constraint on the mannequin to compress data pertaining to completely different duties with the identical parameters, which frequently makes studying troublesome. We’d like higher methods of doing MTL which are straightforward to make use of and work reliably throughout many duties. Just lately proposed strategies corresponding to cross-stitch items (Misra et al., 2017; Ruder et al., 2017) and a label embedding layer (Augenstein et al., 2018) are promising steps on this route.

- Synthetic auxiliary duties: One of the best auxiliary duties are these, that are tailor-made to the goal activity and don’t require any further knowledge. I’ve outlined an inventory of potential synthetic auxiliary duties right here. Nonetheless, it’s not clear which of those work reliably throughout various numerous duties or what variations or task-specific modifications are helpful.

Cross-lingual studying

Creating fashions that carry out properly throughout languages and that may switch data from resource-rich to resource-poor languages is among the most essential analysis instructions IMO. There was a lot progress in studying cross-lingual representations that venture completely different languages right into a shared embedding house. Confer with Ruder et al. (2017) for a survey.

Cross-lingual representations are generally evaluated both intrinsically on similarity benchmarks or extrinsically on downstream duties, corresponding to textual content classification. Whereas latest strategies have superior the state-of-the-art for a lot of of those settings, we wouldn’t have an excellent understanding of the duties or languages for which these strategies fail and tips on how to mitigate these failures in a task-independent method, e.g. by injecting task-specific constraints (Mrkšić et al., 2017).

Activity-independent structure enhancements

Novel architectures that outperform the present state-of-the-art and are tailor-made to particular duties are repeatedly launched, superseding the earlier structure. I’ve outlined greatest practices for various NLP duties earlier than, however with out evaluating such architectures on completely different duties, it’s typically arduous to achieve insights from specialised architectures and inform which elements would even be helpful in different settings.

A very promising latest mannequin is the Transformer (Vaswani et al., 2017) . Whereas the whole mannequin may not be acceptable for each activity, elements corresponding to multi-head consideration or position-based encoding could possibly be constructing blocks which are typically helpful for a lot of NLP duties.

Conclusion

I hope you’ve got discovered this assortment of analysis instructions helpful. In case you have solutions on tips on how to sort out a few of these issues or concepts for associated analysis matters, be at liberty to remark under.

Cowl picture is from Tobin et al. (2017).