Write robust unit tests with Python pytest

Update: This article is part of a series. Check out other “in 10 Minutes” topics in the series!

In my previous article on unit tests, I elaborated on the purpose of unit tests, the unit test ecosystem, and best practices, and demonstrated basic and advanced examples with Python's built-in unittest package. There is more than one way (and more than one Python package) to perform unit tests, and this article will demonstrate how to implement unit tests with the Python pytest package. This article will follow the flow of the previous article closely, so you can compare the various components of unittest vs. pytest.

While the unittest package is object-oriented, since test cases are written in classes, the pytest package is functional, resulting in fewer lines of code. Personally, I prefer unittest as I find the code more readable. That being said, both packages, or rather frameworks, are equally powerful, and choosing between them is a matter of preference.

Unlike unittest, pytest is not a built-in Python package and requires installation. This can simply be done with `pip install pytest` in the Terminal.

Do note that the best practices for unittest also apply to pytest, such that

- All unit tests should be written in a `tests/` directory
- File names should strictly start with `test_`
- Function names should strictly start with `test`

The naming conventions must be followed so that pytest can discover the unit tests when it is run.

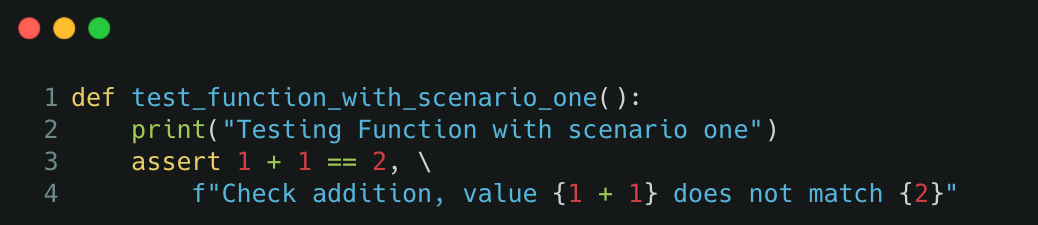

As pytest follows functional programming, the unit tests are written as functions, and the assertions are made within each function. This makes pytest easy to pick up, and keeps the unit test code short and sweet!
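A minimal sketch of this structure is shown below. The function `add` is a hypothetical stand-in for real code. In a project it would live in something like `src/sample_file.py`, with the tests in `tests/test_sample.py`. Both are kept in one block here so the example runs on its own.

```python
# Function under test and its tests, kept together for a self-contained sketch


def add(a, b):
    """Hypothetical function under test."""
    return a + b


def test_add_integers():
    # A plain assert is all pytest needs; on failure it reports both sides
    assert add(2, 3) == 5


def test_add_strings():
    # The same function exercised with a different input type
    assert add("py", "test") == "pytest"
```

Running `pytest` on such a file collects both functions because their names start with `test`.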

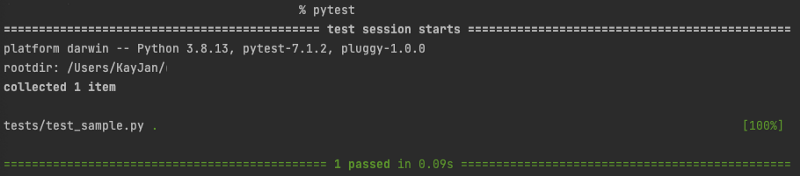

Unit tests can be run by typing `pytest` into the command line, which will discover all the unit tests as long as they follow the naming convention. The output reports the total number of tests run, the number of tests passed, skipped, and failed, the total time taken to run the tests, and the failure stack trace, if any.

To run unit tests in a specific directory, file, or function, the commands are as follows,

```shell
$ pytest tests/
$ pytest tests/test_sample.py
$ pytest tests/test_sample.py::test_function_one
```

There are more customizations that can be appended to the command, such as

- `-x`: exit instantly or fail fast, to stop all unit tests upon encountering the first test failure
- `-k "keyword"`: specify the keyword(s) to selectively run tests; this can match a file name or function name, and can contain `and`, `or`, and `not` expressions
- `--ff`: failed first, to run all tests starting with those that failed in the last run (preferred)
- `--lf`: last failed, to run only the tests that failed in the last run (drawback: may not discover failures in tests that previously passed)
- `--sw`: step-wise, to stop at the first test failure and continue from there in the next run (drawback: may not discover failures in tests that previously passed)
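The same flags can also be passed from Python through `pytest.main`, which takes the command-line arguments as a list and returns an exit code. The sketch below writes a throwaway test file into a temporary directory. The file and test names are made up for illustration. It then runs the file twice: once with `-k` to select a single test, and once with `-x` to stop at the first failure.

```python
import pathlib
import tempfile
import textwrap
import uuid

import pytest

# Two throwaway tests: test_one passes, test_two fails on purpose
DEMO_TESTS = textwrap.dedent(
    """
    def test_one():
        assert 1 == 1


    def test_two():
        assert 1 == 2
    """
)


def run_demo():
    """Run the demo file twice with different flags and return both exit codes."""
    with tempfile.TemporaryDirectory() as tmp_dir:
        # A unique file name avoids clashing with a same-named module
        # imported by an earlier pytest.main call in this process
        test_file = pathlib.Path(tmp_dir) / f"test_demo_{uuid.uuid4().hex}.py"
        test_file.write_text(DEMO_TESTS)

        # Equivalent to: pytest -q -k "test_one" <file>
        # Only test_one is selected; test_two is deselected, so the run passes
        selected = pytest.main(["-q", "-k", "test_one", str(test_file)])

        # Equivalent to: pytest -q -x <file>
        # test_two fails, and -x stops the session at that first failure
        fail_fast = pytest.main(["-q", "-x", str(test_file)])

    return selected, fail_fast
```

`pytest.main` returns a `pytest.ExitCode`. In this sketch, `selected` should be `ExitCode.OK` and `fail_fast` should be `ExitCode.TESTS_FAILED`.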

Bonus tip: If the unit tests are taking too long to run, you can run them in parallel instead of sequentially! Install the pytest-xdist Python package and add this to the command when running unit tests,

- `-n <number of workers>`: number of workers to run the tests in parallel (`-n auto` lets pytest-xdist choose based on the available CPUs)

Debugging error: You might face a ModuleNotFoundError when your test scripts import from a folder in the base directory or another source directory. For instance, your function resides in `src/sample_file.py`, and your test scripts in the `tests/` directory perform the import `from src.sample_file import sample_function`.

To overcome this, create a configuration file `pytest.ini` in the base directory so that imports are resolved relative to the base directory. A sample of the content to add to the configuration file is as follows,

```ini
[pytest]
pythonpath = .
```

This configuration file can be extended to more uses, which are elaborated on in later sections. For now, this configuration lets you bypass the ModuleNotFoundError (note that the `pythonpath` option requires pytest 7.0 or later).

After understanding the basic structure of a unit test and how to run them, it's time to dig deeper!

In reality, unit tests might not be as straightforward as calling the function and testing the expected output given some input. There can be advanced logic, such as accounting for floating point precision, testing for expected errors, grouping tests together, conditionally skipping unit tests, and mocking data. All of this can be done with context managers and built-in decorators from the pytest package (mostly already coded out for you!).

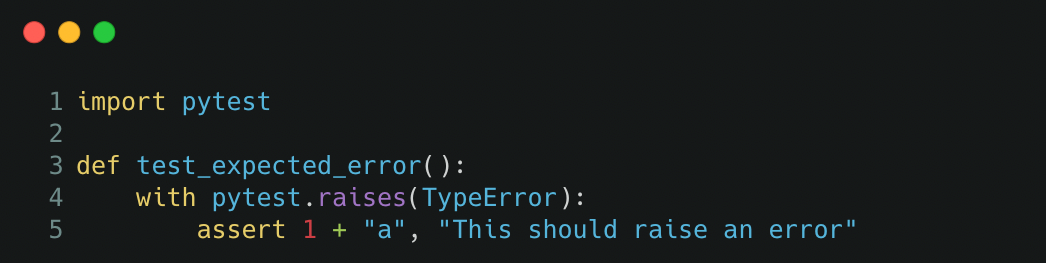

Besides testing for the expected output, you can also test for expected errors to ensure that functions throw errors when used in a manner they are not designed for. This can be done with the `pytest.raises` context manager, using the `with` keyword.

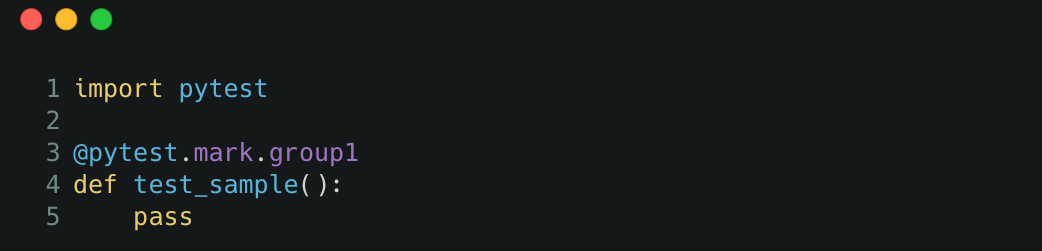
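A hedged sketch of testing for an expected error follows, with `divide` as a hypothetical function that rejects a zero denominator. The optional `match` argument is applied by pytest as a regular-expression search on the exception message. This narrows the check to one specific error.

```python
import pytest


def divide(numerator, denominator):
    """Hypothetical function that rejects a zero denominator."""
    if denominator == 0:
        raise ValueError("denominator must not be zero")
    return numerator / denominator


def test_divide_by_zero_raises():
    # The test passes only if the with-block raises ValueError
    with pytest.raises(ValueError):
        divide(1, 0)


def test_divide_by_zero_message():
    # match= is searched (as a regex) in the string form of the exception
    with pytest.raises(ValueError, match="must not be zero") as exc_info:
        divide(1, 0)
    # exc_info wraps the caught exception for further checks
    assert exc_info.type is ValueError
```

If `divide` returned normally or raised a different exception type, `pytest.raises` would fail the test.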

Unit tests can be marked using the `pytest.mark` decorator, which allows for various extended functionality such as,

- Grouping the unit tests: Multiple unit tests can then be run as a group

- Marked to fail: To indicate that the unit test is expected to fail

- Marked to skip/conditional skipping: The unit test is skipped by default, or skipped if certain conditions are met

- Marked to insert parameters: Test various inputs to a unit test

Decorators can be stacked to provide multiple extended functionalities. For instance, a test can be marked as part of a group and marked to be skipped!

The various functionalities elaborated above are implemented as follows,

a) Grouping the unit tests

Instead of running the unit tests within a folder or file, or by keyword search, unit tests can be grouped and called with `pytest -m <group-name>`. The output of the test run will show the number of tests run and the number of tests deselected because they are not in the group.

This can be implemented with the `pytest.mark.<group-name>` decorator.
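A sketch of two tests where only one belongs to the group is shown below. The group name `group1` matches the group registered in `pytest.ini` in this section, and the test bodies are placeholders. Running `pytest -m group1` would run `test_in_group1` and report `test_not_in_group` as deselected. The `-m` option also accepts boolean expressions, such as `pytest -m "not group1"`.

```python
import pytest


@pytest.mark.group1
def test_in_group1():
    # Selected by: pytest -m group1
    assert "py" + "test" == "pytest"


def test_not_in_group():
    # Deselected by: pytest -m group1 (it carries no group1 marker)
    assert len("pytest") == 6
```

A group marker can be stacked with other markers, such as `@pytest.mark.skip`, by placing the decorators one above the other.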

To register the group, we need to define it in the configuration file. Otherwise, pytest emits a `PytestUnknownMarkWarning`, or an error when `--strict-markers` is used. The following content can be added to the existing contents of the `pytest.ini` file,

```ini
markers =
    group1: description of group 1
```

b) Marked to fail

Tests that are expected to fail can be marked with the `pytest.mark.xfail` decorator. The output will show `xfailed` if the unit test fails, instead of reporting a failure as it normally would. It will show `xpassed` if the unit test unexpectedly passes. An example is as follows,

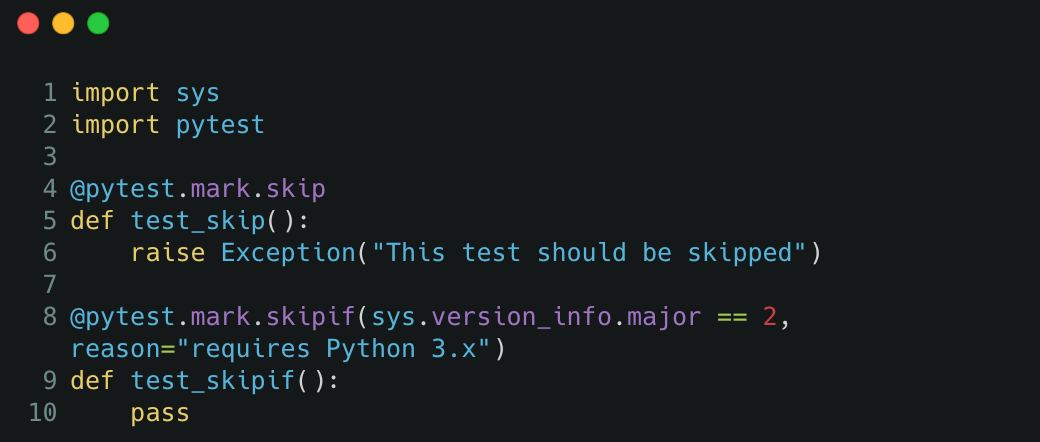
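A minimal sketch follows, with `is_even` as a hypothetical function containing a deliberately planted bug (it treats 0 as odd). The first test documents the known bug and should be reported as `xfailed`. The second test passes despite its marker and should be reported as `xpassed`.

```python
import pytest


def is_even(number):
    """Hypothetical function with a known bug: it misclassifies 0."""
    return number > 0 and number % 2 == 0


@pytest.mark.xfail(reason="known bug: 0 is treated as odd")
def test_zero_is_even():
    # Fails today, so pytest reports it as xfailed instead of failed
    assert is_even(0)


@pytest.mark.xfail(reason="marked as failing, but actually passes")
def test_two_is_even():
    # Passes, so pytest reports it as xpassed (xfail is non-strict by default)
    assert is_even(2)
```

Passing `strict=True` to `pytest.mark.xfail` makes an unexpected pass count as a failure. This is useful for noticing when a known bug has been fixed.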

c) Marked to skip/conditional skipping

Marking a unit test to be skipped, or skipped if certain conditions are met, is similar to the previous section, except that the decorators are `pytest.mark.skip` and `pytest.mark.skipif` respectively. Skipping a unit test is useful if the test no longer works as expected with a newer Python version or newer Python package version.

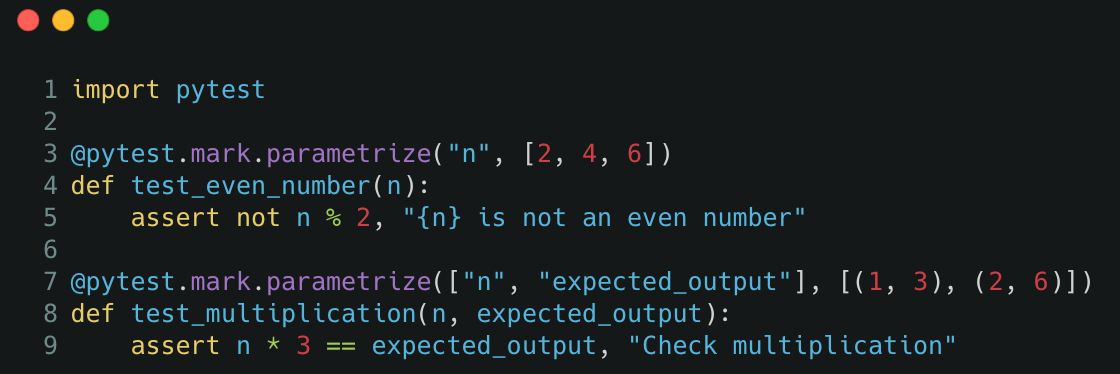
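A hedged sketch of both decorators is shown below. The skip reason is made up for illustration. The `skipif` condition uses `math.prod`, which was added in Python 3.8, to show a version-dependent skip. The condition is evaluated when the module is imported. `reason` is what pytest prints for the skipped test when run with `-rs`.

```python
import math
import sys

import pytest


@pytest.mark.skip(reason="hypothetical: disabled while the API is being reworked")
def test_always_skipped():
    assert False  # never executed when collected by pytest


@pytest.mark.skipif(sys.version_info < (3, 8), reason="math.prod was added in Python 3.8")
def test_math_prod():
    # Skipped on interpreters older than 3.8, run everywhere else
    assert math.prod([2, 3, 4]) == 24
```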

d) Marked to insert parameters

In some cases, we want to test the function against several inputs, for instance, to test the codebase against normal cases and edge cases. Instead of writing multiple assertions within one unit test or writing multiple unit tests, we can test multiple inputs in an automated fashion with the `pytest.mark.parametrize` decorator.
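A minimal sketch follows, with `square` as a hypothetical function under test. The decorator takes the argument names as a comma-separated string and a list of tuples, one tuple per test case.

```python
import pytest


def square(number):
    """Hypothetical function under test."""
    return number * number


# Each tuple becomes its own test case: normal inputs plus edge cases
@pytest.mark.parametrize(
    "number, expected",
    [
        (2, 4),  # normal case
        (-3, 9),  # negative input
        (0, 0),  # edge case: zero
        (0.5, 0.25),  # fractional input
    ],
)
def test_square(number, expected):
    assert square(number) == expected
```

pytest reports these as four separate tests, with IDs such as `test_square[2-4]`. A failing edge case is therefore pinpointed without hiding the results of the others.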

Mocking is used in unit tests to replace the return value of a function. It is useful for replacing operations that should not be run in a testing environment. For instance, it can replace operations that connect to a database and load data when the testing environment does not have the same data access.

In pytest, mocking can replace the return value of a function that is called within another function. This is useful for testing the desired function while replacing the return value of a nested function that it calls.

As such, mocking reduces the dependencies of the unit test, as we are testing the desired function itself and not its dependencies on other functions.

For instance, if the desired function loads data by connecting to a database, we can mock the function that loads the data so that it does not connect to a database, and instead supplies alternative data to be used.

To implement mocking, install the pytest-mock Python package. In this example, we define the desired function and the function to be mocked in the `src/sample_file.py` file.

```python
# src/sample_file.py


def load_data():
    # This should be mocked as it is a dependency
    return 1


def dummy_function():
    # This is the desired function we are testing
    return load_data()
```

Within the test script, we specify the function to be mocked by its full dotted path, and define the value that should be returned instead,

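```python
# tests/test_sample.py
from src.sample_file import dummy_function


def test_mocking_function(mocker):
    # Patch load_data where dummy_function looks it up, returning 2 instead of 1
    mocker.patch("src.sample_file.load_data", return_value=2)
    assert dummy_function() == 2, "Value should be mocked"
```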

Mocking can patch any function within the codebase, as long as you specify the full dotted path. The path should point to where the function is looked up, which here is `src.sample_file.load_data`. Note that you should not mock the desired function you are testing, since that would defeat the test. You can, however, mock any dependencies, or even nested dependencies, that the desired function relies on.
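pytest-mock's `mocker.patch` is a thin wrapper around the standard library's `unittest.mock.patch`, so the same idea can be sketched without the extra package. In this single-file version, `load_data` and `dummy_function` mirror the example above. They are patched on the current module because there is no `src.sample_file` to point at here. The patch is undone when the `with` block exits; `mocker` otherwise does this for you at the end of each test.

```python
import sys
from unittest import mock


def load_data():
    # Stand-in for an expensive dependency (e.g. a database read)
    return 1


def dummy_function():
    # The function under test; it looks up load_data at call time
    return load_data()


def test_mocking_function_stdlib():
    current_module = sys.modules[__name__]
    # Replace load_data on this module for the duration of the with block
    with mock.patch.object(current_module, "load_data", return_value=2) as mocked:
        assert dummy_function() == 2, "Value should be mocked"
        # The replacement is a MagicMock, so its calls can be inspected too
        mocked.assert_called_once_with()
    # Outside the block, the original function is restored
    assert dummy_function() == 1
```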

a) Pytest Configuration

As discussed in earlier sections, the configuration file `pytest.ini` can be defined in the base directory to bypass ModuleNotFoundError and to define unit test groups. It should look something like this by now,

```ini
[pytest]
pythonpath = .
markers =
    group1: description of group 1
```

Configuration files allow users to specify the default mode for running unit tests, for instance, `pytest --ff` for the failed-first setting, or `pytest -ra -q` for a condensed output. The default mode can be set by adding the line `addopts = -ra -q` to the configuration file.

To suppress warnings, we can also add a `filterwarnings` option to the configuration file, such as `filterwarnings = ignore::DeprecationWarning` (or `ignore::ImportWarning`).

More items can be added to the configuration file, but these are the more common ones. The official pytest documentation covers configuration files in full.

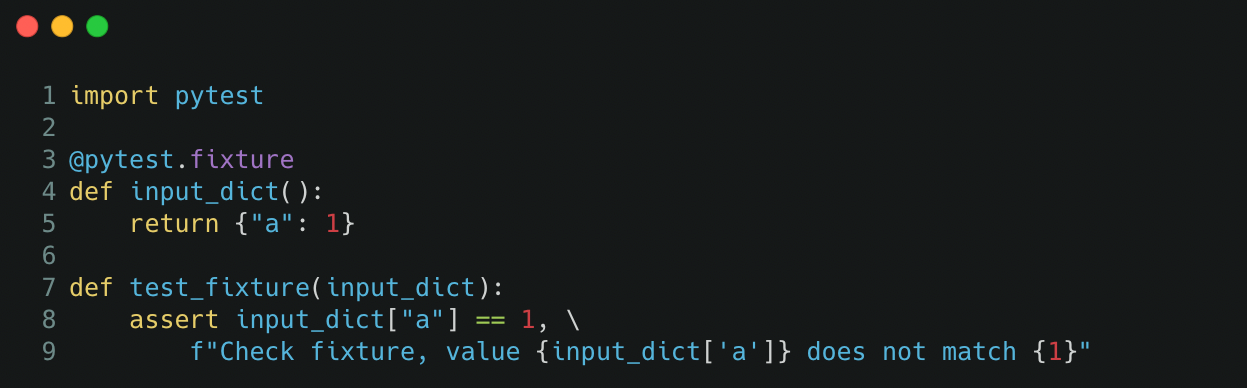

b) Reusing Variables (with Fixtures)

Fixtures can be used to standardize input across multiple unit tests. For instance, a fixture can load a file or create an object to be used as input to multiple tests, instead of repeating the same lines of code in every test.

Fixtures can be defined within the same Python file or within the file `tests/conftest.py`, which pytest picks up automatically.
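A minimal sketch follows, using `sample_record` as a hypothetical fixture name with made-up data. A test requests the fixture simply by naming it as an argument. If the fixture were moved into `tests/conftest.py`, every test file under `tests/` could request it the same way without importing it.

```python
import pytest


@pytest.fixture
def sample_record():
    # Hypothetical shared input, built once per test that requests it
    return {"name": "alice", "score": 90}


def test_record_name(sample_record):
    # pytest injects the fixture by matching the argument name
    assert sample_record["name"] == "alice"


def test_record_update(sample_record):
    # Each test gets a fresh dict, so this change does not leak into other tests
    sample_record["score"] = 95
    assert sample_record["score"] == 95
```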

c) Accounting for Floating Point Precision

When asserting equality of numerical values, there may be discrepancies in the trailing decimal places due to the limitations of floating point arithmetic.

To counter this, we can compare numerical values for equality within some tolerance. Using `assert output_value == pytest.approx(expected_value)` relaxes the comparison to a relative tolerance of 1e-6 by default, with an absolute tolerance of 1e-12 as a floor for values near zero.
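A short sketch of `pytest.approx` in action follows. It covers the classic `0.1 + 0.2` case, custom tolerances through the `rel` and `abs` keyword arguments, and element-wise comparison of lists. The numbers are arbitrary illustrations.

```python
import pytest


def test_float_sum():
    # 0.1 + 0.2 is not exactly 0.3 in binary floating point
    assert 0.1 + 0.2 != 0.3
    assert 0.1 + 0.2 == pytest.approx(0.3)


def test_custom_tolerance():
    # rel= widens the relative tolerance: 10.05 is within 1% of 10.0
    assert 10.05 == pytest.approx(10.0, rel=1e-2)
    # abs= matters near zero, where a relative tolerance is almost nothing
    assert 1e-9 == pytest.approx(0.0, abs=1e-8)


def test_sequences():
    # approx also compares lists element-wise
    assert [0.1 + 0.2, 0.2 + 0.4] == pytest.approx([0.3, 0.6])
```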

Hope you have learned more about implementing unit tests with pytest and some cool tricks you can do with unit tests. There are many more features on offer, such as using monkeypatch for mocking data, defining the scope of fixtures, using pytest in conjunction with the unittest Python package, and so much more. There could be a sequel to this if there is demand for it.

Thanks for reading! If you liked this article, feel free to share it.