It finally arrived!

The trajectory of deep learning support for the macOS community has been impressive so far.

Starting with the M1 devices, Apple introduced a built-in graphics processor that enables GPU acceleration. Hence, M1 MacBooks became suitable for deep learning tasks. No more Colab for macOS data scientists!

Next on the agenda was compatibility with the popular ML frameworks. TensorFlow was the first framework to become available on Apple Silicon devices. Using the Metal plugin, TensorFlow can utilize the MacBook's GPU.

Unfortunately, PyTorch was left behind. You could run PyTorch natively on M1 macOS, but the GPU was inaccessible.

Until now!

You can access all the articles in the "Setup Apple M-Silicon for Deep Learning" series from here, including the guide on how to install TensorFlow on Mac M1.

PyTorch, like TensorFlow, uses the Metal framework, Apple's graphics and compute API. The PyTorch team worked together with Apple's Metal engineering team to enable high-performance training on the GPU.

Internally, PyTorch uses Apple's Metal Performance Shaders (MPS) as a backend.

The MPS backend maps machine learning computational graphs and primitives onto the MPS Graph framework and the tuned kernels provided by MPS.

Note 1: Don't confuse Apple's MPS (Metal Performance Shaders) with Nvidia's MPS (Multi-Process Service)!

Note 2: M1 GPU support is available only on macOS Monterey 12.3 and later.
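The version requirement in Note 2 can be checked from Python before installing anything. The following is a minimal sketch that uses only the standard library. The helper name `meets_min_macos` is a hypothetical choice for illustration and is not part of PyTorch.

```python
import platform


def meets_min_macos(version: str, minimum=(12, 3)) -> bool:
    """Return True if a macOS version string such as '12.3.1' is >= minimum.

    Hypothetical helper for illustration. An empty string, which
    platform.mac_ver() returns on non-macOS systems, yields False.
    """
    if not version:
        return False
    parts = [int(p) for p in version.split(".") if p.isdigit()]
    # Pad short versions so that "13" compares as (13, 0)
    while len(parts) < len(minimum):
        parts.append(0)
    return tuple(parts[: len(minimum)]) >= tuple(minimum)


# platform.mac_ver()[0] is the macOS release string, or "" on other systems
current = platform.mac_ver()[0]
print(f"macOS version: {current or 'not macOS'}")
print("Meets the 12.3+ requirement:", meets_min_macos(current))
```

If the second line prints False on a Mac, updating macOS is the first thing to try before moving on to the installation steps.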

The installation process is simple. We will break it into the following steps:

Step 1: Install Xcode

Xcode may already be installed on your MacBook. If it is not, you can download it from the App Store. Additionally, install the Command Line Tools:

$ xcode-select --install

Step 2: Set up a new conda environment

This is straightforward. We create a new environment called torch-gpu:

$ conda create -n torch-gpu python=3.8

$ conda activate torch-gpu

The official installation guide does not specify which Python version is compatible. However, I have verified that Python versions 3.8 and 3.9 work properly.

Step 2: Install PyTorch packages

There are two ways to do that: i) using the download helper on the PyTorch web page, or ii) using the command line.

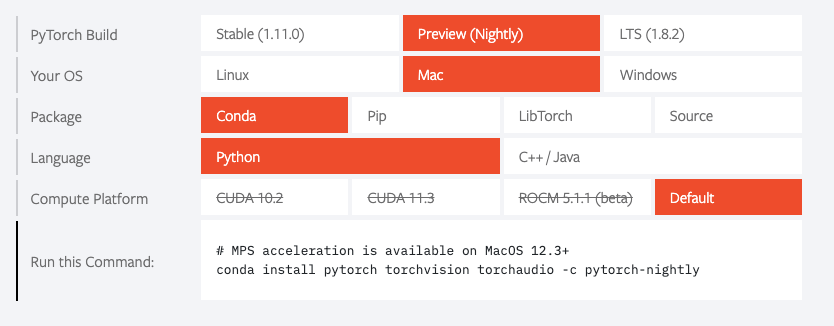

For those who select the primary technique, go to the PyTorch web page and choose the next:

You can also directly run the conda install command that is displayed in the picture:

$ conda install pytorch torchvision torchaudio -c pytorch-nightly

And that's it!

Pay attention to the following:

- Check the download helper first because the installation command may change in the future.

- Expect M1 GPU support to be included in the next stable release. For the time being, it is found only in the Nightly release.

- More things will come in the future, so don't forget to check the release list every now and then for new updates!

Step 3: Sanity Check

Next, let's make sure everything went as expected. That is:

- PyTorch was installed successfully.

- PyTorch can use the GPU successfully.

To make things easy, install Jupyter Notebook and/or JupyterLab:

$ conda install -c conda-forge jupyter jupyterlab

Now, we will check whether PyTorch can find the Metal Performance Shaders plugin. Open a Jupyter notebook and run the following:

import torch
import math

# This checks that the current macOS version is at least 12.3
print(torch.backends.mps.is_available())

# This checks that the current PyTorch installation was built with MPS activated
print(torch.backends.mps.is_built())

If both commands return True, then PyTorch has access to the GPU!
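The two checks can be folded into a small device-selection helper so that the same notebook also runs on machines without MPS. This is a minimal sketch: the function names `choose_device_name` and `detect_device_name` are hypothetical, and the fallback order (MPS, then CUDA, then CPU) is an assumption rather than anything PyTorch prescribes.

```python
def choose_device_name(mps_ok: bool, cuda_ok: bool) -> str:
    """Pick a device string: prefer Apple's MPS, then CUDA, then the CPU."""
    if mps_ok:
        return "mps"
    if cuda_ok:
        return "cuda"
    return "cpu"


def detect_device_name() -> str:
    """Ask PyTorch which backends are usable; return 'cpu' if torch is missing."""
    try:
        import torch
    except ImportError:
        return "cpu"
    # Older PyTorch builds have no torch.backends.mps module, so look it up defensively
    mps = getattr(torch.backends, "mps", None)
    mps_ok = bool(mps is not None and mps.is_built() and mps.is_available())
    return choose_device_name(mps_ok, torch.cuda.is_available())


print("Selected device:", detect_device_name())
```

On a correctly configured M1 machine this should select "mps". Any other result suggests that one of the two checks above returned False.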

Step 4: Final test

Finally, we run an illustrative example to check that everything works properly.

To run PyTorch code on the GPU, use torch.device("mps"), analogous to torch.device("cuda") on an Nvidia GPU. Hence, in this example, we move all computations to the GPU:

dtype = torch.float
device = torch.device("mps")

# Create random input and output data
x = torch.linspace(-math.pi, math.pi, 2000, device=device, dtype=dtype)
y = torch.sin(x)

# Randomly initialize weights
a = torch.randn((), device=device, dtype=dtype)
b = torch.randn((), device=device, dtype=dtype)
c = torch.randn((), device=device, dtype=dtype)
d = torch.randn((), device=device, dtype=dtype)

learning_rate = 1e-6
for t in range(2000):
    # Forward pass: compute predicted y
    y_pred = a + b * x + c * x ** 2 + d * x ** 3

    # Compute and print loss
    loss = (y_pred - y).pow(2).sum().item()
    if t % 100 == 99:
        print(t, loss)

    # Backprop to compute gradients of the loss with respect to a, b, c, d
    grad_y_pred = 2.0 * (y_pred - y)
    grad_a = grad_y_pred.sum()
    grad_b = (grad_y_pred * x).sum()
    grad_c = (grad_y_pred * x ** 2).sum()
    grad_d = (grad_y_pred * x ** 3).sum()

    # Update weights using gradient descent
    a -= learning_rate * grad_a
    b -= learning_rate * grad_b
    c -= learning_rate * grad_c
    d -= learning_rate * grad_d

print(f'Result: y = {a.item()} + {b.item()} x + {c.item()} x^2 + {d.item()} x^3')

If you don't see any errors, everything works as expected!
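The manual backward pass in the example follows from differentiating the sum-of-squares loss. As a short derivation in the code's own names, where $\eta$ stands for learning_rate and the index $i$ runs over the 2000 sample points:

```latex
\begin{aligned}
\hat{y}_i &= a + b\,x_i + c\,x_i^2 + d\,x_i^3, \qquad
L = \sum_i (\hat{y}_i - y_i)^2, \\
\frac{\partial L}{\partial a} &= \sum_i 2(\hat{y}_i - y_i), \qquad
\frac{\partial L}{\partial b} = \sum_i 2(\hat{y}_i - y_i)\,x_i, \\
\frac{\partial L}{\partial c} &= \sum_i 2(\hat{y}_i - y_i)\,x_i^2, \qquad
\frac{\partial L}{\partial d} = \sum_i 2(\hat{y}_i - y_i)\,x_i^3, \\
a &\leftarrow a - \eta\,\frac{\partial L}{\partial a}
\quad \text{(and likewise for } b,\ c,\ d\text{)}.
\end{aligned}
```

The shared factor $2(\hat{y}_i - y_i)$ is what the code stores in grad_y_pred, which is why each gradient is a single multiply and sum.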

A follow-up article will benchmark PyTorch M1 GPU execution against various NVIDIA GPU cards.

However, GPU acceleration is expected to be considerably faster than training on the CPU. You can verify this yourself: design your own experiments and measure their respective times.
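One way to set up such an experiment is sketched below. It is a rough timing harness, not a rigorous benchmark: the helper names `time_fn` and `matmul_benchmark`, the matrix-multiplication workload, and the matrix size are all arbitrary choices made for illustration. The import guard only exists so that the timing helper still runs where PyTorch is not installed.

```python
import time

try:
    import torch
except ImportError:  # lets the timing helper run even without PyTorch
    torch = None


def time_fn(fn, repeats: int = 5) -> float:
    """Return the average wall-clock seconds per call of fn over `repeats` calls."""
    fn()  # warm-up call, excluded from the measurement
    start = time.perf_counter()
    for _ in range(repeats):
        fn()
    return (time.perf_counter() - start) / repeats


def matmul_benchmark(device_name: str, size: int = 1024):
    """Build a zero-argument function that multiplies two random matrices on a device."""
    x = torch.randn(size, size, device=device_name)
    y = torch.randn(size, size, device=device_name)

    def run():
        z = x @ y
        # Copying one element back to the CPU forces queued GPU work to finish,
        # so the measured time includes the actual computation
        z[0, 0].item()

    return run


if torch is not None:
    print("cpu:", time_fn(matmul_benchmark("cpu")))
    mps = getattr(torch.backends, "mps", None)
    if mps is not None and mps.is_available():
        print("mps:", time_fn(matmul_benchmark("mps")))
```

Small workloads can run faster on the CPU because of transfer and launch overhead, so it is worth trying several sizes before drawing conclusions.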

The Apple engineering team conducted an extensive benchmark of popular deep learning models on the Apple silicon chip. The following image shows the performance speedup of the GPU compared to the CPU.

The recent addition of PyTorch to the set of macOS-compatible deep learning frameworks is an amazing milestone.

This milestone allows macOS fans to stay within their favorite Apple ecosystem and focus on deep learning. They no longer have to turn to the alternatives: Intel-based chips or Colab.

If you are a data scientist and a fan of macOS, feel free to check this list of all Apple Silicon-related articles!