A short and sweet tutorial on discovering correlations between the objects in imagery data

An important question that arises after identifying the objects (or class labels) in an imagery database is: “How are the various objects found in an imagery database correlated with each other?” This article tries to answer this question by providing a generic framework that may help readers discover hidden correlations between objects in the imagery database. (The aim of this article is to encourage upcoming researchers to publish high-quality research papers in top conferences and journals. Part of this article is drawn from our work published in IEEE BigData 2021 [1].)

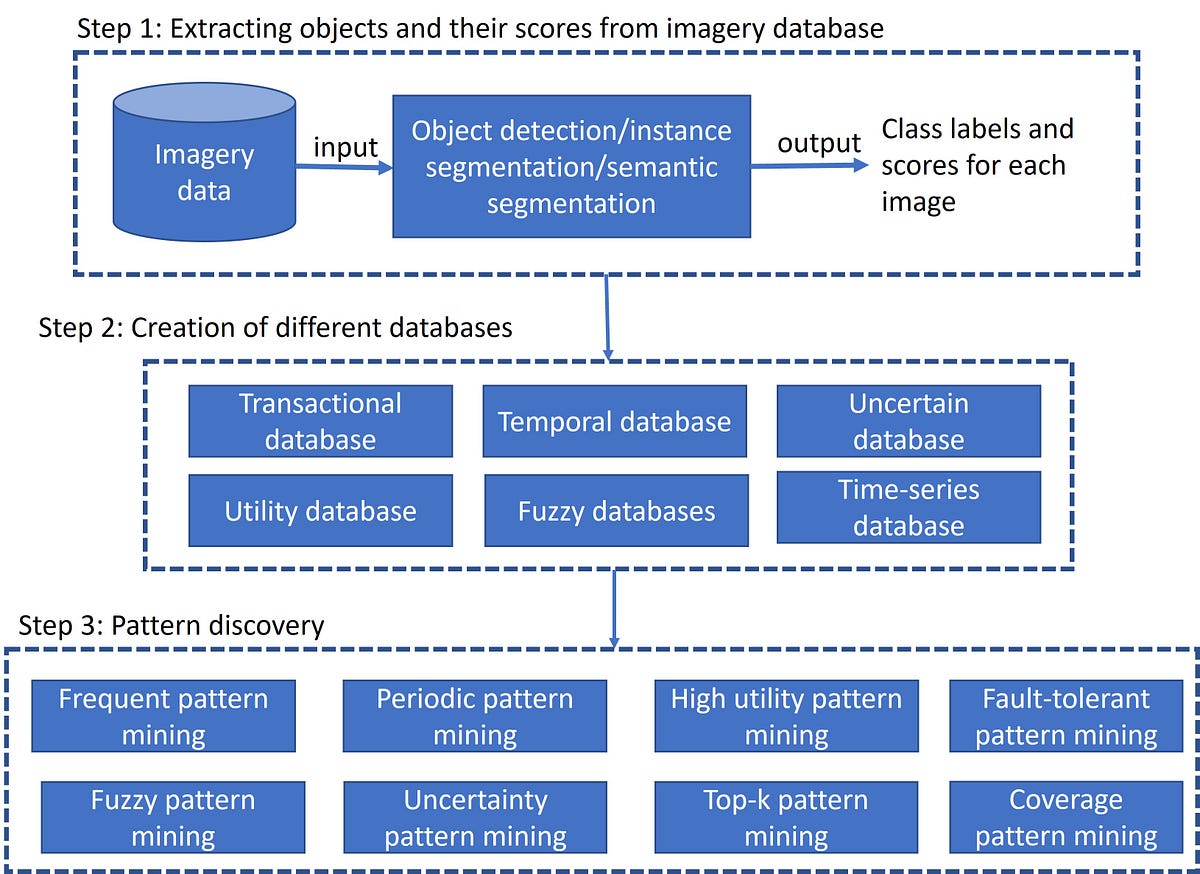

The framework for discovering the correlation between the objects in an imagery database is shown in Figure 1. It involves the following three steps:

- Extract the objects (or class labels) and their probability scores for each image in the repository. Users can extract objects using object detection, instance segmentation, or semantic segmentation techniques.

- Transform the objects and their probability scores into a database of your choice. (If necessary, prune uninteresting objects having low probability scores to reduce noise.)

- Depending on the database generated and the knowledge required, apply the corresponding pattern mining technique to discover interesting correlations between the objects in the imagery data.

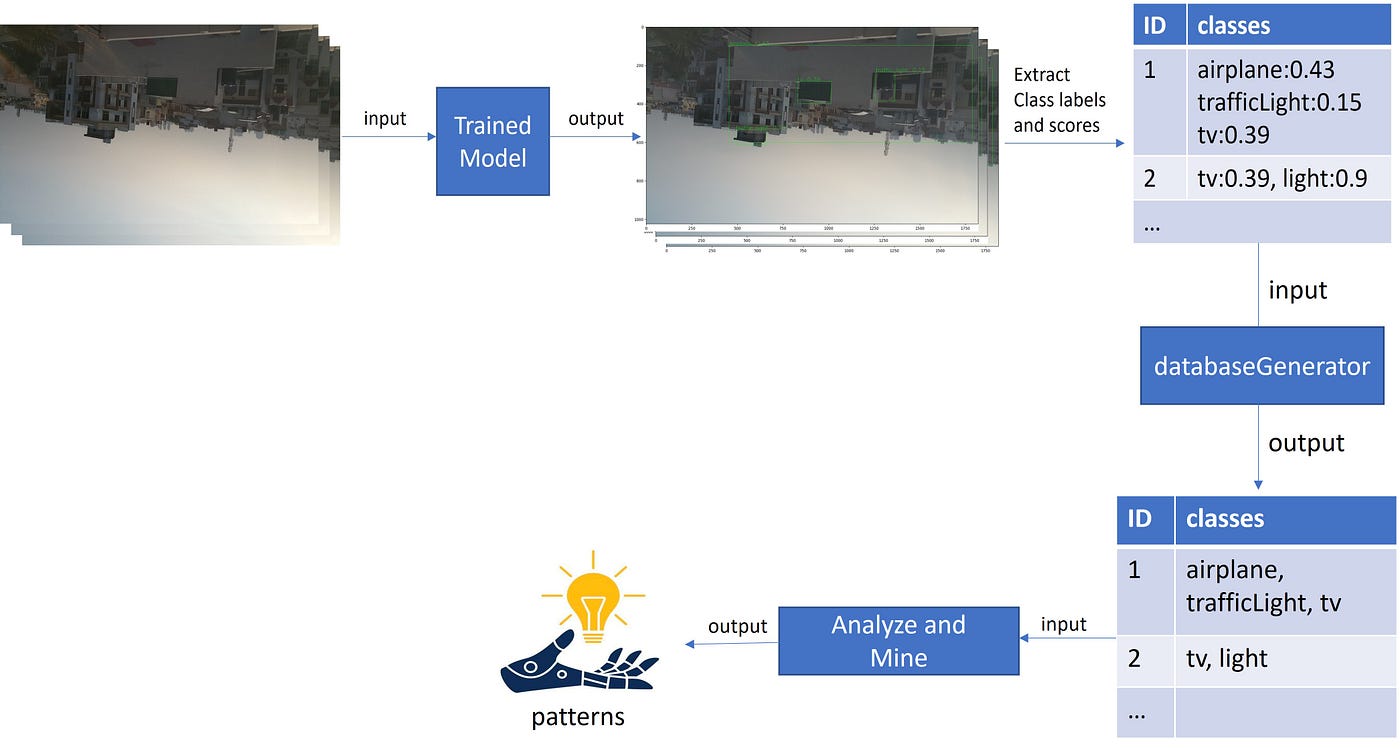

Demonstration: In this demo, we first pass the image data into a trained model (e.g., resnet50) and extract objects and their scores. Next, the extracted data is transformed into a transactional database. Finally, we perform (maximal) frequent pattern mining on the generated transactional database to discover frequently occurring sets of objects in the imagery data. Figure 2 shows an overview of our demo.

Prerequisites:

- We assume that readers are familiar with instance/semantic segmentation and pattern mining. We recommend Phillipe’s video lectures on pattern mining.

- Install the following Python packages: pip install pami torchvision

- Download the imagery database from [2]

(Please install any additional packages needed depending on your computing environment.)

Step 1: Extracting Objects and Their Scores from Imagery Data

Step 1.1: Load the pre-trained object detection model

Save the code below as objectDetection.py. This code accepts the path of an image as input, runs the pre-trained Faster R-CNN model with a ResNet-50 backbone (fasterrcnn_resnet50_fpn) on it, and returns a list (i.e., self.predicted_classes) of class labels and their scores. Each element in this list is a (class label, score) pair for one object detected in the image.

    import glob
    import os
    import csv
    import torchvision
    from torchvision import transforms
    import torch
    from torch import no_grad
    import cv2
    from PIL import Image
    import numpy as np
    import sys
    import matplotlib.pyplot as plt
    from IPython.display import Image as Imagedisplay
    from PAMI.extras.imageProcessing import imagery2Databases as ob


    class objectDetection:
        def __init__(self):
            # load the pre-trained Faster R-CNN (ResNet-50 FPN) detector in inference mode
            self.model_ = torchvision.models.detection.fasterrcnn_resnet50_fpn(pretrained=True)
            self.model_.eval()
            # freeze all weights; the model is only used for prediction
            for name, param in self.model_.named_parameters():
                param.requires_grad = False

        def model(self, x):
            # run a forward pass without tracking gradients
            with torch.no_grad():
                self.y_hat = self.model_(x)
            return self.y_hat

        def model_train(self, image_path):
            # despite its name, this method only runs inference on one image
            # label names ('N/A' entries are unused COCO category ids)
            self.coco_instance_category_names = [
                '__background__', 'person', 'bicycle', 'car', 'motorcycle', 'airplane', 'bus',
                'train', 'truck', 'boat', 'traffic light', 'fire hydrant', 'N/A', 'stop sign',
                'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow',
                'elephant', 'bear', 'zebra', 'giraffe', 'N/A', 'backpack', 'umbrella', 'N/A', 'N/A',
                'handbag', 'tie', 'suitcase', 'frisbee', 'skis', 'snowboard', 'sports ball',
                'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard', 'tennis racket',
                'bottle', 'N/A', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl',
                'banana', 'apple', 'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza',
                'donut', 'cake', 'chair', 'couch', 'potted plant', 'bed', 'N/A', 'dining table',
                'N/A', 'N/A', 'toilet', 'N/A', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone',
                'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'N/A', 'book',
                'clock', 'vase', 'scissors', 'teddy bear', 'hair drier', 'toothbrush'
            ]
            self.transform = transforms.Compose([transforms.ToTensor()])
            self.image_path = image_path
            self.image = Image.open(self.image_path)
            # halve the image size (resize returns a new image, so reassign it)
            self.image = self.image.resize(tuple(int(0.5 * s) for s in self.image.size))
            del self.image_path
            self.image = self.transform(self.image)

            # predictions without any threshold
            self.predict = self.model([self.image])
            # pair each predicted label index with its name and score
            self.predicted_classes = [(self.coco_instance_category_names[i], p) for
                                      i, p in
                                      zip(list(self.predict[0]['labels'].numpy()),
                                          self.predict[0]['scores'].detach().numpy())]

            return self.predicted_classes
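To make the output format concrete, here is a minimal sketch of how label indices and scores are paired into (class label, score) tuples. It uses plain Python lists in place of the model's tensors. The helper `pair_labels_with_scores`, the sample indices, and the sample scores are made up for illustration, and `names` is only the first few entries of the COCO label list.

```python
# First entries of the COCO label list (truncated for brevity).
names = ['__background__', 'person', 'bicycle', 'car', 'motorcycle']

# Made-up stand-ins for the model's predicted label indices and scores.
labels = [1, 3, 1]
scores = [0.98, 0.41, 0.07]


def pair_labels_with_scores(label_ids, confidences, label_names):
    """Map each detected label index to its name and keep its score."""
    return [(label_names[i], s) for i, s in zip(label_ids, confidences)]


predicted_classes = pair_labels_with_scores(labels, scores, names)
print(predicted_classes)
```

Note that the same label can appear several times for one image, and that low-confidence detections are still present at this stage because no threshold has been applied yet.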

Step 1.2: Detecting objects in each image

The code below detects the objects in each image and appends them to a list called detected_objects_list. This list will be transformed into a transactional database in the next step.

    import glob
    import os

    from PAMI.extras.imageProcessing import imagery2Databases as ob
    # the class saved in objectDetection.py in Step 1.1
    from objectDetection import objectDetection

    # folder containing the input images
    images_path = 'aizu_dataset'

    # list to store the objects detected in each image
    detected_objects_list = []

    # load the pre-trained model once and reuse it for every image
    model_predict = objectDetection()

    # run the pre-trained model on each image in the folder
    for filename in glob.glob(os.path.join(images_path, '*.JPG')):
        # the model returns the detected objects as (label, score) pairs
        objects_detected = model_predict.model_train(filename)
        detected_objects_list.append(objects_detected)
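One practical caveat: the pattern `*.JPG` matches only upper-case extensions on case-sensitive file systems. The sketch below shows one possible way to collect image files regardless of extension case. The helper name `list_images` and the set of extensions are our own assumptions, not part of PAMI or torchvision.

```python
import tempfile
from pathlib import Path


def list_images(folder, extensions=('.jpg', '.jpeg', '.png')):
    """Return sorted image paths in folder, matching extensions case-insensitively."""
    return sorted(p for p in Path(folder).iterdir()
                  if p.is_file() and p.suffix.lower() in extensions)


# Demonstrate on a throwaway folder containing empty placeholder files.
with tempfile.TemporaryDirectory() as tmp:
    for name in ('a.JPG', 'b.jpg', 'notes.txt'):
        (Path(tmp) / name).touch()
    found = [p.name for p in list_images(tmp)]

print(found)
```

In the loop above, the paths returned by such a helper could be passed to `model_train` after converting them with `str(path)`.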

Step 2: Creating a transactional database

Prune the uninteresting class labels using the code below. Save the remaining data as a transactional database.

    # prune uninteresting objects whose probability score is less than a particular value, say 0.2
    obj2db = ob.createDatabase(detected_objects_list, 0.2)

    # save the objects identified in the images as a transactional database
    obj2db.saveAsTransactionalDB('aizu_dataset0.2.txt', ',')
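The following sketch illustrates what this step is expected to produce. It is not PAMI's implementation: the helper `to_transactions` and the sample detections are hypothetical, and we assume that a label is kept once per image if any of its detections reaches the threshold.

```python
def to_transactions(detections_per_image, min_score):
    """Turn per-image (label, score) pairs into lists of distinct labels."""
    transactions = []
    for detections in detections_per_image:
        kept = []
        for label, score in detections:
            # Keep a label once per image, and only if it is confident enough.
            if score >= min_score and label not in kept:
                kept.append(label)
        if kept:  # skip images in which no label survives pruning
            transactions.append(kept)
    return transactions


# Made-up detections for three images.
sample = [
    [('person', 0.98), ('car', 0.41), ('person', 0.07)],
    [('bench', 0.15)],
    [('oven', 0.88), ('microwave', 0.63)],
]

transactions = to_transactions(sample, 0.2)
lines = [','.join(t) for t in transactions]
print('\n'.join(lines))
```

Each printed line corresponds to one image, with its labels separated by commas, which is the layout of the transactional database file.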

View the generated transactional database file by typing the following command:

    !head -10 aizu_dataset0.2.txt

The output will be as follows:

    motorcycle,backpack,person
    book,baseball bat,refrigerator,cup,toaster
    bottle,bowl,tv,toilet,chair,mouse,refrigerator,cell phone,microwave,remote,sink
    microwave,refrigerator,bowl,bottle,cell phone,oven,car,person
    bench
    potted plant
    bottle,handbag,suitcase,book
    book,laptop,tv,umbrella
    oven
    parking meter,car

Step 3: Extract patterns from the transactional database

Apply the maximal frequent pattern-growth algorithm to the generated transactional database to discover the hidden patterns. In the code below, we find patterns (i.e., sets of class labels) that have occurred at least ten times in the imagery database.

    from PAMI.frequentPattern.maximal import MaxFPGrowth as alg

    # input file, minimum support (10 images), and item separator
    obj = alg.MaxFPGrowth('aizu_dataset0.2.txt', 10, ',')
    obj.startMine()
    print(obj.getPatterns())
    obj.savePatterns('aizuDatasetPatterns.txt')
    print('Runtime: ' + str(obj.getRuntime()))
    print('Memory: ' + str(obj.getMemoryRSS()))
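To clarify what a maximal frequent pattern is: an itemset is frequent if at least a minimum number of transactions contain it, and it is maximal if none of its proper supersets is frequent. The brute-force sketch below illustrates this definition on a tiny made-up database. It is not the MaxFPGrowth algorithm and is practical only for very small inputs; the function names are our own.

```python
from itertools import combinations


def frequent_itemsets(transactions, min_sup):
    """Return {itemset: support} for every itemset with support >= min_sup."""
    sets = [frozenset(t) for t in transactions]
    items = sorted(set().union(*sets))
    result = {}
    for size in range(1, len(items) + 1):
        found = False
        for candidate in combinations(items, size):
            c = frozenset(candidate)
            support = sum(1 for t in sets if c <= t)
            if support >= min_sup:
                result[c] = support
                found = True
        if not found:
            break  # no frequent itemset of this size, so none larger either
    return result


def maximal_only(frequent):
    """Keep only the itemsets that have no frequent proper superset."""
    return {s: n for s, n in frequent.items()
            if not any(s < other for other in frequent)}


# Made-up transactional database with five images.
db = [
    ['refrigerator', 'microwave', 'oven'],
    ['refrigerator', 'microwave'],
    ['refrigerator', 'microwave', 'sink'],
    ['oven'],
    ['oven', 'car'],
]

maximal = maximal_only(frequent_itemsets(db, 2))
for itemset, support in sorted(maximal.items(), key=lambda kv: sorted(kv[0])):
    print(' '.join(sorted(itemset)), ':' + str(support))
```

With a minimum support of 2, the maximal patterns in this toy database are {microwave, refrigerator} and {oven}, each with support 3. The single items microwave and refrigerator are frequent but not maximal, because the pair containing both is also frequent.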

View the generated patterns by typing the following command:

    !head -10 aizuDatasetPatterns.txt

The output will be as follows:

    refrigerator microwave :11
    toilet :10
    cell phone :11
    traffic light :12
    truck :12
    potted plant :12
    clock :15
    bench :17
    oven :17
    car :18

The first pattern/line says that 11 images in the image repository contained the class labels refrigerator and microwave. Similar statements can be made for the remaining patterns/lines.
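For further processing, the saved patterns can be read back into Python. The sketch below is a minimal example that assumes each line has the form "items :support", with a tab between the items of a pattern (labels such as "potted plant" contain spaces, so a space cannot serve as the separator). Both the format assumption and the helper `parse_pattern_line` are ours, so check them against your own output file.

```python
def parse_pattern_line(line, item_sep='\t'):
    """Split 'item<sep>item :support' into (list of items, support)."""
    items_part, support_part = line.rsplit(':', 1)
    items = [item.strip() for item in items_part.split(item_sep) if item.strip()]
    return items, int(support_part)


# Sample lines in the assumed format (tab between items).
sample_lines = [
    'refrigerator\tmicrowave :11',
    'potted plant :12',
]

for line in sample_lines:
    items, support = parse_pattern_line(line)
    print(f"{support} images contain: {', '.join(items)}")
```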

Knowing the correlation between the different objects/class labels may help users in decision-making.

Conclusion:

Efficient identification of objects in imagery data has been widely studied in industry and academia. A key question after identifying the objects is: what is the underlying correlation between the various objects in the imagery data? This blog tries to answer this crucial question by providing a generic methodology for transforming the discovered objects in the image data into a transactional database, applying pattern mining techniques, and discovering interesting patterns.

Disclaimer:

- All images displayed on this page were drawn by the author.

- The imagery database was created by the author himself and open-sourced to be used for both commercial and non-commercial purposes.

References:

[1] Tuan-Vinh La, Minh-Son Dao, Kazuki Tejima, Rage Uday Kiran, Koji Zettsu: Improving the Awareness of Sustainable Smart Cities by Analyzing Lifelog Images and IoT Air Pollution Data. IEEE BigData 2021: 3589–3594

[2] Imagery dataset: aizu_dataset.zip