Overfitting is an idea in knowledge science that happens when a predictive mannequin learns to generalize properly on coaching knowledge however not on unseen knowledge

The easiest way to clarify what overfitting is is thru an instance.

Image this state of affairs: we’ve simply been employed as an information scientist in an organization that develops picture processing software program. The corporate lately determined to implement machine studying of their processes and the intention is to create software program that may distinguish authentic pictures from edited pictures.

Our job is to create a mannequin that may detect picture edits which have human beings as topics.

We’re excited concerning the alternative, and being our first job expertise, we work very exhausting to make a superb impression.

We correctly practice a mannequin, which seems to carry out very properly on the coaching knowledge. We’re very completely happy about it, and we talk our outcomes to the stakeholders. The subsequent step is to serve the mannequin in manufacturing with a small group of customers. We arrange every part with the technical workforce and shortly after the mannequin is on-line and outputs its outcomes to the take a look at customers.

The subsequent morning we open our inbox and skim a collection of discouraging messages. Customers have reported very unfavorable suggestions! Our mannequin doesn’t appear to have the ability to classify photos accurately. How is it potential that within the coaching part our mannequin carried out properly whereas now in manufacturing we observe such poor outcomes?

Easy. We’ve been sufferer of overfitting.

We’ve misplaced our job. What a blow!

The instance above represents a considerably exaggerated state of affairs. A novice analyst has not less than as soon as heard of the time period overfitting. It’s most likely one of many first phrases you be taught when working within the business, following or listening to on-line tutorials.

Nonetheless, overfitting is a phenomenon that’s virtually at all times noticed when coaching a predictive mannequin. This leads the analyst to repeatedly face the identical drawback which might be brought on by a mess of causes.

On this article I’ll speak about what overfitting is, why it represents the most important impediment that an analyst faces when doing machine studying and the way to forestall this from occurring by means of some strategies.

Though it’s a elementary idea in machine studying, explaining clearly what overfitting means just isn’t simple. It is because you must begin from what it means to coach a mannequin and consider its efficiency. On this article I write about what machine studying is and what it implies to coach a mannequin.

Referencing from the article talked about,

The act of exhibiting the information to the mannequin and permitting it to be taught from it’s known as coaching.[…].Throughout coaching, the mannequin tries to be taught the patterns in knowledge based mostly on sure assumptions. For instance, probabilistic algorithms base their operations on deducing the chances of an occasion occurring within the presence of sure knowledge.

When the mannequin is educated, we use an analysis metric to find out how far the mannequin’s predictions are from the precise noticed worth. For instance, for a classification drawback (just like the one in our instance) we might use the F1 rating to grasp how the mannequin is acting on the coaching knowledge.

The error made by the junior analyst within the introductory instance has to do with a foul interpretation of the analysis metric through the coaching part and the absence of a framework for validating the outcomes.

In actual fact, the analyst paid consideration to the mannequin’s efficiency throughout coaching, forgetting to take a look at and analyze the efficiency on the take a look at knowledge.

Overfitting happens when our mannequin learns properly to generalize coaching knowledge however not take a look at knowledge. When this occurs our algorithm fails to carry out properly with knowledge it has by no means seen earlier than. This utterly destroys its objective, making it a fairly ineffective mannequin.

This is the reason overfitting is an analyst’s worst enemy: it utterly defeats the aim of our work.

When a mannequin is educated, it makes use of a coaching set to be taught the patterns and map the function set to the goal variable. Nonetheless, it may possibly occur, as we’ve already seen, {that a} mannequin can begin studying noisy and even ineffective info — even worse, this info is barely current within the coaching set.

Our mannequin learns info that it doesn’t want (or just isn’t actually current) to do its job on new, unseen knowledge — comparable to these of customers in a stay manufacturing setting.

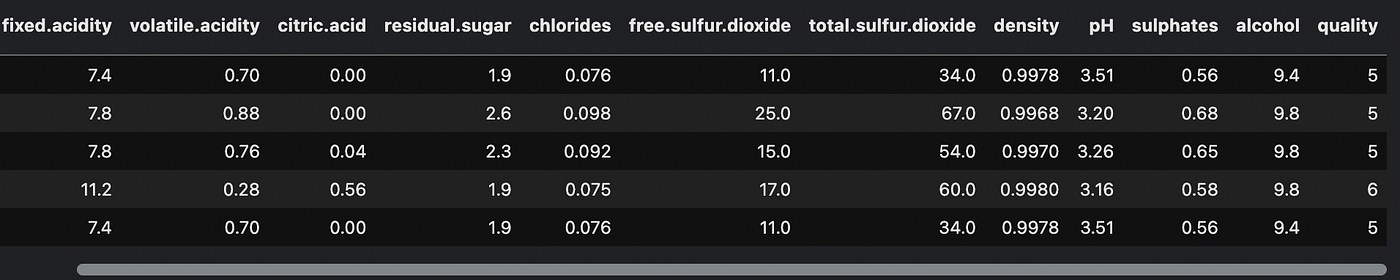

Let’s use the well-known Purple Wine Dataset from Kaggle to visualise a case of overfitting. This dataset has 11 dimensions that outline the standard of a purple wine. Based mostly on these we’ve to construct a mannequin able to predicting the standard of a purple wine, which is a worth between 1 and 10.

We are going to use a call tree-based classifier (Sklearn.tree.DecisionTreeClassifier) to point out how a mannequin might be led to overfit.

That is what the dataset appears to be like like if we print the primary 5 strains

We use this code to coach a call tree.

Practice accuracy: 0.623

Check accuracy: 0.591

We initialized our determination tree with the hyperparameter max_depth = 3. Let’s attempt utilizing a unique worth now — for instance 7.

clf = tree.DecisionTreeClassifier (max_depth = 7) # the remainder of the code stays the identical

Let’s take a look at the brand new values of accuracy

Practice accuracy: 0.754

Check accuracy: 0.591

Accuracy is growing for the coaching set, however not for the take a look at set. We put every part in a loop the place we’re going to modify max_depth dynamically and coaching a mannequin at every iteration.

Look how a excessive max_depth corresponds to a really excessive accuracy in coaching (touching values of 100%) however how that is round 55–60% within the take a look at set.

What we’re observing is overfitting!

In actual fact, the very best accuracy worth within the take a look at set might be seen at max_depth = 9. Above this worth the accuracy doesn’t enhance. It due to this fact is not sensible to extend the worth of the parameter above 9.

This worth of max_depth = 9 represents the “candy spot” — that’s, the best worth for not having a mannequin that overfits, however nonetheless be capable of generalize the information properly.

In actual fact, a mannequin may be very “superficial” and expertise underfitting, the alternative of overfitting. The “candy spot” is in steadiness between these two factors. The analyst’s job is to get as shut as potential so far.

Essentially the most frequent causes that lead a mannequin to overfill are the next:

- Our knowledge comprises noise and different non-relevant info

- The coaching and take a look at units are too small

- The mannequin is simply too complicated

The information comprises noise

When our coaching knowledge comprises noise, our mannequin learns these patterns after which tries to use that data on the take a look at set, clearly with out success.

The information is little and never consultant

If we’ve little knowledge, these will not be ample to be consultant of the truth that may then be supplied by the customers who will use the mannequin.

The mannequin is simply too complicated

A very complicated mannequin will deal with info that’s basically irrelevant to mapping the goal variable. Within the earlier instance, the choice tree with max_depth = 9 was neither too easy nor too complicated. Rising this worth led to a rise within the efficiency metric in coaching, however not in a take a look at setting.

There are a number of methods to keep away from overfitting. Right here we see the most typical and efficient ones for use virtually at all times

- Cross-validation

- Add extra knowledge to our dataset

- Take away options

- Use an early stopping mechanism

- Regularize the mannequin

Every of those strategies permits the analyst to grasp the efficiency of the mannequin properly and to succeed in the “candy spot” talked about above extra shortly.

Cross-validation

Cross-validation is a quite common and very highly effective approach that permits you to take a look at the mannequin’s efficiency on a number of validation “mini-sets”, as a substitute of utilizing a single set as we’ve accomplished beforehand. This permits us to grasp how the mannequin generalizes on completely different parts of all the dataset, thus giving a clearer concept of the conduct of the mannequin.

Add extra knowledge to our dataset

Our mannequin can get nearer to the candy spot just by integrating extra info. We should always improve the information each time we will with a view to supply our mannequin parts of “actuality” which can be more and more consultant. I like to recommend the reader to learn this text the place I clarify the way to construct a dataset from scratch.

Take away options

Function choice strategies (comparable to Boruta) may also help us perceive which options are ineffective for predicting the goal variable. Eradicating these variables may also help cut back background noise observing the mannequin.

Use an early stopping mechanism

Early stopping is a way primarily utilized in deep studying and consists in stopping the mannequin when there isn’t a improve in efficiency for a collection of coaching durations. This lets you save the state of the mannequin at its greatest time and use solely this greatest performing model.

Regularize the mannequin

By means of the tuning of the hyperparameters we will typically and willingly management the conduct of the mannequin to scale back or improve its complexity. We will modify these hyperparameters immediately throughout cross-validation to grasp how the mannequin performs on completely different knowledge splits.

Glad you made it right here. Hopefully you’ll discover this text helpful and implement snippets of it in your codebase.

If you wish to assist my content material creation exercise, be at liberty to observe my referral hyperlink beneath and be part of Medium’s membership program. I’ll obtain a portion of your funding and also you’ll be capable of entry Medium’s plethora of articles on knowledge science and extra in a seamless manner.

Have an amazing day. Keep properly