A team of researchers at the Massachusetts Institute of Technology (MIT) has been working on a new hardware resistor design for the next era of electronics scaling – particularly in AI processing tasks such as machine learning and neural networks.

Yet in what may seem like a throwback (if a throwback to the future can exist), their work focuses on a design that's more analog than digital in nature. Enter protonic programmable resistors – built to accelerate AI networks by mimicking our own neurons (and their interconnecting synapses) while speeding up their operation one million times. That's the actual figure, not just hyperbole.

All of this is done while cutting energy consumption to a fraction of what's required by the transistor-based designs currently used for machine-learning workloads, such as Cerebras’ record-breaking Wafer Scale Engine 2.

While our synapses and neurons are extremely impressive from a computational standpoint, they’re limited by their “wetware” medium: water.

While water’s electrical conduction is enough for our brains to operate, these electrical signals work through weak potentials: signals of about 100 millivolts propagating over milliseconds, through trees of interconnected neurons (synapses are the junctions through which neurons communicate via electrical signals). One issue is that liquid water decomposes at voltages of 1.23 V – roughly the same operating voltage used by the current best CPUs. So there's a difficulty in simply “repurposing” biological designs for computing.

“The working mechanism of the device is electrochemical insertion of the smallest ion, the proton, into an insulating oxide to modulate its electronic conductivity. Because we are working with very thin devices, we could accelerate the motion of this ion by using a strong electric field, and push these ionic devices to the nanosecond operation regime,” explains senior author Bilge Yildiz, the Breene M. Kerr Professor in the departments of Nuclear Science and Engineering and Materials Science and Engineering.

Another issue is that biological neurons aren’t built on the same scale as modern transistors. They're much bigger – ranging in size from 4 microns (0.004 mm) to 100 microns (0.1 mm) in diameter. When the latest available GPUs already carry transistors in the 6 nm range (with a nanometre being 1,000 times smaller than a micron), you can almost imagine the difference in scale, and how many more of these artificial neurons you can fit into the same space.
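To put rough numbers on that difference, here is a minimal back-of-the-envelope sketch in Python. It assumes, purely for illustration, that the "6 nm" figure can be treated as a physical feature size (in practice it is a process-node label) and that both sizes can be compared as simple diameters. The function names are hypothetical.

```python
# Sizes quoted in the article, converted to nanometres.
NM_PER_MICRON = 1_000

neuron_min_nm = 4 * NM_PER_MICRON     # smallest neuron diameter: 4 microns
neuron_max_nm = 100 * NM_PER_MICRON   # largest neuron diameter: 100 microns
transistor_nm = 6                     # ASSUMPTION: "6 nm" taken as a literal size


def linear_ratio(big_nm, small_nm):
    """How many times wider the bigger object is."""
    return big_nm / small_nm


def area_ratio(big_nm, small_nm):
    """How many times more footprint the bigger object covers (ratio squared)."""
    return linear_ratio(big_nm, small_nm) ** 2


if __name__ == "__main__":
    for label, size_nm in (("smallest", neuron_min_nm), ("largest", neuron_max_nm)):
        print(
            f"{label} neuron: about {linear_ratio(size_nm, transistor_nm):,.0f}x wider, "
            f"about {area_ratio(size_nm, transistor_nm):,.0f}x the footprint"
        )
```

Under those simplifying assumptions, even the smallest neuron is hundreds of times wider than the quoted transistor feature, and the footprint gap grows with the square of that ratio.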

The research focused on creating solid-state resistors which, as the name implies, create resistance to electricity’s passage. Specifically, they resist the ordered movement of electrons (negatively charged particles). If using material that resists electricity’s movement (and that thus should in turn generate heat) sounds counterintuitive, well, it is. But there are two distinct advantages to analog deep learning compared to its digital counterpart.

First, in programming resistors, you are storing the data required for training in the resistors themselves. When you program their resistance (in this case, by increasing or reducing the number of protons in certain areas of the chip), you're adding values to certain chip structures. This means that information is already present in the analog chips: there's no need to ferry more of it in and out of external memory banks (RAM or VRAM), which is exactly what happens in most current chip designs. All of this saves latency and energy.

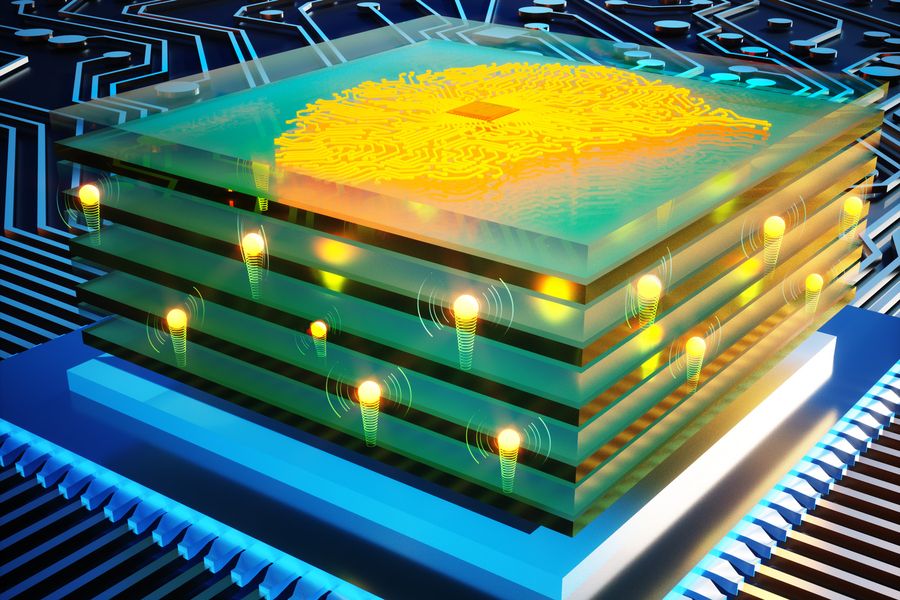

Second, MIT’s analog processors are architected in a matrix (remember Nvidia’s Tensor cores?). This means they’re more like your GPUs than your CPUs, in that they conduct operations in parallel. All computation happens simultaneously.
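The two advantages above can be illustrated with a small Python sketch of the general idea behind analog matrix computation. This is not MIT's design, only a textbook-style model under stated assumptions: the resistors sit in a grid, each programmed conductance acts as a stored weight, input voltages drive the rows, and each column's output current is the sum of voltage times conductance (Ohm's law plus Kirchhoff's current law). The function name and all values are hypothetical.

```python
def crossbar_output_currents(conductances, voltages):
    """Model an idealized resistor grid computing a matrix-vector product.

    conductances[i][j] is the programmed conductance (the stored "weight")
    linking input row i to output column j, in microsiemens.
    voltages[i] is the voltage applied to row i, in volts.
    Returns the current collected on each column, in microamps:
        I_j = sum_i G[i][j] * V[i]
    ASSUMPTION: ideal devices, with no wire resistance, noise, or nonlinearity.
    """
    n_rows = len(conductances)
    if len(voltages) != n_rows:
        raise ValueError("need exactly one input voltage per row")
    n_cols = len(conductances[0])
    return [
        sum(conductances[i][j] * voltages[i] for i in range(n_rows))
        for j in range(n_cols)
    ]


# Hypothetical 3x2 grid: three inputs, two outputs.
weights_uS = [
    [1.0, 0.5],
    [0.25, 2.0],
    [0.0, 1.0],
]
inputs_V = [0.2, 0.1, 0.4]

if __name__ == "__main__":
    print(crossbar_output_currents(weights_uS, inputs_V))
```

In software the sums run one after another. In the physical grid every column current settles at once, and the weights never leave the resistors, which is where the parallelism and the memory-traffic savings described above would come from.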

MIT’s protonic resistor design operates at room temperature, which is easier to achieve than our brain’s 38.5 ºC through 40 ºC. Yet it also allows for voltage modulation, a required feature in any modern chip, allowing the input voltage to be increased or decreased according to the requirements of the workload – with consequences for power consumption and temperature output.

According to the researchers, their resistors are a million times faster (again, an actual figure) than previous-generation designs, thanks to being built with phosphosilicate glass (PSG), an inorganic material that’s (surprise) compatible with silicon manufacturing techniques, because it's mainly silicon dioxide.

You've seen its main ingredient yourself already: silicon dioxide is the powdery desiccant material found in those tiny bags that come in the box with new hardware to remove moisture.

“With that key insight, and the very powerful nanofabrication techniques we have at MIT.nano, we have been able to put these pieces together and demonstrate that these devices are intrinsically very fast and operate with reasonable voltages,” says senior author Jesús A. del Alamo, the Donner Professor in MIT’s Department of Electrical Engineering and Computer Science (EECS). “This work has really put these devices at a point where they now look really promising for future applications.”

Just like with transistors, the more resistors in a smaller area, the higher the compute density. When you reach a certain point, this network can be trained to achieve complex AI tasks like image recognition and natural language processing. And all of this is done with reduced power requirements and hugely increased performance.

Perhaps materials research will save Moore’s Law from its untimely demise.