Meta’s Make-A-Video AI generator lets users turn text prompts into brief, sometimes creepy, high-quality video clips. The technology builds on the company’s Make-A-Scene, which was announced in July of this year.

Technology such as OpenAI’s DALL-E has been making headlines lately by letting users turn simple text prompts into AI-generated art. Not to be left out, Meta recently announced its own version of the tech, called Make-A-Scene. Now the company has taken its AI generation tools to the next level by announcing its Make-A-Video AI system.

According to the announcement, the system learns what the world looks like from paired text-image data, and how the world moves from video footage that has no associated text. The company says it is sharing the details of the technology through a research paper and a planned demo experience, in line with its commitment to open science.

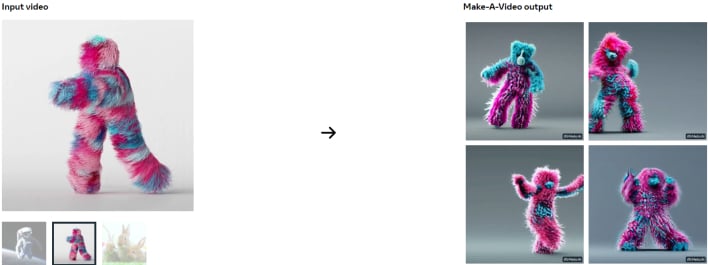

Meta isn’t limiting the technology to simple text prompts, however. You can also upload images or short videos of your own to generate an AI-created video clip. The company states, “Make-A-Video can bring imagination to life and create one-of-a-kind videos full of colors, characters, and landscapes.”

The tech giant says it wants to be “thoughtful” about how it builds new generative AI systems like this one. Make-A-Video draws on publicly available datasets, which Meta says adds an extra level of transparency to its research. The company is also seeking feedback from the community as it continues to use its responsible AI framework to “refine and evolve” its approach to this emerging technology.

If you would like to learn more about Meta’s Make-A-Video and submit a request to use the tool, you can do so by visiting the website. Meta appears to be currently looking for a specific set of users, such as researchers, artists, and members of the press. If you are not able to access the technology yet, however, Meta says it intends to make it available for public use in the future. You can also read the company’s research paper on arxiv.org.