There’s been a flood of reports about OpenAI’s new GPT-3 chatbot. For all of the very real critiques, it does an astounding and fascinating job of producing reasonable responses. What does it mean for threat modeling? There’s real promise that it will transform threat modeling as we know it.

For readability, I’ll just call it “chatbot.” The specific examples I use are from OpenAI’s implementation, but we can think about this as a new type of technical capability that others will start to offer, and so I’ll go beyond what we see today.

Let’s start with what it can do, ask what can go wrong, see if we can manage those issues, and then evaluate.

What Chatbots Can Do

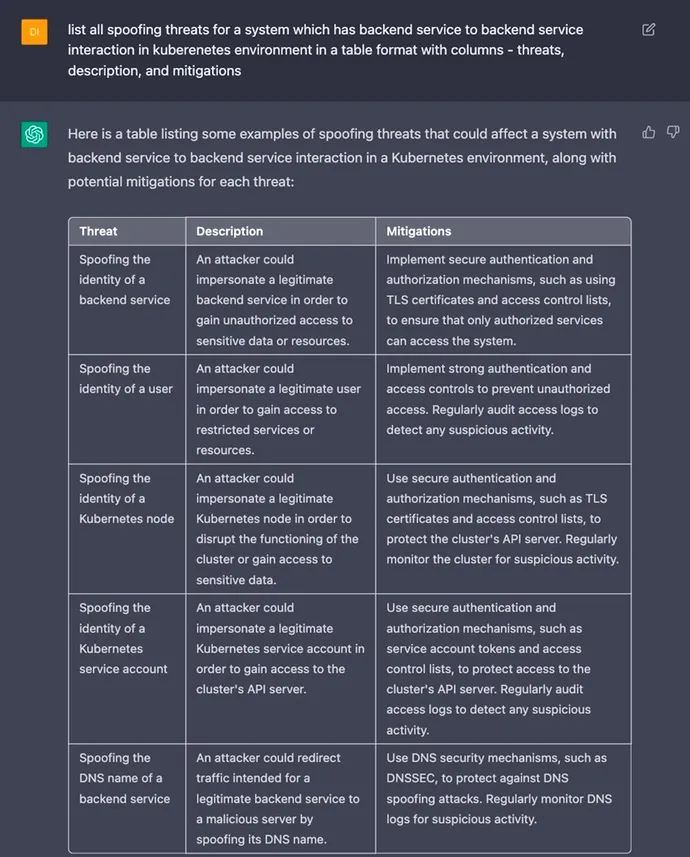

On the Open Web Application Security Project® (OWASP) Slack, @DS shared a screenshot where he asked it to “list all spoofing threats for a system which has back-end service to back-end service interaction in Kubernetes environment in a table format with columns - threats, description, and mitigations.”

The output is fascinating. It starts “Here is a table listing some examples …” Note the change from “all” to “some examples.” But more to the point, the table isn’t bad. As @DS says, it provided him with a base, saving hours of manual analysis work. Others have used it to explain what code is doing or to find vulnerabilities in that code.

Chatbots (more specifically here, large language models, including GPT-3) don’t really know anything. What they do under the hood is pick statistically likely next words to respond to a prompt. What that means is they’ll parrot the threats that someone has written about in their training data. On top of that, they’ll use symbol replacement for something that appears to our anthropomorphizing brains to be reasoning by analogy.
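To make "statistically likely next words" concrete, here is a deliberately tiny sketch in Python. It is not how GPT-3 works internally (real models use neural networks over sub-word tokens and far more data); it only counts which word follows which in a made-up corpus. The corpus and the function names are assumptions for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical, tiny "training data"; real models train on vastly more text.
CORPUS = [
    "an attacker can spoof a service identity",
    "an attacker can spoof a service token",
    "an attacker can replay a service token",
]


def train_bigrams(sentences):
    """Count how often each word follows each other word."""
    following = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following


def most_likely_next(following, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    candidates = following.get(word)
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


def complete(following, prompt, max_words=6):
    """Extend the prompt one word at a time, always taking the likeliest word."""
    words = prompt.split()
    for _ in range(max_words):
        nxt = most_likely_next(following, words[-1])
        if nxt is None:
            break  # nothing in the training data follows this word
        words.append(nxt)
    return " ".join(words)


MODEL = train_bigrams(CORPUS)
```

Calling `complete(MODEL, "an attacker")` can only recombine words the corpus already contains, which is the "parroting" described above: a threat nobody wrote about in the training data will not show up in the output.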

When I created the Microsoft SDL Threat Modeling Tool, we saw people open the tool and be unsure what to do, so we put in a simple diagram that they could edit. We talked about it addressing “blank page syndrome.” Many people run into that problem as they’re learning threat modeling.

What Can Go Wrong?

While chatbots can produce lists of threats, they’re not really analyzing the system that you’re working on. They’re likely to miss unique threats, and they’re likely to miss nuance that a skilled and focused person might see.

Chatbots will get good enough, and that “mostly good enough” is enough to lull people into relaxing and not paying close attention. And that seems really bad.

To help us evaluate it, let’s step way back, and think about why we threat model.

What Is Threat Modeling? What Is Security Engineering?

We threat model to help us anticipate and address problems, to deliver more secure systems. Engineers threat model to illuminate security issues as they make design trade-offs. And in that context, having an infinite supply of cheap possibilities seems far more exciting than I expected when I started this essay.

I’ve described threat modeling as a form of reasoning by analogy, and pointed out that many flaws exist simply because no one knew to look for them. Once we look in the right place, with the right knowledge, the flaws can be quite obvious. (That’s so important that making that easier is the key goal of my new book.)

Many of us aspire to do great threat modeling, the kind where we discover an exciting issue, something that’ll get us a nice paper or blog post, and if you just nodded along there … it’s a trap.

Much of software development is boring management of a seemingly never-ending set of details, such as iterating over lists to put things into new lists, then sending them to the next stage in a pipeline. Threat modeling, like test development, can be useful because it gives us confidence in our engineering work.

When Do We Step Again?

Software program is tough as a result of it‘s really easy. The obvious malleability of code makes it simple to create, and it‘s exhausting to understand how typically or how deeply to step again. An excessive amount of our vitality in managing giant software program initiatives (together with each bespoke and general-use software program) goes to assessing what we‘re doing, and getting alignment on priorities — all these different duties are completed sometimes, slowly, not often, as a result of they‘re costly.

It’s not what the chatbots do today, but I could see similar software being tuned to report how much any given input changes its models. Software that looks across commits, discussions in email and Slack, and tickets, and helps us assess how similar new work is to other work, could profoundly change the energy needed to keep projects (big or small) on track. And that, too, contributes to threat modeling.

All of this frees up human cycles for more interesting work.