A very silly blog post came out a couple of months ago about The Unbundling of Airflow. I didn’t fully read the article, but I saw its title and skimmed it enough to think that it might’ve been too thin of an argument to hold water but just thick enough to clickbait the VC world with the word “unbundling” while simultaneously Cunningham’s Law-ing the data world. There was certainly a Twitter discourse.

They say imitation is the sincerest form of flattery, but I don’t know if that applies here. Nevertheless, you’re currently reading a blog post about data things that’s probably wrong and has the word “unbundling” in it.

I actually don’t care that much about the bundling argument that I’ll make in this post. In fact, I just want to argue that feature stores, metrics layers, and machine learning monitoring tools are all abstraction layers on the same underlying concepts, and 90% of companies should just implement these “applications” in SQL on top of streaming databases.

I basically want to argue this Materialize quote-tweet of Josh Wills’ second viral tweet:

https://t.co/0JfQeATA8Y pic.twitter.com/VD72F9UqY8

— Materialize (@MaterializeInc) March 29, 2022

And I will argue this. You, however, must first wade through my bundling thesis so that I can make my niche argument a bit more grandiose and thought leader-y.

While I don’t really buy the unbundling Airflow argument, I do admit that things are not looking great for Airflow, and that’s great! In this golden age of Developer Experience™, Airflow feels like the DMV. A great Airflow-killer has been dbt. Instead of building out gnarly, untestable, brittle Airflow DAGs for maintaining your data warehouse, dbt lets you build your DAG purely in SQL. Sure, you may need to schedule dbt runs with Airflow, but you don’t have to deal with the mess that is Airflow orchestration.

If anything, dbt bundled everybody’s custom, hacky DAGs that mixed SQL, Python, bash, YAML, XCom, and all sorts of other things into straight SQL that pushes computation down to the database. This is a lovely bundling, and people are clearly very happy about it. On top of this, we now have the Modern Data Stack™, which means that we have connectors like Fivetran and Stitch that help us shove all sorts of disparate data into Snowflake, the cloud data warehouse du jour.

My blog (and I) tend to skew fairly machine learning-y, so for those unfamiliar: everything I’ve described constitutes the wonderful new world of Analytics Engineering™, and it really is a fantastic time to be an Analytics Engineer. There’s a clearly defined stack, best practices exist, and a single person can stand up a massively scalable data warehouse at a young startup. This is a perfect fit for consulting, by the way.

And then there’s the machine learning engineering world, or MLOps™ as it’s regrettably being called. I can confidently say that there is no Modern ML Stack. This field is a clusterfuck that’s surely in need of some bundling, so I propose that we ride the dbt train and consolidate into the database.

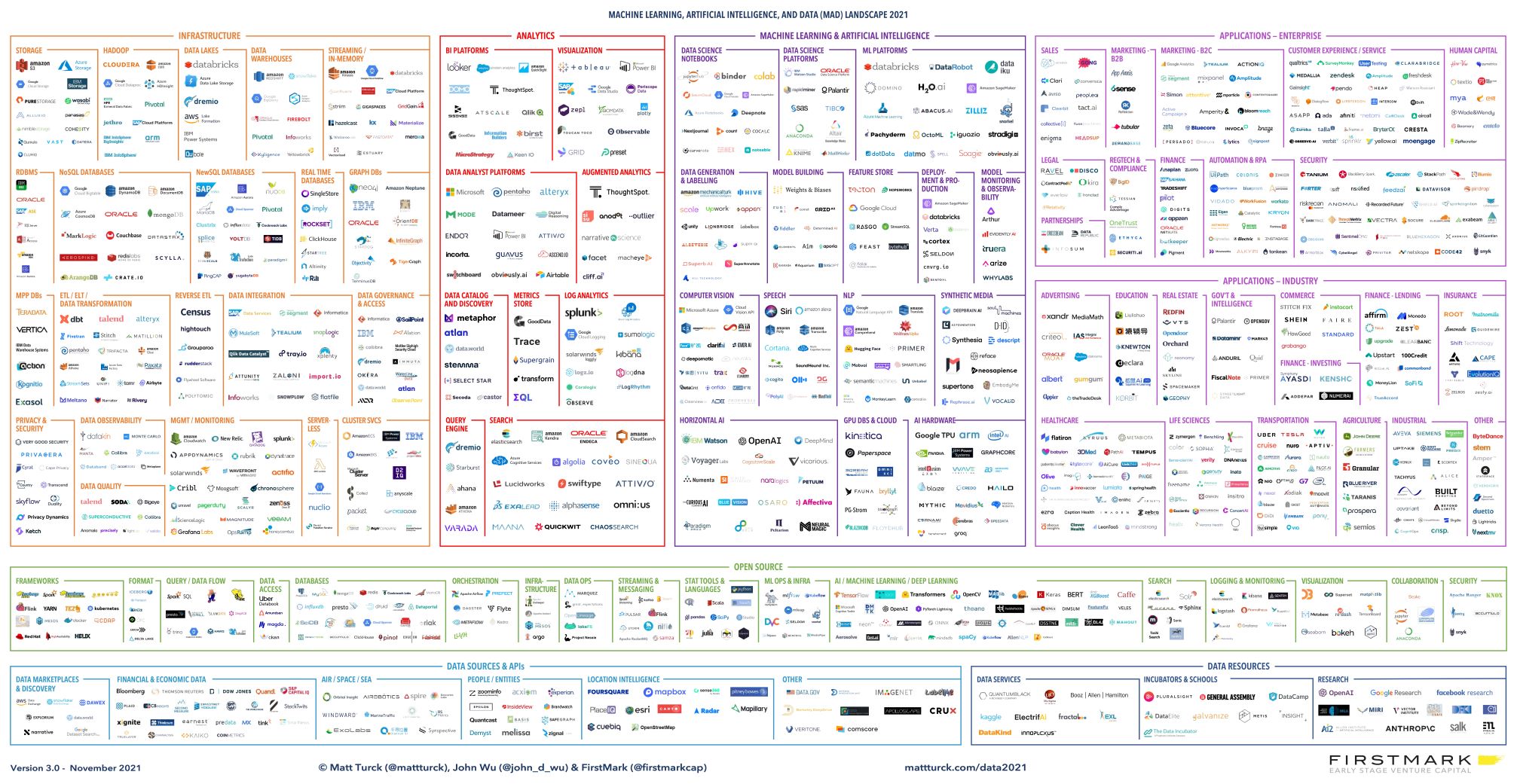

The 2021 Machine Learning, AI and Data (MAD) Landscape

Coming down from the lofty thought leader clouds, I’m going to pick ML monitoring as my straw man case study since this is an area that I’m personally interested in, having unspectacularly failed to launch a startup in this space a couple of years ago. For the sake of this blog post, ML monitoring involves tracking the performance or quality of your machine learning model. At their core, ML models predict things, and then we later find out information about those things. An ad ranking model may predict that a user will click on an ad, and then we later fire an event if the user clicked. A forecasting model may predict the number of units that will be sold next month, and at the end of next month we calculate how many units were sold. The model performance corresponds to how accurately the predictions match the eventual outcomes, or ground truth.

The way most ML monitoring tools work is that you have to send them your model predictions and send them your ground truth. The tools then calculate various performance metrics like accuracy, precision, and recall. You often get some nice plots of these metrics, you can slice and dice along various dimensions, set up alerts, etc.

While this is all well and good and there are many companies raising millions of dollars to do this, I would argue that standard ML performance metrics (the aforementioned accuracy, precision, recall, and so on) are not the best metrics to be measuring. Ideally, your ML model is impacting the world in some way, and what you actually care about are some business metrics that are either related to or downstream of the model. For a fraud detection model, you may want to weight your false positives and false negatives by some cost or financial loss. In order to monitor these other business metrics, you need to get that data into the ML monitoring system. And this is annoying.
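To make the fraud example concrete, here is a minimal Python sketch of a cost-weighted metric. Everything in it is an assumption for illustration: the `Transaction` fields, the flat `REVIEW_COST` charged per false positive, and the choice to charge the full transaction amount for each false negative. A real cost model would depend on the business.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    predicted_fraud: bool
    actual_fraud: bool
    amount: float  # transaction amount in dollars


# Hypothetical cost: flat price of manually reviewing a legitimate
# transaction that the model wrongly flagged.
REVIEW_COST = 5.0


def cost_of_errors(transactions):
    """Total dollar cost of the model's mistakes."""
    total = 0.0
    for t in transactions:
        if t.predicted_fraud and not t.actual_fraud:
            # False positive: we pay for a needless review.
            total += REVIEW_COST
        elif not t.predicted_fraud and t.actual_fraud:
            # False negative: assume we lose the full amount.
            total += t.amount
    return total


def accuracy(transactions):
    """Plain accuracy, for comparison."""
    correct = sum(t.predicted_fraud == t.actual_fraud for t in transactions)
    return correct / len(transactions)


transactions = [
    Transaction(predicted_fraud=True, actual_fraud=True, amount=120.0),
    Transaction(predicted_fraud=True, actual_fraud=False, amount=40.0),
    Transaction(predicted_fraud=False, actual_fraud=True, amount=900.0),
    Transaction(predicted_fraud=False, actual_fraud=False, amount=15.0),
]

print(accuracy(transactions))        # 0.5
print(cost_of_errors(transactions))  # 905.0
```

Both errors count equally toward accuracy, but the single missed fraud accounts for almost all of the dollar cost. Note that the `amount` column is exactly the kind of extra data that has to reach the monitoring system.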

For every ML model that I have ever deployed, I end up writing complex SQL that joins across many tables. I may even need to query multiple databases and then stitch the results together with Python. I don’t want to have to send all of my data to some third party tool; I’d rather the tool come to my data.

I ran a survey a couple of years ago, and many people either manually monitored their ML models or automated a SQL query or script. If that’s the case, then why use a SaaS company for monitoring?

You could make the argument that an ML monitoring tool provides a unified way to monitor ML models such that you don’t have to rewrite SQL queries and reinvent the wheel for each new model that you deploy. You know, mo’ models, mo’ problems.

I don’t think this is where the value lies, and I don’t think this is what you should be doing. For any new type of model “use case” that you deploy, you should probably be thinking deeply about the metrics that matter for measuring the model’s business impact rather than resting on the laurels of the bare F1 score.

I would argue that the real benefit of ML monitoring tools is that they provide advanced infrastructure that allows you to monitor performance in real time. This is a requirement for models that are producing real time predictions and carry enough risk that you need to monitor the model quicker than some fixed schedule. To monitor in close to “real time”, you will need a system that does two particular things with low latency:

- Deduplicate and join high cardinality keys for data that comes from different sources and arrives at different points in time.

- Calculate aggregate statistics over specific time windows.
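The two requirements above can be sketched in a few lines of in-memory Python. This is a toy under stated assumptions, not how any vendor or streaming database actually implements it: the `TrailingAccuracy` class and its method names are hypothetical, state is never evicted, and the aggregate is recomputed on every read rather than maintained incrementally.

```python
from datetime import datetime, timedelta


class TrailingAccuracy:
    """Toy monitor: dedupe + join by key, then aggregate over a window."""

    def __init__(self, window):
        self.window = window
        self.predictions = {}   # lead_id -> (label, predicted_at)
        self.converted = set()  # lead_ids with a known conversion

    def on_prediction(self, lead_id, label, predicted_at):
        # Deduplicate: keep only the first prediction seen per lead.
        self.predictions.setdefault(lead_id, (label, predicted_at))

    def on_conversion(self, lead_id):
        # Ground truth may arrive long after the prediction.
        self.converted.add(lead_id)

    def accuracy(self, now):
        # Aggregate over predictions made in the trailing window.
        cutoff = now - self.window
        in_window = [
            (lead_id, label)
            for lead_id, (label, ts) in self.predictions.items()
            if cutoff < ts <= now
        ]
        if not in_window:
            return None
        # "Join" each prediction to its (possibly missing) conversion.
        correct = sum(
            (label == 1) == (lead_id in self.converted)
            for lead_id, label in in_window
        )
        return correct / len(in_window)


now = datetime(2022, 4, 1, 12, 0)
monitor = TrailingAccuracy(window=timedelta(hours=3))
monitor.on_prediction("a", 1, now - timedelta(hours=2))
monitor.on_prediction("a", 1, now - timedelta(hours=2))  # duplicate event
monitor.on_prediction("b", 0, now - timedelta(hours=1))
monitor.on_prediction("c", 1, now - timedelta(hours=5))  # outside window
monitor.on_conversion("a")  # predicted 1, converted: correct
monitor.on_conversion("b")  # predicted 0, converted: wrong

print(monitor.accuracy(now))  # 0.5
```

The hard part in production is doing this for many keys, across systems, with bounded memory and low latency, which is the infrastructure piece the tools are selling.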

Let me be more concrete. Imagine I build a conversion prediction model for my SaaS company. Users land on the landing page, they give me their email address, and then some of them purchase a subscription while others don’t. I may store a lead’s email address in Postgres, I predict whether they’ll convert and fire an event to my eventing system, and then I record their conversion information in Postgres.

If I want to calculate the accuracy of my conversion prediction model, I need to join each lead’s data between Postgres and wherever my events live. The conversion information may arrive much later than either the lead or prediction information. I then need to aggregate this data across some window of time in order to calculate the model accuracy.

In SQL, the trailing 3 hour accuracy would look something like

    SELECT
      -- Count distinct leads, since we may fire
      -- duplicate prediction events.
      COUNT(DISTINCT
        IFF(
          -- True Positive
          (predictions.label = 1 AND conversions.lead_id IS NOT NULL)
          -- True Negative
          OR (predictions.label = 0 AND conversions.lead_id IS NULL)
          , leads.id
          , NULL
        )
      )::FLOAT / COUNT(DISTINCT leads.id) AS accuracy
    FROM leads
    -- Predictions may come from some event system, while
    -- leads + conversions may be in the OLTP DB.
    JOIN predictions ON predictions.lead_id = leads.id
    LEFT JOIN conversions ON conversions.lead_id = leads.id
    WHERE
      leads.created_at > NOW() - INTERVAL '3 hours'
    -- NOTE: we're ignoring the bias incurred by recent leads
    -- which likely haven't had time to convert since we're
    -- assuming that they didn't convert. When the lack of a
    -- prediction label is a label, time plays an important role!

Sure, I can shove all my data into Snowflake and run a query to do this. But I can’t do this for recent data with low enough latency that this feels like a real time calculation. ML monitoring tools do this for you.
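For anyone who wants to poke at the accuracy query above, here is a runnable adaptation using Python’s built-in `sqlite3`. It is a sketch under several assumptions. SQLite stands in for the real databases, `CASE` replaces `IFF`, and `CAST` replaces `::FLOAT`. The table contents are made up. The `maturity` cutoff is an illustrative addition that excludes leads too young to have plausibly converted, which is one possible way to reduce the bias flagged in the query’s closing note.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE leads (id INTEGER PRIMARY KEY, created_at TEXT);
CREATE TABLE predictions (lead_id INTEGER, label INTEGER);
CREATE TABLE conversions (lead_id INTEGER);
""")

NOW = "2022-04-01 12:00:00"  # fixed "now" so the example is reproducible

conn.executemany("INSERT INTO leads VALUES (?, ?)", [
    (1, "2022-04-01 09:30:00"),
    (2, "2022-04-01 10:00:00"),
    (3, "2022-04-01 11:50:00"),  # very recent lead
    (4, "2022-04-01 08:00:00"),  # older than 3 hours
])
conn.executemany("INSERT INTO predictions VALUES (?, ?)", [
    (1, 1), (1, 1),  # duplicate prediction event
    (2, 0), (3, 1), (4, 1),
])
conn.executemany("INSERT INTO conversions VALUES (?)", [(1,)])

QUERY = """
SELECT
  CAST(COUNT(DISTINCT CASE
    WHEN (p.label = 1 AND c.lead_id IS NOT NULL)
      OR (p.label = 0 AND c.lead_id IS NULL)
    THEN l.id END) AS REAL) / COUNT(DISTINCT l.id) AS accuracy
FROM leads l
JOIN predictions p ON p.lead_id = l.id
LEFT JOIN conversions c ON c.lead_id = l.id
WHERE l.created_at > datetime(:now, '-3 hours')
  -- Hypothetical maturity cutoff: skip leads that are too new.
  AND l.created_at <= datetime(:now, :maturity)
"""


def trailing_accuracy(conn, now, maturity="-30 minutes"):
    row = conn.execute(QUERY, {"now": now, "maturity": maturity}).fetchone()
    return row[0]


print(trailing_accuracy(conn, NOW))                # 1.0
print(trailing_accuracy(conn, NOW, "+0 minutes"))  # 2 of 3 leads correct
```

With no cutoff, lead 3 is counted as a wrong prediction only ten minutes after it was created. This runs fine as a batch job; it says nothing about doing the same thing with low latency on fresh data, which is the actual problem.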

If we zoom back out to other areas that I mentioned earlier in this post, such as feature stores and metrics layers, we can see that they have the same two requirements as an ML monitoring system.

Imagine we build a feature in our feature store for the conversion prediction model which is “the trailing 3 hour conversion rate for leads referred by Facebook”. This is an aggregate statistic over a 3 hour time window. For training data in our feature store, we need to calculate this feature value as of the time of each previous prediction. You don’t want to leak future data into the past, and this is easy to do when calculating conversion rates since conversions happen some time after the prediction time. For real time serving of the feature, we need to calculate the current value of this feature with low latency.
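Here is a minimal Python sketch of the point-in-time version of that feature. The record layout (`source`, `created_at`, `converted_at`) and the function name are assumptions for illustration. The key detail is that a conversion only counts if it had already happened at `as_of`, which is what keeps future data out of past feature values.

```python
from datetime import datetime, timedelta


def trailing_conversion_rate(leads, as_of, window=timedelta(hours=3),
                             source="facebook"):
    """Conversion rate for `source` leads created in the trailing window,
    using only information that was available at `as_of`."""
    in_window = [
        lead for lead in leads
        if lead["source"] == source
        and as_of - window < lead["created_at"] <= as_of
    ]
    if not in_window:
        return None
    converted = sum(
        1 for lead in in_window
        # Ignore conversions that happened after `as_of`.
        if lead["converted_at"] is not None and lead["converted_at"] <= as_of
    )
    return converted / len(in_window)


leads = [
    {"source": "facebook", "created_at": datetime(2022, 4, 1, 10, 0),
     "converted_at": datetime(2022, 4, 1, 11, 0)},
    {"source": "facebook", "created_at": datetime(2022, 4, 1, 11, 0),
     "converted_at": datetime(2022, 4, 1, 13, 0)},
    {"source": "facebook", "created_at": datetime(2022, 4, 1, 8, 0),
     "converted_at": datetime(2022, 4, 1, 8, 30)},
    {"source": "google", "created_at": datetime(2022, 4, 1, 11, 30),
     "converted_at": datetime(2022, 4, 1, 11, 45)},
]

# At noon the second lead has not converted yet, so it counts as a miss.
print(trailing_conversion_rate(leads, datetime(2022, 4, 1, 12, 0)))   # 0.5
# At 13:30 only the second lead is in the window, and it has converted.
print(trailing_conversion_rate(leads, datetime(2022, 4, 1, 13, 30)))  # 1.0
```

The same function covers both cases: pass a historical prediction time as `as_of` for training data, or the current time for serving. The sketch ignores the latency requirement entirely.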

So how do we do these calculations? We can use separate vendors for each use case (ML monitoring, feature store, metrics layer, etc.). We could also try to build a general system ourselves, although it’s honestly not super easy right now. Next generation databases like ClickHouse and Druid are options. I’m particularly excited about Materialize. It’s simple like Postgres, it’s easy to integrate with various data sources, and they’re making moves to horizontally scale. Lest you think I’m just a crank, Materialize recently wrote a blog post (and provided code) about a proof of principle feature store built on Materialize (though this doesn’t prove that I’m not a crank).

To be clear, I’m not trying to pull a “build your own Dropbox”. All these vendors surely provide significant value. Materialize likely won’t be able to satisfy some teams’ latency requirements, there are complicated issues around ACLs and PII, intelligent alerting is tricky, there are smart things you can do on top of these systems like model explainability, etc. My argument is that there are likely a fair number of companies that can get by just fine with building their own system on a performant database. Only some fraction of companies need to solve these niche problems, and it’s an even smaller fraction that have more stringent requirements than a streaming database can solve for.

If a streaming database becomes the substrate on which to build things like ML monitoring and feature stores, then how should we build such tools? If you take out the infrastructure piece that is a core part of vendors’ solutions right now, then what’s left? I guess it’s mostly software that’s left? Vendors can (and will) provide this software, but that decreases their value proposition.

You can write your own code to manage your own ML monitoring system. I think this scenario seems ripe for an open source framework. I’d love to see some opinionated framework around how to build an ML monitoring tool in SQL. Get me set up with best practices, and allow me to avoid some boilerplate. I guess you can monetize by offering a managed solution if you really want to. I don’t, so maybe I’ll try to put some open source code where my mouth is.

–

Author’s Notes:

- I should note that I have never actually used dbt. As a former physicist, I do feel confident that I understand this area outside of my expertise well enough to opine on it, though.

- Speaking of unfounded confidence, you’ll note that I didn’t really delve into metrics layers. I don’t know that much about them, but it seems like they fit this same paradigm as feature stores and ML monitoring tools? Besides, the analytics engineering world is much bigger than the ML world, so mentioning metrics layers increases the top of my blog funnel.