What every data scientist should know about the shell

It’s now 50 years old, and we still can’t figure out what to call it. Command line, shell, terminal, bash, prompt, or console? We will refer to it as the command line to keep things consistent.

The article will focus on the UNIX-style (Linux & Mac) command line and ignore the rest (like Windows’s command processor and PowerShell) for clarity. We’ve observed that most data scientists are on UNIX-based systems these days.

The command line is a text-based interface to your computer. You can think of it as “popping the hood” of an operating system. Some people mistake it for a relic of the past, but don’t be fooled. The modern command line is rocking like never before!

Back in the day, text-based input and output were all you got (after punch cards, that is). Like the very first cars, the first operating systems didn’t even have a hood to pop. Everything was in plain sight. In this environment, the so-called REPL (read-eval-print loop) method was the natural way to interact with a computer.

REPL means that you type in a command, press enter, and the command is evaluated immediately. It’s different from the edit-run-debug or edit-compile-run-debug loops, which you commonly use for more complicated programs.

The command line generally follows the UNIX philosophy of “Make each program do one thing well”, so basic commands are very straightforward. The fundamental premise is that you can do complex things by combining these simple programs. The old UNIX neckbeards refer to it as “having a conversation with the computer.”

Almost any programming language in the world is more powerful than the command line, and most point-and-click GUIs are simpler to learn. Why would you even bother doing anything on the command line?

The first reason is speed. Everything is at your fingertips. For telling the computer to do simple tasks like downloading a file, renaming a bunch of folders with a specific prefix, or performing a SQL query on a CSV file, you really can’t beat the agility of the command line. The learning curve is there, but it’s like magic once you have internalized a basic set of commands.

The second reason is agnosticism. Whatever stack, platform, or technology you’re currently using, you can interact with it from the command line. It’s like the glue between all things. It is also ubiquitous. Wherever there’s a computer, there’s also a command line somewhere.

The third reason is automation. Unlike in GUIs, everything done in the command line can eventually be automated. There is zero ambiguity between the instructions and the computer. All those repeated clicks in the GUI-based tools that you waste your life on can be automated in a command-line environment.

The fourth reason is extensibility. Unlike GUIs, the command line is very modular. The simple commands are perfect building blocks to create complex functionality for myriads of use-cases, and the ecosystem is still growing after 50 years. The command line is here to stay.

The fifth reason is that there are no other options. It is common that some of the more obscure or bleeding-edge features of a third-party service are not accessible via a GUI at all and can only be used through a CLI (Command Line Interface).

There are roughly four layers in how the command line works:

Terminal = The application that grabs the keyboard input, passes it to the program being run (e.g. the shell), and renders the results back. As all modern computers have graphical user interfaces (GUI) these days, the terminal is a necessary GUI frontend layer between you and the rest of the text-based stack.

Shell = A program that parses the keystrokes passed by the terminal application and handles running commands and programs. Its job is basically to find where the programs are, take care of things like variables, and also provide fancy completion with the TAB key. There are different options like Bash, Dash, Zsh, and Fish, to name a few, all with slightly different sets of built-in commands and options.

Command = A computer program interacting with the operating system. Common examples are commands like ls, mkdir, and rm. Some are prebuilt into the shell, some are compiled binary programs on your disk, some are text scripts, and some are aliases pointing to another command, but at the end of the day, they are all just computer programs.

Operating system = The program that executes all other programs. It handles the direct interaction with all the hardware like the CPU, hard disk, and network.

The command line tends to look slightly different for everyone.

There is usually one thing in common, though: the prompt, likely represented by the dollar sign ($). It is a visual cue for where the status ends and where you can start typing in your commands.

On my computer, the command line says:

juha@ubuntu:~/hey$

Here juha is my username, ubuntu is my computer name, and ~/hey is my current working directory.

And what’s up with that tilde (~) character? What does it even mean that the current directory is ~/hey?

Tilde is shorthand for the home directory, a place for all your personal files. My home directory is /home/juha, so my current working directory is /home/juha/hey, which shortens to ~/hey. (The convention ~username refers to someone’s home directory in general; ~juha refers to my home directory and so on.)
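As a quick check of the tilde shorthand, the following sketch (assuming a POSIX-style shell with the HOME variable set) shows that an unquoted ~ expands to the home directory, while a quoted one stays literal.

```shell
# Unquoted tilde is expanded by the shell to your home directory.
echo ~

# The same location is available in the HOME environment variable.
echo "$HOME"

# Quoting suppresses the expansion, so this prints a literal tilde.
echo '~'
```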

From now on, we will omit everything except the dollar sign from the prompt to keep our examples cleaner.

Earlier, we described commands simply as computer programs interacting with the operating system. While correct, let’s be more specific.

When you type something after the prompt and press enter, the shell program will attempt to parse and execute it. Let’s say:

$ generate million dollars

generate: command not found

The shell program takes the first whole word, generate, and considers that a command.

The two remaining words, million and dollars, are interpreted as two separate parameters (sometimes called arguments).

Now the shell program, whose responsibility is to facilitate the execution, goes looking for a generate command. Sometimes it’s a file on a disk and sometimes something else. We’ll discuss this in detail in our next chapter.

In our example, no command called generate is found, and we end up with an error message (this is expected).
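To see how the shell splits a line into a command and its parameters, here is a small sketch. The count_args function is a hypothetical helper defined only for this illustration; it reports how many parameters it received.

```shell
# Hypothetical helper: prints the number of parameters it was given.
count_args() { echo "$# parameter(s)"; }

count_args million dollars      # two separate words, two parameters
count_args "million dollars"    # quoted, so the shell passes one parameter
```

The second call hints at why quoting matters whenever a parameter contains spaces.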

Let’s run a command that truly works:

$ df --human-readable

Filesystem Size Used Avail Use% Mounted on

sysfs 0 0 0 - /sys

proc 0 0 0 - /proc

udev 16G 0 16G 0% /dev

. . .

Here we run a command “df” (short for disk free) with the “--human-readable” option.

It is common to use “-” (dash) in front of the abbreviated option and “--” (double-dash) for the long form. (These conventions have evolved over time; see this blog post for more information.)

For example, these are the same thing:

$ df -h

$ df --human-readable

You can generally also merge multiple abbreviated options after a single dash.

df -h -l -a

df -hla

Note: The formatting is ultimately up to each command to decide, so don’t assume these rules are universal.

Since some characters like space or backslash have a special meaning, it is a good idea to wrap string parameters in quotes. For bash-like shells, there is a difference between single (') and double quotes ("), though. Single quotes take everything literally, while double quotes allow the shell program to interpret things like variables. For example:

$ testvar=13

$ echo "$testvar"

13

$ echo '$testvar'

$testvar

If you want to know all the available options, you can usually get a listing with the --help parameter.
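For instance, with the GNU version of df found on most Linux systems (an assumption; BSD and macOS variants may only offer a manual page via man df), the usage text can be printed like this:

```shell
# Show the first few lines of df's built-in usage text (GNU coreutils assumed).
df --help | head -n 5
```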

Tip: A common thing to type into the command line is a long file path. Most shell programs offer the TAB key to auto-complete paths or commands to avoid repetitive typing. Try it out!

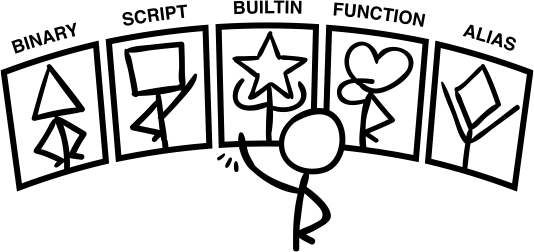

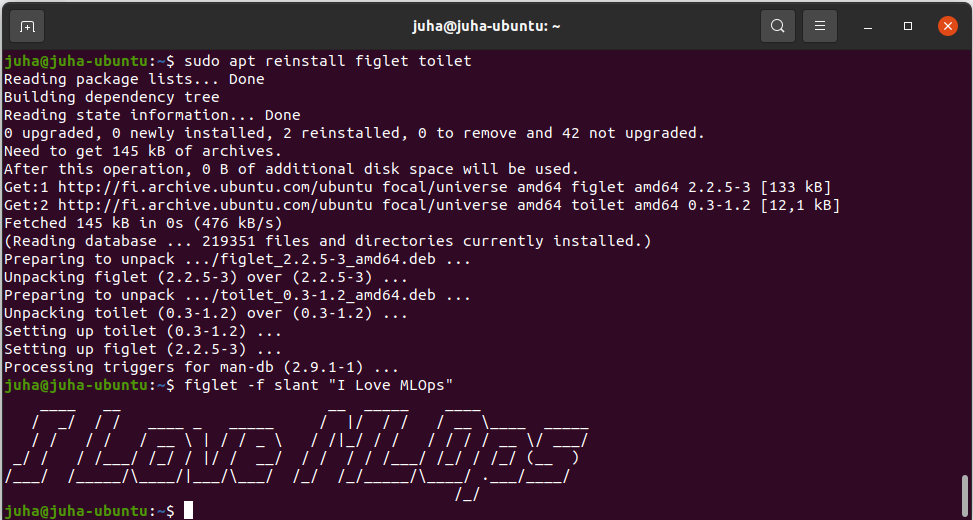

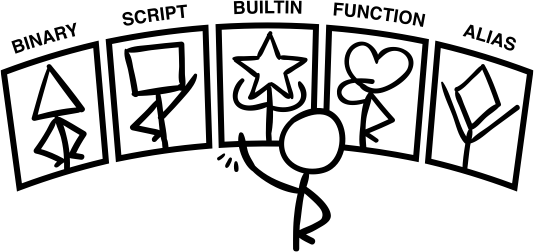

There are five different types of commands: binary, script, builtin, function, and alias.

We can split them into two categories, file-based and virtual.

Binary and script commands are file-based and executed by creating a new process (an operating system concept for a new program). File-based commands tend to be more complex and heavyweight.

Builtins, functions, and aliases are virtual, and they are executed within the existing shell process. These commands are mostly simple and lightweight.
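One way to peek at how your shell resolves a name is the POSIX command -v builtin. In this sketch, greet is a throwaway function defined just for the demonstration, and the exact path printed for ls depends on your system (in an interactive shell where ls is aliased, the alias definition is printed instead).

```shell
# A throwaway function, defined only for this demonstration.
greet() { echo 'hey, world'; }

command -v ls      # file-based command: prints a path such as /usr/bin/ls
command -v cd      # builtin: prints just the name
command -v greet   # function: prints just the name
```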

A binary is a classic executable program file. It contains binary instructions only understood by the operating system. You’ll get gibberish if you try to open it with a text editor. Binary files are created by compiling source code into the executable binary file. For example, the Python interpreter command python is a binary executable.

For binary commands, the shell program is responsible for finding the actual binary file from the file system that matches the command name. Don’t expect the shell to go looking everywhere on your machine for a command, though. Instead, the shell relies on an environment variable called $PATH, which is a colon-delimited (:) list of paths to iterate over. The first match is always chosen.

To inspect your current $PATH, print it with echo "$PATH".
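Since a long $PATH is hard to read on one line, a small sketch like the following prints each entry on its own line, in the order the shell searches them. It assumes only the standard tr utility.

```shell
# Print the raw, colon-delimited value.
echo "$PATH"

# Print one directory per line, in search order.
echo "$PATH" | tr ':' '\n'
```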

If you want to figure out where the binary file for a certain command is, you can call the which command.

$ which python

/home/juha/.pyenv/shims/python

Now that you know where to find the file, you can use the file utility to identify the general type of the file.

$ file /home/juha/.pyenv/shims/pip

/home/juha/.pyenv/shims/pip: Bourne-Again shell script text executable, ASCII text

$ file /usr/bin/python3.9

/usr/bin/python3.9: ELF 64-bit LSB executable, x86-64, version 1 (SYSV), dynamically linked, interpreter /lib64/ld-linux-x86-64.so.2, for GNU/Linux 3.2.0, stripped

A script is a text file containing a human-readable program. Python, R, or Bash scripts are some common examples, which you can execute as a command.

Usually we don’t execute our Python scripts as commands but use the interpreter like this:

$ python hey.py

Hey world

Here python is the command, and hey.py is just a parameter for it. (If you look at what python --help says, you can see it corresponds to the variation “file: program read from script file”, which really does make sense here.)

But we can also execute hey.py directly as a command:

$ ./hey.py

Hey world

For this to work, we need two things. Firstly, the first line of hey.py needs to define a script interpreter using a special #! notation.

#!/usr/bin/env python3

print("Hey world")

The #! notation tells the operating system which program knows how to interpret the text in the file. It has many cool nicknames like shebang, hashbang, or my absolute favorite, the hash-pling!

The second thing we need is for the file to be marked executable. You do that with the chmod (change mode) command: chmod u+x hey.py will set the eXecutable flag for the owning User.
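Putting both requirements together, here is a minimal sketch of the same mechanism using a tiny shell script instead of Python, so it runs without a Python installation. The file name hey.sh and the temporary directory are assumptions made for the example.

```shell
# Create a scratch directory so the example leaves your project untouched.
workdir=$(mktemp -d)

# 1) The first line is the shebang naming the interpreter.
printf '%s\n' '#!/bin/sh' 'echo "Hey world"' > "$workdir/hey.sh"

# 2) Mark the file executable for the owning user.
chmod u+x "$workdir/hey.sh"

# Now the script can be run directly as a command.
"$workdir/hey.sh"
```

If you skip the chmod step, the shell typically refuses to run the file with a “Permission denied” error.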

A builtin is a simple command hard-coded into the shell program itself. Commands like cd, echo, alias, and pwd are usually builtins.

If you run the help command (which is also a builtin!), you’ll get a list of all the builtin commands.

A function is like an extra builtin defined by the user. For example:

$ hey() { echo 'hey, world'; }

It can then be used as a command:

$ hey

hey, world

If you want to list all the functions currently available, you can call declare -F (in Bash-like shells).
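In Bash specifically, the declare builtin with the -F option prints the names of the defined functions, one per line (other shells may differ). A short sketch, with the hey function redefined so the snippet stands alone:

```shell
# Define the example function, then list the names of all defined functions.
# Note: declare is a Bash builtin and is not available in plain POSIX sh.
hey() { echo 'hey, world'; }
declare -F
```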

Aliases are like macros: a shorthand or an alternative name for a more complicated command.

For example, say you want a new command showerr to list recent system errors:

$ alias showerr="cat /var/log/syslog"

$ showerr

Apr 27 10:49:20 juha-ubuntu gsd-power[2484]: failed to turn the kbd backlight off: GDBus.Error:org.freedesktop.UPower.GeneralError: error writing brightness

. . .

Since functions and aliases are not physical files, they do not persist after closing the terminal and are usually defined in the so-called profile file ~/.bash_profile or the ~/.bashrc file, which are executed when a new interactive or login shell is started. Some distributions also support a ~/.bash_aliases file (which is likely invoked from the profile file; it’s scripts all the way down!).

If you want to get a list of all the aliases currently active for your shell, you can just call the alias command without any parameters.

Pretty much anything that happens on your computer happens inside processes. Binary and script commands always start a new process. Builtins, functions, and aliases piggyback on the existing shell program’s process.

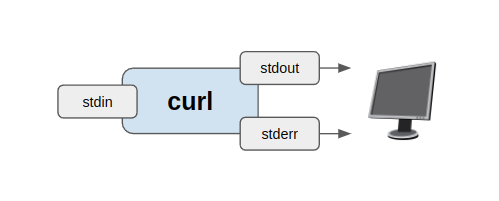

A process is an operating system concept for a running instance of a command (program). Each process gets an ID, its own reserved memory space, and security privileges to do things on your system. Each process also has standard input (stdin), standard output (stdout), and standard error (stderr) streams.
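You can observe process IDs directly. In this sketch, $$ expands to the ID of the current shell process, and running a second shell as a file-based command (sh is assumed to be available, as it is on practically all UNIX-like systems) shows that the child gets its own ID.

```shell
# ID of the shell process you are typing into.
echo "current shell PID: $$"

# sh is a file-based command, so it runs in a new process with a different ID.
# Single quotes keep the current shell from expanding $$ itself.
sh -c 'echo "child shell PID: $$"'
```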

What are these streams? They are simply arbitrary streams of data. No encoding is specified, which means it can be anything: text, video, audio, morse code, whatever the author of the command felt appropriate. Ultimately your computer is just a glorified data transformation machine. Thus it makes sense that every process has an input and output, just like functions do. It also makes sense to separate the output stream from the error stream. If your output stream is a video, then you don’t want the bytes of the text-based error messages to get mixed with your video bytes (or, as in the 1970s when the standard error stream was introduced, to have your phototypesetting ruined by error messages being typeset instead of shown on the terminal).

By default, the stdout and stderr streams are piped back into your terminal, but these streams can be redirected to files or piped to become an input of another process. In the command line, this is done by using special redirection operators (|, >, <, >>).

Let’s start with an example. The curl command downloads a URL and directs its standard output back into the terminal by default.

$ curl https://filesamples.com/samples/doc/csv/sample1.csv

"May", 0.1, 0, 0, 1, 1, 0, 0, 0, 2, 0, 0, 0

"Jun", 0.5, 2, 1, 1, 0, 0, 1, 1, 2, 2, 0, 1

"Jul", 0.7, 5, 1, 1, 2, 0, 1, 3, 0, 2, 2, 1

"Aug", 2.3, 6, 3, 2, 4, 4, 4, 7, 8, 2, 2, 3

"Sep", 3.5, 6, 4, 7, 4, 2, 8, 5, 2, 5, 2, 5

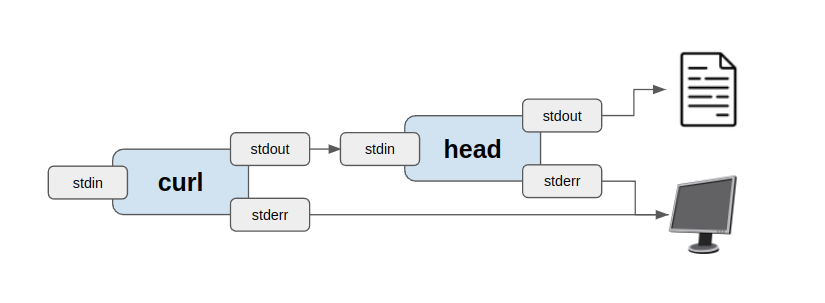

Let’s say we only want the first three rows. We can do this by piping two commands together using the piping operator (|). The standard output of the first command (curl) is piped as the standard input of the second (head). The standard output of the second command (head) still goes to the terminal by default.

$ curl https://filesamples.com/samples/doc/csv/sample1.csv | head -n 3

"May", 0.1, 0, 0, 1, 1, 0, 0, 0, 2, 0, 0, 0

"Jun", 0.5, 2, 1, 1, 0, 0, 1, 1, 2, 2, 0, 1

"Jul", 0.7, 5, 1, 1, 2, 0, 1, 3, 0, 2, 2, 1

Usually, you want data on the disk instead of your terminal. We can achieve this by redirecting the standard output of the last command (head) into a file called foo.csv using the > operator.

$ curl https://filesamples.com/samples/doc/csv/sample1.csv | head -n 3 > foo.csv
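The same operators can be tried without a network connection. The sketch below uses printf as a stand-in data source and a temporary file (both assumptions made for the example) to show |, >, >>, and < in turn.

```shell
outfile=$(mktemp)

# Pipe: printf's standard output becomes head's standard input.
printf 'May\nJun\nJul\nAug\n' | head -n 3

# > writes standard output to a file, replacing any previous content.
printf 'May\nJun\n' > "$outfile"

# >> appends to the file instead of replacing it.
printf 'Jul\n' >> "$outfile"

# < feeds the file to a command as its standard input; wc -l counts its lines.
wc -l < "$outfile"
```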

Finally, a process always returns a value when it ends. When the return value is zero (0), we interpret it as successful execution. If it returns any other number, it means that the execution had an error and quit prematurely. For example, any Python exception which is not caught by try/except has the Python interpreter exit with a non-zero code.

You can check what the return value of the previously executed command was using the $? variable.

$ curl http://fake-url

curl: (6) Could not resolve host: fake-url

$ echo $?

6
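The same check works for any command. In the sketch below, report is a hypothetical helper that runs a command, silences its output, and prints the exit code; true and false are standard utilities that always exit with 0 and 1 respectively.

```shell
# Hypothetical helper: run a command and print its exit code.
report() {
  "$@" > /dev/null 2>&1 && code=0 || code=$?
  echo "$1 exited with $code"
}

report true             # success
report false            # generic failure
report sh -c 'exit 3'   # a program may choose any non-zero code
```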

Previously we piped two commands together with streams, which means they ran in parallel. The return value of a command is important when we combine two commands using the && operator. This means that we wait for the previous command to succeed before moving on to the next. For example:

cp /tmp/apple.png /tmp/usedA.png && cp /tmp/apple.png /tmp/usedB.png && rm /tmp/apple.png

Here we try to copy the file /tmp/apple.png to two different locations and finally delete the original file. Using the && operator means that the shell program checks the return value of each command and asserts that it is zero (success) before it moves on. This protects us from accidentally deleting the file at the end.

If you’re interested in writing longer shell scripts, now is a good time to take a small detour to the land of the Bash “strict mode” to save yourself from a lot of headache.

Often when a data scientist ventures out into the command line, it is because they use the CLI (Command Line Interface) tool provided by a third-party service or a cloud operator. Common examples include downloading data from AWS S3, executing some code on a Spark cluster, or building a Docker image for production.

It is not very useful to always manually memorize and type these commands over and over again. It is not only painful but also a bad practice from a teamwork and version control perspective. One should always document the magic recipes.

For this purpose, we recommend using one of the classics, all the way from 1976, the make command. It is a simple, ubiquitous, and robust command which was originally created for compiling source code but can be weaponized for executing and documenting arbitrary scripts.

The default way to use make is to create a text file called Makefile in the root directory of your project. You should always commit this file into your version control system.

Let’s create a very simple Makefile with just one “target”. They are called targets due to the history with compiling source code, but you should think of a target as a task.

Makefile

hey:
	echo "Hey world!"

Now, remember we said this is a classic from 1976? Well, it’s not without its quirks. You have to be very careful to indent that echo statement with a tab character, not any number of spaces. If you don’t do that, you’ll get a “missing separator” error.

To execute our “hey” target (or task), we call:

$ make hey

echo "Hey world!"

Hey world!

Notice how make also prints out the recipes and not just the output. You can limit the output by using the -s parameter.

$ make -s hey

Hey world!

Next, let’s add something useful like downloading our training data.

Makefile

hey:
	echo "Hey world!"

get-data:
	mkdir -p .data
	curl https://filesamples.com/samples/doc/csv/sample1.csv > .data/sample1.csv
	echo "Downloaded .data/sample1.csv"

Now we can download our example training data with:

$ make -s get-data

Downloaded .data/sample1.csv

(Aside: The more seasoned Makefile wizards among our readership would note that get-data should really be named .data/sample1.csv to take advantage of Makefile’s shorthands and data dependencies.)

Finally, we’ll look at an example of what a simple Makefile in a data science project might look like, so we can demonstrate how to use variables with make and get you more inspired:

Makefile

DOCKER_IMAGE := mycompany/myproject
VERSION := $(shell git describe --always --dirty --long)

default:
	echo "See readme"

init:
	pip install -r requirements.txt
	pip install -r requirements-dev.txt
	cp -u .env.template .env

build-image:
	docker build . \
		-f ./Dockerfile \
		-t $(DOCKER_IMAGE):$(VERSION)

push-image:
	docker push $(DOCKER_IMAGE):$(VERSION)

pin-dependencies:
	pip install -U pip-tools
	pip-compile requirements.in
	pip-compile requirements-dev.in

upgrade-dependencies:
	pip install -U pip pip-tools
	pip-compile -U requirements.in
	pip-compile -U requirements-dev.in

This example Makefile would allow your team members to initialize their environment after cloning the repository, pin the dependencies when they introduce new libraries, and deploy a new Docker image with a nice version tag.

If you consistently provide a nice Makefile along with a well-written readme in your code repositories, it will empower your colleagues to use the command line and reproduce all your per-project magic consistently.

Conclusion

At the end of the day, some love the command line and some don’t.

It isn’t everyone’s cup of tea, but every data scientist should try it out and give it a chance. Even if it doesn’t find a place in your daily toolbox, it pays to understand the fundamentals. It’s good to be able to communicate with colleagues who are command-line enthusiasts without getting intimidated by their terminal voodoo.

If the topic got you inspired, I highly recommend checking out the free book Data Science at the Command Line by Jeroen Janssens next.