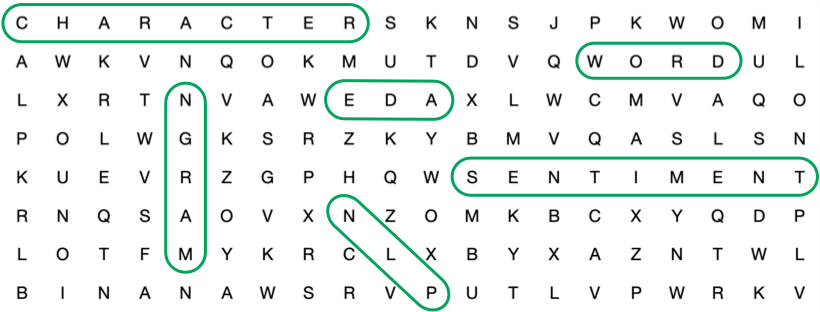

How to perform Exploratory Data Analysis on text data for Natural Language Processing

Exploratory Data Analysis (EDA) for text data is more than counting characters and words. To take your EDA to the next level, you can look at each word and categorize it, or you can analyze the overall sentiment of a text.


In this article, we will look at some intermediate EDA techniques for text data:

- Part-of-Speech Tagging: We'll look at Part-of-Speech (POS) tagging and how to use it to get the most frequent adjectives, nouns, verbs, etc.

- Sentiment Analysis: We'll look at sentiment analysis and explore whether the dataset has a positive or negative tendency.

As in the previous article, we will again use the Women's E-Commerce Clothing Reviews Dataset from Kaggle.

To simplify the examples, we will use 450 positive reviews (rating == 5) and 450 negative reviews (rating == 1). This reduces the number of data points to 900 rows, reduces the number of rating classes to two, and balances the positive and negative reviews.

Additionally, we will only use two columns: the review text and the rating.
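The reduction described above could be sketched with pandas as follows. This is a minimal sketch, assuming the reviews are already loaded into a DataFrame `df` whose columns have been renamed to `text` and `rating` (the raw Kaggle CSV may use different column names). The helper name `balance_reviews` and the `seed` value are illustrative choices, not taken from the original article.

```python
import pandas as pd


def balance_reviews(df: pd.DataFrame, n_per_class: int = 450, seed: int = 42) -> pd.DataFrame:
    """Keep only the text and rating columns and sample n reviews per rating class.

    Hypothetical helper: assumes columns named "text" and "rating".
    """
    # Keep the two columns of interest; drop rows without review text,
    # since the raw data may contain missing texts
    df = df[["text", "rating"]].dropna(subset=["text"])
    positive = df[df["rating"] == 5].sample(n=n_per_class, random_state=seed)
    negative = df[df["rating"] == 1].sample(n=n_per_class, random_state=seed)
    # Concatenate both classes and reset the index to a clean 0..2n-1 range
    return pd.concat([positive, negative]).reset_index(drop=True)


# Usage (assuming df holds the full review dataset):
# df = balance_reviews(df)
# df.head()
```

Sampling the same number of rows per class keeps the two ratings balanced; note that `sample` raises a `ValueError` if a class has fewer than `n_per_class` rows.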

The head of the reduced DataFrame looks like this:

In the basic EDA techniques of the previous article, we covered the most frequent words and bi-grams and noticed that adjectives like "nice" and "good" were among the most frequent words in the positive reviews.

With POS tagging, you could refine the EDA on the most frequent words. For example, you could explore which adjectives or verbs are most common.

POS tagging takes every token in a text and categorizes it as a noun, verb, adjective, etc., as shown below:

If you are curious about how I visualized this sentence, you can check out my tutorial here:

To check which POS tags are the most common, we will start by creating a corpus of all review texts in the DataFrame:

```python
corpus = df["text"].values.tolist()
```

Next, we'll tokenize the entire corpus in preparation for POS tagging.

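```python
from nltk import word_tokenize

# word_tokenize needs NLTK's Punkt models (a one-time nltk.download;
# the exact resource name depends on the NLTK version)
tokens = word_tokenize(" ".join(corpus))
```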

Then, we'll POS tag every token in the corpus with the coarse "universal" tag set:

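```python
import nltk

# Requires the tagger model and the "universal_tagset" mapping
# to be downloaded once via nltk.download
tags = nltk.pos_tag(tokens, tagset="universal")
```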

As in the term frequency analysis of the previous article, we will create a list of words by removing all stopwords. Additionally, we will only include words with a specific tag, e.g., adjectives.

Then all we have to do is use the Counter class as in the previous article.

```python
from collections import Counter

import seaborn as sns
from nltk.corpus import stopwords  # requires nltk.download("stopwords")

tag = "ADJ"
stop = set(stopwords.words("english"))

# Get all tokens that are tagged as adjectives (kept in a new variable
# so the tagged list in `tags` can be reused for other tags)
selected = [word for word, pos in tags if pos == tag and word not in stop]

# Count the most common adjectives
most_common = Counter(selected).most_common(10)

# Visualize the most common words as a bar plot
words, frequency = [], []
for word, count in most_common:
    words.append(word)
    frequency.append(count)

sns.barplot(x=frequency, y=words)
```
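The counting step can also be wrapped into a small reusable function so that the same code works for nouns, verbs, or any other tag. This is a sketch, not the article's own code: the name `most_common_by_tag` and the hand-written example tokens are hypothetical, and lowercasing words before counting is an extra design choice (it merges "Small" and "small").

```python
from collections import Counter


def most_common_by_tag(tagged_tokens, tag="ADJ", stop_words=frozenset(), n=10):
    """Return the n most frequent words carrying a given POS tag.

    tagged_tokens: list of (word, tag) pairs, e.g. the output of nltk.pos_tag.
    Hypothetical helper; words are lowercased before counting.
    """
    selected = [
        word.lower()
        for word, pos in tagged_tokens
        if pos == tag and word.lower() not in stop_words
    ]
    most_common = Counter(selected).most_common(n)
    # Unzip [(word, count), ...] into two parallel sequences for plotting
    words, frequency = zip(*most_common) if most_common else ((), ())
    return list(words), list(frequency)


# Hypothetical tagged tokens, written by hand for illustration
example = [("The", "DET"), ("dress", "NOUN"), ("is", "VERB"), ("small", "ADJ"),
           ("Small", "ADJ"), ("and", "CONJ"), ("tight", "ADJ")]
words, frequency = most_common_by_tag(example, tag="ADJ", stop_words={"and"})
print(words, frequency)  # ['small', 'tight'] [2, 1]
```

With the tagged tokens from `nltk.pos_tag`, a call such as `most_common_by_tag(tags, "VERB", stop)` would return the inputs for `sns.barplot(x=frequency, y=words)`.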

Below, you can see the top 10 most common adjectives for the negative and positive reviews:

From this technique, we can see that words like "small", "fit", "big", and "large" are the most common adjectives in the negative reviews. This might indicate that customers are more disappointed about a piece of clothing's fit than, e.g., about its quality.
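To get separate top-10 lists for negative and positive reviews, the corpus has to be built per rating instead of over the whole DataFrame. A minimal sketch, assuming the reduced DataFrame with `text` and `rating` columns; `corpus_by_rating` is a hypothetical helper name.

```python
import pandas as pd


def corpus_by_rating(df: pd.DataFrame) -> dict:
    """Join all review texts per rating into one string (hypothetical helper).

    Assumes columns "text" and "rating" as in the reduced dataset.
    """
    return {
        rating: " ".join(group["text"].astype(str))
        for rating, group in df.groupby("rating")
    }


# Usage (assuming nltk and its resources are set up as above):
# for rating, text in corpus_by_rating(df).items():
#     tokens = word_tokenize(text)
#     tagged = nltk.pos_tag(tokens, tagset="universal")
#     ...count the adjectives of this rating as before
```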

The main idea of sentiment analysis is to understand whether a text has a positive or negative tone. For example, the sentence "I love this top." has a positive sentiment, and the sentence "I hate the color." has a negative sentiment.

You can use TextBlob for simple sentiment analysis as shown below:

```python
from textblob import TextBlob

blob = TextBlob("I love the cut")
blob.polarity
```

Polarity indicates whether a statement is positive or negative and is a number between -1 (negative) and 1 (positive). The sentence "I love the cut" has a polarity of 0.5, whereas the sentence "I hate the color" has a polarity of -0.8.

The combined sentence "I love the cut but I hate the color" has a polarity of -0.15.
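The value of -0.15 is consistent with TextBlob's default analyzer averaging the polarity scores of the sentiment-bearing words it finds in a text, here the 0.5 contributed by "love" and the -0.8 contributed by "hate" (the per-word scores are inferred from the two single-clause sentences above):

$$
\text{polarity} = \frac{0.5 + (-0.8)}{2} = \frac{-0.3}{2} = -0.15
$$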

For a text with multiple sentences, you can get the polarity of each sentence as shown below:

```python
text = "I love the cut. I get plenty of compliments. I love it."

[sentence.polarity for sentence in TextBlob(text).sentences]
```

This code returns a list of polarities, [0.5, 0.0, 0.5]. This means that the first and last sentences have a positive sentiment, while the second sentence has a neutral sentiment.
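To turn these per-sentence values into one score per review, they can be averaged. The sketch below uses `statistics.fmean` and adds a guard for texts without any sentences, where `np.mean([])` would return `nan` with a warning. Treating such empty texts as neutral (0.0) is an assumption; `mean_polarity` is a hypothetical helper name.

```python
from statistics import fmean


def mean_polarity(sentence_polarities):
    """Average per-sentence polarities into one score per review.

    Hypothetical helper: returns 0.0 (treated as neutral) for texts
    without sentences instead of nan.
    """
    return fmean(sentence_polarities) if sentence_polarities else 0.0


print(mean_polarity([0.5, 0.0, 0.5]))  # 0.3333333333333333
print(mean_polarity([]))               # 0.0
```

It could be applied as `df["text"].map(lambda x: mean_polarity([s.polarity for s in TextBlob(x).sentences]))`. If empty reviews should rather be excluded, returning `float("nan")` and filtering those rows out is an alternative.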

If we apply this sentiment analysis to the whole DataFrame like this,

```python
import numpy as np

# Average the sentence polarities of each review into one score
df["polarity"] = df["text"].map(lambda x: np.mean([sentence.polarity for sentence in TextBlob(x).sentences]))
```

we can plot a boxplot comparison with the following code:

```python
sns.boxplot(data=df, y="polarity", x="rating")
```

Below, you can see the polarity boxplots for the negative and positive reviews:

As you would expect, we can see that negative reviews (rating == 1) have an overall lower polarity than positive reviews (rating == 5).
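To back the visual impression with numbers, the quartiles that each box draws can also be printed per rating. A sketch, assuming `df` already has the `rating` and `polarity` columns from the steps above; `polarity_summary` is a hypothetical helper name.

```python
import pandas as pd


def polarity_summary(df: pd.DataFrame) -> pd.DataFrame:
    """Return the 25th, 50th, and 75th percentile of polarity per rating.

    Hypothetical helper: these are the values that form each box in the boxplot.
    """
    return df.groupby("rating")["polarity"].describe()[["25%", "50%", "75%"]]


# Usage (assuming the polarity column has been computed):
# polarity_summary(df)
```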

In this article, we looked at some intermediate EDA techniques for text data:

- Part-of-Speech Tagging: We looked at Part-of-Speech tagging and how to use it to get, for example, the most frequent adjectives.

- Sentiment Analysis: We looked at sentiment analysis and explored the polarities of the review texts.

Below, you can find all code snippets for quick copying: