Machine learning and AI require huge amounts of horsepower if you're running them on general-purpose processors, which is why companies like Meta and Alphabet have spent billions developing their own specialized neural network accelerators. Intel wants a piece of the AI-acceleration pie as well, of course, and that is likely part of why the company is going to start shipping such a feature in its upcoming CPUs, starting with its 13th-generation mobile parts.

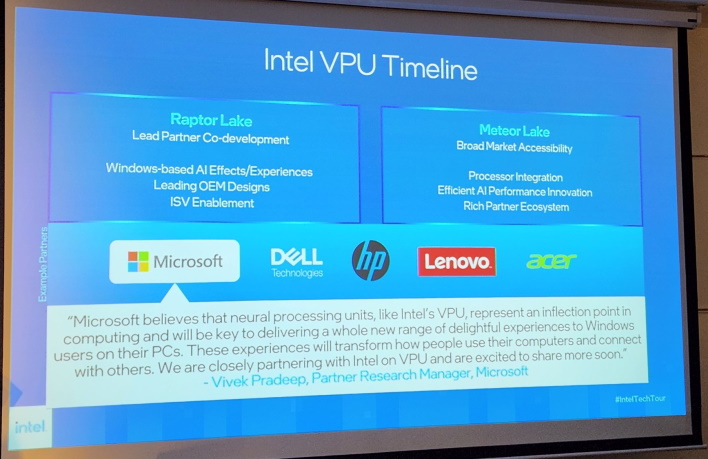

According to a slide shared on Twitter by Bob O’Donnell from TECHnalysis Research, Intel will be adding a different kind of neural-network processor to its 13th-generation Raptor Lake and 14th-generation Meteor Lake CPUs. The presence of a so-called “VPU” in Meteor Lake has been rumored since late last year, but this is the first we have heard of one in Raptor Lake.

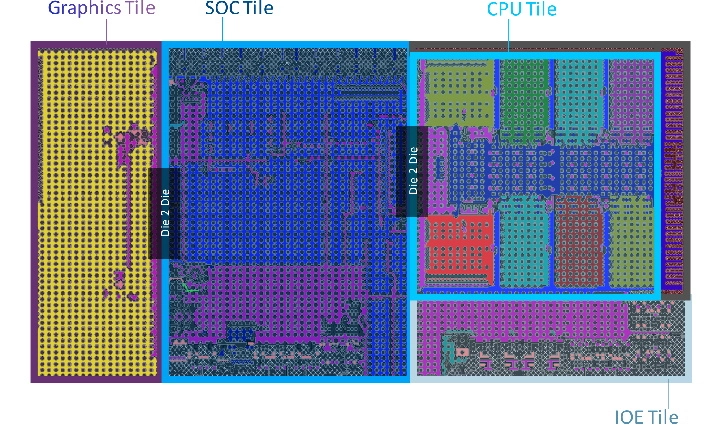

The slide indicates “Lead Partner Co-development,” which sounds to us a lot like the VPU will only appear in products co-developed with Intel’s partners. That generally means that only select laptops will see the feature, so we can expect that specific SKUs of laptops sporting Raptor Lake mobile will include the processor as a separate device. Meanwhile, it is expected to be a standard part of Meteor Lake; Intel says it will have “broad market availability” and “processor integration”.

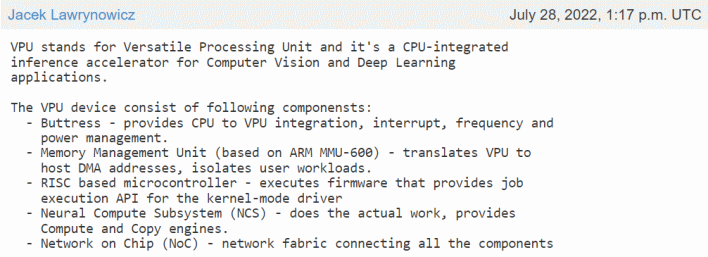

It looks like the last entry in that list is the source for Intel’s VPUs, which means they are likely known most properly as “Visual Processing Units.” However, some sources think Intel will take to calling them “Versatile Processing Units” instead. That name comes from a kernel patch committed by Intel Linux developer Jacek Lawrynowicz at the end of July.

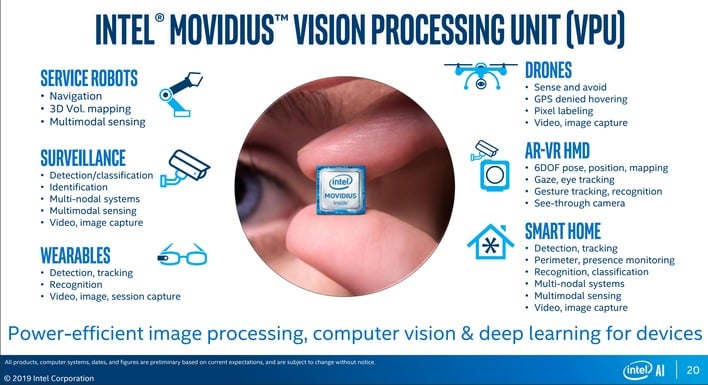

The last thing we heard out of Intel’s Movidius division was the release of the Neural Compute Stick 2, a revamped version of the company’s original product. That is a flash drive-sized USB device that can be bus-powered on USB 2.0 and offers an impressive amount of neural-network acceleration for a one-watt device.

With that in mind, Movidius’ VPU is a perfect fit for a low-power, client-focused AI accelerator. The company’s tech is focused on AI inference, not training, as it is meant to apply pre-trained AI models to difficult problems in computer vision. This could have applications in image processing and computational photography, or be used to enhance security with user presence detection and more. Presumably the “Neural Compute Subsystem” in the Movidius processors could be applied to other neural-network inferencing tasks, too, so the “versatile” moniker might not be entirely off-base.