Next.js offers all kinds of great features. Whether it's the way it generates pages (statically or on server request) or updates them with Incremental Static Regeneration, this framework has many exciting options to attract developers. Out of all of Next.js's features, its SEO support stands out as one of its main advantages over other frameworks such as Create React App.

React is a great library for JavaScript developers, but it is unfortunately quite bad for SEO. The reason is that React apps are client-side rendered by default. Concretely, when a user requests a page, instead of returning the full HTML, the server serves JavaScript, which the browser then uses to construct the page.

As a result, the initial page load in a single-page application (SPA) is typically longer than in an application using server-side rendering. On top of this, for a long time, Googlebot did not crawl JavaScript properly.

Next.js fixed this issue by not only being based on React but also offering developers server-side rendering. This made it easy for developers to migrate their applications.

A crucial piece of SEO is having a robots.txt file on your website. In this article, you'll discover what a robots.txt file is and how you can add one to your Next.js application, which isn't something Next.js does out of the box.

What is a robots.txt file?

A robots.txt file is a web standard file that tells search engine crawlers, like Googlebot, which pages they can or cannot crawl. This file sits at the root of your host and can therefore be accessed at this URL: yourdomain.com/robots.txt.

As mentioned, this file allows you to tell bots which pages and files are crawlable and which are not. You might have certain parts of your application that you want to keep inaccessible, such as your admin pages.

You can disallow URLs like this:

User-agent: nameOfBot
Disallow: /admin/

Or allow them like this:

User-agent: *
Allow: /

At the end of your file, you should also add a line pointing to your sitemap, like this:

Sitemap: http://www.yourdomain.com/sitemap.xml

N.B., unfamiliar with sitemaps? Don't hesitate to check out Google's documentation on the subject.

In the end, your robots.txt would look something like this:

User-agent: nameOfBot
Disallow: /admin/

User-agent: *
Allow: /

Sitemap: http://www.yourdomain.com/sitemap.xml
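If you would rather assemble this content in code than write it by hand, the structure above can be sketched as a small helper. This is only an illustration: buildRobotsTxt, groups, and sitemapUrl are hypothetical names, not part of Next.js or any library.

```javascript
// Build robots.txt text from a list of rule groups.
// `groups` and `sitemapUrl` are hypothetical names used for illustration.
function buildRobotsTxt(groups, sitemapUrl) {
  const blocks = groups.map((group) => {
    const lines = [`User-agent: ${group.userAgent}`];
    for (const path of group.disallow || []) lines.push(`Disallow: ${path}`);
    for (const path of group.allow || []) lines.push(`Allow: ${path}`);
    return lines.join('\n');
  });
  if (sitemapUrl) blocks.push(`Sitemap: ${sitemapUrl}`);
  // Separate groups with a blank line and end the file with a newline.
  return blocks.join('\n\n') + '\n';
}

// Reproduces the example file shown above.
const robotsTxt = buildRobotsTxt(
  [
    { userAgent: 'nameOfBot', disallow: ['/admin/'] },
    { userAgent: '*', allow: ['/'] },
  ],
  'http://www.yourdomain.com/sitemap.xml'
);
console.log(robotsTxt);
```

Keeping the rules as data makes it easier to vary them later, for example per environment, without editing a text file by hand.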

How to add a robots.txt file to your Next.js application

Adding a robots.txt file to your application is very easy. Every Next.js project comes with a folder called public. This folder allows you to store static assets that will then be accessible from the root of your domain. Therefore, by storing an image like dolphin.jpeg in your public folder, when your project is built, it will be accessible from the URL http://www.yourdomain.com/dolphin.jpeg. The same technique can be used for your robots.txt file.

So, to add a robots.txt file to your application, all you have to do is drop it in your application's public folder.

Other option: Dynamic generation

There is also a way to dynamically generate your robots.txt file. To do so, you can take advantage of two of Next.js's features: API routes and rewrites.

Next.js allows you to define API routes. This means that when a request is made to a specific API endpoint, you can return the correct content for your robots.txt file.

To do so, create a robots.js file in your pages/api folder. This automatically creates a route. Inside this file, add your handler, which will return your robots.txt content:

export default function handler(req, res) {
  // Send your robots.txt content here
  res.send('Robots.txt content goes there');
}
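A slightly fuller version of this handler might set a plain-text content type and pick the content at request time. The sketch below assumes a project-specific environment variable, ALLOW_CRAWLING, which is hypothetical and not something Next.js defines; robotsBody is likewise an illustrative helper. In pages/api/robots.js, handler would be the file's default export.

```javascript
// Choose the robots.txt body. `allowCrawling` is a hypothetical flag.
function robotsBody(allowCrawling) {
  return allowCrawling
    ? 'User-agent: *\nAllow: /\n'
    : 'User-agent: *\nDisallow: /\n';
}

// API route handler; in pages/api/robots.js this would be the default export.
function handler(req, res) {
  // ALLOW_CRAWLING is an assumed, project-specific environment variable.
  const allowCrawling = process.env.ALLOW_CRAWLING === 'true';
  // Crawlers expect robots.txt to be served as plain text.
  res.setHeader('Content-Type', 'text/plain; charset=utf-8');
  res.status(200).send(robotsBody(allowCrawling));
}
```

With this approach, a staging deployment could block all crawlers while production allows them, using the same code.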

Unfortunately, this is only available at the URL /api/robots and, as mentioned above, search engine crawlers will look for the /robots.txt URL.

Luckily, Next.js offers a feature called rewrites. This allows you to map an incoming request path to a different destination path. In this particular case, you want to route all requests for /robots.txt to /api/robots.

To do so, go into your next.config.js and add the rewrite:

/** @type {import('next').NextConfig} */
const nextConfig = {
  reactStrictMode: true,
  // Serve /robots.txt from the API route defined in pages/api/robots.js
  async rewrites() {
    return [
      {
        source: '/robots.txt',
        destination: '/api/robots',
      },
    ];
  },
};

module.exports = nextConfig;

With this in place, every time you access /robots.txt, it will call /api/robots and display the "Robots.txt content goes there" message.

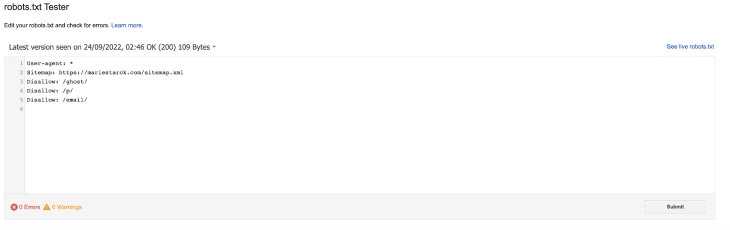

How to validate your robots.txt file

Once you deploy your application to production, you can validate your robots.txt file thanks to the tester provided by Google Search. If your file is valid, you should see a message saying 0 errors displayed.
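Before deploying, a rough local sanity check can catch obvious typos. The sketch below is not a replacement for Google's tester and does not reproduce its rules: lintRobotsTxt is a hypothetical helper, and it only recognizes the four directives used in this article, so it would flag other directives that some crawlers accept.

```javascript
// Directives used in this article; real crawlers may accept others.
const KNOWN_DIRECTIVES = ['user-agent', 'allow', 'disallow', 'sitemap'];

// Return a list of human-readable problems found in robots.txt text.
function lintRobotsTxt(text) {
  const problems = [];
  let seenUserAgent = false;
  text.split(/\r?\n/).forEach((rawLine, index) => {
    const line = rawLine.split('#')[0].trim(); // drop comments
    if (line === '') return;
    const colon = line.indexOf(':');
    if (colon === -1) {
      problems.push(`line ${index + 1}: missing ":"`);
      return;
    }
    const directive = line.slice(0, colon).trim().toLowerCase();
    if (!KNOWN_DIRECTIVES.includes(directive)) {
      problems.push(`line ${index + 1}: unknown directive "${directive}"`);
    } else if (directive === 'user-agent') {
      seenUserAgent = true;
    } else if (directive !== 'sitemap' && !seenUserAgent) {
      // Allow and Disallow rules only make sense inside a User-agent group.
      problems.push(`line ${index + 1}: "${directive}" appears before any User-agent`);
    }
  });
  return problems;
}

// An empty list means this simple check found nothing to report.
console.log(lintRobotsTxt('User-agent: *\nAllow: /\n'));
```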

To deploy your application to production, I highly recommend Vercel. This platform was created by the creators of Next.js and was built with this framework in mind. LogRocket has a tutorial on how to deploy your Next.js application on Vercel.

Conclusion

SEO is very important for sites that need to be discovered. To rank well, webpages need to be easily crawlable by search engine crawlers. Next.js makes that easy for React developers by offering built-in SEO support. This support includes the ability to add a robots.txt file to your project easily.

In this article, you learned what a robots.txt file is, how to add it to your Next.js application, and how to validate it once your app is deployed.

Once you have your robots.txt file set up, you will also need a sitemap. Luckily, LogRocket also has an article on how to build a sitemap generator in Next.js.