In laptop imaginative and prescient, picture synthesis is without doubt one of the most spectacular current developments, but additionally amongst these with the best computational calls for. Scaling up likelihood-based fashions is now dominating the high-resolution synthesis of difficult pure settings. The encouraging outcomes of GANs, however, have been confirmed to be primarily confined to knowledge with comparatively restricted variability, as their adversarial studying method doesn’t simply scale to modelling difficult, multi-modal distributions. Lately, it has been demonstrated that diffusion fashions, that are created from a hierarchy of denoising autoencoders, generate excellent leads to image synthesis. This text will assist one to grasp a diffusion mannequin used for picture synthesis. The next matters are to be lined.

Desk of contents

- About diffusion fashions

- The Latent Diffusion

- Producing pictures from textual content utilizing Secure Diffusion

About diffusion fashions

Diffusion Fashions are generative fashions, that means they create knowledge akin to the information on which they had been skilled. Diffusion Fashions operate essentially by corrupting coaching knowledge by successively including Gaussian noise after which studying to retrieve the information by reversing this noising course of.

Diffusion Fashions are probabilistic fashions which are used to be taught an information distribution by steadily denoising a usually distributed variable, which is equal to studying the alternative technique of a fixed-length Markov Chain. The simplest image synthesis fashions depend on a reweighted type of the variational decrease restrict on the distribution, which is analogous to denoising rating matching. These fashions could also be regarded as a sequence of denoising autoencoders which have been skilled to foretell a denoised variation of their enter.

The Latent Diffusion

The latent diffusion fashions are diffusion fashions that are skilled in a latent area. A latent area is a multidimensional summary area that shops a significant inner illustration of externally witnessed occasions. Within the latent area, samples which are comparable within the exterior world are positioned close to one another. Its main goal is to transform uncooked knowledge, equivalent to image pixel values, into an applicable inner illustration or function vector from which the educational subsystem, usually a classifier, might recognise or categorise patterns within the enter.

The variational autoencoder in latent diffusion fashions maximises the ELBO (Proof Decrease Sure). Instantly calculating and maximising the probability of the latent variable is difficult because it requires both integrating out all latent variables, which is intractable for giant fashions, or entry to a floor reality latent encoder. The ELBO, however, is a decrease certain of the proof. On this scenario, the proof is expressed because the log likelihood of the noticed knowledge. Then, maximising the ELBO turns into a proxy purpose for optimising a latent variable mannequin; within the best-case situation, when the ELBO is powerfully parameterized and correctly optimised, it turns into completely equivalent to the proof.

As a result of the encoder optimises for the very best amongst a set of possible posterior distributions specified by the parameters, this method is variational. It’s named an autoencoder as a result of it’s much like a regular autoencoder mannequin, through which enter knowledge is taught to foretell itself after going by means of an middleman bottlenecking illustration section.

The autoencoder’s mathematical equation computes two values: the primary time period within the equation estimates the reconstruction probability of the decoder from the variational distribution. This ensures that the learnt distribution fashions efficient latents from which the unique knowledge could also be recreated. The second a part of the equation compares the learnt variational distribution to a chunk of prior data about latent variables. By decreasing this element, the encoder is inspired to be taught a distribution somewhat than collapse right into a Dirac delta operate. Rising the ELBO is thus much like growing its first time period whereas lowering its second time period.

Producing pictures from textual content utilizing Secure Diffusion

On this article, we are going to use the Secure diffusion V1 pertained mannequin to generate some pictures from the textual content description of the picture. Secure Diffusion is a text-to-image latent diffusion mannequin developed by CompVis, Stability AI, and LAION researchers and engineers. It was skilled utilizing 512×512 photos from the LAION-5B database. To situation, the mannequin on textual content prompts, this mannequin employs a frozen CLIP ViT-L/14 textual content encoder. The mannequin is somewhat light-weight, with an 860M UNet and 123M textual content encoder, and it really works on a GPU with no less than 10GB VRAM.

Whereas image-generating fashions have nice capabilities, they’ll additionally reinforce or worsen societal prejudices. Secure Diffusion v1 was skilled on subsets of LAION-2B(en), which incorporates massive photos with English descriptions. Texts and pictures from teams and cultures that talk completely different languages are prone to be underrepresented. This has an influence on the mannequin’s general output as a result of white and western cultures are often chosen because the default. Moreover, the mannequin’s capability to supply materials with non-English prompts is noticeably decrease than with English-language prompts.

Putting in the dependencies

!pip set up diffusers==0.3.0 !pip set up transformers scipy ftfy !pip set up ipywidgets==7.7.2

The consumer additionally wants to just accept the mannequin license earlier than downloading or utilizing the weights. By visiting the mannequin card and studying the license, and ticking the checkbox if one agrees, the consumer might entry the pre-trained mannequin. The consumer must be a registered consumer in Hugging Face Hub and likewise wants to make use of an entry token for the code to work.

from huggingface_hub import notebook_login notebook_login()

Importing the dependencies

import torch from torch import autocast from diffusers import StableDiffusionPipeline from PIL import Picture

Creating the pipeline

Earlier than creating the pipeline, be sure that the GPU is linked to the pocket book if utilizing the Colab pocket book, use these strains of code.

!nvidia-smi

StableDiffusionPipeline is a whole inference pipeline that can be utilized to supply photos from textual content utilizing only some strains of code.

experimental_pipe = StableDiffusionPipeline.from_pretrained("CompVis/stable-diffusion-v1-4", revision="fp16", torch_dtype=torch.float16, use_auth_token=True)

Initially, we load the mannequin’s pre-trained weights for all elements. We’re supplying a specific revision, torch_dtype, and use auth token to the from pretrained operate along with the mannequin id “CompVis/stable-diffusion-v1-4”, “Use_auth_token” is required to substantiate that you’ve got accepted the mannequin’s licence. To ensure that any free Google Colab can execute Secure Diffusion, we load the weights from the half-precision department “fp16” and inform diffusers to count on weights in float16 precision by passing torch datatype because the float. For quicker inferences, transfer the pipeline to the GPU accelerator.

experimental_pipe = experimental_pipe.to("cuda")

Producing a picture

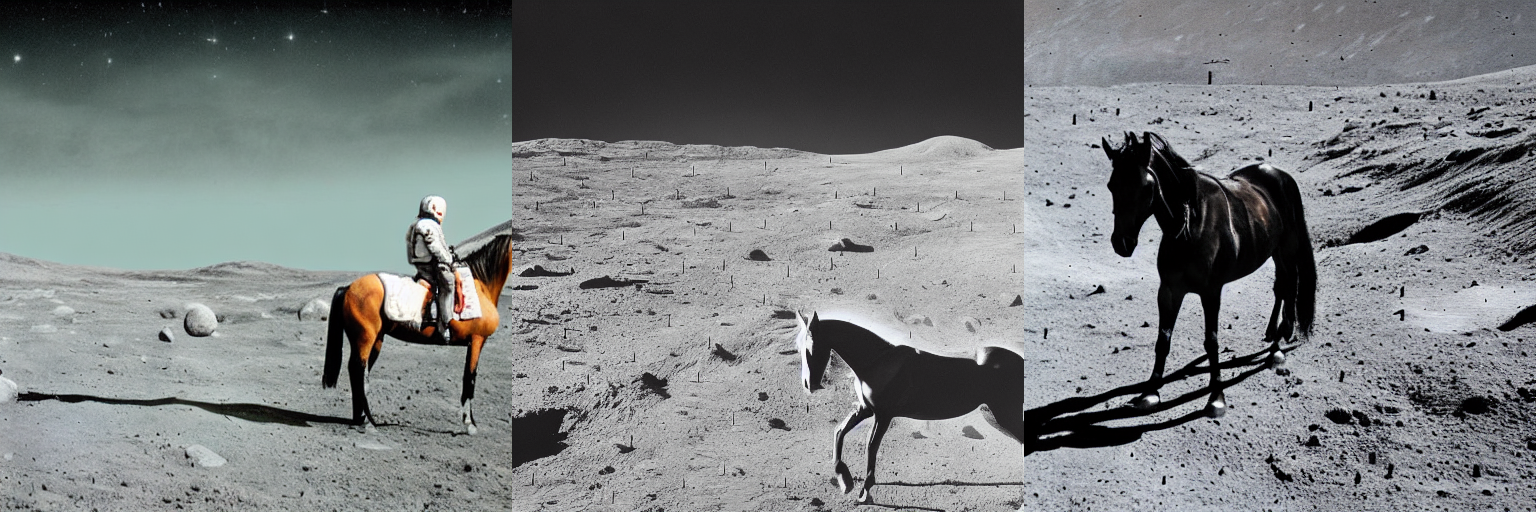

description_1 = "{a photograph} of an horse on moon"

with autocast("cuda"):

image_1 = experimental_pipe(description_1).pictures[0]

image_1

As we might observe the mannequin did a fairly good job in producing the picture. We’ve the horse which is on the moon and we might additionally see the earth from the moon and the main points like highlights, blacks, publicity and others are additionally tremendous.

Let’s strive a posh description with extra particulars in regards to the picture.

description_2 = "canine sitting in a area of autumn leaves"

with autocast("cuda"):

image_2 = experimental_pipe(description_2).pictures[0]

Let’s type a grid of three columns and 1 row to show extra pictures.

num_images = 3

description = [description_2]*num_images

with autocast("cuda"):

experiment_image = experimental_pipe(description).pictures

grid = grids(experiment_image, rows=1, cols=3)

grid

Conclusion

Picture synthesis is a spectacular a part of the pc imaginative and prescient area of synthetic intelligence, and with the expansion of autoencoders and probabilistic strategies, these syntheses are displaying superb outcomes. Secure diffusion is without doubt one of the superb diffusion fashions which may generate a well-defined picture out of a textual content description. With this text, we’ve understood the latent diffusion mannequin and its implementations.

Constructed a Dev.to reproduction with The MERN stack and extra

Constructed a Dev.to reproduction with The MERN stack and extra