Visualizing convolutional neural networks using gradient descent optimization toward a target output

As human beings, we all know what a cat looks like. But what about neural networks? In this post, we reveal what a cat looks like inside a neural network's brain, and also talk about adversarial attacks.

Convolutional neural networks work great for image classification. There are many pre-trained networks, such as VGG-16 and ResNet, trained on the ImageNet dataset, that can classify an image into one of 1000 classes. They work by learning patterns that are typical for different object classes, and then looking through the image to recognize those classes and make a decision. This is similar to a human being scanning a picture with his/her eyes, looking for familiar objects.
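The "looking through the image" step can be pictured as a small pattern detector sliding over every position of the image. Below is a minimal NumPy sketch of that idea for one channel, with stride 1, no padding, and no learned weights. The function name `cross_correlate2d`, the toy image and the hand-made kernel are illustrative assumptions, not part of VGG-16 or Keras. (Deep learning frameworks compute exactly this cross-correlation in their "convolution" layers.)

```python
import numpy as np

def cross_correlate2d(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and return the response map.
    A convolutional layer computes such sums for each of its filters,
    adding them up over input channels and adding a bias."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Toy 6x6 grayscale "image" with a short vertical bar in column 3 (rows 1-3).
image = np.zeros((6, 6))
image[1:4, 3] = 1.0

# Hypothetical hand-made "vertical bar" detector; a trained CNN learns such filters.
kernel = np.array([[-1.0, 2.0, -1.0],
                   [-1.0, 2.0, -1.0],
                   [-1.0, 2.0, -1.0]])

response = cross_correlate2d(image, kernel)
peak = np.unravel_index(np.argmax(response), response.shape)
print(response)
print("strongest match at window", peak)
```

The response is largest (6.0) at window (1, 2). That is the only position where the kernel's middle column lines up with the whole bar. A CNN stacks many such learned filters, layer after layer.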

If you want to learn more about convolutional neural networks, and about neural networks in general, we recommend visiting the AI for Beginners Curriculum, available from Microsoft's GitHub. It is a collection of learning materials organized into 24 lessons. Students and developers can use it to learn about AI, and teachers might find useful materials to include in their classes. This blog post is based on materials from the curriculum.

So, once trained, a neural network contains different patterns inside its brain, including notions of an ideal cat (as well as an ideal dog, an ideal zebra, etc.). However, it is not easy to visualize these images, because the patterns are spread all over the network weights and are also organized in a hierarchical structure. Our goal will be to visualize the image of an ideal cat that a neural network has inside its brain.

Classifying images using a pre-trained neural network is simple. Using Keras, we can load a pre-trained model with one line of code:

model = keras.applications.VGG16(weights='imagenet', include_top=True)

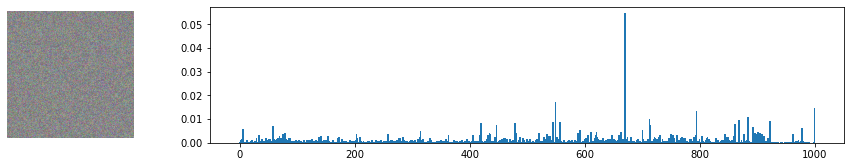

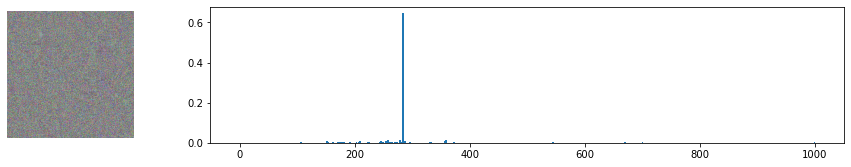

For each input image of size 224x224x3, the network gives us a 1000-dimensional vector of probabilities, each coordinate of which corresponds to a different ImageNet class. If we run the network on a noise image, we get the following result:

x = tf.Variable(tf.random.normal((1,224,224,3)))
plot_result(x)  # plotting helper (not shown here): displays the image and its predicted class probabilities

You can see that one class has a higher probability than the others. This class is mosquito net, with a probability of around 0.06. Indeed, random noise looks similar to a mosquito net. Still, the network is very unsure and gives many other options!

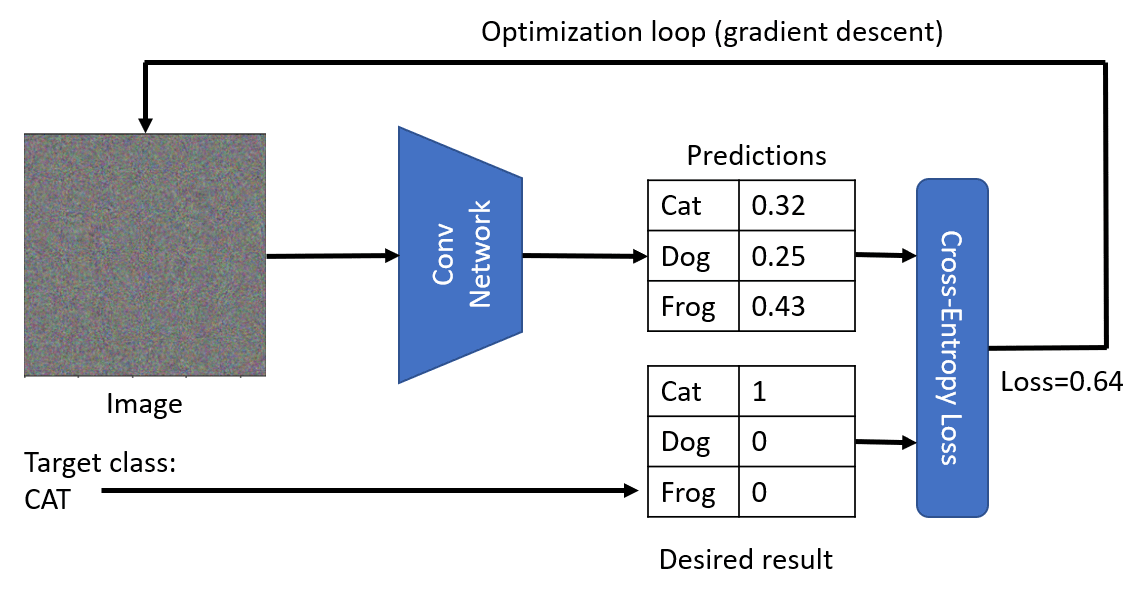
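`plot_result` is a helper that is not shown in this post. The NumPy sketch below illustrates, with hedging, what reading such a result involves. It turns made-up scores for 1000 classes into probabilities and lists the top three. `softmax` and `top_k` are hypothetical helpers, and the scores are random rather than real VGG-16 outputs. For real VGG-16 predictions, Keras also provides `keras.applications.vgg16.decode_predictions`, which maps class indices to ImageNet class names.

```python
import numpy as np

def softmax(logits):
    """Turn raw class scores into probabilities (shifted by the max for numerical stability)."""
    e = np.exp(logits - np.max(logits))
    return e / e.sum()

def top_k(probs, k=3):
    """Return (class_index, probability) pairs for the k most likely classes."""
    order = np.argsort(probs)[::-1][:k]
    return [(int(i), float(probs[i])) for i in order]

# Made-up scores for 1000 classes: nearly flat, with class 7 (arbitrary) somewhat ahead.
rng = np.random.default_rng(0)
logits = rng.normal(scale=0.1, size=1000)
logits[7] += 2.0
probs = softmax(logits)

for index, p in top_k(probs):
    print(f"class {index}: {p:.4f}")
```

Even the winning class stays below 0.05 here. This is the same kind of "very unsure" picture the network gives for the noise image.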

Our main idea for obtaining the image of an ideal cat is to use gradient descent optimization. We adjust our original noisy image in such a way that the network starts thinking it is a cat.

Suppose that we start with the original noise image x. The VGG network V gives us some probability distribution V(x). To compare it to the desired distribution of a cat c, we can use the cross-entropy loss function and calculate the loss L = cross_entropy_loss(c, V(x)).
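Suppose c is a one-hot distribution for the target class t (here, Siamese cat). Then the cross-entropy collapses to a single term: $L = -\sum_k c_k \log V_k(x) = -\log V_t(x)$. That is why the code can pass just a class index such as `[284]` to Keras's *sparse* categorical cross-entropy. Here is a small NumPy check of that equivalence, using a made-up 3-class distribution; the helper `sparse_cross_entropy` is hypothetical.

```python
import numpy as np

def sparse_cross_entropy(target_index, probs, eps=1e-12):
    """Cross-entropy between a one-hot target, given only as its class index,
    and a predicted distribution `probs`: simply -log(probs[target_index])."""
    return -np.log(probs[target_index] + eps)

probs = np.array([0.1, 0.7, 0.2])      # hypothetical V(x) over 3 classes
one_hot = np.array([0.0, 1.0, 0.0])    # c: the desired distribution (class 1)

full = -np.sum(one_hot * np.log(probs))       # general definition: -sum_k c_k log p_k
print(full, sparse_cross_entropy(1, probs))   # the same value, about 0.357
print(sparse_cross_entropy(0, probs))         # larger, because class 0 is unlikely
```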

To minimize the loss, we need to adjust our input image. We can use the same idea of gradient descent that is used to optimize neural networks. Namely, at each iteration, we modify the input image x according to the formula:

$$x \leftarrow x - \eta\,\frac{\partial L}{\partial x}$$

Here η is the learning rate, which defines how radical our changes to the image will be. The following function will do the trick:

target = [284] # Siamese cat

def cross_entropy_loss(target, res):
    return tf.reduce_mean(
        keras.metrics.sparse_categorical_crossentropy(target, res))

def optimize(x, target, loss_fn, epochs=1000, eta=1.0):
    for i in range(epochs):
        with tf.GradientTape() as t:
            res = model(x)
            loss = loss_fn(target, res)
        # gradient of the loss with respect to the input image x, not the weights
        grads = t.gradient(loss, x)
        x.assign_sub(eta*grads)

optimize(x, target, cross_entropy_loss)

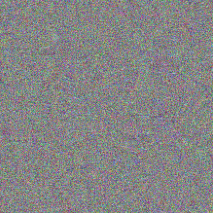

As you can see, we got something very similar to random noise. This is because there are many ways to make the network think the input image is a cat, including some that don't make sense visually. These images contain a lot of patterns typical for a cat, but nothing constrains them to be visually distinctive. However, if we pass this ideal noisy cat to the VGG network, it will tell us that the noise is actually a cat with quite high probability (above 0.6).
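The following NumPy sketch shows why this update changes the picture but never the network. It performs the same gradient descent on the input for a much simpler, hypothetical classifier: a frozen linear layer with random weights `W` followed by softmax, standing in for VGG-16. For this model the gradient of the cross-entropy with respect to the input has the closed form $W^\top(p - c)$, so no `GradientTape` is needed. The sizes, seed, `eta` and number of epochs are illustrative choices, not values from the post.

```python
import numpy as np

rng = np.random.default_rng(42)
n_features, n_classes = 16, 5

# Hypothetical stand-in for the network V: a frozen linear layer followed by softmax.
W = rng.normal(size=(n_classes, n_features))
b = np.zeros(n_classes)

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def loss_and_grad(x, target):
    """Cross-entropy -log p[target] and its gradient with respect to the INPUT x."""
    p = softmax(W @ x + b)
    y = np.zeros(n_classes)
    y[target] = 1.0
    loss = -np.log(p[target])
    grad_x = W.T @ (p - y)   # chain rule through logits = W @ x + b
    return loss, grad_x

def optimize_input(x, target, epochs=2000, eta=0.05):
    """Gradient descent on the input, x <- x - eta * dL/dx; W and b never change."""
    x = x.copy()
    for _ in range(epochs):
        _, grad_x = loss_and_grad(x, target)
        x -= eta * grad_x
    return x

x0 = rng.normal(size=n_features)   # plays the role of the noise image
target = 3
x_opt = optimize_input(x0, target)

print("loss before/after:", loss_and_grad(x0, target)[0], loss_and_grad(x_opt, target)[0])
print("p[target] after:", softmax(W @ x_opt + b)[target])
```

Only `x` is updated. The same frozen weights that produced the original prediction now report the target class with high confidence.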

This approach can be used to perform so-called adversarial attacks on a neural network. In an adversarial attack, our goal is to modify an image a little bit to fool a neural network, for example, to make a dog look like a cat. A classic adversarial example from this paper by Ian Goodfellow looks like this:

In our example, we will take a slightly different route. Instead of adding some noise, we will start with an image of a dog, which the network does recognize as a dog. We then tweak it a little using the same optimization procedure as above, until the network starts classifying it as a cat:

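import numpy as np
from PIL import Image

img = Image.open('images/dog.jpg').convert('RGB').resize((224,224))
# add a batch dimension, convert to float32, and apply the preprocessing VGG16 expects
x = tf.Variable(keras.applications.vgg16.preprocess_input(np.array(img, dtype=np.float32)[np.newaxis]))
optimize(x, target, cross_entropy_loss)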

Below you can see the original picture (classified as Italian Greyhound with a probability of 0.93) and the same picture after optimization (classified as Siamese cat with a probability of 0.87).
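The dog-to-cat attack can be mimicked on the same kind of toy model. In this hypothetical NumPy sketch, a "dog" input is built so that class 0 clearly wins. Gradient descent toward class 3 then stops as soon as class 3 becomes the top prediction, which keeps the perturbation small. The post's `optimize` does not use this early stop; it runs a fixed number of epochs. The 1000-dimensional input, the seed and the step size are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
n_features, n_classes = 1000, 10

# Hypothetical frozen linear classifier standing in for VGG-16 (rows have norm close to 1).
W = rng.normal(scale=1 / np.sqrt(n_features), size=(n_classes, n_features))

def predict(x):
    z = W @ x
    e = np.exp(z - z.max())
    return e / e.sum()

def attack(x, target, eta=0.5, max_steps=1000):
    """Gradient descent on -log p[target] w.r.t. the input; stop once `target` is the top class."""
    x = x.copy()
    y = np.zeros(n_classes)
    y[target] = 1.0
    for step in range(max_steps):
        p = predict(x)
        if np.argmax(p) == target:
            return x, step
        x -= eta * (W.T @ (p - y))   # gradient of the cross-entropy w.r.t. x
    return x, max_steps

# "Dog" input: random noise plus a push along W[0], so class 0 wins clearly.
x_dog = rng.normal(size=n_features) + 8.0 * W[0] / np.linalg.norm(W[0])
x_adv, steps = attack(x_dog, target=3)

print("before:", np.argmax(predict(x_dog)), round(float(predict(x_dog).max()), 3))
print("after: ", np.argmax(predict(x_adv)), "after", steps, "steps")
print("relative change:", np.linalg.norm(x_adv - x_dog) / np.linalg.norm(x_dog))
```

The last line prints the relative L2 size of the change. In this toy setting it stays well below the size of the input itself, while the predicted label flips.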

Adversarial attacks are interesting in their own right, but we have not yet been able to visualize the notion of an ideal cat that a neural network has. The ideal cat we obtained looks like noise because we have not put any constraints on the image x being optimized. For example, we might want to constrain the optimization so that the image x is less noisy, which will make some visible patterns more noticeable.

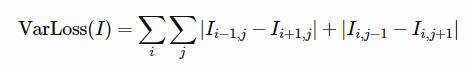

To do this, we can add another term to the loss function. A good idea is to use the so-called total variation loss, a function that measures how much neighboring pixels of the image differ from each other. For an image I, it is defined as

$$\mathrm{TV}(I) = \sum_{i,j} \left( |I_{i,j} - I_{i+1,j}| + |I_{i,j} - I_{i,j+1}| \right)$$

TensorFlow has a built-in function, tf.image.total_variation, that computes the total variation of a given tensor. Using it, we can define our total loss function in the following way:

def total_loss(target, res):
    # x is the (global) image variable being optimized
    return (0.005*tf.image.total_variation(x) +
            10*tf.reduce_mean(keras.metrics.sparse_categorical_crossentropy(target, res)))

Note the coefficients 0.005 and 10. They were determined by trial and error to find a good balance between smoothness and detail in the image. You may want to play with them a bit to find a better combination.
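For reference, here is a NumPy sketch of total variation. It sums the absolute differences between vertically and horizontally adjacent pixels, which is what `tf.image.total_variation` documents computing for each image. The function and the test images are a hypothetical illustration, not TensorFlow's implementation.

```python
import numpy as np

def total_variation(img):
    """Sum of |differences| between vertically and horizontally adjacent pixels.
    Works for (height, width) and (height, width, channels) arrays."""
    vertical = np.abs(img[1:, :, ...] - img[:-1, :, ...]).sum()
    horizontal = np.abs(img[:, 1:, ...] - img[:, :-1, ...]).sum()
    return vertical + horizontal

ramp = np.tile(np.linspace(0.0, 1.0, 8), (8, 1))   # 8x8, left-to-right gradient
rng = np.random.default_rng(0)
noisy = ramp + rng.normal(scale=0.3, size=ramp.shape)

print(total_variation(ramp))    # about 8: each of the 8 rows rises by 1 in total
print(total_variation(noisy))   # much larger
```

A smooth ramp has a small total variation, and adding noise inflates it. That inflation is exactly what the 0.005 coefficient trades off against the classification loss.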

Minimizing the total variation loss makes the image smoother and removes noise, revealing more visually appealing patterns. Here are examples of such "ideal" images, which are classified as a cat and as a zebra with high probability:

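x = tf.Variable(tf.random.normal((1,224,224,3)))  # start again from random noise
optimize(x, [284], loss_fn=total_loss)  # cat
x = tf.Variable(tf.random.normal((1,224,224,3)))
optimize(x, [340], loss_fn=total_loss)  # zebra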
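The smoothing effect can be reproduced on a toy problem. This NumPy sketch optimizes an 8x8 noise image for a hypothetical frozen linear classifier twice. The first run uses cross-entropy alone; the second adds a total-variation term. It then compares the total variation of the results. Because |d| has no derivative at 0, the sketch uses the sign function as a subgradient. The total-variation weight of 1.0, the learning rate and the epoch count are illustrative, not the post's 0.005 and 10.

```python
import numpy as np

rng = np.random.default_rng(0)
side, n_classes = 8, 4
# Hypothetical frozen linear classifier over flattened 8x8 grayscale images.
W = 0.1 * rng.normal(size=(n_classes, side * side))

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def ce_grad(img, target):
    """Gradient of -log p[target] with respect to the image pixels."""
    p = softmax(W @ img.ravel())
    p[target] -= 1.0                      # p - one_hot(target)
    return (W.T @ p).reshape(img.shape)

def total_variation(img):
    return np.abs(np.diff(img, axis=0)).sum() + np.abs(np.diff(img, axis=1)).sum()

def tv_grad(img):
    """Subgradient of total_variation, using sign(0) = 0 where |d| is not differentiable."""
    g = np.zeros_like(img)
    sy = np.sign(np.diff(img, axis=0))    # sign(img[i+1, j] - img[i, j])
    g[1:, :] += sy
    g[:-1, :] -= sy
    sx = np.sign(np.diff(img, axis=1))    # sign(img[i, j+1] - img[i, j])
    g[:, 1:] += sx
    g[:, :-1] -= sx
    return g

def optimize_image(img, target, tv_weight, epochs=1000, eta=0.01):
    img = img.copy()
    for _ in range(epochs):
        img -= eta * (ce_grad(img, target) + tv_weight * tv_grad(img))
    return img

x0 = rng.normal(size=(side, side))        # random noise start
x_plain = optimize_image(x0, target=2, tv_weight=0.0)
x_smooth = optimize_image(x0, target=2, tv_weight=1.0)

print("TV of start:      ", total_variation(x0))
print("TV, CE only:      ", total_variation(x_plain))
print("TV, CE + TV term: ", total_variation(x_smooth))
```

The regularized result ends up with far lower total variation than the cross-entropy-only result. This is the toy counterpart of the cleaner cat and zebra images.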

These images give us some insight into how neural networks make sense of pictures. In the "cat" image, you can see some elements that resemble a cat's eyes, and some that resemble ears. However, there are many of them, and they are spread all over the image. Recall that a neural network essentially calculates a weighted sum of its inputs. When it sees many elements that are typical for a cat, it becomes more convinced that it really is a cat. That's why many eyes in one picture give a higher probability than just one, even though the result looks less "cat-like" to a human being.
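The "more eyes, more cat" effect follows directly from the weighted-sum view. The sketch below is a deliberately simplified, hypothetical output unit. It adds up counts of detected cat parts using invented weights and squashes the result with a sigmoid. A real network sums learned feature activations rather than part counts, but with positive weights the effect points the same way.

```python
import math

def cat_probability(eye_count, ear_count, w_eye=1.5, w_ear=1.0, bias=-4.0):
    """Hypothetical 'cat' unit: weighted sum of detected parts, squashed by a sigmoid.
    Weights and bias are invented for illustration, not taken from any trained model."""
    score = w_eye * eye_count + w_ear * ear_count + bias
    return 1.0 / (1.0 + math.exp(-score))

normal_cat = cat_probability(eye_count=2, ear_count=2)   # score 1.0
many_eyes = cat_probability(eye_count=8, ear_count=0)    # score 8.0
print(round(normal_cat, 2), round(many_eyes, 4))
```

Eight eyes and no ears give a score of 8.0 (probability about 0.9997). That is far above the 1.0 (about 0.73) of an ordinary two-eyed, two-eared cat.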

Adversarial attacks and visualization of the "ideal cat" are described in the transfer learning section of the AI for Beginners Curriculum. The exact code I've covered in this blog post is available here.