Probably the most advanced generalist network so far

Gato can play video games, generate text, process images, and control robotic arms. And it's not even very big. Is true AI on the horizon?

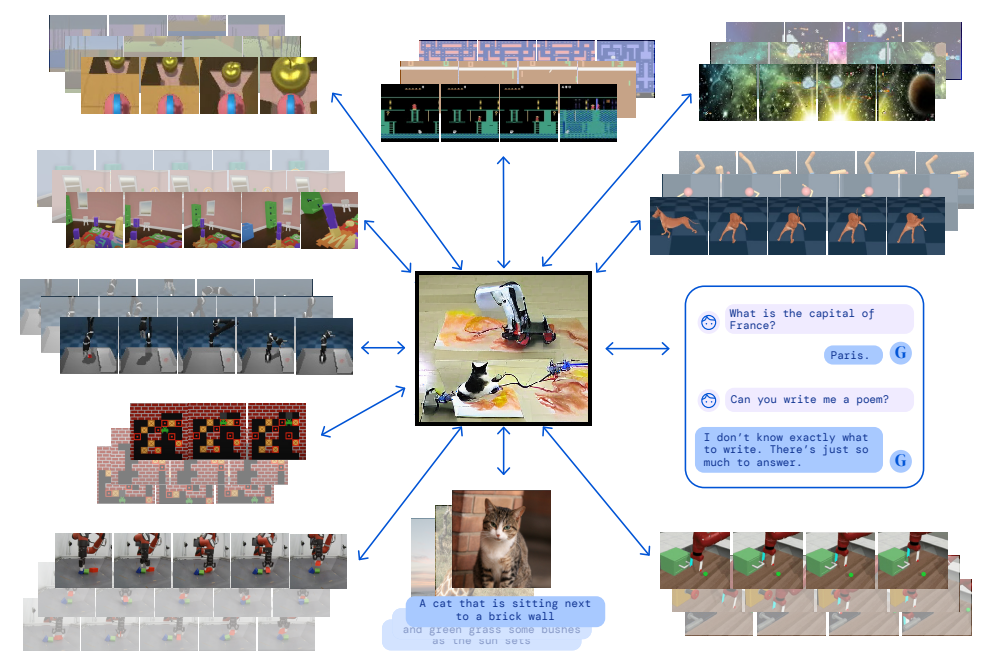

The deep learning field is progressing rapidly, and the latest work from DeepMind is a good example of this. Their Gato model can learn to play Atari games, generate realistic text, process images, control robotic arms, and more, all with the same neural network. Inspired by large-scale language models, DeepMind applied a similar approach but extended it beyond the realm of text outputs.

How Gato works

This new AGI (short for Artificial General Intelligence) works as a multi-modal, multi-task, multi-embodiment network. This means that the same network (i.e. a single architecture with a single set of weights) can perform all tasks, even though they involve inherently different kinds of inputs and outputs.

While DeepMind's preprint presenting Gato isn't very detailed, it is clear enough that Gato is strongly rooted in transformers as used for natural language processing and text generation. However, it is trained not only on text but also on images (as already seen in models like DALL·E), torques acting on robotic arms, button presses from computer game playing, and so on. Essentially, then, Gato handles all kinds of inputs together and decides from context whether to output intelligible text (for example to chat, summarize or translate text), torques (for the actuators of a robotic arm), or button presses (to play games).
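To make "handles all kinds of inputs together" more concrete: as we read the preprint, every modality is first serialized into one flat sequence of integer tokens before it reaches the transformer. The sketch below illustrates one such step, turning continuous values such as joint torques into tokens. It loosely follows the scheme the Gato paper describes (mu-law companding, then 1024 uniform bins placed after a 32,000-entry text vocabulary), but the constants, function names and sequence layout here are our own assumptions for illustration, not DeepMind's code.

```python
import math
from typing import List

# Assumed constants, chosen to mirror the scheme described in the Gato preprint.
TEXT_VOCAB_SIZE = 32000  # text subword ids occupy [0, 32000)
NUM_BINS = 1024          # continuous values become ids in [32000, 33024)
MU, M = 100.0, 256.0     # mu-law companding parameters (assumed)


def mu_law(x: float) -> float:
    """Compress a real value so that |x| <= M maps into [-1, 1], with finer resolution near 0."""
    return math.copysign(math.log(abs(x) * MU + 1.0) / math.log(M * MU + 1.0), x)


def tokenize_continuous(values: List[float]) -> List[int]:
    """Map continuous values (e.g. joint torques) to integer tokens placed after the text vocabulary."""
    tokens = []
    for v in values:
        y = max(-1.0, min(1.0, mu_law(v)))  # clip anything beyond the companding range
        # Uniform bin over [-1, 1]; y == 1.0 would give NUM_BINS, so cap at the last bin.
        b = min(int((y + 1.0) / 2.0 * NUM_BINS), NUM_BINS - 1)
        tokens.append(TEXT_VOCAB_SIZE + b)
    return tokens


def build_sequence(text_ids: List[int], torques: List[float], button: int) -> List[int]:
    """Hypothetical layout: a text prompt, then a torque observation, then a discrete action id.

    Discrete values such as button presses are kept as plain integers; only the
    surrounding context tells the model how to interpret each position.
    """
    return list(text_ids) + tokenize_continuous(torques) + [button]


if __name__ == "__main__":
    seq = build_sequence([17, 942, 5], [0.0, 1.5, -300.0], button=3)
    print(seq)
```

The point of the design is that, once everything is an integer in a shared vocabulary, a single transformer can consume any mix of modalities without modality-specific modules.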

Gato thus demonstrates the versatility of transformer-based architectures for machine learning, and shows how they can be adapted to a variety of tasks. Over the last decade we have seen striking applications of neural networks specialized for playing games, translating text, captioning images, and so on. But Gato is general enough to perform all these tasks by itself, using a single set of weights and a relatively simple architecture. This contrasts with specialized networks that require multiple modules to be integrated in order to work together, where the way they are integrated depends on the problem to be solved.
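One reason a single set of weights can cover all these tasks is that, as we understand the preprint, training reduces to ordinary next-token prediction over the mixed token sequences, with the loss counted only on tokens the model is meant to produce (text and the agent's actions) and not on observations such as image patches. A minimal sketch of such a masked objective, assuming the model's per-position log-probabilities are already computed (the function and argument names are ours, not DeepMind's):

```python
import math
from typing import Sequence


def masked_nll(log_probs: Sequence[Sequence[float]],
               targets: Sequence[int],
               mask: Sequence[int]) -> float:
    """Negative log-likelihood summed only over positions where mask == 1.

    log_probs[l][k] is the model's log-probability that the token at position l
    equals k, given tokens 0..l-1; targets[l] is the token actually observed at l.
    mask[l] is 1 for text/action tokens and 0 for observation tokens.
    """
    if not (len(log_probs) == len(targets) == len(mask)):
        raise ValueError("log_probs, targets and mask must have the same length")
    total = 0.0
    for dist, target, m in zip(log_probs, targets, mask):
        if m:
            total -= dist[target]  # accumulate -log p(target) for predicted tokens only
    return total


# Usage: a 4-token vocabulary and a uniform model; the first position is an
# observation (masked out) and the last two are actions.
uniform = [math.log(0.25)] * 4
loss = masked_nll([uniform] * 3, targets=[0, 1, 2], mask=[0, 1, 1])
```

Because every task shares one vocabulary and one objective, no task-specific output head is needed in this sketch; which kind of token comes next is left to context.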

Moreover, and impressively, Gato isn't even close to the largest neural networks we have seen! With "only" 1.2 billion weights, it is comparable to OpenAI's GPT-2 language model, i.e. over 2 orders of magnitude smaller than GPT-3 (175 billion weights, roughly 146 times as many) and other modern language processing networks.

The results on Gato also support previous findings that training on data of different natures leads to better learning of the information supplied. Just like humans learn about their world from multiple simultaneous sources of information! This whole idea fits squarely into one of the most interesting trends in machine learning in recent years: multimodality, the capacity to handle and integrate various kinds of data.

On the potential of AGIs: towards true AI?

I never really liked the term Artificial Intelligence. I used to think that simply nothing could beat the human brain. However…

The potential behind emerging AGIs is much more interesting, and certainly more powerful, than what we had just one year ago. These models can solve a variety of complex tasks with essentially a single piece of software, which makes them very versatile. If one such model, advanced by say a decade from now, were run inside robot-like hardware with means for locomotion and appropriate input and output peripherals, we could well be taking solid steps towards creating true artificial beings with real artificial intelligence. After all, our brains are, in some way, very intricate neural networks that connect and integrate sensory information to output our actions. Nihilistically, nothing prevents this data processing from happening in silico rather than organically.

Just 3 years ago, I absolutely wouldn't have said any of this, especially not that AI could someday be real. Now I'm not so sure, and the community sentiment is similar: the current estimate is that we could have machine-based systems with the same general-purpose reasoning and problem-solving abilities as humans by 2030. Just 2 years ago the projected year was around 2200, and it has been slowly decreasing since.

Although these are just blind predictions with no solid modeling behind them, the trend does reflect the big steps the field is taking. I no longer find it far-fetched that a single robot could play chess with you one day and Scrabble the next, water your plants when you aren't home (even making its own decisions depending on weather forecasts and how your plants look), intelligently summarize the news for you, cook your meals, and why not even help you develop your ideas. Generalist AI could get here sooner than we think.