A step-by-step tutorial for a simple and fun project

A quick tutorial on how to capture the live video from your webcam and convert it to anime style. I will also discuss how to create some other custom filters for our webcam, as well as the trade-offs of each of them.

This tutorial does not require any Machine Learning knowledge.

All code snippets and images below were produced by the author. Code references are cited within the code snippets, where applicable.

We will go over the following steps:

- Capture the output from your webcam (using CV2)

- Transform the output (using PyTorch)

- Display or save the image (using CV2)

- Discuss other filters and trade-offs

If you want to skip the tutorial and quickly get to the result:

- Download the code snippet named animeme.py below

- Open the terminal and navigate to the folder of this file

- Run

python animeme.py

Dependencies:

The following packages are required. For my setup, I have:

- pytorch==1.11.0

- opencv==4.5.5

- pillow==9.1.1

- numpy==1.22.4

- python==3.9.12

I use Anaconda for Windows to manage and install all dependencies.

Capturing the input image:

First, let's make sure that your webcam is detected and find the appropriate port number (OpenCV calls it the device index). Usually, a built-in webcam uses port 0, but if you have an external camera, it may use a different port.

I use two cameras, so this script returns two ports:

>>> camera_indexes

[0, 1]

Now we can go ahead and capture the camera output:

This will give you a live feed of your camera output. To close it, press q.

Transforming the Output Image

With this line

_, frame = cam.read()

frame is now the input from your camera. Before displaying it with cv2.imshow(), we apply our transformation here.

To convert our image to anime style, I will use the PyTorch implementation of AnimeGANv2. The model from this repo comes with pre-trained weights and easy-to-follow instructions.

Some important details:

- cam.read() returns a NumPy array in BGR format, whereas AnimeGANv2 expects RGB images, and cv2.imshow() expects BGR. So we have to do some image pre- and post-processing.

- AnimeGANv2 has 4 different pre-trained styles. You can experiment and see which one you like best.

- If possible, we should run the PyTorch model on a GPU to maximize inference speed.
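The pre- and post-processing can be written as two small NumPy functions. This is a sketch assuming the generator takes a batched RGB tensor scaled to [-1, 1] in (N, C, H, W) layout and returns one in the same range, which is how the animegan2-pytorch README describes its input; the function names are my own:

```python
import numpy as np


def bgr_frame_to_model_input(frame: np.ndarray) -> np.ndarray:
    """BGR uint8 (H, W, 3) -> RGB float32 (1, 3, H, W) scaled to [-1, 1]."""
    rgb = frame[:, :, ::-1]  # reverse the channel axis: BGR -> RGB
    scaled = rgb.astype(np.float32) / 127.5 - 1.0  # [0, 255] -> [-1, 1]
    chw = np.transpose(scaled, (2, 0, 1))  # HWC -> CHW
    return np.ascontiguousarray(chw[np.newaxis])  # add the batch dimension


def model_output_to_bgr_frame(output: np.ndarray) -> np.ndarray:
    """RGB float (1, 3, H, W) in [-1, 1] -> BGR uint8 (H, W, 3) for cv2.imshow()."""
    chw = np.clip(output[0], -1.0, 1.0)
    hwc = np.transpose(chw, (1, 2, 0))  # CHW -> HWC
    rgb = ((hwc + 1.0) * 127.5).round().astype(np.uint8)  # [-1, 1] -> [0, 255]
    # Copy into a contiguous array, since some OpenCV functions reject negative strides.
    return np.ascontiguousarray(rgb[:, :, ::-1])  # RGB -> BGR
```

With PyTorch, the batch would go in via `torch.from_numpy(x).to(device)` and the result come back via `output.cpu().numpy()`.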

The overall process is:

-> input frame in BGR from cam.read()

-> convert to RGB

-> apply model (preferably on GPU)

-> convert to BGR

-> display with cv2.imshow()

Putting it all together:

And here is the result:

Anime styling of the input frame is not our only option. There are many other transformations we can apply at this step:

-> apply model (preferably on GPU)

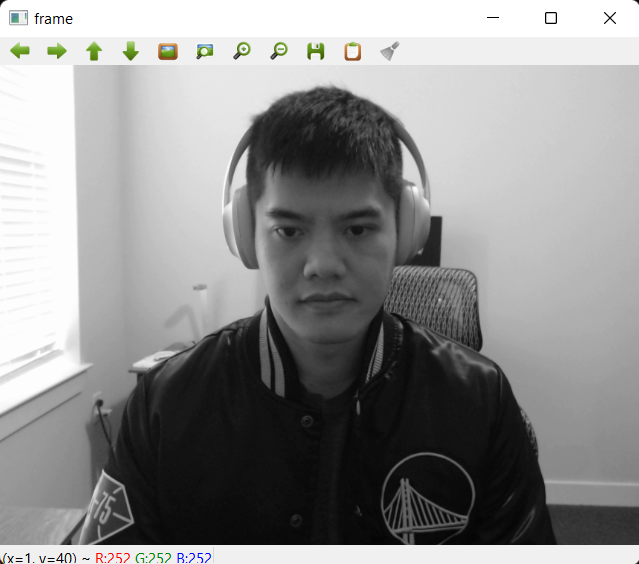

For example, you can convert the image to grayscale.

This gives a black-and-white version of the live feed.

Or you can even combine both of these steps.

Now our manga is in black and white:

Many ready-made transformations are available for free in TorchVision (see the TorchVision reference below). Or you can write a custom image transformation yourself.
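As an example of a custom transformation, here is a sketch of a posterize filter written directly in NumPy (my own illustration; `posterize` and its `levels` parameter are hypothetical names). It works on the BGR uint8 frame, so it can go into the loop just before `cv2.imshow()`:

```python
import numpy as np


def posterize(frame: np.ndarray, levels: int = 4) -> np.ndarray:
    """Quantize each uint8 channel to at most `levels` evenly spaced values."""
    if not 2 <= levels <= 256:
        raise ValueError("levels must be between 2 and 256")
    step = -(-256 // levels)  # ceiling division, so there are exactly `levels` buckets
    # Snap every pixel value down to the start of its bucket.
    return (frame // step * step).astype(np.uint8, copy=False)
```

A filter like this is a handful of integer operations per pixel, so unlike a neural network it should barely affect the frame rate.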

You may notice that the anime version of your webcam feed is much slower. We can add the following snippet to measure the model's performance:

Here are the outputs with the FPS displayed:

Why is the anime version so much slower?

- For each frame, we have to run one forward pass of AnimeGANv2. The inference time of this step significantly slows down the whole process.

- With the default webcam output, we achieve 37–40 FPS, or about 25 ms/frame.

- With the grayscale filter, it drops to 36–38 FPS, roughly 27 ms/frame.

- With AnimeGANv2, we are down to 5 FPS, or 200 ms/frame.

- For any custom filter, if we use a large model for image processing, we have to sacrifice some performance.
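The ms/frame figures are simply the reciprocal of the frame rate. Treating the capture-and-display cost as roughly the same in both cases (an approximation I am adding, not a measurement from the article), the gap between the plain feed and the AnimeGANv2 feed gives a rough estimate of the model's per-frame cost on this setup:

$$
t_{\text{frame}} = \frac{1000\ \text{ms}}{\text{FPS}}, \qquad \frac{1000\ \text{ms}}{40} = 25\ \text{ms}, \qquad \frac{1000\ \text{ms}}{5} = 200\ \text{ms}
$$

$$
t_{\text{AnimeGANv2}} \approx 200\ \text{ms} - 25\ \text{ms} = 175\ \text{ms per frame}
$$

In other words, the model takes up most of each frame's time budget, so speeding up the model (or shrinking its input) matters far more than speeding up capture or display.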

Optimizations

- To improve inference speed, we can optimize the model's runtime. Common techniques include running the model in FP16 or quantizing it down to INT8.

- These are fairly complicated techniques and will not be covered in this article.

- We can reduce the size of the webcam input, but then the live feed will be smaller as well.

- We can buy a more powerful GPU (I currently have an RTX 3070 in my setup).

And a final important note:

Usually, a manga book has a colored cover and grayscale contents, because it is cheaper to print in black and white. But in our setting, colored frames are actually cheaper to produce, because we do not have to convert to grayscale and back.

This was a quick tutorial on how to capture the output of your webcam and convert it to anime style or apply other filters.

I find this project quite fun and easy to implement. Even if you have no prior experience in Machine Learning or Computer Vision, you will see how easy it is to use state-of-the-art Deep Learning models and create something interesting.

Hopefully, you will enjoy following along with this tutorial and be able to create some memorable moments!

AnimeGANv2: https://github.com/TachibanaYoshino/AnimeGANv2

AnimeGANv2, PyTorch implementation: https://github.com/bryandlee/animegan2-pytorch

Capturing webcam movies with OpenCV: https://www.geeksforgeeks.org/python-opencv-capture-video-from-camera/

TorchVision transforms: https://pytorch.org/vision/0.9/transforms.html