Privacy is critical for business, according to 95% of security professionals surveyed in the sixth edition of Cisco’s Data Privacy Benchmark Study. The survey of more than 4,700 security professionals from 26 geographies included more than 3,100 respondents who were familiar with the data privacy program at their organizations. Additionally, 94% of respondents said customers wouldn’t buy from them if they thought the data was not properly protected.

Another interesting thing to note: 96% say they have an ethical obligation to treat data properly.

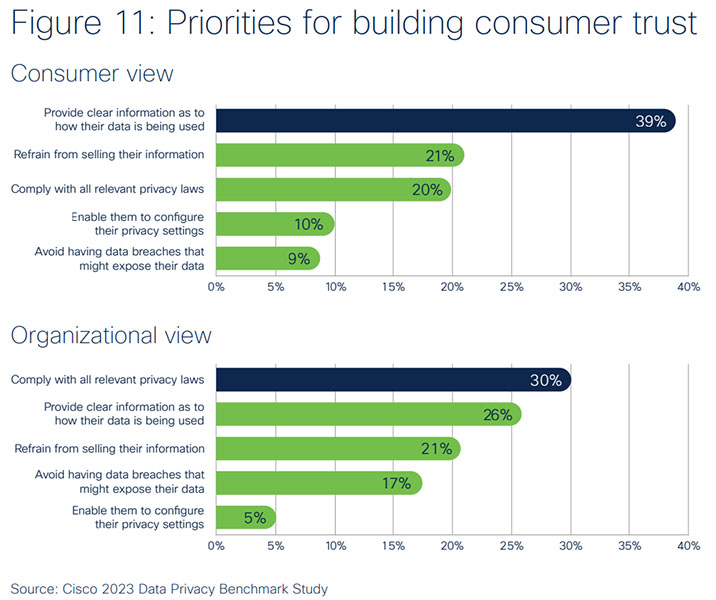

However, there is a disconnect between what consumers say is necessary to gain their trust in how their information is used and what organizations think they need to do to gain that trust. Consumers say transparency is the top priority for gaining their trust (39%), followed by not selling personal information (21%) and compliance with privacy laws (20%). Among organizations, the priority order differed. From the business perspective, compliance with existing laws (30%) was the number one priority for building customer trust, followed by transparency about how the data is being used (26%) and not selling personal information (21%).

“Certainly organizations need to comply with privacy laws,” Cisco writes in the report. “But when it comes to earning and building trust, compliance is not enough. Consumers consider legal compliance to be a ‘given,’ with transparency more of a differentiator.”

This disconnect is also present with regard to data and artificial intelligence. While consumers are “generally supportive” of AI, automated decision-making is still an area of concern, according to the report. Around three-quarters of consumers (76%) in the survey say providing opportunities for them to opt out of AI-based applications would help make them “much more” or “more” comfortable with AI. Consumers would also like to see organizations institute an AI ethics management program (75%), explain how the application is making decisions (74%), and involve a human in the decision-making process (75%), according to the survey findings.

Organizations, in contrast, aren’t prioritizing opt-outs, with just 21% saying they give customers the opportunity to opt out of AI use and 22% thinking it would be an effective step to take. The top priorities for organizations were to ensure a human is involved in the decision-making (63%) and to explain how the application works (60%). Over half of the organizations consider explaining how the application works (58%), ensuring human involvement in decision-making (55%), and adopting AI ethics principles to be effective ways to gain customer trust.

The majority of respondents (92%) believe their organization needs to do more to reassure customers about the ways their data would be used with AI. Letting users opt out would be a highly effective way to do that.