Introduction

The NodeJS runtime for AWS Lambda is one of the most used, and for good reasons. It is fast with a short cold start duration, it is easy to develop for, and it can be used for Lambda@Edge functions. Besides these, JavaScript is one of the most popular languages. Ultimately, it "just makes sense" to use JavaScript for Lambdas. Sometimes, we might find ourselves in a niche situation where we would want to squeeze a little bit more performance out of our Lambda. In these cases, we could rewrite our whole function using something like Rust, or we could rewrite only a portion of our code using Rust and call it from a NodeJS host using a mechanism named foreign function interface (FFI).

NodeJS provides an API for addons, dynamically-linked shared objects usually written in C++. As we might guess from the title, we are not required to use C++. There are several implementations of this API in other languages, such as Rust. Probably the best known are node-ffi and Neon.

In this article, we will use Neon to build and load dynamic libraries in a NodeJS project.

Build a Dynamic Library with Neon

To make a function call to a binary addon from NodeJS, we have to do the following:

- Import the binary addon into our JavaScript code. Binary modules should implement the Node-API (N-API) and usually have the .node extension. Using the require function from CommonJS, we can import them. In the case of Neon, this N-API implementation is hidden from us, so we don't have to worry much about it (as long as we don't have to do some advanced debugging).

- In the implementation of the binary code, apply some transformation of the input data. The types used by JavaScript may not be compatible with the types used by Rust. This conversion has to be done for the input arguments and for the returned types as well. Neon provides the Rust variant of all the primitive JavaScript types and object types.

- Implement our business logic in the native code.

- Build the dynamic library. Rust makes this step convenient by letting us configure the [lib] section in the project's toml file. Moreover, if we are using Neon to initiate our project, this will be configured for us out of the box.

As for the first step, we want to create a Rust project with a Neon dependency for building a dynamic library. This can be done using npm init neon my-rust-lib. To be more accurate, this will create a so-called Neon project, which means it already has a package.json for handling Node-related things. The project structure will look something like this:

my-rust-lib/
├── Cargo.toml
├── README.md
├── package.json
└── src
    └── lib.rs

If we take a look at the package.json, we will find something that resembles the following:

{
  "name": "my-rust-library",
  "version": "0.1.0",
  "description": "My Rust library",
  "main": "index.node",
  "scripts": {
    "build": "cargo-cp-artifact -nc index.node -- cargo build --message-format=json-render-diagnostics",
    "build-debug": "npm run build --",
    "build-release": "npm run build -- --release",
    "install": "npm run build-release",
    "test": "cargo test"
  },
  "author": "Ervin Szilagyi",
  "license": "ISC",
  "devDependencies": {
    "cargo-cp-artifact": "^0.1"
  }
}

When we run the npm install command, our dynamic library will be built in release mode. It is important to know that this will target our current CPU architecture and operating system. If we want to build this package for use by an AWS Lambda, we will have to do a cross-target build to Linux x86-64 or arm64.

Taking a look at the lib.rs file, we can see the entry point of our library exposing a function named hello. The return type of this function is a string. It is also important to notice the conversion from a Rust &str to a JsString type. This type does the bridging for strings between JavaScript and Rust.
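The architecture pairing can be written down as a small lookup. This is only a sketch: ARCHITECTURES and matchesLambdaArch are hypothetical names introduced for illustration, the Lambda architecture names x86_64 and arm64 and the aarch64-unknown-linux-gnu target triple are assumptions not taken from this article, and the process.arch values are the ones NodeJS reports.

```javascript
// Lambda architecture setting -> Node's process.arch value and the Rust target triple.
// The arm64 triple is an assumption; only the x86-64 one appears in this article.
const ARCHITECTURES = {
  x86_64: { nodeArch: "x64", rustTarget: "x86_64-unknown-linux-gnu" },
  arm64: { nodeArch: "arm64", rustTarget: "aarch64-unknown-linux-gnu" },
};

// Hypothetical check: would an addon built natively on this machine
// match the given Lambda architecture?
function matchesLambdaArch(lambdaArch, nodeArch = process.arch, platform = process.platform) {
  const expected = ARCHITECTURES[lambdaArch];
  if (expected === undefined) {
    throw new Error(`Unknown Lambda architecture: ${lambdaArch}`);
  }
  return platform === "linux" && nodeArch === expected.nodeArch;
}

console.log(matchesLambdaArch("x86_64") ? "native build is fine" : "cross-target build needed");
```

If the check fails, the library has to be cross-compiled for the listed Rust target instead of being built with a plain npm install.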

use neon::prelude::*;

// Exported function: returns a JavaScript string built from a Rust &str
fn hello(mut cx: FunctionContext) -> JsResult<JsString> {
    Ok(cx.string("hello node"))
}

// Module entry point: registers the functions visible from JavaScript
#[neon::main]
fn main(mut cx: ModuleContext) -> NeonResult<()> {
    cx.export_function("hello", hello)?;
    Ok(())
}

After we have built our library, we can install it in another NodeJS project by running:

npm install <path>

The <path> should point to the location of our Neon project.
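Once installed, the addon is loaded like any other CommonJS module. A minimal sketch, assuming the package name my-rust-library from the generated package.json; tryLoadAddon is a hypothetical helper (not part of Neon) that returns null instead of throwing when the addon has not been built or installed:

```javascript
// Hypothetical helper: load a native addon by package name or path,
// returning null when it cannot be resolved or loaded.
function tryLoadAddon(nameOrPath) {
  try {
    return require(nameOrPath);
  } catch (err) {
    console.warn(`Could not load addon "${nameOrPath}": ${err.message}`);
    return null;
  }
}

// "my-rust-library" is the name assumed from the generated package.json.
const lib = tryLoadAddon("my-rust-library");
if (lib !== null) {
  console.log(lib.hello()); // calls the function exported from Rust
}
```

A failed load usually means the addon was built for another platform or was not built at all, so logging the error message before falling back tends to save debugging time.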

Building a Lambda Function with an Embedded Dynamic Library

In my previous article Running Serverless Lambdas with Rust on AWS we used an unbounded spigot algorithm for computing the first N digits of PI using the Rust programming language. In the following lines, we will extract this algorithm into its own dynamic library and call it from a Lambda function written in JavaScript. This algorithm is a good way to measure the performance increase when we jump from a JavaScript implementation to a Rust implementation.

The first thing we have to do is generate a Neon project. After that, we can extract the function which computes the first N digits of PI and wrap it in another function providing the glue code between Rust and JavaScript.

The whole implementation would look similar to this:

use neon::prelude::*;
use num_bigint::{BigInt, ToBigInt};
use num_traits::cast::ToPrimitive;
use once_cell::sync::OnceCell;
use tokio::runtime::Runtime;

#[neon::main]
fn main(mut cx: ModuleContext) -> NeonResult<()> {
    cx.export_function("generate", generate)?;
    Ok(())
}

// Create a singleton runtime used for async function calls.
// The runtime is initialized on first use; a failure is thrown as a JavaScript error.
fn runtime<'a, C: Context<'a>>(cx: &mut C) -> NeonResult<&'static Runtime> {
    static RUNTIME: OnceCell<Runtime> = OnceCell::new();

    RUNTIME.get_or_try_init(|| Runtime::new().or_else(|err| cx.throw_error(err.to_string())))
}

fn generate(mut cx: FunctionContext) -> JsResult<JsPromise> {
    // Transform the JavaScript types into Rust types
    let js_limit = cx.argument::<JsNumber>(0)?;
    let limit = js_limit.value(&mut cx);

    // Instantiate the runtime
    let rt = runtime(&mut cx)?;

    // Create a JavaScript promise used for return. Since our function can take a long period
    // to execute, it would be wise not to block the main thread of the JS host
    let (deferred, promise) = cx.promise();
    let channel = cx.channel();

    // Spawn a new task and attempt to compute the first N digits of PI
    rt.spawn_blocking(move || {
        let digits = generate_pi(limit.to_i64().unwrap());

        deferred.settle_with(&channel, move |mut cx| {
            let res: Handle<JsArray> = JsArray::new(&mut cx, digits.len() as u32);

            // Place the first N digits into a JavaScript array and hand it back to the caller
            for (i, &digit) in digits.iter().enumerate() {
                let val = cx.number(f64::from(digit));
                res.set(&mut cx, i as u32, val)?;
            }

            Ok(res)
        });
    });

    Ok(promise)
}

// Unbounded spigot algorithm: emits one decimal digit of PI per iteration
fn generate_pi(limit: i64) -> Vec<i32> {
    let mut q = 1.to_bigint().unwrap();
    let mut r = 180.to_bigint().unwrap();
    let mut t = 60.to_bigint().unwrap();
    let mut i = 2.to_bigint().unwrap();
    let mut res: Vec<i32> = Vec::new();

    for _ in 0..limit {
        let digit: BigInt = ((&i * 27 - 12) * &q + &r * 5) / (&t * 5);
        res.push(digit.to_i32().unwrap());

        let mut u: BigInt = &i * 3;
        u = (&u + 1) * 3 * (&u + 2);
        r = &u * 10 * (&q * (&i * 5 - 2) + r - &t * digit);
        q *= 10 * &i * (&i * 2 - 1);
        i = i + 1;
        t *= u;
    }

    res
}

What is important to notice here is that we return a promise to JavaScript. In the Rust code, we create a new runtime for handling asynchronous function executions, from which we build a promise that gets returned to JavaScript.

Moving on, we have to create the AWS Lambda function targeting the NodeJS Lambda Runtime. The simplest way to do this is to create a NodeJS project using npm init with an app.js file as the entry point and install the Rust dependency as mentioned above.

In app.js we can build the Lambda handler as follows:
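For comparison, and as a stand-in when the native library is not available locally, the same spigot loop can be sketched in plain JavaScript using BigInt. This is an illustrative port of the Rust function above, not necessarily the vanilla NodeJS implementation used for the benchmarks; the names generatePi and generate are chosen here only to mirror the Rust code.

```javascript
// Plain JavaScript port of the spigot loop, using BigInt for arbitrary precision.
function generatePi(limit) {
  let q = 1n;
  let r = 180n;
  let t = 60n;
  let i = 2n;
  const digits = [];

  for (let n = 0; n < limit; n++) {
    // BigInt division truncates, matching the integer division in the Rust version.
    const digit = ((i * 27n - 12n) * q + r * 5n) / (t * 5n);
    digits.push(Number(digit));

    let u = i * 3n;
    u = (u + 1n) * 3n * (u + 2n);
    r = u * 10n * (q * (i * 5n - 2n) + r - t * digit);
    q *= 10n * i * (i * 2n - 1n);
    i += 1n;
    t *= u;
  }

  return digits;
}

// Same call shape as the addon's generate(): resolves to an array of digits.
const generate = async (limit) => generatePi(limit);

console.log(generatePi(10).join(""));
```

Unlike the Rust version, this port computes on the main thread, so the promise returned by generate only mimics the interface and does not free the event loop.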

// Import the dynamic library using CommonJS
const compute_pi = require("compute-pi-rs");

// Build an async handler for the Lambda function. We use await for unpacking the promise provided by the embedded library
exports.handler = async (event) => {
    const digits = await compute_pi.generate(event.digits);
    return {
        digits: event.digits,
        pi: digits.join('')
    };
};
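The Rust glue code expects a number and converts it with to_i64().unwrap(), so it may be worth validating the event before calling into the addon. A minimal sketch, where parseDigits is a hypothetical helper and the upper bound of 10000 is an arbitrary assumption rather than a limit of the library:

```javascript
// Hypothetical guard: returns the requested digit count or throws a descriptive error.
// maxDigits is an assumed safety limit, not something imposed by the native library.
function parseDigits(event, maxDigits = 10000) {
  const digits = event?.digits;
  if (!Number.isInteger(digits) || digits < 1 || digits > maxDigits) {
    throw new RangeError(`"digits" must be an integer between 1 and ${maxDigits}`);
  }
  return digits;
}

console.log(parseDigits({ digits: 100 }));
```

Inside the handler, the result of parseDigits(event) would be passed to generate in place of event.digits.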

That's it. To deploy this function into AWS, we have to pack the app.js file and the node_modules folder into a zip archive and upload it to AWS Lambda. Assuming the target architecture of the Lambda matches that of the dependencies (we cannot have an x86-64 native dependency running on a Lambda function set to use arm64), our function should work as expected... or maybe not.

GLIBC_2.28 not found

With our Neon project, we build a dynamic library. One of the differences between dynamic and static libraries is that dynamic libraries can have shared dependencies that are expected to be present on the host machine at the time of execution. In contrast, a static library may contain everything required to be used as-is. If we build our dynamic library developed in Rust, we may encounter the following issue during its first execution:

/lib64/libc.so.6: version `GLIBC_2.28' not found (required by .../compute-pi-rs-lib/index.node)

The reason behind this issue, as explained in this blog post, is that Rust dynamically links to the C standard library, more specifically the GLIBC implementation. This should not be a problem, since GLIBC should be present on essentially every Linux system. However, it becomes an issue when the version of GLIBC used at build time differs from the version present on the system executing the binary. If we are using cross for building our library, the GLIBC version of the Docker container used by cross may be different from the one present in the Lambda Runtime on AWS.

The solution is to build the library on a system that has the same GLIBC version. The most reliable solution I found is to use an Amazon Linux Docker image as the build image instead of the default cross image. cross can be configured to use a custom image for compilation and building. What we have to do is provide a Dockerfile with Amazon Linux 2 as its base image and add the configuration needed to build Rust code. The Dockerfile could look like this:
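The rule behind the error can be expressed as a version comparison: the host's GLIBC has to be at least as new as the version the library was linked against. The sketch below uses hypothetical helper names, and it assumes that NodeJS exposes the runtime GLIBC version through the glibcVersionRuntime field of its diagnostic report on glibc-based Linux hosts:

```javascript
// Compare dotted version strings numerically, e.g. "2.28" vs "2.26".
function compareVersions(a, b) {
  const pa = a.split(".").map(Number);
  const pb = b.split(".").map(Number);
  for (let i = 0; i < Math.max(pa.length, pb.length); i++) {
    const diff = (pa[i] ?? 0) - (pb[i] ?? 0);
    if (diff !== 0) return Math.sign(diff);
  }
  return 0;
}

// The host can load the library only if its GLIBC is at least as new as the required one.
function glibcSatisfies(hostVersion, requiredVersion) {
  return compareVersions(hostVersion, requiredVersion) >= 0;
}

// Assumption: on glibc-based Linux the diagnostic report carries the runtime GLIBC version;
// on other hosts (macOS, Windows, musl) the field is absent and undefined is returned.
function hostGlibcVersion() {
  return process.report?.getReport()?.header?.glibcVersionRuntime;
}

// A host with GLIBC 2.26 (an illustrative value) cannot load a library requiring GLIBC_2.28.
console.log(glibcSatisfies("2.26", "2.28"));
```

Calling hostGlibcVersion() inside the Lambda and inside the build container is one way to confirm whether the two environments really differ.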

FROM public.ecr.aws/amazonlinux/amazonlinux:2.0.20220912.1

ENV RUSTUP_HOME=/usr/local/rustup \
    CARGO_HOME=/usr/local/cargo \
    PATH=/usr/local/cargo/bin:$PATH \
    RUST_VERSION=1.63.0

RUN yum install -y gcc gcc-c++ openssl-devel; \
    curl https://sh.rustup.rs -sSf | sh -s -- --no-modify-path --profile minimal --default-toolchain $RUST_VERSION -y; \
    chmod -R a+w $RUSTUP_HOME $CARGO_HOME; \
    rustup --version; \
    cargo --version; \
    rustc --version;

WORKDIR /target

In the second step, we have to create a toml file named Cross.toml in the root folder of our project. In this file we have to specify the path to the Dockerfile above, for example:

[target.x86_64-unknown-linux-gnu]
dockerfile = "./Dockerfile-x86-64"

This toml file will be used automatically at each build. Instead of the base cross Docker image, the specified Dockerfile will be used for the custom image definition.

The reason for using Amazon Linux 2 is that the Lambda Runtime itself is based on it. We can find more information about runtimes and dependencies in the AWS documentation about runtimes.

Performance and Benchmarks

Setup for Benchmarking

For my previous article Running Serverless Lambdas with Rust on AWS we used an unbounded spigot algorithm to compute the first N digits of PI. We will use the same algorithm for the upcoming benchmarks as well. We have seen the implementation of this algorithm above. To summarize, our Lambda function written in NodeJS will use FFI to call a function written in Rust, which will return a list with the first N digits of PI.

To be able to compare our measurements with the results gathered for a Lambda written entirely in Rust, we will use the same AWS Lambda configuration: 128 MB of RAM allocated, with the Lambda deployed in us-east-1. Measurements will be performed for both x86-64 and arm64 architectures.

Cold Start/Warm Start

Using X-Ray, we can get an interesting breakdown of how our Lambda performs and where the time is spent during each run. For example, here we can see a trace for a cold start execution for an x86-64 Lambda:

In the following we can see the trace for a warm start of the same Lambda:

The initialization (cold start) period is pretty standard for a NodeJS runtime. In my previous article, we measured cold starts between 100 ms and 300 ms for NodeJS. The cold start period for the current execution falls squarely into this interval.

Performance Comparisons

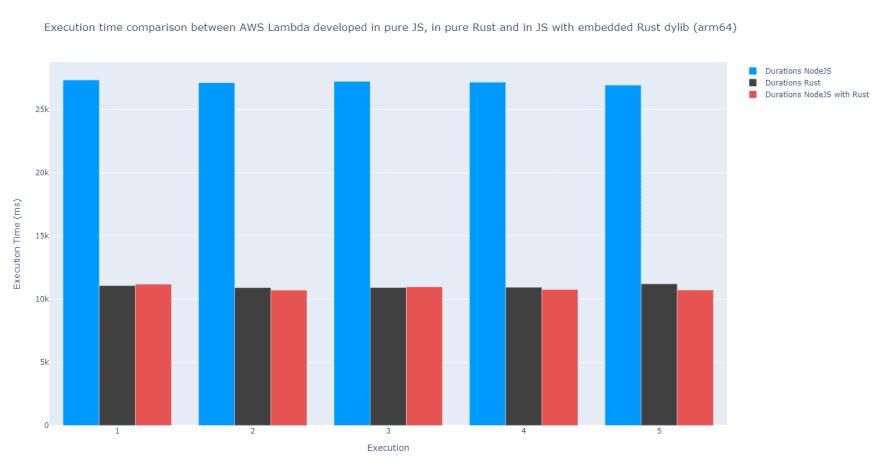

As usual, I measured the running time of 5 consecutive executions of the same Lambda function. I did this for both x86-64 and arm64 architectures. These are the results I observed:

| | Duration – run 1 | Duration – run 2 | Duration – run 3 | Duration – run 4 | Duration – run 5 |
|---|---|---|---|---|---|
| x86-64 | 5029.48 ms | 5204.80 ms | 4811.30 ms | 4852.36 ms | 4829.74 ms |
| arm64 | 11064.26 ms | 10703.61 ms | 10968.51 ms | 10755.36 ms | 10716.85 ms |
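To make the five runs easier to compare, the averages can be computed with a few lines of JavaScript. This is only a convenience sketch over the numbers in the table above; the runs object and the mean helper are names introduced here for illustration.

```javascript
// Durations in milliseconds, copied from the table above.
const runs = {
  "x86-64": [5029.48, 5204.80, 4811.30, 4852.36, 4829.74],
  arm64: [11064.26, 10703.61, 10968.51, 10755.36, 10716.85],
};

// Arithmetic mean of a list of numbers.
const mean = (values) => values.reduce((sum, v) => sum + v, 0) / values.length;

for (const [arch, durations] of Object.entries(runs)) {
  console.log(`${arch}: mean ${mean(durations).toFixed(2)} ms`);
}
```

The averages come out at about 4945.54 ms for x86-64 and 10841.72 ms for arm64, so in these runs the arm64 function took roughly 2.2 times as long.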

Comparing them to vanilla NodeJS and vanilla Rust implementations, we get the following chart for x86-64:

With Graviton (arm64) it looks similar:

According to my measurements, the function with the dynamic library has a similar execution time to the one developed in vanilla Rust. In fact, it appears to be a little bit faster. This is somewhat unexpected, since there is some overhead when using FFI. Although this performance increase could be considered within the margin of error, we should also take into account that the measurements were not done on the same day. The underlying hardware might have performed slightly better. Or I was simply lucky and got an environment with less load from other AWS users, who knows...

Final Thoughts

Combining native code with NodeJS can be a fun afternoon project, but would this make sense in a production environment?

In most cases probably not.

The reason why I am saying this is that modern NodeJS interpreters are blazingly fast. They can perform at the level required for most of the use cases for which a Lambda function would be adequate. Having to deal with the baggage of complexity introduced by a dynamic library written in Rust may not be the right solution. Moreover, in most cases, Lambda functions are small enough that it would be wiser to migrate them entirely to a more performant runtime, rather than extracting a certain part of them into a library. Before deciding to partially or entirely rewrite a function, I recommend doing some actual measurements and performance tests. X-Ray can help a lot to trace and diagnose bottlenecks in our code.

On the other hand, in certain situations, it might be useful to have a native binding as a dynamic library. For example:

- in the case of cryptographic functions, like hashing, encryption/decryption, tamper verification, etc. These can be CPU-intensive tasks, so it may be a good idea to use a native approach for them;

- if we are trying to do image processing, AI, data engineering, or complex transformations on a huge amount of data;

- if we need to provide a single library (developed in Rust) company-wide without the need to rewrite it for every other stack in use. We could use wrappers, such as Neon, to expose it to other APIs, such as that of NodeJS.

Links and References

- Foreign function interface: https://en.wikipedia.org/wiki/Foreign_function_interface

- NodeJS – C++ addons: https://nodejs.org/api/addons.html

- GitHub – node-ffi: https://github.com/node-ffi/node-ffi

- Neon: https://neon-bindings.com/docs/introduction

- Node-API (N-API): https://nodejs.org/api/n-api.html#node-api

- Tokio Runtimes: https://docs.rs/tokio/1.20.1/tokio/runtime/index.html

- Building Rust binaries in CI that work with older GLIBC: https://kobzol.github.io/rust/ci/2021/05/07/building-rust-binaries-in-ci-that-work-with-older-glibc.html

- cross – Custom Docker images: https://github.com/cross-rs/cross#custom-docker-images

- Lambda runtimes: https://docs.aws.amazon.com/lambda/latest/dg/lambda-runtimes.html

- Running Serverless Lambdas with Rust on AWS: https://ervinszilagyi.dev/articles/running-serverless-lambdas-with-rust-aws.html

The code used in this article can be found on GitHub at https://github.com/Ernyoke/aws-lambda-js-with-rust