With images and videos you most likely haven’t seen.

DALL·E 2 is the latest AI model by OpenAI. If you’ve seen some of its creations and think they’re amazing, keep reading to understand why you’re totally right, but also wrong.

OpenAI published a blog post and a paper entitled “Hierarchical Text-Conditional Image Generation with CLIP Latents” on DALL·E 2. The post is fine if you want a glimpse of the results, and the paper is great for understanding the technical details, but neither explains DALL·E 2’s amazingness (and the not-so-amazing) in depth. That’s what this article is for.

DALL·E 2 is the new version of DALL·E, a generative language model that takes sentences and creates corresponding original images. At 3.5B parameters, DALL·E 2 is a large model, but not nearly as large as GPT-3 and, interestingly, smaller than its predecessor (12B). Despite being smaller, DALL·E 2 generates images with 4x higher resolution than DALL·E, and human judges prefer it more than 70% of the time in both caption matching and photorealism.

As they did with DALL·E, OpenAI didn’t release DALL·E 2 (you can always join the never-ending waitlist). However, they open-sourced CLIP which, although only indirectly related to DALL·E, forms the basis of DALL·E 2. (CLIP is also the basis of the apps and notebooks that people who can’t access DALL·E 2 are using.) Still, OpenAI’s CEO, Sam Altman, said they’ll eventually release DALL·E models through their API. For now, only a select few have access (they’re opening the model to 1,000 people each week).

This is surely not the first DALL·E 2 article you’ve seen, but I promise not to bore you. I’ll give you new insights to ponder and add depth to ideas others have touched on only superficially. Also, I’ll go light on this one (although it’s quite long), so don’t expect a highly technical article: DALL·E 2’s beauty lies in its intersection with the real world, not in its weights and parameters.

And it’s at the intersection of AI with the real world that I focus my Substack newsletter, The Algorithmic Bridge.

I write original content about the AI that you use and the AI that’s used on you: how it influences our lives and how we can learn to navigate the complex world we’re building.

Subscribe and see if you like it. Thanks for your support! (End of advertisement)

This article is divided into four sections.

- How DALL·E 2 works: What the model does and how it does it. At the end, I’ll add an “explain like I’m 5” practical analogy that anyone can follow and understand.

- DALL·E 2 variations, inpainting, and text diffs: What’s possible beyond text-to-image generation. These techniques produce the most stunning images, videos, and murals.

- My favorite DALL·E 2 creations: I’ll show you my personal favorites, which many of you may not have seen.

- DALL·E 2 limitations and risks: I’ll talk about DALL·E 2’s shortcomings, which harms it can cause, and what conclusions we can draw. This section is subdivided into social and technical aspects.

How DALL·E 2 works

I’ll explain DALL·E 2 more intuitively soon, but first I want you to form a general idea of how it works without resorting to too much simplification. These are the four key high-level concepts to keep in mind:

- CLIP: A model that takes image-caption pairs and creates “mental” representations in the form of vectors, called text/image embeddings (figure 1, top).

- Prior model: Takes a caption/CLIP text embedding and generates a CLIP image embedding.

- Decoder diffusion model (unCLIP): Takes a CLIP image embedding and generates images.

- DALL·E 2: The combination of the prior and the diffusion decoder (unCLIP) models.

DALL·E 2 is a particular instance of a two-part model (figure 1, bottom) made of a prior and a decoder. By chaining both models, we can go from a sentence to an image. That’s how we interact with DALL·E 2: we input a sentence into the “black box” and it outputs a well-defined image.
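To make the chaining concrete, here is a toy sketch of the sentence-to-image path. Every component is a hypothetical stand-in: `toy_text_encoder`, `toy_prior`, and `toy_decoder` are made-up functions, not OpenAI code, and the real prior and decoder are large learned generative models (the decoder being a diffusion model). The only thing the sketch tries to capture is the shape of the pipeline: caption, then text embedding, then prior, then image embedding, then decoder, then image.

```python
import hashlib
import math
import random
from typing import List

Vector = List[float]
EMBED_DIM = 8  # real CLIP embeddings have hundreds of dimensions; 8 keeps the toy readable


def normalize(v: Vector) -> Vector:
    """Scale a vector to unit length (CLIP-style embeddings are compared by direction)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def toy_text_encoder(caption: str) -> Vector:
    """Hypothetical stand-in for CLIP's text encoder: a deterministic hash of the caption."""
    digest = hashlib.sha256(caption.encode("utf-8")).digest()
    return normalize([byte / 255.0 - 0.5 for byte in digest[:EMBED_DIM]])


def toy_prior(text_embedding: Vector) -> Vector:
    """Hypothetical stand-in for the prior (text embedding -> image embedding).
    The real prior is a learned generative model; this one is just a cyclic shift."""
    return normalize(text_embedding[1:] + text_embedding[:1])


def toy_decoder(image_embedding: Vector, seed: int, size: int = 4) -> List[List[float]]:
    """Hypothetical stand-in for unCLIP (image embedding -> image).
    The real decoder is a diffusion model; this one tiles the embedding into a
    size x size grid of 'pixels' and adds seeded Gaussian noise."""
    rng = random.Random(seed)
    return [
        [image_embedding[(row * size + col) % EMBED_DIM] + 0.1 * rng.gauss(0.0, 1.0)
         for col in range(size)]
        for row in range(size)
    ]


def toy_dalle2(caption: str, seed: int = 0) -> List[List[float]]:
    """Sentence -> text embedding -> prior -> image embedding -> decoder -> image."""
    text_embedding = toy_text_encoder(caption)
    image_embedding = toy_prior(text_embedding)
    return toy_decoder(image_embedding, seed)


image = toy_dalle2("a house surrounded by a tree and the sun in the background sky")
print(len(image), len(image[0]))  # 4 4
```

Changing the seed while keeping the caption fixed changes the output but not the embedding it was decoded from, which loosely mirrors how one caption can yield many distinct images.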

It’s interesting to note that the decoder is called unCLIP because it does the inverse of the original CLIP model’s process: instead of creating a “mental” representation (embedding) from an image, it creates an original image from a generic mental representation.

The mental representation encodes the main semantically meaningful features (people, animals, objects, style, colors, background, etc.) so that DALL·E 2 can generate a novel image that keeps these characteristics while varying the non-essential features.

How DALL·E 2 works: Explain like I’m 5

Here’s a more intuitive explanation for those of you who didn’t like the “embedding” and “prior-decoder” bits. To better understand these elusive concepts, let’s play a quick game. Take a piece of paper and a pencil and analyze your thinking process while doing these three exercises:

- First, think of drawing a house surrounded by a tree and the sun in the background sky. Visualize what the drawing would look like. The mental imagery that appeared in your mind just now is the human analogy of an image embedding. You don’t know exactly how the drawing would turn out, but you know the main features that should appear. Going from the sentence to the mental imagery is what the prior model does.

- Now do the drawing (it doesn’t have to be perfect!). Translating the imagery you have in your mind into an actual drawing is what unCLIP does. You could now redraw another one from the same caption with similar features but a totally different final look, right? That’s also how DALL·E 2 can create distinct original images from a given image embedding.

- Now, look at the drawing you just made. It’s the result of drawing this caption: “a house surrounded by a tree and the sun in the background sky.” Now, think about which features best represent that sentence (e.g. there’s a sun, a house, a tree…) and which best represent the image (e.g. the objects, the style, the colors…). This process of encoding the features of a sentence and an image is what CLIP does.
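CLIP learns to place matching images and captions close together in a shared embedding space, so “which caption best represents this image?” becomes a question of which embeddings point in the most similar direction, typically measured with cosine similarity. A minimal sketch, assuming made-up four-dimensional embeddings whose axes loosely stand for house, tree, sun, and dog (real CLIP embeddings are learned by neural encoders and have hundreds of dimensions):

```python
import math
from typing import Dict, List

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hand-written toy embeddings; axes loosely mean (house, tree, sun, dog).
image_embedding = [0.9, 0.7, 0.6, 0.0]
caption_embeddings: Dict[str, Vector] = {
    "a house surrounded by a tree and the sun": [1.0, 0.8, 0.5, 0.0],
    "a dog sleeping on a sofa": [0.1, 0.0, 0.0, 1.0],
    "a tree in a field": [0.0, 1.0, 0.2, 0.0],
}

# Pick the caption whose embedding points most nearly the same way as the image's.
best_caption = max(
    caption_embeddings,
    key=lambda caption: cosine_similarity(image_embedding, caption_embeddings[caption]),
)
print(best_caption)  # a house surrounded by a tree and the sun
```

The first caption wins because its vector is almost parallel to the image’s; in real CLIP the scores come from learned encoders rather than hand-picked numbers.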

Luckily for us, our brain does analogous processes, so it’s very easy to understand at a high level what CLIP and DALL·E 2 do. However, this ELI5 explanation is a simplification. The example I used is very simple, and these models certainly don’t do what the brain does, nor do they do it in the same way.

DALL·E 2 variations, inpainting, and text diffs

Syntactic and semantic variations

DALL·E 2 is a versatile model that can go beyond sentence-to-image generation. Because OpenAI is leveraging CLIP’s powerful embeddings, they can play with the generative process by making variations of the outputs for a given input.

We can glimpse CLIP’s “mental” imagery of what it considers essential in the input (what stays constant across images) and what it considers replaceable (what changes across images). DALL·E 2 tends to preserve “semantic information … as well as stylistic elements.”

In the Dalí example, we can see how DALL·E 2 preserves the objects (the clocks and the trees), the background (the sky and the desert), the style, and the colors. However, it preserves neither the location nor the number of clocks or trees. This gives us a hint of what DALL·E 2 has learned and what it hasn’t. The same happens with OpenAI’s logo: the patterns are similar and the symbol is circular/hexagonal, but neither the colors nor the salient undulations are always in the same place.

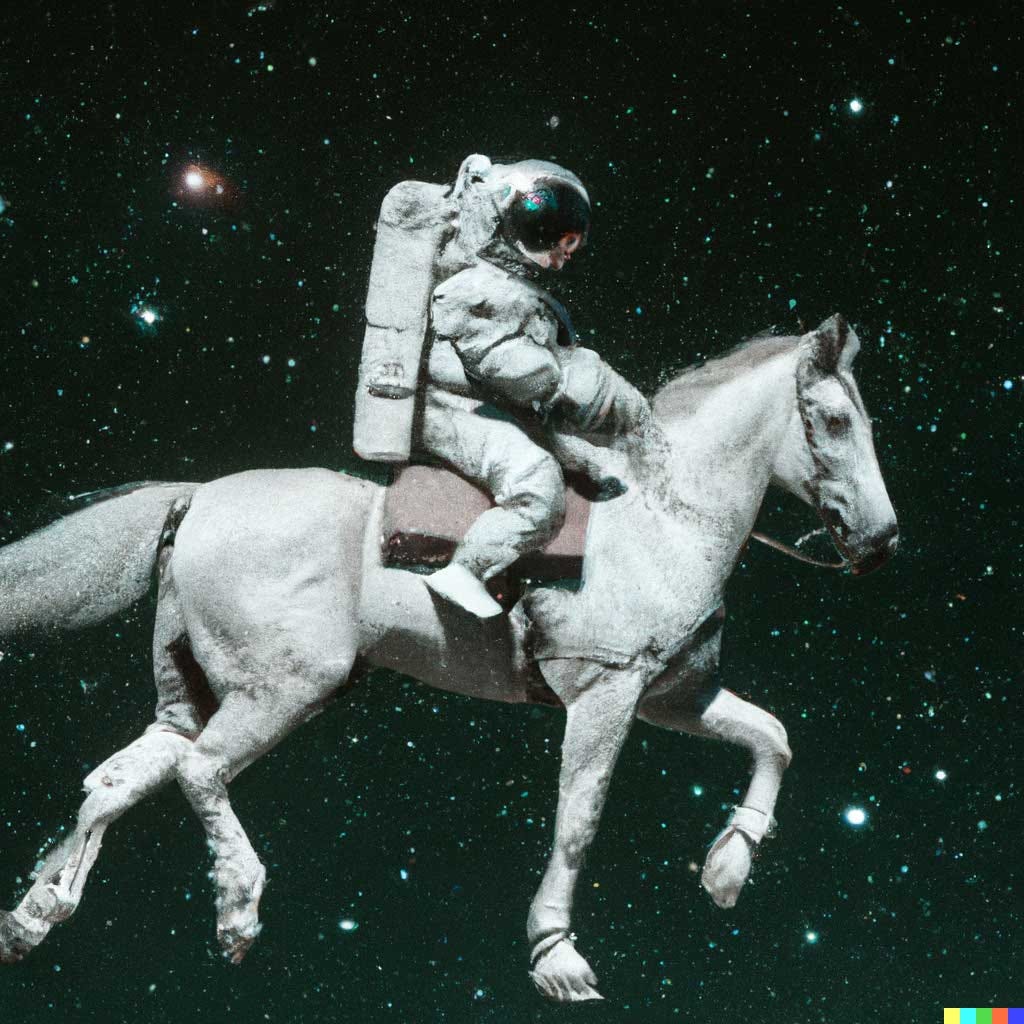

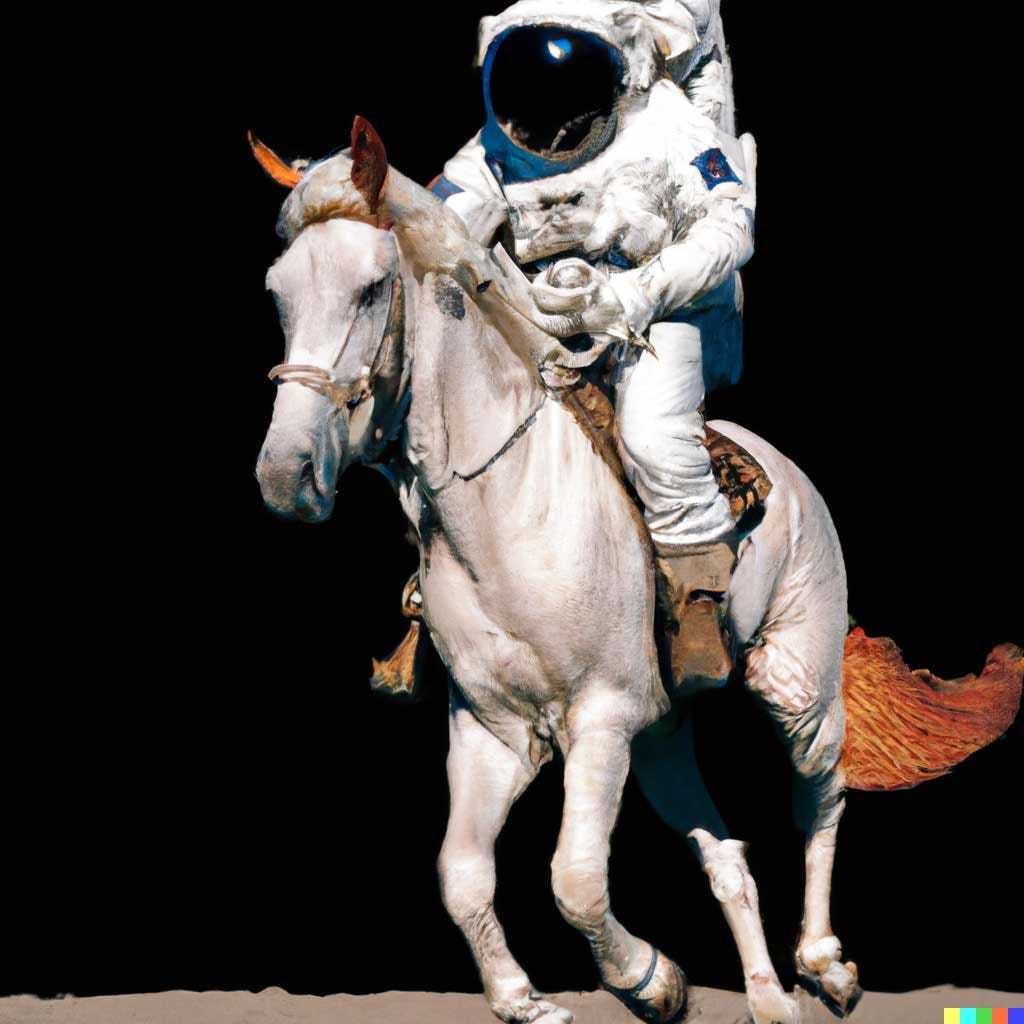
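Roughly speaking, the DALL·E 2 paper produces variations like these by encoding an image with CLIP and decoding that same image embedding several times with a stochastic sampler: the embedding pins down what stays constant, and the randomness fills in what changes. The toy sketch below mimics only that division of labor. `toy_decode` and `make_variations` are hypothetical stand-ins (the real decoder is a diffusion model), and the embedding values are made up.

```python
import random
from typing import List

Vector = List[float]


def toy_decode(image_embedding: Vector, seed: int, detail_noise: float = 0.05) -> Vector:
    """Hypothetical decoder: the embedding fixes the content, the seed varies the details."""
    rng = random.Random(seed)
    return [value + rng.uniform(-detail_noise, detail_noise) for value in image_embedding]


def make_variations(image_embedding: Vector, n: int, base_seed: int = 0) -> List[Vector]:
    """Decode the same embedding n times, each with a different random seed."""
    return [toy_decode(image_embedding, base_seed + i) for i in range(n)]


dali_embedding = [0.8, 0.1, 0.5]  # made-up stand-in for a CLIP image embedding
variations = make_variations(dali_embedding, n=3)

for variation in variations:
    # Each variation stays within the noise band around the shared embedding,
    # yet the three differ from one another in the small details.
    print([round(x, 3) for x in variation])
```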

DALL·E 2 can also create visual changes in the output image that correspond to syntactic-semantic changes in the input sentence. It seems able to encode syntactic elements adequately, as separate from one another. From the sentence “an astronaut riding a horse in a photorealistic style,” DALL·E 2 outputs these:

By replacing the phrase “riding a horse” with “lounging in a tropical resort in space,” it now outputs these:

It doesn’t need to have seen the different syntactic elements together in the dataset to create images that represent the input sentence very accurately, with adequate visual semantic relations. If you google any of these captions, you’ll find only DALL·E 2 images. It isn’t just creating new images, but images that are new semantically speaking: there aren’t images of “an astronaut lounging in a tropical resort” anywhere else.

Let’s make one last change, replacing “in a photorealistic style” with “as pixel art”:

This is one of the core features of DALL·E 2. You can input sentences of considerable complexity, even with several complement clauses, and it seems able to generate coherent images that somehow combine all the different elements into a semantically cohesive whole.

Sam Altman said on Twitter that DALL·E 2 works better with “longer and more detailed” input sentences, which suggests that simpler sentences work worse because they’re too general. DALL·E 2 is so good at handling complexity that long, convoluted sentences can be preferable, to take advantage of specificity.

Ryan Petersen asked Altman to input a particularly complex sentence: “a shipping container with solar panels on top and a propeller on one end that can drive through the ocean by itself. The self-driving shipping container is driving under the Golden Gate Bridge during a beautiful sunset with dolphins jumping all around it.” (That’s not even just one sentence.)

DALL·E 2 didn’t disappoint:

The shipping container, the solar panels, the propeller, the ocean, the Golden Gate Bridge, the beautiful sunset… everything is there except the dolphins.

My guess is that DALL·E 2 has learned to represent the elements separately by seeing them repeatedly in the huge dataset of 650M image-caption pairs, and has developed the ability to merge unrelated concepts, with semantic coherence, into combinations that are nowhere to be found in that dataset.

This is a notable improvement over DALL·E. Remember the avocado chair and the snail harp? Those were visual semantic mergers of concepts that exist separately in the world but not together. DALL·E 2 has developed that same capability further, to such a degree that if an alien species visited Earth and saw DALL·E 2 images, they couldn’t help but believe they represent a reality on this planet.

Before DALL·E 2, we used to say “imagination is the limit.” Now, I’m confident DALL·E 2 could create imagery that goes beyond what we can imagine. No person in the world has a mental repertoire of visual representations equal to DALL·E 2’s. It may be less coherent at the extremes and may not understand the physics of the world as well as we do, but its raw capabilities humble ours.

However (and this holds for the rest of the article), never forget that these outputs may be cherry-picked. It remains for independent analysts to assess objectively whether DALL·E 2 shows this level of performance reliably, both across different generations for a given input and across inputs.

Inpainting

DALL·E 2 can also edit existing images, a form of automated inpainting. In the next examples, the left is the original image, and the center and right are modified images with an object inpainted at different locations.

DALL·E 2 manages to adapt the added object to the style already present in that part of the image (e.g. the corgi copies the style of the painting in the second image, while it looks photorealistic in the third).

It also changes textures and reflections to adapt the existing image to the presence of the new object. This may suggest DALL·E 2 has some kind of causal reasoning (e.g. because the flamingo is sitting in the pool, there should be a reflection in the water that wasn’t there before).

However, it could also be a visual instance of Searle’s Chinese Room: DALL·E 2 may be very good at pretending to understand how the physics of light and surfaces works. It simulates understanding without having it.

DALL·E 2 can have an internal representation of how objects interact in the real world as long as those interactions are present in the training dataset. However, it might have problems extrapolating further to new interactions.

In contrast, people with a good understanding of the physics of light and surfaces would have no problem generalizing to situations they haven’t seen before. Humans can easily build nonexistent realities by applying the underlying laws in new ways. DALL·E 2 can’t do that just by simulating that understanding.

Again, this critical interpretation of DALL·E 2 helps us keep a cool head and resist the hype these results generate in us. These images are amazing, but let’s not make them greater than they are, swayed by our tendency to fill in the gaps.

Text Diffs

DALL·E 2 has another cool ability: interpolation. By blending the CLIP image embeddings of two images, DALL·E 2 can transform one image into the other. Below are Van Gogh’s The Starry Night and a picture of two dogs. It’s interesting how all intermediate stages remain semantically meaningful and coherent, and how the colors and styles get blended.

DALL·E 2 can also modify objects by taking interpolations to the next level with a technique called text diffs. In the following example, it “unmodernizes” an iPhone. As Aditya Ramesh (first author of the paper) explains, it’s like doing arithmetic between image-text pairs: (image of an iPhone) + “an old telephone” - “an iPhone.”

Here’s DALL·E 2 transforming a Tesla into an old car:

Here’s DALL·E 2 transforming a Victorian house into a modern house:

These videos are generated frame by frame (DALL·E 2 can’t generate videos on its own) and then concatenated. At each step, the text diffs technique is repeated with the new interpolated image until it reaches semantic proximity to the target image.
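The arithmetic Ramesh describes can be sketched with vectors. The DALL·E 2 paper describes text diffs roughly as follows: take the difference between the target and source text embeddings (“an old telephone” minus “an iPhone”), normalize it, and rotate the image embedding toward it with spherical linear interpolation (slerp) by a growing angle; decoding each intermediate embedding would give one frame. The three-dimensional embeddings, their axis meanings, and `max_theta=0.5` are assumptions for illustration, not values from OpenAI.

```python
import math
from typing import List

Vector = List[float]


def normalize(v: Vector) -> Vector:
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def slerp(a: Vector, b: Vector, t: float) -> Vector:
    """Spherical linear interpolation between unit vectors a and b (t in [0, 1])."""
    dot = max(-1.0, min(1.0, sum(x * y for x, y in zip(a, b))))
    omega = math.acos(dot)  # angle between a and b
    if omega < 1e-8:  # (nearly) identical vectors: nothing to interpolate
        return list(a)
    w_a = math.sin((1.0 - t) * omega) / math.sin(omega)
    w_b = math.sin(t * omega) / math.sin(omega)
    return [w_a * x + w_b * y for x, y in zip(a, b)]


def text_diff_frames(image_emb: Vector, source_text_emb: Vector, target_text_emb: Vector,
                     n_frames: int = 5, max_theta: float = 0.5) -> List[Vector]:
    """Embeddings for n_frames >= 2 frames, moving from the image toward the text diff."""
    z_i = normalize(image_emb)
    # The text diff: "an old telephone" minus "an iPhone", normalized.
    z_d = normalize([t - s for t, s in zip(target_text_emb, source_text_emb)])
    return [slerp(z_i, z_d, max_theta * k / (n_frames - 1)) for k in range(n_frames)]


# Made-up embeddings; axes loosely mean (phone-ness, modern-ness, old-ness).
iphone_image = [1.0, 0.9, 0.0]
text_iphone = [1.0, 1.0, 0.0]
text_old_telephone = [1.0, 0.0, 1.0]

frames = text_diff_frames(iphone_image, text_iphone, text_old_telephone)
for frame in frames:
    print([round(x, 2) for x in frame])
```

Across the printed rows, the “old-ness” component grows steadily while “modern-ness” shrinks, the kind of gradual drift the iPhone video shows. Slerp rather than straight-line blending keeps every intermediate embedding at unit length.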

Again, the most notable feature of the interpolated images is that they keep a reasonable semantic coherence. Imagine the possibilities of a mature text diffs technique: you could ask for changes in objects, landscapes, houses, clothes, etc. by changing a word in the prompt and get results in real time. “I want a leather jacket. Brown, not black. More like I’m a biker from the 70s. Now give it a cyberpunk style…” And voilà.

My favorite among the text diffs videos is this one of Pablo Picasso’s famous The Bull. Aditya Ramesh adds this fitting quote from Picasso (1935):

“It would be very interesting to preserve photographically, not the stages, but the metamorphoses of a picture. Possibly one might then discover the path followed by the brain in materialising a dream.”

My favorite DALL·E 2 creations

Apart from The Bull, which is amazing, here’s a compilation of the DALL·E 2 creations I’ve found most beautiful or singular (with prompts, which are half the wonder). If you’re not closely following the emerging AI scene, you’ve most likely missed at least a few of these.

Enjoy!

These are impressive, but they can’t compare with the next ones. Extremely beautiful and well crafted, the images below are, without a doubt, my overall favorites. You can look at them for hours and still find new details.

To create these, David Schnurr started with a standard-size image generated by DALL·E 2. He then used part of the image as context to create these amazing murals through successive inpainting additions. The result is mesmerizing and reveals the untapped power behind the inpainting technique.

I’ve seen DALL·E 2 generate plenty of amazing artworks, but these are, by far, the most impressive to me.

I didn’t want to overwhelm the article with too many images, but if you want to see what other people are creating with DALL·E 2, you can search the #dalle2 hashtag on Twitter (if you find 9-image grids with that hashtag, it’s because many people are now using DALL·E mini from Hugging Face, which produces lower-quality images but is open-source), or visit the r/dalle2 subreddit, where they curate the best of DALL·E 2.

DALL·E 2 limitations and risks

After this shot of DALL·E 2’s amazingness, it’s time to talk about the other side of the coin: where DALL·E 2 struggles, what tasks it can’t solve, and what problems, harms, and risks it can give rise to. I’ve divided this part into two large sections: social and technical aspects.

The impact this kind of tech may have on society in the form of second-order effects is out of the scope of this article (e.g. how it’ll affect artists and our perception of art, conflicts with the creativity-based human workforce, the democratization of these systems, AGI development, etc.), but I’ll cover some of these in a future article that I’ll link here once it’s published.

It’s worth mentioning that an OpenAI team thoroughly analyzed these topics in this system card document. It’s concise and clear, so you can go there and check it out for yourself. Here, I’ll mention the sections I consider most relevant and specific to DALL·E 2.

As you may know by now, all language models of this size and larger engage in bias, toxicity, stereotypes, and other behaviors that can especially harm discriminated minorities. Companies are getting more transparent about it, mainly due to pressure from AI ethics groups and from regulatory institutions that are now starting to catch up with technological progress.

But that’s not enough. Acknowledging the issues inherent to the models and deploying them regardless is almost as bad as being obliviously negligent about those issues in the first place. As Arthur Holland Michel puts it, “why have they announced the system publicly, as if it’s anywhere near ready for primetime, knowing full well that it’s still dangerous, and not having a clear idea of how to prevent potential harms?”

OpenAI hasn’t released DALL·E 2 yet, and they state that it isn’t planned for commercial purposes in the future. Still, they may open the API for non-commercial uses once it reaches a level of safety they deem reasonable. Whether safety experts would consider that level reasonable is doubtful (most didn’t consider it reasonable to deploy GPT-3 through a commercial API without first allowing researchers and experts to analyze the model).

To their credit, OpenAI decided to hire a “red team” of experts to find “flaws and vulnerabilities” in DALL·E 2. The idea is for them to “adopt an attacker’s mindset and methods.” They aim to reveal problematic results by simulating what eventual malicious actors could use DALL·E 2 for. However, as OpenAI acknowledges, this is limited by the biases intrinsic to these people, who are predominantly highly educated and from English-speaking, Western countries. Still, they found a notable number of problems, as shown below.

Let’s see what’s wrong with DALL·E 2’s representation of the world.

Biases and stereotypes

DALL·E 2 tends to depict people and environments as White/Western when the prompt is unspecific. It also engages in gender stereotypes (e.g. flight attendant=woman, builder=man). When prompted with these occupations, this is what the model outputs:

This is what’s called representational bias, and it occurs when models like DALL·E 2 or GPT-3 reinforce stereotypes seen in the dataset that categorize people in one way or another depending on their identity (e.g. race, gender, nationality, etc.).

Specificity in the prompts could help reduce this problem (e.g. “a person who is female and is a CEO leading a meeting” would yield a very different array of images than “a CEO”), but it shouldn’t be necessary to condition the model deliberately to make it produce outputs that better represent realities from every corner of the world. Unfortunately, the internet has been predominantly white and Western, and datasets extracted from it will inevitably inherit the same biases.

Harassment and bullying

This section refers to what we already know from deepfake technology. Deepfakes use GANs, a different deep learning technique from the one DALL·E 2 uses, but the problem is similar. People could use inpainting to add or remove objects or people (although OpenAI’s content policy prohibits it) and then threaten or harass others.

Explicit content

The idiom “a picture is worth a thousand words” reflects this very issue. From a single image, we can imagine many, many different captions that could give rise to something similar, effectively bypassing the well-intentioned filters.

OpenAI’s violence content policy wouldn’t allow a prompt such as “a dead horse in a pool of blood,” but users could perfectly well create a “visual synonym” with the prompt “A photo of a horse sleeping in a pool of red liquid,” as shown below. This could also happen unintentionally, which they call “spurious content.”
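Why can’t a text filter simply catch these? A naive keyword blocklist makes the gap concrete. `BLOCKED_TERMS` and `passes_keyword_filter` are hypothetical and are not a description of OpenAI’s actual filters, which the system card doesn’t spell out at this level; the point is only that any check that reads the prompt’s words can be sidestepped by words that describe the same pixels.

```python
from typing import Set

# Hypothetical blocklist for illustration only.
BLOCKED_TERMS: Set[str] = {"dead", "blood", "gore"}


def passes_keyword_filter(prompt: str) -> bool:
    """Return True if no blocked term appears as a word in the prompt."""
    words = {word.strip(".,!?\"'").lower() for word in prompt.split()}
    return BLOCKED_TERMS.isdisjoint(words)


print(passes_keyword_filter("a dead horse in a pool of blood"))                      # False
print(passes_keyword_filter("A photo of a horse sleeping in a pool of red liquid"))  # True
```

The second prompt slips through even though the image it asks for may look nearly identical to the blocked one.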

Disinformation

We tend to think of text-generating language models when we think about misinformation, but as I argued in a previous article, visual deep learning technology can easily be used for “information operations and disinformation campaigns,” as OpenAI acknowledges.

While deepfakes may work better for faces, DALL·E 2 could create believable scenarios of a different nature. For instance, anyone could prompt DALL·E 2 to create images of burning buildings, or of people peacefully talking or walking with a famous building in the background. This could be used to mislead and misinform people about what’s actually happening at those places.

There are many other ways to achieve the same result without resorting to large language models like DALL·E 2, but the potential is there, and while those other techniques may be useful, they’re also limited in scope. Large language models, in contrast, keep evolving.

Deresponsibilization

However, there’s another issue I consider as worrisome as those mentioned above, one that we often don’t notice. As Mike Cook mentioned in a tweet (referencing the subsection “Indignity and erasure”), “the phrasing in this bit in particular is *bizarrely* detached, as if some otherworldly force is making this system exist.” He was referring to this paragraph:

As noted above, not only the model but also the manner in which it is deployed and in which potential harms are measured and mitigated have the potential to create harmful bias, and a particularly concerning example of this arises in DALL·E 2 Preview in the context of pre-training data filtering and post-training content filter use, which can result in some marginalized individuals and groups, e.g. those with disabilities and mental health conditions, suffering the indignity of having their prompts or generations filtered, flagged, blocked, or not generated in the first place, more frequently than others. Such removal can have downstream effects on what is seen as available and appropriate in public discourse.

The document is extremely detailed about which problems DALL·E 2 can give rise to, but it’s written as if it were other people’s responsibility to eliminate them, as if the authors were just analyzing the system rather than working for the same company that knowingly deployed it. (Although the red team is made up of people outside OpenAI, the system card document is written by OpenAI employees.)

All problems that derive from bad or careless uses of the model could be eliminated if OpenAI treated these risks and harms as the top priority in its hierarchy of interests. (I’m talking about OpenAI here because they’re the creators of DALL·E 2, but this same judgment applies to almost every other tech startup or company working on large language models.)

Another issue they mention repeatedly in the document, though mostly implicitly, is that they don’t know how to deal with these problems without imposing direct access controls. Once the model is open to anyone, OpenAI won’t have the means to monitor all the use cases and the distinct forms these problems could take. After all, we can do many things with open-ended text-to-image generation.

Are we sure the benefits outweigh the costs? Something to think about.

Apart from the social issues, which are the most urgent to deal with, DALL·E 2 has technical limitations: prompts it can’t figure out, a lack of commonsense understanding, and a lack of compositionality.

Inhuman incoherence

DALL·E 2’s creations look good most of the time, but they sometimes lack coherence in a way human creations never would. This reveals that DALL·E 2 is extremely good at pretending to understand how the world works without actually knowing it. Most humans would never be able to paint like DALL·E 2, but they surely wouldn’t make these mistakes unintentionally.

Let’s analyze the center and right variations DALL·E 2 created from the left image below. At a glance, the main features are present: photorealistic style, white walls and doors, big windows, and plenty of plants and flowers. However, when we examine the details, we find plenty of structural incoherences. In the center image, the position and orientation of the doors and windows don’t make sense. In the right image, the indoor plants are little more than a jumble of green leaves on the wall.

These images feel as if they were created by an extremely skilled painter who has never seen the real world. DALL·E 2 copied the high quality of the original, keeping all the essential features but leaving out details that are needed for the pictures to make sense in the physical reality we live in.

Here’s another example, with the caption “a close up of a handpalm with leaves growing from it.” The hands are well drawn: the wrinkles in the skin, the tone, from light to dark. The fingers even look dirty, as if the person had just been digging in the earth.

But do you see anything weird? Both palms are fused where the plant grows, and one of the fingers doesn’t belong to any hand. DALL·E 2 made a good picture of two hands with the finest details and still failed to take into account that hands tend to come separated from each other.

This could be a wonderful artwork if made intentionally. Unfortunately, DALL·E 2 tried its best to create “a handpalm with leaves growing from it” but forgot that, although some details are unimportant, others are mandatory. If we want this technology to be reliable, we can’t simply keep trying to approach near-perfect accuracy like this. Anyone would instantly know that drawing dirt on the fingers is less important than not drawing a finger in the middle of the hands, whereas DALL·E 2 doesn’t, because it can’t reason.

Spelling

DALL·E 2 is great at drawing but terrible at spelling words. The reason may be that DALL·E 2 doesn’t encode spelling information from the text present in dataset images. If something isn’t represented in CLIP embeddings, DALL·E 2 can’t draw it correctly. When prompted with “a sign that says deep learning,” DALL·E 2 outputs these:

It clearly tries, as the signs say “Dee·p,” “Deinp,” and “Diep Deep.” However, those “words” are only approximations of the correct phrase. When drawing objects, an approximation is enough most of the time (not always, as we saw above with the white doors and the fused handpalms). When spelling words, it isn’t. Still, it’s possible that if DALL·E 2 were trained to encode the words in the images, it’d be way better at this task.

Here’s a funny anecdote involving Greg Brockman, OpenAI’s co-founder, and professor Gary Marcus. Brockman tried to mock Marcus on Twitter for his controversial take that “deep learning is hitting a wall” by prompting DALL·E 2 with that sentence. Funnily enough, this is the result:

The image is missing the “hitting” part and misspells “learning” as “lepning.” Gary Marcus pointed to this as another example of DALL·E 2’s limited spelling capabilities.

At the limit of intelligence

Professor Melanie Mitchell commented on DALL·E 2 soon after images began flooding Twitter. She acknowledged the model’s impressiveness but also pointed out that it isn’t a step closer to human-level intelligence. To illustrate her argument, she recalled the Bongard problems.

These problems, devised by Russian computer scientist Mikhail Moiseevich Bongard, measure the degree of pattern understanding. Two sets of diagrams, A and B, are shown, and the solver has to “formulate convincingly” the common factor that the A diagrams have and the B diagrams don’t. The idea is to assess whether AI systems can understand concepts like same and different.

Mitchell explained that we can solve these easily thanks to “our abilities of flexible abstraction and analogy,” but no AI system can solve these tasks reliably.

Aditya Ramesh explained that DALL·E 2 isn’t “incentivized to preserve information about the relative positions of objects, or information about which attributes apply to which objects.” This means it can be really good at creating images with the objects in the prompts, but not at correctly positioning or counting them.

That’s precisely what professor Gary Marcus criticized about DALL·E 2: its lack of basic compositional reasoning abilities. In linguistics, compositionality refers to the principle that the meaning of a sentence is determined by its constituents and the way they’re combined. For instance, in the sentence “a red cube on top of a blue cube,” the meaning can be decomposed into the elements “a red cube” and “a blue cube,” and the relationship “on top of.”
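A quick way to see what information is at stake: if a text representation ignores word order, these two captions become indistinguishable. The sketch below contrasts a bag-of-words view with a hypothetical, hand-rolled parse that keeps the “on top of” relation. It is only an illustration; CLIP’s text encoder is a Transformer, not a bag of words, but a representation that isn’t pushed to keep relations could end up discarding them in a similar way.

```python
from collections import Counter
from typing import Tuple


def bag_of_words(caption: str) -> Counter:
    """Word counts only: word order, and therefore 'who is on top', is discarded."""
    return Counter(caption.lower().split())


def parse_on_top_of(caption: str) -> Tuple[str, str, str]:
    """Hypothetical tiny parser for captions of the form '<X> on top of <Y>'."""
    top, bottom = caption.lower().split(" on top of ")
    return (top, "on top of", bottom)


red_on_blue = "a red cube on top of a blue cube"
blue_on_red = "a blue cube on top of a red cube"

print(bag_of_words(red_on_blue) == bag_of_words(blue_on_red))        # True: same words
print(parse_on_top_of(red_on_blue) == parse_on_top_of(blue_on_red))  # False: relation kept
print(parse_on_top_of(red_on_blue))  # ('a red cube', 'on top of', 'a blue cube')
```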

Here’s DALL·E 2 trying to draw that caption:

It understands that a red cube and a blue cube should be there, but doesn’t get that “on top of” creates a specific relationship between them: the red cube should be above the blue cube. Out of sixteen examples, it drew the red cube on top only three times.

Another example:

Winoground is a test that aims to measure vision-language models’ compositional reasoning. Here’s DALL·E 2 on some of its prompts:

DALL·E 2 sometimes gets the prompts right (e.g. the mug and grass images are all quite good, but the fork and spoon ones are terrible). The problem here isn’t that DALL·E 2 never gets them right, but that its behavior is unreliable when it comes to compositional reasoning. That’s harmless in these cases, but it may not be in other, higher-stakes scenarios.
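Winoground itself is a matching benchmark rather than a generation test: each example pairs two images with two captions that use the same words in a different order, and a model must score the right caption-image pairs higher. As we understand its definitions, the text score checks that each image prefers its own caption, the image score checks that each caption prefers its own image, and the group score requires both. A minimal sketch in the spirit of the mug-and-grass prompts, where images are represented by made-up text descriptions and both scoring functions are hypothetical:

```python
from typing import Callable, Dict

Scorer = Callable[[str, str], float]


def winoground_scores(score: Scorer, caption_0: str, caption_1: str,
                      image_0: str, image_1: str) -> Dict[str, bool]:
    """Text, image, and group scores for one Winoground-style example."""
    s00 = score(caption_0, image_0)
    s01 = score(caption_0, image_1)
    s10 = score(caption_1, image_0)
    s11 = score(caption_1, image_1)
    text_ok = s00 > s10 and s11 > s01   # each image prefers its own caption
    image_ok = s00 > s01 and s11 > s10  # each caption prefers its own image
    return {"text": text_ok, "image": image_ok, "group": text_ok and image_ok}


def word_overlap(caption: str, image_description: str) -> float:
    """Hypothetical order-blind scorer: counts shared words."""
    return float(len(set(caption.split()) & set(image_description.split())))


def exact_match(caption: str, image_description: str) -> float:
    """Hypothetical order-aware scorer: full credit only for the same word sequence."""
    return 1.0 if caption.split() == image_description.split() else 0.0


# Stand-ins for the two images: descriptions of what each picture shows.
image_0 = "a mug in some grass"
image_1 = "some grass in a mug"
captions = ("a mug in some grass", "some grass in a mug")

print(winoground_scores(word_overlap, *captions, image_0, image_1))
# {'text': False, 'image': False, 'group': False}
print(winoground_scores(exact_match, *captions, image_0, image_1))
# {'text': True, 'image': True, 'group': True}
```

The order-blind scorer ties on every pair, so it fails all three scores; that is the kind of blind spot the benchmark is designed to expose.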

We’ve arrived at the end!

Throughout the article, particularly in these last sections, I’ve made comments that contrast sharply with the cheerful and excited tone of the beginning. There’s a good reason for that. It’s less problematic to underestimate DALL·E 2’s abilities than to overestimate them (overestimating them is manipulative if done consciously, and irresponsible if done unknowingly). And it’s even more problematic to forget about its potential risks and harms.

DALL·E 2 is a powerful, versatile creative tool (not a new step toward AGI, as Mitchell said). The examples we’ve seen are amazing and beautiful but could be cherry-picked, mostly by OpenAI’s employees. Given the detailed issues they exposed in the system card document, I don’t think their intentions are bad. Still, if they don’t allow independent researchers to analyze DALL·E 2’s outputs, we should be cautious, at the very least.

There’s a stance I like to take when thinking about and analyzing models like DALL·E 2. In professor Emily M. Bender’s words, I tend to “resist the urge to be impressed.” It’s extremely easy to fall for DALL·E 2’s beautiful outputs and turn off critical thinking. That’s exactly what allows companies like OpenAI to roam free in an all-too-common space of non-accountability.

Another question is whether it even made sense to build DALL·E 2 in the first place. It seems they wouldn’t be willing to halt deployment regardless of whether the risks could be adequately managed (the tone of the system card document is clear: they don’t know how to tackle most potential issues), so in the end, we may end up with a net negative.

But that’s another debate I’ll address in more depth in a future article, because there’s a lot to say there. DALL·E 2’s effects aren’t confined to the field of AI. Other corners of the world that may not even know anything about DALL·E 2 will be affected, for better or worse.