Controlling a bionic hand with tinyML keyword spotting

August 31st, 2022

Traditional methods of sending movement commands to prosthetic devices often rely on electromyography (reading electrical signals from muscles) or simple Bluetooth modules. In this project, Ex Machina has developed an alternative approach that lets users issue voice commands, with the hand performing the corresponding gesture.

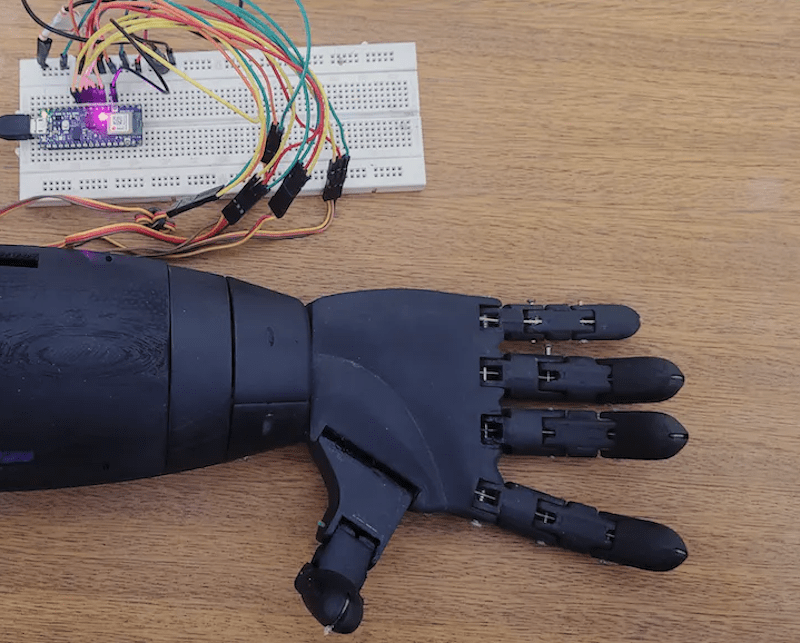

The hand itself is built around five SG90 servo motors, each one moving an individual finger of the larger 3D-printed hand assembly. They are all controlled by a single Arduino Nano 33 BLE Sense, which collects voice data, interprets the gesture, and sends signals to both the servo motors and an RGB LED that communicates the current action.

To recognize specific keywords, Ex Machina collected 3.5 hours of audio data split among six labels covering the words “one,” “two,” “OK,” “rock,” “thumbs up,” and “nothing,” all spoken in Portuguese. The samples were then added to a project in the Edge Impulse Studio and passed through an MFCC processing block to extract voice features. Finally, a Keras model was trained on the resulting features and reached an accuracy of 95%.

Once deployed to the Arduino, the model is continuously fed new audio data from the built-in microphone so that it can infer the correct label. A switch statement then sets each servo to the correct angle for the gesture. For more details on the voice-controlled bionic hand, you can read Ex Machina’s write-up on Hackster.io.
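The label-to-gesture step described above can be sketched in plain C++. This is only an illustration, not Ex Machina's code: the `Gesture` enum, its label ordering, the `pickGesture` and `anglesFor` helpers, the confidence threshold, the 0/180 degree angles, and the finger pose chosen for each word are all assumptions made for the example.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

// The six labels from the article; the ordering here is an assumption.
enum class Gesture : std::uint8_t { One, Two, Ok, Rock, ThumbsUp, Nothing };

constexpr std::size_t kLabelCount = 6;
constexpr std::size_t kFingerCount = 5;  // thumb, index, middle, ring, pinky

// Assumed servo angles: 0 = finger extended, 180 = finger curled.
constexpr int kOpen = 0;
constexpr int kClosed = 180;

using FingerAngles = std::array<int, kFingerCount>;

// Return the label with the highest score, or Nothing if even the best
// score falls below the (hypothetical) confidence threshold.
constexpr Gesture pickGesture(const std::array<float, kLabelCount>& scores,
                              float threshold) {
    std::size_t best = 0;
    for (std::size_t i = 1; i < kLabelCount; ++i) {
        if (scores[i] > scores[best]) {
            best = i;
        }
    }
    return scores[best] >= threshold ? static_cast<Gesture>(best)
                                     : Gesture::Nothing;
}

// Map a gesture to one target angle per finger. The poses are guesses
// made for illustration only.
constexpr FingerAngles anglesFor(Gesture gesture) {
    switch (gesture) {
        case Gesture::One:      return {kClosed, kOpen, kClosed, kClosed, kClosed};
        case Gesture::Two:      return {kClosed, kOpen, kOpen, kClosed, kClosed};
        case Gesture::Ok:       return {kClosed, kClosed, kOpen, kOpen, kOpen};
        case Gesture::Rock:     return {kClosed, kOpen, kClosed, kClosed, kOpen};
        case Gesture::ThumbsUp: return {kOpen, kClosed, kClosed, kClosed, kClosed};
        case Gesture::Nothing:  return {kOpen, kOpen, kOpen, kOpen, kOpen};
    }
    return {kOpen, kOpen, kOpen, kOpen, kOpen};  // unreachable fallback: open hand
}
```

On the board itself, each returned angle would presumably be passed to the matching servo, for example through the Arduino Servo library's `write()` call, after the classifier produces its per-label scores. Keeping the mapping in a pure function like this makes it easy to check on a desktop compiler before flashing.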
