Apache Kafka is an occasion streaming platform that permits software program functions to speak successfully with one another. It’s a superb selection for connecting small functions, like microservices, collectively.

On this article, you’ll learn to construct a microservice utilizing Rust with Kafka. We’ll cowl:

You’ll be able to see the ultimate mission in this GitHub repo.

Stipulations

To comply with together with this text, you’ll want Rust put in in your system and fundamental data of Rust.

What are microservices?

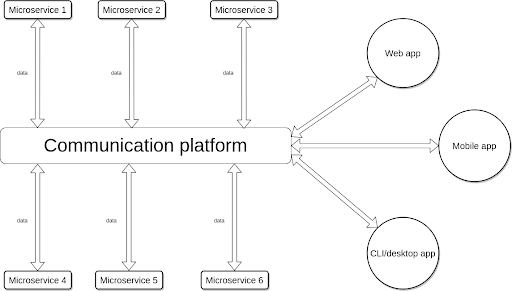

Microservices are small, unbiased functions that carry out particular duties which might be helpful for each decoupling parts of complicated functions and for selling distributed functions.

Consider a microservice as an object on a bigger scale. Much like objects, microservices are unbiased and could be reused. Nonetheless, the numerous distinction between the 2 is the place they return information. Whereas objects get and return information instantly, microservices return information right into a pool.

Microservices permit builders to construct an software with completely different programming languages. Errors in a microservice not often trigger the general software to crash.

What’s Kafka?

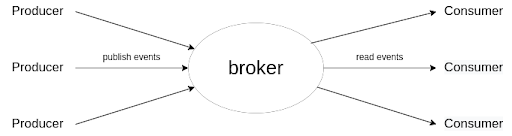

Kafka is an occasion streaming platform used for studying and writing information that makes it straightforward to attach microservices. To study extra about Kafka, try this Kafka real-time information streaming app tutorial.

Earlier than we go into extra depth in regards to the platform, we have to get conversant in some Kafka phrases.

- Occasion: in Kafka, information is named an occasion

- Subject: a subject is an identifier used for organizing occasions. Producers and shoppers can learn and write to a subject in actual time

- Producer: Kafka makes use of producers to publish occasions to a subject

- Shopper: these learn occasions from a subject

- Dealer: servers in Kafka are known as brokers

- Cluster: a number of brokers working collectively types a cluster, which protects occasions from loss. That is important as a result of occasions and subjects could be replicated throughout a number of brokers

Getting began with Kafka

To proceed with this text, you’ll need to arrange your Kafka dealer. Observe that you simply’ll use an area dealer in your system. Except for native brokers, Kafka supplies the choices to make use of brokers both within the Cloud or on a distant system.

To arrange Kafka in your system, comply with these steps:

First, obtain the newest Kafka launch right here and extract the compressed file. After that, open the decompressed folder in your terminal and begin the ZooKeeper server with the next command:

$ bin/zookeeper-server-start.sh config/zookeeper.properties

Then, open one other terminal session within the decompressed folder and begin the Kafka dealer:

$ bin/kafka-server-start.sh config/server.properties

The Kafka dealer requires an lively ZooKeeper to operate correctly. The ZooKeeper maintains the dealer, and with out it, the dealer will generate error messages.

Establishing Kafka subjects, producers, and shoppers

Subsequent, let’s set up printed occasions within the Kafka dealer by establishing subjects, producers, and shoppers.

To create a subject in your native server, run this command:

$ bin/kafka-topics.sh --create --topic topic-name --bootstrap-server localhost:9092

The primary command begins up a shopper within the console, and --topic topic-name tells the patron to learn from topic-name. --bootstrap-server localhost:9092 tells Kafka to connect with your native server at localhost:9092.

--from-beginning tells the patron to learn all occasions from the earliest printed. You need to use --offset earliest as an alternative of --from-beginning to realize the identical consequence. If you’d like the patron to solely hearken to new occasions, use--offset newest.

Kafka supplies a shopper and producer that you may run within the terminal:

Extra nice articles from LogRocket:

# console shopper studying from "topic-name" $ bin/kafka-console-consumer.sh --topic topic-name --from-beginning --bootstrap-server localhost:9092 # console producer publishing to "topic-name" $ bin/kafka-console-producer.sh --topic topic-name --bootstrap-server localhost:9092

The command above begins a producer within the console, and the producer publishes occasions to topic-name, specified by --topic topic-name. The producer is linked to your native server at localhost:9092 and is specified by --bootstrap-server localhost:9092.

Creating Rust shoppers and producers

Now that you simply’ve seen producers and shoppers within the console, I’ll present you construct a Kafka producer and shopper with Rust. Kafka-based microservices constructed with Rust often have a number of producers and shoppers. Let’s get began!

First, you’ll have to create a brand new Rust mission with the next command:

$ cargo new kafka_consumer

Subsequent, add the Kafka library to Cargo.toml:

[dependencies] kafka = "0.9"

Now that we’ve added the Kafka library, we are able to construct the mission to fetch its dependencies:

$ cargo construct

Then paste this code into src/primary.rs:

use kafka::shopper::{Shopper, FetchOffset};

use std::str;

fn primary () {

let hosts = vec!["localhost:9092".to_owned()];

let mut shopper =

Shopper::from_hosts(hosts)

.with_topic("topic-name".to_owned())

.with_fallback_offset(FetchOffset::Newest)

.create()

.unwrap();

loop {

for ms in shopper.ballot().unwrap().iter() {

for m in ms.messages() {

// If the patron receives an occasion, this block is executed

println!("{:?}", str::from_utf8(m.worth).unwrap());

}

shopper.consume_messageset(ms).unwrap();

}

shopper.commit_consumed().unwrap();

}

}

The patron we created above reads occasions printed to the topic-name matter and prints the occasion’s message to the console. Right here’s the way it works in additional element:

First, we initialize an inventory of the hosts that the patron will connect with on line 6 (on this case, localhost:9092).

let hosts = vec!["localhost:9092".to_owned()];

Subsequent, from line 8 to line 13, we initialize a shopper that’s linked to the topic-name matter (line 10) and begin studying occasions from the newest printed:

let mut shopper =

Shopper::from_hosts(hosts)

.with_topic("topic-name".to_owned())

.with_fallback_offset(FetchOffset::Newest)

.create()

.unwrap();

Lastly, we create an infinite loop that listens to occasions printed to topic-name:

loop {

for ms in shopper.ballot().unwrap().iter() {

for m in ms.messages() {

// convert message from bytes to string

println!("{:?}", str::from_utf8(m.worth).unwrap());

}

shopper.consume_messageset(ms).unwrap();

}

shopper.commit_consumed().unwrap();

}

Now that we’ve efficiently created our shopper, let’s look to our subsequent steps for creating our producer.

First, create a brand new Rust mission utilizing this command:

$ cargo new kafka_producer

Then add the Kafka library to Cargo.toml:

[dependencies] kafka = "0.9"

Construct the mission to fetch its dependencies:

$ cargo construct

Then, paste this code into src/primary.rs:

use kafka::producer::{Producer, File};

fn primary() {

let hosts = vec!["localhost:9092".to_owned()];

let mut producer =

Producer::from_hosts(hosts)

.create()

.unwrap();

for i in 0..10 {

let buf = format!("{i}");

producer.ship(&File::from_value("topic-name", buf.as_bytes())).unwrap();

println!("Despatched: {i}");

}

}

The thought is to create a producer that sends numbers 0 via 9 to the topic-name matter. Right here’s the way it works piece-by-piece:

First, we outline an inventory of the Kafka servers we’re connecting to (localhost:9092).

let hosts = vec!["localhost:9092".to_owned()];

Discover that the Producer::from_hosts operate initializes the producer. The initialized producer is linked to the native Kafka server (localhost:9092).

let mut producer =

Producer::from_hosts(hosts)

.create()

.unwrap();

We create a loop that runs from zero to 9 and ship every quantity to the topic-name matter.

for i in 0..10 {

let buf = format!("{i}");

producer.ship(&File::from_value("topic-name", buf.as_bytes())).unwrap();

println!("Despatched: {i}");

}

}

Now that we’ve arrange our shopper and producer, let’s run them. The patron listens to occasions printed in topic-name, and the producer mission publishes occasions to topic-name. Having an lively shopper earlier than publishing occasions ensures the occasions are consumed in actual time.

Constructing a Rust microservice with Kafka

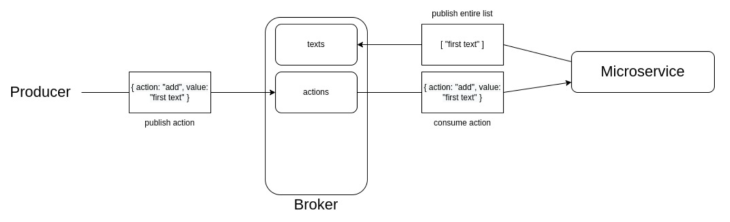

On this part, we’ll construct a microservice to retailer and delete texts. This microservice is operated by sending occasions that describe including or eradicating textual content. The occasion might be a JSON string with an motion key to explain its motion and a worth key to supply needed information.

The next diagram showcases how the microservice works in including textual content:

As you’ll be able to see within the diagram, our microservice reads operations to carry out from the actions matter after which publishes the checklist of texts to the texts matter. Now that we now have some background, let’s construct this microservice.

First, let’s create a brand new mission:

$ cargo new texts-microservice

Add the next dependencies to Cargo.toml utilizing the command beneath:

[dependencies] kafka = "0.9" serde_json = "1.0.85"

Construct the mission utilizing the next command:

$ cargo construct

After that, we’ll create modules that every deal with the producer, the patron, and the texts. The file names of those modules are my_consumer.rs, my_producer.rs, and texts.rs, in src.

Now, comply with these steps to construct the microservice:

First, we have to initialize the modules, since we’re splitting the microservice into modules. So, add this code block to the primary.rs file:

mod my_consumer; mod my_producer; mod texts; use my_consumer::MyConsumer; use my_producer::MyProducer; use texts::Texts;

After that, create the primary operate in primary.rs:

fn primary() {

}

Subsequent, outline the Kafka server you’re connecting the microservice to in primary.rs’s primary operate:

let hosts = vec![ "localhost:9092".to_string() ];

Now, initialize the texts, shopper, and producer modules with the next traces in primary.rs’s primary operate:

let mut texts = Texts::new(); let mut shopper = MyConsumer::new( hosts.clone(), "actions".to_string() ); let mut producer = MyProducer::new( hosts );

Subsequent, create a loop that listens for occasions that the patron receives:

loop {

for ms in shopper.consume_events().iter() {

for m in ms.messages() {

}

shopper.consume_messageset(ms);

}

shopper.commit_consumed();

}

After that, retrieve the information from the loop within the earlier code block with this line:

let event_data = MyConsumer::get_event_data(m);

Now, extract the motion to carry out on texts from the occasion information with this line following the earlier code block:

let motion = event_data["action"].to_string();

After that, use if, if/else, and else blocks to carry out the motion specified by event_data["action"]:

if motion == ""add"" {

texts.add_text( event_data["value"].to_string() );

} else if motion == ""take away"" {

let index = event_data["value"].to_string().parse::<usize>().unwrap();

texts.remove_text( index );

} else {

println!("Invalid motion");

}

Lastly, convert the texts to JSON string and ship it to the texts matter with the producer on the finish of the loop:

producer.send_data_to_topic( "texts", texts.to_json() );

Ultimately, your primary.rs file ought to appear to be this:

mod my_consumer;

mod my_producer;

mod texts;

use my_consumer::MyConsumer;

use my_producer::MyProducer;

use texts::Texts;

fn primary() {

let hosts = vec![ "localhost:9092".to_string() ];

let mut texts = Texts::new();

let mut shopper = MyConsumer::new( hosts.clone(), "actions".to_string() );

let mut producer = MyProducer::new( hosts );

// put right here to indicate that the microservice has began

println!("Began...");

loop {

for ms in shopper.consume_events().iter() {

for m in ms.messages() {

// when the patron receives an occasion, this block is executed

let event_data = MyConsumer::get_event_data(m);

let motion = event_data["action"].to_string();

if motion == ""add"" {

texts.add_text( event_data["value"].to_string() );

} else if motion == ""take away"" {

let index = event_data["value"].to_string().parse::<usize>().unwrap();

texts.remove_text( index );

} else {

println!("Invalid motion");

}

producer.send_data_to_topic( "texts", texts.to_json() );

}

shopper.consume_messageset(ms);

}

shopper.commit_consumed();

}

}

Now, create a my_consumer.rs file in src and paste the next code into it:

use kafka::shopper::{Shopper, FetchOffset, MessageSets, MessageSet, Message};

use std::str;

use serde_json::Worth;

pub struct MyConsumer {

shopper: Shopper

}

impl MyConsumer {

pub fn new (hosts: Vec<String>, matter: String) -> Self {

Self {

shopper: Shopper::from_hosts(hosts)

.with_topic(matter)

.with_fallback_offset(FetchOffset::Newest)

.create()

.unwrap()

}

}

pub fn get_event_data (m: &Message) -> Worth {

let occasion = str::from_utf8(m.worth).unwrap().to_string();

serde_json::from_str(&occasion).unwrap()

}

pub fn consume_events(&mut self) -> MessageSets {

self.shopper.ballot().unwrap()

}

pub fn consume_messageset(&mut self, ms: MessageSet) {

self.shopper.consume_messageset(ms).unwrap();

}

pub fn commit_consumed(&mut self) {

self.shopper.commit_consumed().unwrap();

}

}

Additionally, create a my_producer.rs file with this code in it:

use kafka::producer::{Producer, File};

pub struct MyProducer {

producer: Producer

}

impl MyProducer {

pub fn new( hosts: Vec<String> ) -> Self {

let producer =

Producer::from_hosts( hosts )

.create()

.unwrap();

Self {

producer: producer

}

}

pub fn send_data_to_topic(&mut self, matter: &str, information: String ) {

let report = File::from_value( matter, information.as_bytes() );

self.producer.ship(&report).unwrap();

}

}

Lastly, create a texts.rs file and paste the next into it:

use serde_json::json;

pub struct Texts {

texts: Vec<String>

}

impl Texts {

pub fn new() -> Self {

Self {

texts: vec![]

}

}

pub fn add_text(&mut self, textual content: String) {

self.texts.push(textual content);

}

pub fn remove_text(&mut self, index: usize) {

self.texts.take away( index );

}

pub fn to_json(&self) -> String {

json!(&self.texts).to_string()

}

}

After constructing the mission, we have to run and check it.

First, create the texts and actions subjects:

# create texts matter $ bin/kafka-topics.sh --create --topic texts --bootstrap-server localhost:9092 # create actions matter $ bin/kafka-topics.sh --create --topic actions --bootstrap-server localhost:9092

Subsequent, arrange a console shopper to matter texts:

$ bin/kafka-console-consumer.sh --topic texts --from-beginning --bootstrap-server localhost:9092

Arrange a producer to matter actions.

$ bin/kafka-console-producer.sh --topic actions --bootstrap-server localhost:9092

Let’s now run the mission with:

$ cargo run

Then, write actions to the producer:

> { "motion": "add", "worth": "first textual content" }

> { "motion": "add", "worth": "second textual content" }

> { "motion": "take away", "worth": 1 }

> { "motion": "add", "worth": "third textual content" }

Lastly, let’s see the outcomes of every occasion within the shopper console:

[ "first text" ] [ "first text", "second text" ] [ "first text" ] [ "first text", "third text" ]

Conclusion

On this article, we lined create a fundamental microservice with Rust. Though a single microservice is easy and focuses on one job, a number of or extra superior microservices can kind an simply maintained and distributed complicated software that deal with databases, real-time computing, Web of Issues, real-time information assortment, and fraud detection.

LogRocket: Full visibility into manufacturing Rust apps

Debugging Rust functions could be tough, particularly when customers expertise points which might be tough to breed. In case you’re all for monitoring and monitoring efficiency of your Rust apps, robotically surfacing errors, and monitoring gradual community requests and cargo time, attempt LogRocket.

LogRocket is sort of a DVR for net and cell apps, recording actually all the pieces that occurs in your Rust app. As a substitute of guessing why issues occur, you’ll be able to mixture and report on what state your software was in when a problem occurred. LogRocket additionally screens your app’s efficiency, reporting metrics like shopper CPU load, shopper reminiscence utilization, and extra.

Modernize the way you debug your Rust apps — begin monitoring without spending a dime.