The observability of today’s complex, dynamic systems is predicated on domain knowledge or, more so, on the unknown “unknowns” that arise from incomplete domain knowledge. In other words, the cases that fall between the cracks and surprise us, as illuminated in the quote below:

It took us no more than an hour to figure out how to restore the network; several additional hours were required because it took so long for us to gain control of the misbehaving IMPs and get them back to normal. A built-in software alarm system (assuming, of course, that it was not subject to false alarms) might have enabled us to restore the network more quickly, significantly reducing the duration of the outage. This is not to say that a better alarm and control system could ever be a replacement for careful study and design which attempts to properly distribute the utilization of important resources, but only that it is a necessary adjunct, to handle the cases that will inevitably fall between the cracks of even the most careful design – (Rosen, RFC 789)

At its heart, observability is how we expose a system’s behavior (in some disciplined way, hopefully) and make sense of such behavior.

In this article, we’ll discuss the importance of observability and investigate composing the underpinnings of an observable Rust application.

Why is observability important?

Our systems have become more complicated due to the proliferation of microservice deployments and orchestration engines, where major companies are running thousands of microservices, and even startups are operating in the hundreds.

The harsh reality of microservices is that they suddenly force every developer to become a cloud/distributed systems engineer, dealing with the complexity that is inherent in distributed systems. Specifically, partial failure, where the unavailability of one or more services can adversely impact the system in unknown ways. – (Meiklejohn, et al., Service-Level Fault Injection Testing)

In these complex times, achieving observability goes a long way toward architecting, troubleshooting, and benchmarking our systems for the long haul. Delivering observability starts with collecting our output data (telemetry and instrumentation) from our running systems at the right level of abstraction, typically organized around a request path, so that we can then explore and dissect data patterns and find cross-correlations.

On paper, this sounds somewhat easy to achieve. We gather our three pillars (logs, metrics, and traces) and we’re done. However, these three pillars on their own are just bits, whereas gathering the most useful kinds of bits and analyzing the collection of bits together holds the most complexity.

Forming the right abstractions is the hard part. It can be very domain-specific and relies on building a model for our systems’ behavior, which is open to change and prepared for surprises. It involves the developer having to get more involved with how events in their applications and systems are generated and diagnosed.

Throwing log statements everywhere and collecting every possible metric loses long-term value and causes other concerns. We need to expose and augment meaningful output, so that data correlation is even possible.

This is a Rust article after all, and while Rust was built with safety, speed, and efficiency in mind, exposing system behavior was not one of its founding principles.

How can we make Rust applications more observable?

Starting from first principles, how do we go about instrumenting code, collecting meaningful trace information, and deriving data to help us explore unknown “unknowns”? If everything is driven by events and we have traces that capture a series of events/operations, which include requests/responses, database reads/writes, and/or cache misses, etc., what’s the trick to go from nothing to something for a Rust application that has to communicate with the outside world in order to achieve end-to-end observability? What do the building blocks look like?

Unfortunately, there’s not just one trick or silver bullet here, especially when writing Rust services, which leaves a lot for the developer to piece together. First, the only thing we can truly rely on to understand and debug an unknown “unknown” is telemetry data, and we should make sure we surface meaningful, contextual telemetry data (e.g., correlatable fields like request_path, parent_span, trace_id, category, and subject). Second, we need a way to explore that output and correlate it across systems and services.

In this post, we’ll mainly be concerned with collecting and gathering meaningful, contextual output data, but we’ll also discuss how best to hook into platforms that provide further processing, analysis, and visualization. Luckily, the core tool(s) are out there for instrumenting Rust programs to collect structured event data and process and emit trace information, for async and sync communication alike.

We’ll focus on the most standard and flexible framework, tracing, which is situated around spans, events, and subscribers, and how we can take advantage of its composability and customizability.

However, even though we have an extensive framework like tracing at our disposal to help us write the underpinnings of observable services in Rust, meaningful telemetry doesn’t come “out of the box” or “fall out for free.”

Getting to the right abstraction in Rust is not as straightforward as it may be in other languages. Instead, a robust application must be built upon layered behaviors, all of which provide exemplary controls for developers in the know, but can be cumbersome for those more inexperienced.

We’re going to break up our problem space into a series of composable layers which function over four different units of behavior:

- Storing contextual information for the future

- Augmenting structured logs with contextual information

- Deriving metrics through instrumentation and span durations

- OpenTelemetry interoperability for distributed tracing

Akin to how the original QuickCheck paper on property-based testing relied on users to specify properties and provide instances for user-defined types, building end-to-end observable Rust services requires some understanding of how traces are generated, how data is specified and maintained, and what telemetry makes sense as applications grow. This is particularly true when it comes time to debug and/or explore inconsistencies, partial failures, and dubious performance characteristics.

Trace collection will drive everything in this post’s examples, where spans and events will be the lens through which we tie together a complete picture of known quantities. We’ll have logs, but we’ll treat them as structured events. We’ll gather metrics, but have them automated via instrumentation and spans, and we’ll export OpenTelemetry-compatible trace data for emission to a distributed tracing platform like Jaeger.

Spans, events, and traces

Before we get into the implementation details, let’s start with some terms and concepts we need to be familiar with, such as spans, traces, and events.

Spans

A span represents operations or segments that are part of a trace and acts as the primary building block of distributed tracing. For any given request, the initial span (with no parent) is called the root span. It’s typically represented as the end-to-end latency of an entire user request of a given distributed trace.

There can also be subsequent child spans, which can be nested under other various parent spans. The total execution time of a span consists of the time spent in that span as well as the entire subtree represented by its children.

Here’s an example of a purposefully condensed parent span log for a new request:

level=INFO span_name="HTTP request" span=9008298766368774 parent_span=9008298766368773 span_event=new_span timestamp=2022-10-30T22:30:28.798564Z http.client_ip=127.0.0.1:61033 http.host=127.0.0.1:3030 http.method=POST http.route=/songs trace_id=b2b32ad7414392aedde4177572b3fea3

This span log contains important pieces of information and metadata like the request path (http.route), timestamp (2022-10-30T22:30:28.798564Z), request method (http.method), and trace identifiers (span, parent_span, and trace_id respectively). We’ll use this information to demonstrate how a trace is tied together from start to completion.

Why is it called a span? Ben Sigelman, an author of Google’s Dapper tracing infrastructure paper, considered these factors in A Brief History of “The Span”: Hard to Love, Hard to Kill:

- Within the code itself, the API feels like a timer

- When one considers the trace as a directed graph, the data structure seems like a node or vertex

- In the context of structured, multi-process logging (side note: at the end of the day, that’s what distributed tracing is), one might think of a span as two events

- Given a simple timing diagram, it’s tempting to call the concept a duration or window

Events

An event represents a single operation in time, where something occurred during the execution of some arbitrary program. In contrast to out-of-band, unstructured log records, we’ll treat events as a core unit of ingest happening within the context of a given span, and structured with key-value fields (akin to the span log above). More precisely, these are called span events:

level=INFO msg="finished processing vendor request" subject=vendor.response category=http.response vendor.status=200 vendor.response_headers="{"content-type": "application/json", "vary": "Accept-Encoding, User-Agent", "transfer-encoding": "chunked"}" vendor.url=http://localhost:8080/.well-known/jwks.json vendor.request_path=/.well-known/jwks.json target="application::middleware::logging" location="src/middleware/logging.rs:354" timestamp=2022-10-31T02:45:30.683888Z

Our application can also have arbitrary structured log events that occur outside of a span context, for example, to display configuration settings at startup or to monitor when a cache is flushed.

Traces

A trace is a collection of spans that represent some workflow, like a server request or queue/stream processing steps for an item. Essentially, a trace is a Directed Acyclic Graph of spans, where the edges connecting spans indicate causal relationships between spans and their parent spans.

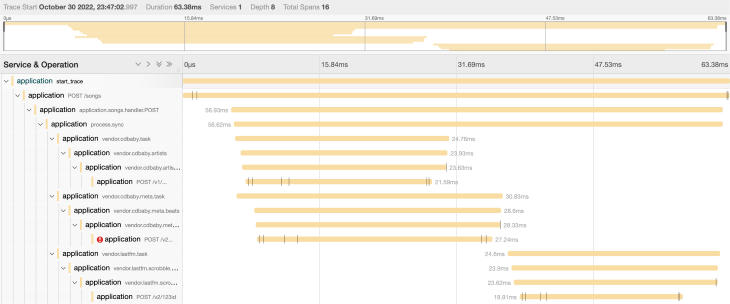

Right here’s an instance of a hint visualized inside Jaeger UI:

If this application were part of a larger, distributed trace, we’d see it nested within a larger parent span.
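To make the parent/child structure concrete, here is a minimal, std-only sketch of a trace as a list of spans that point at their parents. The `SpanRecord` type, its fields, and the helper functions are hypothetical names for illustration, not part of tracing, and the sketch assumes child spans run one after another so that their times can simply be summed.

```rust
/// Hypothetical, simplified span record; real tracing backends carry far more data.
#[derive(Debug, Clone, PartialEq)]
pub struct SpanRecord {
    pub id: u64,
    pub parent: Option<u64>,
    pub name: &'static str,
    /// Time spent in this span itself, excluding its children (milliseconds).
    pub self_ms: u64,
}

/// Returns the root span: the one with no parent.
pub fn root_span(spans: &[SpanRecord]) -> Option<&SpanRecord> {
    spans.iter().find(|s| s.parent.is_none())
}

/// Total time of a span: its own time plus the whole subtree below it.
/// Assumes children do not overlap in time.
pub fn total_ms(spans: &[SpanRecord], id: u64) -> u64 {
    let own = spans.iter().find(|s| s.id == id).map_or(0, |s| s.self_ms);
    let children: u64 = spans
        .iter()
        .filter(|s| s.parent == Some(id))
        .map(|child| total_ms(spans, child.id))
        .sum();
    own + children
}

/// Builds a small example trace: a request with two nested operations.
pub fn example_trace() -> Vec<SpanRecord> {
    vec![
        SpanRecord { id: 1, parent: None, name: "HTTP request", self_ms: 2 },
        SpanRecord { id: 2, parent: Some(1), name: "vendor.task", self_ms: 18 },
        SpanRecord { id: 3, parent: Some(2), name: "db.save", self_ms: 5 },
    ]
}
```

In this toy trace, the root span’s total time covers everything beneath it, which is why the root span is usually read as the end-to-end latency of a request.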

Now, with the terms out of the way, how do we even get started implementing the skeleton of an observability-ready Rust application?

Composing multiple tracing layers to build a subscriber

The tracing framework is split up into different components (as crates). We’ll focus on this set of .toml dependencies for our purposes:

opentelemetry = { version = "0.17", features = ["rt-tokio", "trace"] }
opentelemetry-otlp = { version = "0.10", features = ["metrics", "tokio", "tonic", "tonic-build", "prost", "tls", "tls-roots"], default-features = false }
opentelemetry-semantic-conventions = "0.9"
tracing = "0.1"
tracing-appender = "0.2"
tracing-opentelemetry = "0.17"
tracing-subscriber = { version = "0.3", features = ["env-filter", "json", "registry"] }

The tracing_subscriber crate gives us the ability to compose tracing subscribers from smaller units of behavior, called layers, for collecting and augmenting trace data.

The Subscriber itself is responsible for registering new spans when created (with a span id), recording and attaching field values and follows-from annotations to spans, and filtering out spans and events.

When composed with a subscriber, layers tap into hooks triggered throughout a span’s lifecycle:

fn on_new_span(&self, _attrs: &Attributes<'_>, _id: &Id, _ctx: Context<'_, S>) { ... }
fn on_record(&self, _span: &Id, _values: &Record<'_>, _ctx: Context<'_, S>) { ... }
fn on_follows_from(&self, _span: &Id, _follows: &Id, _ctx: Context<'_, S>) { ... }
fn event_enabled(&self, _event: &Event<'_>, _ctx: Context<'_, S>) -> bool { ... }
fn on_event(&self, _event: &Event<'_>, _ctx: Context<'_, S>) { ... }
fn on_enter(&self, _id: &Id, _ctx: Context<'_, S>) { ... }
fn on_exit(&self, _id: &Id, _ctx: Context<'_, S>) { ... }
fn on_close(&self, _id: Id, _ctx: Context<'_, S>) { ... }

How are layers composed in code? Let’s start with a setup method, producing a registry that’s defined with four with combinators, or layers:

fn setup_tracing(
    writer: tracing_appender::non_blocking::NonBlocking,
    settings_otel: &Otel,
) -> Result<()> {
    let tracer = init_tracer(settings_otel)?;
    let registry = tracing_subscriber::Registry::default()
        .with(StorageLayer.with_filter(LevelFilter::TRACE))
        .with(tracing_opentelemetry::layer()...
        .with(LogFmtLayer::new(writer).with_target(true)...
        .with(MetricsLayer)...
    );
    ...

The setup_tracing function would typically be called upon initialization of a server’s main() method in main.rs. The storage layer itself provides zero output and instead acts as an information store for gathering contextual trace information to augment and extend downstream output for the other layers in the pipeline.

The with_filter method controls which spans and events are enabled for this layer, and we’ll want to capture essentially everything, as LevelFilter::TRACE is the most verbose option.

Let’s examine each layer and see how each operates over the trace data collected and hooks into the span lifecycle. Customizing each layer’s behavior involves implementing lifecycle hooks associated with the Layer trait, like on_new_span below.

Along the way, we’ll demonstrate how these units of behavior augment span- and event-log formatting, derive some metrics automatically, and send what we’ve gathered to downstream, distributed-tracing platforms like Jaeger, Honeycomb, or Datadog. We’ll start with our StorageLayer, which provides contextual information from which other layers can benefit.
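Before diving into the real layers, it may help to see the composition idea in isolation. The following is a std-only toy model, not the tracing_subscriber API: `MiniLayer`, `SpanContext`, `StoreFields`, `LogLine`, and `run_pipeline` are all hypothetical names, and the single hook stands in for the full span lifecycle. It only illustrates how a silent storage layer can feed a later, output-producing layer.

```rust
use std::collections::BTreeMap;

/// Shared per-span context that layers can read and write
/// (a stand-in for span extensions).
#[derive(Default)]
pub struct SpanContext {
    pub fields: BTreeMap<String, String>,
    pub output: Vec<String>,
}

/// A hypothetical, much-reduced layer: one hook instead of a full lifecycle.
pub trait MiniLayer {
    fn on_new_span(&self, name: &str, attrs: &[(&str, &str)], ctx: &mut SpanContext);
}

/// Produces no output; only stores fields for layers later in the pipeline.
pub struct StoreFields;

impl MiniLayer for StoreFields {
    fn on_new_span(&self, _name: &str, attrs: &[(&str, &str)], ctx: &mut SpanContext) {
        for (key, value) in attrs {
            ctx.fields.insert(key.to_string(), value.to_string());
        }
    }
}

/// Emits one log line per span, enriched with whatever earlier layers stored.
pub struct LogLine;

impl MiniLayer for LogLine {
    fn on_new_span(&self, name: &str, _attrs: &[(&str, &str)], ctx: &mut SpanContext) {
        let mut line = format!("span_name={}", name);
        for (key, value) in &ctx.fields {
            line.push_str(&format!(" {}={}", key, value));
        }
        ctx.output.push(line);
    }
}

/// Runs every layer in order, so later layers see what earlier ones stored.
pub fn run_pipeline(
    layers: &[Box<dyn MiniLayer>],
    name: &str,
    attrs: &[(&str, &str)],
) -> SpanContext {
    let mut ctx = SpanContext::default();
    for layer in layers {
        layer.on_new_span(name, attrs, &mut ctx);
    }
    ctx
}
```

Ordering matters in this model: placing `LogLine` before `StoreFields` would print a line without any stored fields, which is the same reason the storage layer comes first in the registry.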

Storing contextual information for the future

On new span

impl<S> Layer<S> for StorageLayer
where
    S: Subscriber + for<'span> LookupSpan<'span>,
{
    fn on_new_span(&self, attrs: &Attributes<'_>, id: &Id, ctx: Context<'_, S>) {
        let span = ctx.span(id).expect("Span not found");
        // We want to inherit the fields from the parent span, if there is one.
        let mut visitor = if let Some(parent_span) = span.parent() {
            // Copy the parent's stored fields so that this span starts with them.
            let mut extensions = parent_span.extensions_mut();
            extensions
                .get_mut::<Storage>()
                .map(|v| v.to_owned())
                .unwrap_or_default()
        } else {
            Storage::default()
        };
        let mut extensions = span.extensions_mut();
        attrs.record(&mut visitor);
        extensions.insert(visitor);
    }
    ...

When a new span (via on_new_span) is initiated, for example, a POST request into our application to an endpoint like /songs, our code checks to see if we’re already within a parent span and, if so, inherits the parent’s fields. Otherwise, it will default to a newly created, empty HashMap, which is what Storage::default() wraps under the hood.

For simplicity, we default the map to keys of string references and values of copy-on-write (Cow) smart pointers around string references:

#[derive(Clone, Debug, Default)]
pub(crate) struct Storage<'a> {
    values: HashMap<&'a str, Cow<'a, str>>,
}

Storage persists fields across layers in a registry within the lifecycle of the span thanks to a span’s extensions, giving us the ability to mutably associate arbitrary data to a span or immutably read from persisted data, including our own data structure.
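The inheritance behavior can be exercised on its own with a std-only sketch. The struct below mirrors the shape of Storage shown above, but the `for_child`, `record`, and `get` methods are assumptions added for illustration; they are not from the article’s code or from tracing.

```rust
use std::borrow::Cow;
use std::collections::HashMap;

/// Mirrors the shape of the article's `Storage`; the methods are hypothetical.
#[derive(Clone, Debug, Default)]
pub struct Storage<'a> {
    values: HashMap<&'a str, Cow<'a, str>>,
}

impl<'a> Storage<'a> {
    /// Starts a child span's storage from a copy of the parent's fields, if any.
    pub fn for_child(parent: Option<&Storage<'a>>) -> Self {
        parent.cloned().unwrap_or_default()
    }

    /// Records a field, overwriting an inherited value with the same key.
    pub fn record(&mut self, key: &'a str, value: impl Into<Cow<'a, str>>) {
        self.values.insert(key, value.into());
    }

    /// Reads a field back as a plain string slice.
    pub fn get(&self, key: &str) -> Option<&str> {
        self.values.get(key).map(|v| &**v)
    }
}
```

Because the child works on a copy, fields recorded on the child (such as a latency) never leak back into the parent, while request-level fields like http.route flow downward.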

Many of these lifecycle hooks involve wrestling with extensions, which can be somewhat verbose to work with. The registry is what actually collects and stores span data, which can then bubble through to other layers via the implementation of LookupSpan.

The other code to highlight is attrs.record(&mut visitor), which records field values of various types by visiting each type of value. Visit is a trait that must be implemented:

// Just a sample of the implemented methods
impl Visit for Storage<'_> {
    /// Visit a signed 64-bit integer value.
    fn record_i64(&mut self, field: &Field, value: i64) {
        self.values
            .insert(field.name(), Cow::from(value.to_string()));
    }
    ... // elided for brevity
    fn record_debug(&mut self, field: &Field, value: &dyn fmt::Debug) {
        // Note: this is invoked via the `debug!` and `info!` macros
        let debug_formatted = format!("{:?}", value);
        self.values.insert(field.name(), Cow::from(debug_formatted));
    }
    ...

Once we’ve recorded all the values for each type, all of this is stored within the Storage HashMap by the visitor, which will be available for downstream layers to use for lifecycle triggers in the future.
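The visitor idea, one method per kind of value with everything normalized to strings, can be sketched with the standard library alone. `MiniVisit` and `FieldMap` below are hypothetical stand-ins; the real trait takes a field handle rather than a plain `&'static str` key.

```rust
use std::borrow::Cow;
use std::collections::HashMap;
use std::fmt;

/// Hypothetical stand-in for a visitor trait: one method per kind of value.
pub trait MiniVisit {
    fn record_i64(&mut self, field: &'static str, value: i64);
    fn record_str(&mut self, field: &'static str, value: &str);
    fn record_debug(&mut self, field: &'static str, value: &dyn fmt::Debug);
}

/// Stores every recorded value as a string, keyed by field name.
#[derive(Default)]
pub struct FieldMap {
    pub values: HashMap<&'static str, Cow<'static, str>>,
}

impl MiniVisit for FieldMap {
    fn record_i64(&mut self, field: &'static str, value: i64) {
        self.values.insert(field, Cow::from(value.to_string()));
    }

    fn record_str(&mut self, field: &'static str, value: &str) {
        self.values.insert(field, Cow::from(value.to_owned()));
    }

    fn record_debug(&mut self, field: &'static str, value: &dyn fmt::Debug) {
        // Anything without a dedicated method falls back to its Debug form.
        self.values.insert(field, Cow::from(format!("{:?}", value)));
    }
}
```

Normalizing to strings keeps the storage map simple at the cost of type information, a trade-off that suits log formatting and metric labels.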

On record

impl<S> Layer<S> for StorageLayer
where
    S: Subscriber + for<'span> LookupSpan<'span>,
{
    ... // elided for brevity
    fn on_record(&self, span: &Id, values: &Record<'_>, ctx: Context<'_, S>) {
        let span = ctx.span(span).expect("Span not found");
        let mut extensions = span.extensions_mut();
        let visitor = extensions
            .get_mut::<Storage>()
            .expect("Visitor not found on 'record'!");
        values.record(visitor);
    }
    ... // elided for brevity

As we continue through each lifecycle trigger, we’ll find that the pattern is similar. We get a mutable, scoped handle into the span’s storage extension, and we record values as they arrive.

This hook notifies a layer that a span with a given identifier has recorded the given values, via calls like debug_span! or info_span!:

let span = info_span!(
    "vendor.cdbaby.task",
    subject = "vendor.cdbaby",
    category = "vendor"
);

On event

impl<S> Layer<S> for StorageLayer
where
    S: Subscriber + for<'span> LookupSpan<'span>,
{
    ... // elided for brevity
    fn on_event(&self, event: &Event<'_>, ctx: Context<'_, S>) {
        ctx.lookup_current().map(|current_span| {
            let mut extensions = current_span.extensions_mut();
            extensions.get_mut::<Storage>().map(|visitor| {
                if event
                    .fields()
                    .any(|f| ON_EVENT_KEEP_FIELDS.contains(&f.name()))
                {
                    event.record(visitor);
                }
            })
        });
    }
    ... // elided for brevity

For our contextual storage layer, hooking into events, like tracing::error! messages, is generally unnecessary. But this can become valuable for storing information on event fields we’d like to keep, which may be useful in another layer later in the pipeline.

One example is to store an event if it was attributed to an error so that we can keep track of errors in our metrics layer (e.g., ON_EVENT_KEEP_FIELDS is an array of fields tied to error keys).
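The keep-list check itself is small enough to sketch with the standard library. The contents of `ON_EVENT_KEEP_FIELDS` below are an assumption made for illustration (the article does not list them), and `should_store` is a hypothetical helper that mirrors the `any(...)` test in the hook above.

```rust
/// Hypothetical list of field names that mark an event as worth persisting.
const ON_EVENT_KEEP_FIELDS: [&str; 2] = ["error", "error.message"];

/// Returns true when at least one of the event's field names is on the keep list.
pub fn should_store(event_field_names: &[&str]) -> bool {
    event_field_names
        .iter()
        .any(|name| ON_EVENT_KEEP_FIELDS.contains(name))
}
```

With this check, an ordinary informational event leaves the span’s storage untouched, while an event carrying an error field gets recorded for later layers.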

On enter and on close

impl<S> Layer<S> for StorageLayer
where
    S: Subscriber + for<'span> LookupSpan<'span>,
{
    ... // elided for brevity
    fn on_enter(&self, span: &Id, ctx: Context<'_, S>) {
        let span = ctx.span(span).expect("Span not found");
        let mut extensions = span.extensions_mut();
        // Only record the first entry; spans can be entered more than once.
        if extensions.get_mut::<Instant>().is_none() {
            extensions.insert(Instant::now());
        }
    }
    fn on_close(&self, id: Id, ctx: Context<'_, S>) {
        let span = ctx.span(&id).expect("Span not found");
        let mut extensions = span.extensions_mut();
        let elapsed_milliseconds = extensions
            .get_mut::<Instant>()
            .map(|i| i.elapsed().as_millis())
            .unwrap_or(0);
        let visitor = extensions
            .get_mut::<Storage>()
            .expect("Visitor not found on 'record'");
        visitor.values.insert(
            LATENCY_FIELD, // "latency_ms"
            Cow::from(format!("{}", elapsed_milliseconds)),
        );
    }
    ... // elided for brevity

Spans are inherently tagged time intervals, with a clear-cut beginning and end. For the scope of a span, we want to capture the elapsed time between when the span with a given id was entered (Instant::now) and when it’s closed for a given operation.

Storing the latency for each span within our extensions enables other layers to derive metrics automatically and helps with exploration while debugging event logs for a given span id. Below, we can see the opening and closing of a vendor task/process span with id=452612587184455697, which took 18ms from start to finish:

level=INFO span_name=vendor.lastfm.task span=452612587184455697 parent_span=span=452612587184455696 span_event=new_span timestamp=2022-10-31T12:35:36.913335Z trace_id=c53cb20e4ab4fa42aa5836d26e974de2 http.client_ip=127.0.0.1:51029 subject=vendor.lastfm application.request_path=/songs http.method=POST category=vendor http.host=127.0.0.1:3030 http.route=/songs request_id=01GGQ0MJ94E24YYZ6FEXFPKVFP

level=INFO span_name=vendor.lastfm.task span=452612587184455697 parent_span=span=452612587184455696 span_event=close_span timestamp=2022-10-31T12:35:36.931975Z trace_id=c53cb20e4ab4fa42aa5836d26e974de2 latency_ms=18 http.client_ip=127.0.0.1:51029 subject=vendor.lastfm application.request_path=/songs http.method=POST category=vendor http.host=127.0.0.1:3030 http.route=/songs request_id=01GGQ0MJ94E24YYZ6FEXFPKVFP
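The enter/close timing pattern can be isolated into a few lines of std-only Rust. `SpanTiming` is a hypothetical type standing in for the Instant kept in a span’s extensions; it is a sketch of the idea rather than the layer’s actual code.

```rust
use std::time::{Duration, Instant};

/// Hypothetical per-span timing slot, standing in for an `Instant`
/// stored in span extensions.
#[derive(Default)]
pub struct SpanTiming {
    entered_at: Option<Instant>,
}

impl SpanTiming {
    /// Records the first entry only; entering again keeps the original start.
    pub fn on_enter(&mut self) {
        if self.entered_at.is_none() {
            self.entered_at = Some(Instant::now());
        }
    }

    /// Returns the time since the first entry, or zero if never entered.
    pub fn on_close(&self) -> Duration {
        self.entered_at
            .map(|start| start.elapsed())
            .unwrap_or_default()
    }
}
```

Keeping only the first entry matters for async code, where a span may be entered and exited many times as its future is polled; the latency we want spans the whole interval, not just the last poll.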

Augmenting structured logs with contextual information

Now we’ll get a glimpse into how our storage data can be tapped into for actual telemetry output by our event log formatting layer:

.with(LogFmtLayer::new(writer).with_target(true)...

When it comes to writing custom layers and subscriber implementations, many examples tend toward custom formatters.

(N.B., the log examples above use the logfmt format, inspired by an implementation from InfluxDB.)

We recommend using published layers or libraries, or following existing tutorials, for diving into the specifics of generating data in a preferred format.

For this article, which builds up our own custom formatter layer, we’ll re-familiarize ourselves with the span lifecycle, specifically for span and event logs, which will now take advantage of our storage map.

On new span

impl<S, Wr, W> Layer<S> for LogFmtLayer<Wr, W>
where
    Wr: Write + 'static,
    W: for<'writer> MakeWriter<'writer> + 'static,
    S: Subscriber + for<'span> LookupSpan<'span>,
{
    fn on_new_span(&self, _attrs: &Attributes<'_>, id: &Id, ctx: Context<'_, S>) {
        let mut p = self.printer.write();
        let metadata = ctx.metadata(id).expect("Span missing metadata");
        p.write_level(metadata.level());
        p.write_span_name(metadata.name());
        p.write_span_id(id);
        p.write_span_event("new_span");
        p.write_timestamp();
        let span = ctx.span(id).expect("Span not found");
        let extensions = span.extensions();
        if let Some(visitor) = extensions.get::<Storage>() {
            for (key, value) in visitor.values() {
                p.write_kv(
                    decorate_field_name(translate_field_name(key)),
                    value.to_string(),
                )
            }
        }
        p.write_newline();
    }
    ... // elided for brevity

The above code uses the MakeWriter trait to print formatted text representations of span events. The call to decorate_field_name and all the printer write methods apply specific formatting rules under the hood (again, in this case, logfmt).

Going back to our previous span log example, it’s now more apparent where keys like level, span, and span_name are set. One piece of code to call out here is how we loop, for (key, value), over the values read from our storage map, lifting information we observed and collected in the previous layer.

We use this to provide context to augment our structured log events in another layer. Put another way, we’re composing specific sub-behaviors on trace data via layers in order to build out a singular subscriber to the overall trace. Field keys like http.route and http.host are lifted from this storage layer, for example.
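To show how stored fields end up in a log line, here is a std-only sketch of a logfmt-style writer. `format_value` and `span_log_line` are hypothetical helpers, the quoting rules are a simplification rather than a full logfmt implementation, and a BTreeMap is used (instead of the article’s HashMap) only so that the output order is deterministic.

```rust
use std::collections::BTreeMap;

/// Formats one value in a logfmt-like style: quote it when it is empty
/// or contains spaces, quotes, or `=`.
fn format_value(value: &str) -> String {
    if value.is_empty() || value.contains(|c: char| c == ' ' || c == '"' || c == '=') {
        format!("\"{}\"", value.replace('\\', "\\\\").replace('"', "\\\""))
    } else {
        value.to_string()
    }
}

/// Writes the fixed keys first, then every stored field in key order.
pub fn span_log_line(
    level: &str,
    span_name: &str,
    span_id: u64,
    stored: &BTreeMap<&str, String>,
) -> String {
    let mut parts = vec![
        format!("level={}", level),
        format!("span_name={}", format_value(span_name)),
        format!("span={}", span_id),
    ];
    for (key, value) in stored {
        parts.push(format!("{}={}", key, format_value(value)));
    }
    parts.join(" ")
}
```

The formatter knows nothing about HTTP or vendors; every contextual key it prints comes from the stored map, which is the separation of concerns the layered design is after.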

On event

impl<S, Wr, W> Layer<S> for LogFmtLayer<Wr, W>
where
    Wr: Write + 'static,
    W: for<'writer> MakeWriter<'writer> + 'static,
    S: Subscriber + for<'span> LookupSpan<'span>,
{
    ... // elided for brevity
    fn on_event(&self, event: &Event<'_>, ctx: Context<'_, S>) {
        let mut p = self.printer.write();
        p.write_level(event.metadata().level());
        event.record(&mut *p);
        // record source information
        p.write_source_info(event);
        p.write_timestamp();
        ctx.lookup_current().map(|current_span| {
            p.write_span_id(&current_span.id());
            let extensions = current_span.extensions();
            extensions.get::<Storage>().map(|visitor| {
                for (key, value) in visitor.values() {
                    if !ON_EVENT_SKIP_FIELDS.contains(key) {
                        p.write_kv(
                            decorate_field_name(translate_field_name(key)),
                            value.to_string(),
                        )
                    }
                }
            })
        });
        p.write_newline();
    }
    ... // elided for brevity

Though somewhat tedious, the pattern for implementing these span lifecycle methods is getting easier and easier to make out. Field key-value pairs like target and location are formatted from source information, giving us the target="application::middleware::logging" and location="src/middleware/logging.rs:354" seen previously. Keys like vendor.request_path and vendor.url are also lifted from contextual storage.

While more work would probably go into implementing any formatting specification correctly, we can now see the granular control and customization that the tracing framework provides. This contextual information is how we’ll eventually be able to form correlations within a request lifecycle.

Deriving metrics through instrumentation and span durations

Metrics, in particular, are actually quite hostile to observability on their own, and the cardinality of a metric, the number of unique combinations of metric names and dimension values, can easily be abused.

The event is the connective tissue for metrics, logs and tracing, because you can derive the first two from events and you can visualize the third from events. But it doesn’t go in reverse: you can never work backwards from metrics, logs, and/or traces to get your event back. – (Charity Majors, on Twitter)

We’ve already shown how structured logs can be derived from events. Metrics themselves should be formed from the event, or span, that encompassed them.

We’ll still need out-of-band metrics like those geared around process collection (e.g., CPU usage, disk bytes written/read). However, if we’re already able to instrument our code at the function level to determine when something occurred, couldn’t some metrics “fall out for free?” As mentioned previously, we have the tooling, but we just need to thread it through.

Tracing provides accessible ways to annotate functions a user wants to instrument, which means creating, entering, and closing a span each time the annotated function executes. The Rust compiler itself makes heavy use of these annotated instrumentations all over the codebase:

#[instrument(skip(self, op), level = "trace")]
pub(super) fn fully_perform_op<R: fmt::Debug, Op>(
    &mut self,
    locations: Locations,
    category: ConstraintCategory<'tcx>,
    op: Op) -> Fallible<R>

For our purposes, let’s look at a straightforward, async database save_event function that’s been instrumented with some very specific field definitions:

#[instrument(
    level = "info",
    name = "record.save_event",
    skip_all,
    fields(category="db", subject="aws_db", event_id = %event.event_id,
           event_type=%event.event_type, otel.kind="client", db.system="aws_db",
           metric_name="db_event", metric_label_event_table=%self.event_table_name,
           metric_label_event_type=%event.event_type),
    err(Display)
)]
pub async fn save_event(&self, event: &Event) -> anyhow::Result<()> {
    self.db_client
        .put_item()
        .table_name(&self.event_table_name)
        .set(Some(event))
        .send()
        .await...
}

Our instrumented function has metric-prefixed fields: metric_name, metric_label_event_type, and metric_label_event_table. These keys correspond to metric names and labels typically found in a Prometheus monitoring setup. We’ll come back to these prefixed fields shortly. First, let’s expand the MetricsLayer that we set up initially with some additional filters.

Essentially, these filters do two things: 1) produce metrics for all trace-log-level or higher events (even though they may not be logged to stdout based on a configured log level); and, 2) pass through events for instrumented functions with the record prefix attached, as in name = "record.save_event" above.

After this, all that’s left in order to automate metric derivation is to return to our metrics-layer implementation.

On close

const PREFIX_LABEL: &str = "metric_label_";
const METRIC_NAME: &str = "metric_name";
const OK: &str = "ok";
const ERROR: &str = "error";
const LABEL: &str = "label";
const RESULT_LABEL: &str = "result";

impl<S> Layer<S> for MetricsLayer
where
    S: Subscriber + for<'span> LookupSpan<'span>,
{
    fn on_close(&self, id: Id, ctx: Context<'_, S>) {
        let span = ctx.span(&id).expect("Span not found");
        let mut extensions = span.extensions_mut();
        let elapsed_secs_f64 = extensions
            .get_mut::<Instant>()
            .map(|i| i.elapsed().as_secs_f64())
            .unwrap_or(0.0);
        if let Some(visitor) = extensions.get_mut::<Storage>() {
            let mut labels = vec![];
            for (key, value) in visitor.values() {
                if key.starts_with(PREFIX_LABEL) {
                    labels.push((
                        key.strip_prefix(PREFIX_LABEL).unwrap_or(LABEL),
                        value.to_string(),
                    ))
                }
            }
            ... // elided for brevity
            let name = visitor
                .values()
                .get(METRIC_NAME)
                .unwrap_or(&Cow::from(span_name))
                .to_string();
            if visitor.values().contains_key(ERROR) {
                labels.push((RESULT_LABEL, String::from(ERROR)))
            } else {
                labels.push((RESULT_LABEL, String::from(OK)))
            }
            ... // elided for brevity
            metrics::increment_counter!(format!("{}_total", name), &labels);
            metrics::histogram!(
                format!("{}_duration_seconds", name),
                elapsed_secs_f64,
                &labels
            );
            ... // elided for brevity

There are a lot of bits being pushed around in this example, and some of it is elided. Still, in on_close, we always have access to the end of a span interval via elapsed_secs_f64, which can drive our histogram calculation via the metrics::histogram! macro.

Note that we’re leveraging the metrics-rs project here. Anyone can model this function the same way with another metrics library that provides counter and histogram support. From our storage map, we pull out all metric_*-labeled keys and use those to generate labels for the automatically derived incremented counter and histogram.

Also, if we’ve stored an event that errored out, we’re able to use that as part of the label, distinguishing our resulting functions based on ok/error. Given any instrumented function, we’ll derive metrics from it with this same code behavior.

The output we’d encounter from a Prometheus endpoint would show a counter that looks something like this:

db_event_total{event_table="events",event_type="Song",result="ok",span_name="save_event"} 8
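The label derivation can be checked in isolation with a std-only sketch that involves no metrics library. `derive_counter` and `render_sample` are hypothetical helpers; the constants reuse the names from the layer above, a BTreeMap keeps label order deterministic, and the rendered line only approximates the Prometheus text format (this sketch does not add the span_name label seen in the sample output above).

```rust
use std::collections::BTreeMap;

const PREFIX_LABEL: &str = "metric_label_";
const METRIC_NAME: &str = "metric_name";
const ERROR: &str = "error";
const RESULT_LABEL: &str = "result";

/// Derives a counter name and its labels from stored span fields.
/// `span_name` is the fallback when no `metric_name` field was recorded.
pub fn derive_counter(
    stored: &BTreeMap<&str, String>,
    span_name: &str,
) -> (String, Vec<(String, String)>) {
    // Every `metric_label_*` field becomes a label with the prefix stripped.
    let mut labels: Vec<(String, String)> = stored
        .iter()
        .filter_map(|(key, value)| {
            key.strip_prefix(PREFIX_LABEL)
                .map(|label| (label.to_string(), value.clone()))
        })
        .collect();
    // A stored error field flips the result label from ok to error.
    let result = if stored.contains_key(ERROR) { "error" } else { "ok" };
    labels.push((RESULT_LABEL.to_string(), result.to_string()));
    let name = stored.get(METRIC_NAME).map_or(span_name, |n| n.as_str());
    (format!("{}_total", name), labels)
}

/// Renders a sample line in the style of the Prometheus text format.
pub fn render_sample(name: &str, labels: &[(String, String)], value: u64) -> String {
    let rendered: Vec<String> = labels
        .iter()
        .map(|(k, v)| format!("{}=\"{}\"", k, v))
        .collect();
    format!("{}{{{}}} {}", name, rendered.join(","), value)
}
```

Fields without the metric prefix, such as category, are ignored here, which is one way to keep label cardinality under deliberate control.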

Instrumenting async closures and indirect span relationships

A question that does come up from time to time is how to instrument code with spans that reference an indirect, non-parent-to-child relationship, or what’s called a follows from reference.

This comes into play for async operations that spawn requests to side-effecting downstream services, or for processes that emit data to service buses, where the direct response or returned output has no effect within the operation that spawned it.

For these cases, we can instrument async closures (or futures) directly. We associate a given span (captured as a follows_from reference below) with our async future; the span is entered each time the future is polled and exited every time the future is parked, as shown below with .instrument(process_span):

// Start a span around the context process spawn
let process_span = debug_span!(
    parent: None,
    "process.async",
    subject = "songs.async",
    category = "songs"
);
// Link to the current span without making it the parent
process_span.follows_from(Span::current());

tokio::spawn(
    async move {
        match context.process().await {
            Ok(r) => debug!(song=?r, "successfully processed"),
            Err(e) => warn!(error=?e, "failed processing"),
        }
    }
    .instrument(process_span),
);
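To make the "entered on poll, exited on park" behavior concrete, here is a small standard-library-only sketch. It is not the tracing crate's implementation: `RecordingSpan`, this `Instrumented` wrapper, `YieldOnce`, `NoopWake`, and `run_instrumented` are all hypothetical names that only model the idea, with a vector of strings standing in for a real span.

```rust
use std::future::Future;
use std::pin::Pin;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

/// Hypothetical stand-in for a span: it only records enter/exit transitions.
#[derive(Default)]
struct RecordingSpan {
    events: Vec<&'static str>,
}

/// Simplified analogue of an instrumented future (not the tracing crate's type).
struct Instrumented<F> {
    inner: F,
    span: RecordingSpan,
}

impl<F: Future + Unpin> Future for Instrumented<F> {
    type Output = F::Output;

    fn poll(self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<Self::Output> {
        let this = self.get_mut();
        this.span.events.push("enter"); // entered for the duration of this poll
        let out = Pin::new(&mut this.inner).poll(cx);
        this.span.events.push("exit"); // exited when poll returns, ready or parked
        out
    }
}

/// A future that parks once before completing, to force two polls.
struct YieldOnce {
    yielded: bool,
}

impl Future for YieldOnce {
    type Output = u32;

    fn poll(mut self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<u32> {
        if self.yielded {
            Poll::Ready(7)
        } else {
            self.yielded = true;
            cx.waker().wake_by_ref();
            Poll::Pending
        }
    }
}

/// Waker that does nothing; enough for polling by hand.
struct NoopWake;

impl Wake for NoopWake {
    fn wake(self: Arc<Self>) {}
}

/// Poll the wrapped future to completion and return its output together
/// with the recorded span transitions.
fn run_instrumented() -> (u32, Vec<&'static str>) {
    let waker = Waker::from(Arc::new(NoopWake));
    let mut cx = Context::from_waker(&waker);
    let mut fut = Instrumented {
        inner: YieldOnce { yielded: false },
        span: RecordingSpan::default(),
    };
    loop {
        if let Poll::Ready(value) = Pin::new(&mut fut).poll(&mut cx) {
            return (value, fut.span.events);
        }
    }
}
```

Because the inner future parks once, the wrapper records two enter/exit pairs: the span is only "current" while the future is actually running, never while it waits.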

OpenTelemetry interoperability for distributed tracing

Much of the usefulness of observability comes from the fact that most services today are actually made up of many microservices. We should all be thinking distributed.

If all kinds of services have to connect to one another, across networks, vendors, clouds, and even edge-oriented or local-first peers, some standards and vendor-agnostic tooling should be enforced. This is where OpenTelemetry (OTel) comes into play, and many of the known observability platforms are more than happy to ingest OTel-compliant telemetry data.

While there’s a whole suite of open source Rust tooling for working within the OTel ecosystem, many of the well-known Rust web frameworks haven’t adopted OTel standards in a baked-in sort of way.

Popular, encompassing web frameworks like Actix and Tokio’s axum rely on custom implementations and external libraries to provide integration (actix-web-opentelemetry and axum-tracing-opentelemetry, respectively). Third-party integration has been the favored choice so far, and while that promotes flexibility and user control, it can make things more difficult for those expecting to add the integration almost seamlessly.

We won’t cover writing a custom implementation here in detail, but canonical HTTP middleware like Tower allows for overriding the default implementation of span creation on a request. If implemented to spec, these fields should be set on a span’s metadata:

http.client_ip: The client’s IP address
http.flavor: The protocol version used (HTTP/1.1, HTTP/2.0, etc.)
http.host: The value of the Host header
http.method: The request method
http.route: The matched route
http.request_content_length: The request content length
http.response_content_length: The response content length
http.scheme: The URI scheme used (HTTP or HTTPS)
http.status_code: The response status code
http.target: The full request target including path and query parameters
http.user_agent: The value of the User-Agent header
otel.kind: Typically server
otel.name: Name consisting of http.method and http.route
otel.status_code: OK if the response is success; ERROR if it’s a 5xx
trace_id: The identifier for a trace, used to group all spans for a specific trace together across processes
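Two of these fields are derived rather than copied from the request, so a short sketch may help. The helpers below are hypothetical and standard-library-only. Joining the method and route with a single space, treating only 2xx as success, and leaving every other status unset are assumptions for illustration, not rules stated in the list above.

```rust
/// Build otel.name from the HTTP method and the matched route.
/// The single-space separator is an assumption.
fn otel_name(method: &str, route: &str) -> String {
    format!("{} {}", method, route)
}

/// Derive otel.status_code from the HTTP response status.
/// 5xx maps to ERROR and 2xx to OK; anything else is left unset here,
/// which is an assumption made for this sketch.
fn otel_status_code(http_status: u16) -> Option<&'static str> {
    match http_status {
        500..=599 => Some("ERROR"),
        200..=299 => Some("OK"),
        _ => None,
    }
}
```

A custom span-creation hook could call helpers like these when recording fields on the request span, keeping the derivation logic in one place.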

Initializing a tracer

Tracing via tracing-opentelemetry and rust-opentelemetry exposes another layer with which we can compose our subscriber. This layer adds OTel contextual information to all spans, then connects and emits those spans to observability platforms like Datadog or Honeycomb, or directly to a running instance of Jaeger or Tempo, which can sample trace data for manageable consumption.

Initializing a Tracer to produce and manage spans is pretty straightforward:

pub fn init_tracer(settings: &Otel) -> Result<Tracer> {
    // Propagate trace context across service boundaries
    global::set_text_map_propagator(TraceContextPropagator::new());

    // Attributes that identify this service in every exported span
    let resource = Resource::new(vec![
        otel_semcov::resource::SERVICE_NAME.string(PKG_NAME),
        otel_semcov::resource::SERVICE_VERSION.string(VERSION),
        otel_semcov::resource::TELEMETRY_SDK_LANGUAGE.string(LANG),
    ]);

    let api_token = MetadataValue::from_str(&settings.api_token)?;
    let endpoint = &settings.exporter_otlp_endpoint;

    // Metadata sent along with every export request
    let mut map = MetadataMap::with_capacity(1);
    map.insert("x-tracing-service-header", api_token);

    let trace = opentelemetry_otlp::new_pipeline()
        .tracing()
        .with_exporter(exporter(map, endpoint)?)
        .with_trace_config(sdk::trace::config().with_resource(resource))
        .install_batch(runtime::Tokio)
        .map_err(|e| anyhow!("failed to initialize tracer: {:#?}", e))?;

    Ok(trace)
}

Including it within our pipeline of layers is straightforward as well. We can also filter based on level and use a dynamic filter to skip events that we’d like to avoid in our traces:

.with(
    tracing_opentelemetry::layer()
        .with_tracer(tracer)
        .with_filter(LevelFilter::DEBUG)
        .with_filter(dynamic_filter_fn(|_metadata, ctx| {
            // Skip anything recorded inside a parentless "Connection" span
            !ctx.lookup_current()
                .map(|s| s.parent().is_none() && s.name() == "Connection")
                .unwrap_or_default()
        })),
)
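The decision such a dynamic filter makes can be isolated as a plain predicate. The sketch below is standard-library-only and hypothetical: `SpanInfo` and `should_export` are illustrative names, and an `Option` stands in for looking up the current span, which may not exist.

```rust
/// Hypothetical, simplified view of the current span.
struct SpanInfo {
    name: &'static str,
    has_parent: bool,
}

/// Returns true when telemetry should be kept.
/// Only a parentless span named "Connection" is filtered out; when there
/// is no current span at all, the default (false) is negated to true.
fn should_export(current: Option<&SpanInfo>) -> bool {
    !current
        .map(|s| !s.has_parent && s.name == "Connection")
        .unwrap_or_default()
}
```

Writing the predicate as a pure function like this makes the edge cases, such as events with no current span, easy to check before wiring it into a filter.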

With this pipeline initialization, all of our application traces can be ingested by a tool like Jaeger, as we demonstrated earlier in this article. Then, all that’s left is data correlation, slicing, and dicing.

Conclusion

By composing these tracing layers together, we can expose system behavior information in a refined and granular way, all the while gaining enough output and enough context to start making sense of such behavior. All this customization still comes at a price: it’s not completely automated all the way through, but the patterns are idiomatic, and there are open-source layers available to drop in for many normal use cases.

Above all, this post should help make it easier for users to attempt customizing application interaction with trace collection, and demonstrate how far it can go in preparing our applications to handle cases that inevitably fall between the cracks. This is just the beginning of our beautiful friendship with our events and when they happen, and thereby, observability. How we go about debugging and solving problems with it, in the long run, is always going to be ongoing work.

Special thanks

I’d like to offer my sincere thanks to Paul Cleary, Daniel Jin, Lukas Jorgensen, Jonathan Whittle, Jared Morrow, John Mumm, Sean Cribbs, Scott Fritchie, and Cuyler Jones for all their help, edits, and collaboration on this article.

Another major thanks to my team at fission.codes, especially Brian Ginsburg and Quinn Wilton, for all their backing on this, and to my advisor Frank Pfenning for putting up with me while I did some non-research work!
