Key segregation to limit exposure in the event of a data breach

This is a continuation of my series of posts on Batch Jobs for Cybersecurity and Cyber Security Metrics.

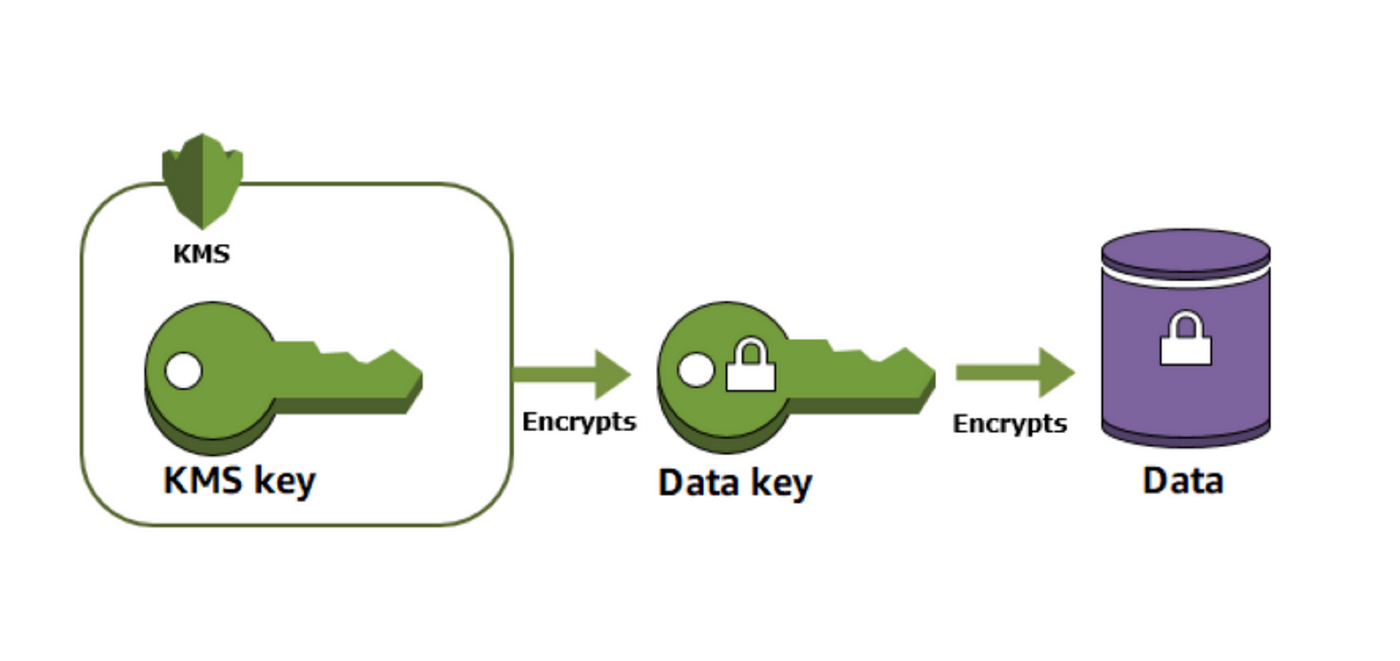

In order to protect the secrets and data involved in our data processing scenario for the batch jobs we’re going to create, we’ll make use of AWS Key Management Service (KMS). Let’s think through how we’d architect our use of KMS keys for this purpose.

Default encryption on AWS is nice; however, I’ve written before about how encryption that anyone with AWS credentials in an AWS account can decrypt is somewhat useless. Encryption for compliance’s sake is not sufficient. A proper and thoughtful encryption architecture will help you much more in the event of a data breach.

Before we get started, let’s review what we’ve covered so far, as these considerations are relevant to our architectural decisions.

I’m interested in requiring MFA to kick off an AWS Batch job.

We’re going to use a container for our batch job.

We need to secure that container along with any keys or data used by or processed by the batch jobs, plus any output.

One of the biggest risks in the cloud is credential abuse. I explained some ways attackers can abuse credentials in cloud environments in my last post. We need to take some steps to protect the credentials I need to store somewhere because of the way that MFA works on AWS.

AWS IAM Roles and MFA

One of the things I’ve already done prior to writing these blog posts was to implement a Docker container that requires MFA to run a process. It seems that in order to make that work we will need to use an AWS access key and secret key, because MFA on AWS is tied to users, not AWS IAM roles. A user with MFA will kick off the job to allow the batch job to assume a role. From that point, the batch job can use the assumed role.

As I wrote in this short blog post, we cannot require MFA in IAM policies for actions taken by assumed roles because the metadata does not indicate (at least not at the time of this writing) that someone was using credentials with MFA to assume the role.

We can enforce the use of MFA to assume the role. Here’s where we run into some issues with protecting credentials.
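As a rough illustration of enforcing MFA when a role is assumed, the sketch below builds a role trust policy that uses the `aws:MultiFactorAuthPresent` condition key. The account ID and user name are placeholders made up for this example, not values from this project.

```python
import json


def mfa_trust_policy(account_id: str, user_name: str) -> dict:
    """Build a role trust policy that only allows sts:AssumeRole when MFA was used."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # The IAM user (not a role) who authenticates with MFA.
                "Principal": {"AWS": f"arn:aws:iam::{account_id}:user/{user_name}"},
                "Action": "sts:AssumeRole",
                # Deny by omission: the statement only matches when MFA is present.
                "Condition": {"Bool": {"aws:MultiFactorAuthPresent": "true"}},
            }
        ],
    }


# Hypothetical account ID and user name, for illustration only.
policy = mfa_trust_policy("111122223333", "batch-job-user")
print(json.dumps(policy, indent=2))
```

The condition applies to the call that assumes the role, which is why the user's long-lived credentials and an MFA token are needed at that step.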

When the batch job runs, credentials and a token will need to be passed into the batch job so it can assume a role that requires MFA. I don’t want users to have to store credentials in GitHub or pass credentials around to run batch jobs. That seems risky. I want to pull those credentials out of AWS Secrets Manager. I also want to protect those credentials in Secrets Manager by encrypting them with an AWS KMS key.
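Here is a minimal sketch of what pulling those credentials from Secrets Manager might look like. It assumes the secret is stored as a JSON string. The secret name and field names are hypothetical, and a stand-in client is used so the example runs without AWS access.

```python
import json


def load_batch_credentials(secrets_client, secret_id: str) -> dict:
    """Read an access key pair stored as a JSON secret string.

    secrets_client is expected to behave like boto3.client("secretsmanager").
    When the secret is encrypted with a customer managed KMS key, the caller
    also needs kms:Decrypt on that key for this call to succeed.
    """
    response = secrets_client.get_secret_value(SecretId=secret_id)
    return json.loads(response["SecretString"])


class FakeSecretsClient:
    """Stand-in for the real client so the sketch runs offline."""

    def get_secret_value(self, SecretId: str) -> dict:
        # Hypothetical field names; the real secret layout is up to you.
        return {
            "SecretString": json.dumps(
                {"access_key_id": "AKIAEXAMPLE", "secret_access_key": "example"}
            )
        }


creds = load_batch_credentials(FakeSecretsClient(), "batch/credentials")
print(sorted(creds))
```

Against a real account you would pass `boto3.client("secretsmanager")` instead of the fake client.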

I have some thoughts about how this might all work in the end, but this is all a proof of concept to see if I can make it work. At this point, I know that I want to have separate keys for the credentials and the data. This is aligned with the concept of separating the control plane and the data plane when architecting and securing systems. The encryption key that protects the credentials that control the job will be separate from the encryption key that protects the data. I could create two separate keys named:

batch.credentials.key

data.key

If I worked at a large organization, I could grant different people access to these separate keys. I could write much more on that topic, but for now we’re just trying to separate concerns.

Data Before and After Transformation

What’s my ultimate goal for this batch job? I’ve been writing about security metrics and reporting. I’m particularly interested in value-based security metrics. I’m going to show you how to transform data from multiple sources into a report.

The batch jobs I write are going to pull data out of one S3 bucket, transform it, and then store it in another S3 bucket. Perhaps I’ll also have event-driven security batch jobs. The source data comes from different projects. Once each project is complete, the data can be archived.

The data needs to be decrypted each time it’s read and encrypted each time it gets stored. As the data moves through a process, I can grant permission to decrypt the data with certain keys only to some roles, and permission to encrypt the data only to other roles.

If the credentials of someone who has encrypt-only permissions are stolen, then whoever stole those credentials can’t read the data. They can only add new data and encrypt it. That’s still a concern, but we’ve cut out a piece of risk in this process. If a cloud identity has decrypt-only permissions, it cannot tamper with the source data, only read it, because it would not be able to re-encrypt the data and return it to the original location in the proper encrypted format. The source data remains pristine and unaffected by our transformation processes.

I might have different people working on projects generating data. They generate the data and are allowed to encrypt and store the source data. Then the batch jobs are allowed to decrypt the source data and re-encrypt it with a separate key. I could create two separate keys for source and transformation data, so I’d have something like this:

batch.credentials.key

data.source.key (source generator: encrypt, transformation: decrypt)

data.transform.key (transformation: encrypt and decrypt)
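To make the encrypt-only and decrypt-only split concrete, here is a sketch of the key policy statements that could go on the source key. The role ARNs are hypothetical, and the exact set of actions is an assumption: S3 uploads with SSE-KMS rely on `kms:GenerateDataKey`, and reads rely on `kms:Decrypt`, but your workload may need others. A complete key policy would also need a statement for key administrators, which is omitted here.

```python
import json


def source_key_statements(generator_role_arn: str, transform_role_arn: str) -> list:
    """Statements for data.source.key: generators encrypt, the batch job decrypts."""
    return [
        {
            "Sid": "SourceGeneratorEncryptOnly",
            "Effect": "Allow",
            "Principal": {"AWS": generator_role_arn},
            # No kms:Decrypt here, so stolen generator credentials cannot read data.
            "Action": ["kms:Encrypt", "kms:GenerateDataKey"],
            "Resource": "*",  # in a key policy, "*" refers to the key itself
        },
        {
            "Sid": "TransformationDecryptOnly",
            "Effect": "Allow",
            "Principal": {"AWS": transform_role_arn},
            # No encrypt actions here, so the batch job cannot rewrite source data.
            "Action": ["kms:Decrypt"],
            "Resource": "*",
        },
    ]


# Hypothetical role ARNs, for illustration only.
statements = source_key_statements(
    "arn:aws:iam::111122223333:role/source-generator",
    "arn:aws:iam::111122223333:role/batch-transform",
)
print(json.dumps(statements, indent=2))
```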

Separate Keys for Separate Projects

In addition, a person working on one project will not need to see the data associated with another project. Using the need-to-know or zero trust model, I can assign different encryption keys for each project. Using separate keys can help limit the blast radius in a data breach.

How Separate Keys Help Limit Blast Radius in a Data Breach

This concept of separate keys for different applications would have helped, and to some extent did help, limit the blast radius in the Capital One breach. I talked to some friends involved (who no longer work for the company) over dinner recently. One thing I always wondered was why the attacker could access *all* the data in *all* the S3 buckets. I learned that, in fact, some of the S3 buckets used customer managed KMS keys with zero trust policies, and those buckets were not accessible by the attacker. We’ll use that concept in our KMS design for our batch jobs processing reports for different purposes.

I want to automate the creation of these keys, so I need a unique key name for each project. I can change the names of the source and transformation keys to include the project ID.

batch.credentials.key

[projectid].data.source.key

[projectid].data.transform.key

Production Data for Customers

Once the data gets transformed into a report for the customer, the report can be encrypted with a separate report key. This type of transformation ensures the people with access to the original source cannot access the final report. I could use various methods to encrypt the final project report with a key provided by the customer that only they and appropriate staff at 2nd Sight Lab can use to decrypt the data.

batch.credentials.key

[projectid].data.source.key

[projectid].data.transform.key

[projectid].data.report.key

Encrypted Secrets and Virtual Machines

We’ll want to encrypt any secrets used in this process and the data on virtual machines. In my case I have some manual processes that generate data. Proper penetration testing will never be fully automated. Users generating data manually work on VMs in the cloud, and those VMs need to be encrypted.

I also generate a completely new environment for each project. Along with that, users need some information about the environment that will be stored in Secrets Manager or AWS Systems Manager Parameter Store. In either case, we’ll want to protect those secrets with a key that the user can use to decrypt the data or start a virtual machine (EC2 instance).

If the users are working on a particular project, we could use the KMS keys we used above for S3, but then consider what the key policy would need to be in that case. We’d need to grant a lot more permissions, as explained in this article that covers a common error when users don’t have access to a KMS key used to encrypt a VM.

We could create a separate key for each user, used to encrypt their own VMs and to read their own secrets. Then no one else would be able to access or view the data on a particular VM except the people who are allowed to use that decryption key. That would include 2nd Sight Lab administrators and the person working on that particular project with a particular VM. If a person is working on multiple projects they could have one key for their VM or, as we do, create separate VMs for each project. The keys would end up looking something like this:

batch.credentials.key

[projectid].data.source.key

[projectid].data.transform.key

[projectid].data.report.key

[projectid].[userid].key
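Since the goal is to automate key creation, the naming scheme above can be generated from a project ID and a list of user IDs. This is only a sketch of the naming step. The project and user IDs are made up, and the names are logical labels: how they map to KMS aliases or tags is left open.

```python
def project_key_names(project_id: str, user_ids: list) -> list:
    """Return the logical key names for one project, following the scheme above."""
    names = [
        "batch.credentials.key",              # shared control-plane key
        f"{project_id}.data.source.key",      # generators encrypt, batch job decrypts
        f"{project_id}.data.transform.key",   # batch job encrypts and decrypts
        f"{project_id}.data.report.key",      # final customer report
    ]
    # One key per user per project, for that user's VMs and secrets.
    names += [f"{project_id}.{user_id}.key" for user_id in user_ids]
    return names


# Hypothetical project and user IDs, for illustration only.
names = project_key_names("proj42", ["alice", "bob"])
for name in names:
    print(name)
```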

Backups

I generally wait a while after a project to ensure customers don’t have any questions. After I confirm with them that they’re satisfied, I archive the data.

When the project is complete I no longer need the transformation data, the transformation key, or the user-specific project keys. I can always re-run the transformation process from the source data if needed, so I can delete all the transformation-related resources to save money. I can also archive the VMs used on the project and, if using automation, restore user access fairly easily if required.

I can back up the data with a separate key that’s never used except in a break-glass scenario, for example if a customer needed access to an old report and something had happened to the report data available to customers.

I can use a single backup key for easier management and to save money once a project is complete.

There are many more considerations related to backups to help defend against ransomware and create a resilient architecture, but for now let’s say that after the project is complete we should end up with the following keys:

batch.credentials.key

[projectid].data.report.key

archive.data.key

This may create some complications with encrypted AMIs. You will need to decrypt and re-encrypt the data onto EBS snapshots encrypted with your archive key, and test your backups to make sure you can restore them properly. You can save money by storing your snapshots using the Amazon EBS Snapshots Archive storage tier.

AWS Accounts for Segregation

One of the things I’ve been testing out lately is how I structure AWS accounts for segregation of duties. I put my KMS keys in a separate account. At one large bank I worked at we had a separate team that handled encryption. If your organization operates this way, the people who provide encryption keys to others can do so in a separate account and provide cross-account access to KMS keys to the people who need them. That way there’s less chance that someone will get access to those KMS keys and change the policies on them.
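Cross-account use of a KMS key needs permissions on both sides: the key policy in the key account has to allow the external principal, and an IAM policy in the other account has to allow that principal to use the key. The sketch below builds both pieces. The ARNs and the chosen action are hypothetical examples.

```python
import json


def cross_account_access(key_arn: str, consumer_role_arn: str, actions: list) -> dict:
    """Return the two policy pieces needed for a role in another account to use a key."""
    # Goes into the key policy, managed in the separate KMS account.
    key_policy_statement = {
        "Sid": "AllowUseFromOtherAccount",
        "Effect": "Allow",
        "Principal": {"AWS": consumer_role_arn},
        "Action": actions,
        "Resource": "*",
    }
    # Attached to the role in the account that consumes the key.
    consumer_iam_policy = {
        "Version": "2012-10-17",
        "Statement": [{"Effect": "Allow", "Action": actions, "Resource": key_arn}],
    }
    return {
        "key_policy_statement": key_policy_statement,
        "consumer_iam_policy": consumer_iam_policy,
    }


# Hypothetical ARNs: the key lives in account 111122223333, the role in 444455556666.
access = cross_account_access(
    "arn:aws:kms:us-east-1:111122223333:key/example-key-id",
    "arn:aws:iam::444455556666:role/batch-transform",
    ["kms:Decrypt"],
)
print(json.dumps(access, indent=2))
```

Because only the KMS account can edit the key policy, someone who compromises the consuming account cannot widen access to the key on their own.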

I did face one issue with KMS key cross-account logging that I’m still looking into, and I hope the AWS documentation is updated on that point before I get back to it.

I also haven’t been able to get some aspects of organizational policies working with KMS keys. I tried to give an organizational unit access to use a KMS key to start VMs encrypted with that key, but wasn’t able to get that working on my first attempt. In the end, as explained above, I’ve decided to create user-specific keys anyway, so it’s a moot point.

Automation

Of course, all of the above is a bit complicated. Manual processes are not going to be feasible. We’re going to need to think through how we automate the creation of all these keys and secure that creation process so it can’t be abused. I’ll be thinking through the automation of all of this in my next post.

Pricing Considerations

High-volume users will want to look at the price of KMS keys. You will need to determine how many keys you need to support your use case and how many times you will need to encrypt and decrypt the data. The way I read the KMS pricing, the cost for KMS usage is calculated on aggregate usage, not per key, but I haven’t tested that. You may want to do a POC or clarify that with AWS if you plan to make heavy use of this service. In other words, if you have 5 KMS keys and you make 5,000 requests with each key, will you pay $0.03 for each key because each is below the 10,000-request threshold, or are the 25,000 requests aggregated and billed in blocks of 10,000? This could matter if you have a huge volume of requests.
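To see how much the two readings differ, here is the arithmetic for the example above. This sketch assumes $0.03 per block of 10,000 requests, assumes partial blocks are rounded up, and ignores any free tier. Neither model is confirmed as how AWS actually bills, so treat the numbers as a comparison of interpretations only.

```python
import math
from decimal import Decimal

PRICE_PER_BLOCK = Decimal("0.03")  # price per 10,000 requests, as quoted above
BLOCK_SIZE = 10_000


def cost_billed_per_key(requests_by_key: list) -> Decimal:
    """Interpretation 1: each key's requests are rounded up to blocks separately."""
    return sum(PRICE_PER_BLOCK * math.ceil(n / BLOCK_SIZE) for n in requests_by_key)


def cost_billed_in_aggregate(requests_by_key: list) -> Decimal:
    """Interpretation 2: requests are summed across keys, then rounded up to blocks."""
    total_requests = sum(requests_by_key)
    return PRICE_PER_BLOCK * math.ceil(total_requests / BLOCK_SIZE)


usage = [5_000] * 5  # 5 keys, 5,000 requests each
per_key_cost = cost_billed_per_key(usage)
aggregate_cost = cost_billed_in_aggregate(usage)
print(per_key_cost)    # 0.15
print(aggregate_cost)  # 0.09
```

At this scale the difference is pennies, but the gap grows with the number of lightly used keys, which is exactly the pattern a key-per-project and key-per-user design produces.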

Limits

High-volume users will also need to be aware of AWS quotas (limits). I will not be hitting limits with my level of usage, but other organizations may need to request limit increases or change their design to accommodate higher KMS usage levels. You don’t want to find out you are hitting a limit by causing a service outage, so make sure you understand your requirements in advance if you think you might hit these limits.

Thinking ahead

I’m not sure if this is exactly how this project will end up, but this is the thought process I go through when designing new systems. As always, plans can change as you make new discoveries during the development process. Let’s create some KMS keys next. Follow me for more in this series.

Teri Radichel

© 2nd Sight Lab 2022
