It’s clear that this current era of “AI” / LLM tools likes providing a “chat box” as the primary interaction model. Both Bard and OpenAI’s interface center a text input at the bottom of the screen (like most messaging clients), and you converse with it a bit like you would text with your friends and family. Design calls that an affordance. You don’t need to be taught how to use it, because you already know. This was probably a smart opening play. For one, they want to show us how smart they are, and if they can even partially answer our questions, that’s impressive. Two, it teaches you that the response to the first thing you entered isn’t the final answer; it’s just part of a conversation, and sending through more text is something you can and should do.

But not everyone is impressed with the chat box as the interface. Maggie Appleton says:

“But it’s also the lazy solution. It’s only the obvious tip of the iceberg when it comes to exploring how we might interact with these strange new language model agents we’ve grown inside a neural net.”

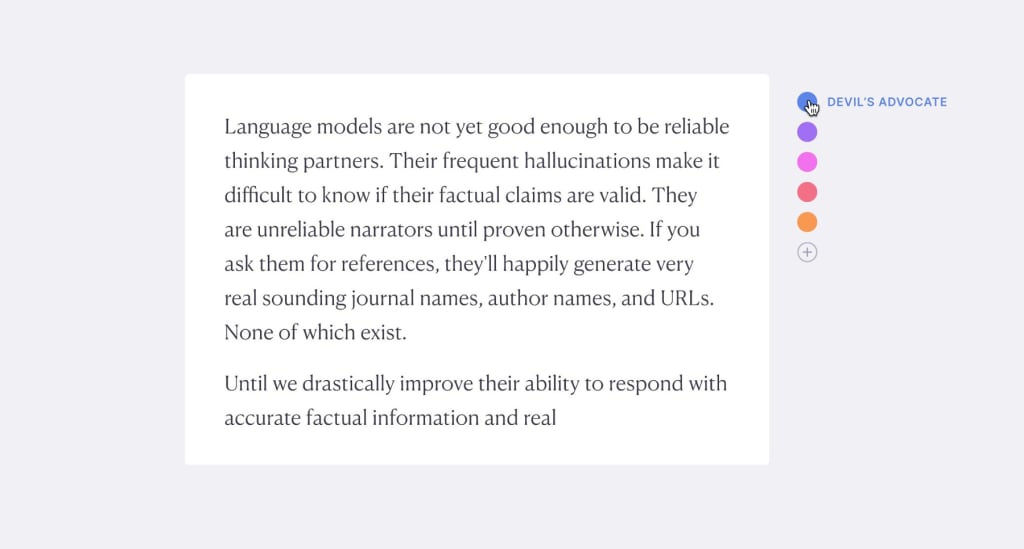

Maggie goes on to showcase an idea for a writing assistant leveraging an LLM. Highlight a bit of text, for example, and the UI offers you a variety of flavored feedback. Want it to play devil’s advocate? That’s the blue toggle. Need some praise? Need it shortened or lengthened? Need it to find a source you forgot? Need it to highlight awkward grammar? Want it to suggest a different way to phrase it? Those are different colored toggles.

Notably, you didn’t have to type in a prompt; the LLM started helping you contextually based on what you were already doing and what you want to do. Much less friction there. More help for less work. That doesn’t mean this tool wouldn’t be prompt-powered behind the scenes. It still could be! It could craft prompts for an LLM API from the selected text plus additional text that’s proven to get the job done that the tool is designed to do.

Data + Context + Sauce = Useful Output

That’s how I think of it anyway, and none of those things require a chat box.
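To make that formula a little more concrete, here is a minimal sketch of how a toggle-driven tool might assemble a prompt without the user typing one. Everything in it is an assumption made for illustration: the toggle names, the instruction wording in `SAUCE`, the `Selection` fields, and the `build_prompt` helper are all hypothetical, and the sketch stops short of calling any real LLM API.

```python
from dataclasses import dataclass

# Hypothetical "sauce": pre-written instructions, one per UI toggle.
# Names and wording are illustrative, not taken from any real product.
SAUCE = {
    "devils_advocate": "Argue against the claim made in the selected text.",
    "praise": "Point out what works well in the selected text.",
    "shorten": "Rewrite the selected text more concisely.",
    "find_source": "Suggest what kind of source would support the selected text.",
}


@dataclass
class Selection:
    text: str            # the "data": what the user highlighted
    document_title: str  # the "context": where the text lives
    surrounding: str     # more "context": the paragraph around it


def build_prompt(selection: Selection, toggle: str) -> str:
    """Combine data + context + sauce into a single prompt string."""
    if toggle not in SAUCE:
        raise ValueError(f"Unknown toggle: {toggle}")
    return "\n\n".join([
        SAUCE[toggle],
        f"Document title: {selection.document_title}",
        f"Surrounding paragraph: {selection.surrounding}",
        f"Selected text: {selection.text}",
    ])


if __name__ == "__main__":
    sel = Selection(
        text="The chat box is the lazy solution.",
        document_title="Thoughts on LLM interfaces",
        surrounding="Chat is familiar. The chat box is the lazy solution.",
    )
    # The user only clicked a toggle; the tool wrote the prompt.
    print(build_prompt(sel, "devils_advocate"))
```

The point of the sketch is only that the user’s click picks the sauce and the app supplies the data and context, so whatever string eventually goes to a model never has to pass through a chat box.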

While I just got done telling you the chat box is an affordance, Amelia Wattenberger argues it’s actually not. It’s not, because “just type something” isn’t really all you need to know to use it. At least not to use it well. To get actually good results, you need to provide a lot, like how you want the machine to respond, what tone it should strike, what it should specifically include, and anything else that might help it along. These incantations are awfully tricky to get right.

Amelia is thinking along the same lines as Maggie: a writing assistant where the model is fed contextual information and a variety of choices rather than needing a user to specifically prompt anything.

It might boil down to a best practice, something like: offer a prompt box if it’s truly useful, but otherwise try to do something better.

A lot of us coders have already experienced what better can be. If you’ve tried GitHub Copilot, you know that you aren’t constantly writing custom prompts to get useful output. Useful output is just constantly shown to you in the form of ghost code launching out in front of the code you’re already writing, for you to take or not. There is no doubt this is a great experience for us and makes the most of the model’s powers.

I get the sense that even the models are better when they are trained hyper-contextually. If I want poetry writing help, I would hope that the model is trained on… poetry. Same with Copilot. It’s trained on code, so it’s good at code. I think that’s what makes Phind useful. It’s (probably) trained on coding documentation, so the results are reliably in that vein. It uses a text box prompt, but that’s kind of the point. I’m also a fan of Phind because it proves that models can tell you the source of their answers as they give them to you, something the bigger models have chosen not to do, which I think is gross and driven by greed.

Geoffrey Litt makes a good point about the UX of all this in Malleable software in the age of LLMs. What’s a better experience, typing “trim this video from 0:21 to 1:32” into a chat box or dragging a trimming slider from the left and right sides of a timeline? (That’s rhetorical: it’s the latter.)

Even though we’ve been talking mostly about LLMs, I think all this holds true for the image models as well. It’s impressive to type “A dense forest scene with a purple Elk dead center in it, staring at you with big eyes, in the style of a charles close painting” and get anything anywhere near that back. (Of course, with the copyright ambiguity that allows you to use it on a billboard today.) But it’s already proving that that parlor trick isn’t as useful as contextual image generation. “Painting” objects out of scenes, expanding existing backgrounds, or changing someone’s hair, shirt, or smile on the fly is far more practical. Photoshop’s Generative Fill feature does just that and requires no silly typing of special words into a box. Meta’s model that automatically breaks up complex photos into parts you can manipulate independently is a great idea, as it’s something design tool experts have been doing by hand for ages. It’s a laborious task that nobody relishes. Let the machines do it automatically; just don’t make me type out my request.