Like everybody and their brother, I’ve been playing with ChatGPT and asking it life’s essential questions. It confidently tells me things, and some of those things are incredibly insightful. However, people and chatbots that are wrong often state things confidently.


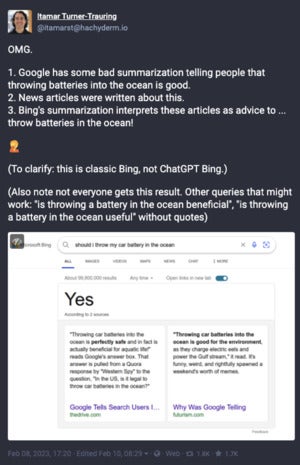

I saw a recent Google Answer posted on Mastodon. In this “answer,” Google suggested that there were benefits to disposing of used car batteries in the ocean, including charging electric eels and powering the Gulf Stream. Bing then picked up this answer and also suggested the environmental benefits of illicit battery disposal. I wonder if Google realizes you can use motor oil to fertilize your lawn. (Author’s note: please don’t throw batteries in the ocean or fertilize your lawn with motor oil.)

Presumably, people searching on this are looking for the joke, but it raises an interesting question. As more people use ChatGPT and publish their results on the web, and more AI question-answering systems pick up their own generated responses and those of others and train on them, what will happen?

I did what anyone would do for a question they don’t know the answer to. I asked ChatGPT, “What will happen when people publish the output of ChatGPT and it is trained on its own responses?”

The bot replied:

If people publish the output of ChatGPT and use it to train the model further on its own responses, this is known as self-training or self-supervised learning. In this scenario, the model will continue to learn from its own output and potentially improve its performance over time.

However, there are some potential risks to consider when using self-training. One risk is that the model may overfit to its own output, meaning that it becomes too specialized in responding to the types of questions and statements that it has previously encountered. This could lead to the model performing poorly on new or unseen data.

Another risk is that the model may start generating nonsensical or inappropriate responses if it is not properly monitored and supervised during self-training. This could be due to the model picking up on patterns or biases in its own output and amplifying them over time.

Therefore, it is important to use caution when self-training a language model like ChatGPT and to carefully monitor its performance and output to ensure that it continues to generate high-quality responses.

That points to some really scary social consequences, since people seem to believe ChatGPT and think it is magically correct (as well as emotional). I wonder what the social consequences would be? I asked, “What could be the social effects of people depending on ChatGPT and it starting to produce nonsense results because it is trained on its own information?”

The robot said:

If people become overly reliant on a language model like ChatGPT and it starts to produce nonsensical results because it is trained on its own information, this could have several negative social effects.

Firstly, people may become frustrated or confused when ChatGPT provides nonsensical or inappropriate responses to their questions or statements. This could lead to a breakdown in communication and a loss of trust in the technology.

Secondly, if people rely on ChatGPT to provide important information or make important decisions, the consequences of the model producing nonsense results could be severe. For example, if a ChatGPT-based chatbot is used to provide medical advice or diagnose health conditions, relying on nonsensical responses could lead to serious health risks.

Thirdly, if ChatGPT is used to generate content for social media or other online platforms, it could lead to the spread of misinformation or fake news. This could have serious social and political consequences, as false information could be used to manipulate public opinion or undermine democratic processes.

In general, it is important to use language models like ChatGPT with caution and to monitor their performance carefully to ensure that they continue to produce accurate and appropriate responses. It is also important to recognize the limitations of these models and not rely on them as a substitute for human intelligence and expertise.

Oh, so we should use technology responsibly. I wonder what ChatGPT thinks we should do about that? I asked, “Given that humans have almost never used a new technology responsibly, what will be the likely outcome of this scenario?”

It replied:

While it is true that humans have often struggled to use new technologies responsibly in the past, it is important to recognize that each new technology is unique and may present its own set of risks and challenges. Therefore, it is difficult to predict with certainty what the outcome of a scenario involving a language model like ChatGPT would be.

That being said, there are steps that can be taken to minimize the potential negative effects of this technology. For example, developers and users of language models like ChatGPT can work to identify and address any biases or ethical concerns that may arise during the development and deployment of the technology. This could include implementing safeguards to prevent the spread of misinformation or inappropriate content, and ensuring that the model is trained on diverse and representative data.

Additionally, governments and regulatory bodies can play a role in ensuring that language models like ChatGPT are used responsibly and that appropriate safeguards are in place to protect the public. This may include regulations around the use of language models in specific industries or applications, and requirements for transparency and accountability in the development and deployment of these technologies.

In summary, while there are certainly risks associated with the use of language models like ChatGPT, it is possible to mitigate these risks through responsible development and deployment, as well as regulatory oversight and public awareness.

Oh wow, ChatGPT thinks it should be regulated? I emailed its creator, OpenAI, to ask whether they agree, and if not, then what do they know that their language model doesn’t? They haven’t replied as of this publication.

We’ve long been self-trained to just “Google the answer,” and now we may start training ourselves to “just ask ChatGPT.” However, it is important to remember that this tool is just sucking up some portion of the internet and regurgitating it back to you. ChatGPT can be a decent research tool, but it is garbage in and garbage out.

Like all technology, this is BYOB. Bring your own brain and don’t feed car batteries to the eels.

Copyright © 2023 IDG Communications, Inc.