SPONSORED BY IBM

Here at IBM, we have been researching and building artificial intelligence hardware and software for decades. We created Deep Blue, which beat the reigning chess world champion, and Watson, a question-answering system that won Jeopardy! against two of the leading champions over a decade ago. Our researchers haven’t been coasting on those wins; they’ve been building new language models and optimizing how they’re built and how they perform.

Obviously, the headlines around AI in the last few years have been dominated by generative AI and large language models (LLMs). While we’ve been working on our own models and frameworks, we’ve seen the impact these models have had, both good and bad. Our research has focused on how to make these technologies fast, efficient, and trustworthy. Both our partners and clients are looking to reinvent their own processes and experiences, and we’ve been working with them. As companies look to integrate generative AI into their products and workflows, we want them to be able to take advantage of our decade-plus experience commercializing IBM Watson and how it’s helped us build our business-ready AI and data platform, IBM watsonx.

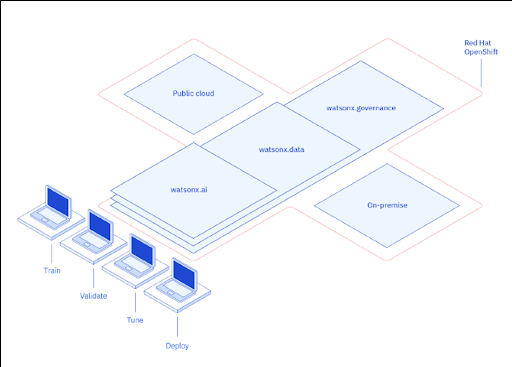

In this article, we’ll walk you through IBM’s AI development, nerd out on some of the details behind our new models and rapid inferencing stack, and take a look at IBM watsonx’s three components (watsonx.ai, watsonx.data, and watsonx.governance), which together form an end-to-end trustworthy AI platform.

Origin story

Around 2018, we started researching foundation models in general. It was an exciting time for these models, with lots of advances happening, and we wanted to see how they could be applied to business domains like financial services, telecommunications, and supply chain. As the work ramped up, we found many more interesting industry use cases based on years of ecosystem and client experience, and a group here decided to stand up a UI for LLMs to make them easier to explore. It was very cool to spend time researching this, but every conversation emphasized the importance of guardrails around AI for business.

Then in November 2022, OpenAI captured the public’s imagination with the release of ChatGPT. Our partners and clients were excited about the potential productivity and efficiency gains, and we began having more discussions with them around their AI for business needs. We set out to help clients capture this vast opportunity while keeping the principles of safety, transparency, and trust at the core of our AI projects.

In our research, we were both developing our own foundation models and testing existing ones from others, so our platform was intentionally designed to be flexible about the models it supports. But now we needed to add the ability to run inference, tuning, and other model customizations, as well as create the underlying AI infrastructure stack to build foundation models from scratch. For that, we needed to hook up a data platform to the AI front end.

The importance of data

Data landscapes are complex and siloed, preventing enterprises from accessing, curating, and gaining full value from their data for analytics and AI. The accelerated adoption of generative AI will only amplify these challenges as organizations require trusted data for AI.

With the explosion of data in today’s digital era, data lakes are prevalent but hard to scale efficiently. In our experience, data warehouses, especially in the cloud, are highly performant but are not the most cost effective. This is where the lakehouse architecture comes into play, based on price-performant open source and low-cost object storage. For our data component, watsonx.data, we wanted something that would be both fast and cost efficient to unify governed data for AI.

The open data lakehouse architecture has emerged in the past few years as a cloud-native solution to the limitations (and separation) of data lakes and data warehouses. The approach seemed the best fit for watsonx, as we needed a polyglot data store that could satisfy the different needs of different data consumers. With watsonx.data, enterprises can simplify their data landscape with the openness of a lakehouse to access all of their data through a single point of entry and share a single copy of data across multiple query engines. This helps optimize price performance, data de-duplication, and extract, transform, and load (ETL) work. Organizations can unify, explore, and prepare their data for the AI model or application of their choice.

Given IBM’s experience in databases with DB2 and Netezza, as well as in the data lake space with IBM Analytics Engine, BigSQL, and previously BigInsights, the lakehouse approach wasn’t a surprise, and we had been working on something in this vein for a few years. All things being equal, our clients would love to have a data warehouse hold everything and just let it grow bigger and bigger. Watsonx.data needed to be on cost-efficient, resilient commodity storage and handle unstructured data, as LLMs use a lot of raw text.

We brought in our database and data warehouse experts, who’ve been optimizing databases and analytical data warehouses for years, and asked, “What does a good data lakehouse look like?” Databases in general try to store data so that the bytes on disk can be queried quickly, while data lakehouses need to efficiently consume the current generation of bytes on disk. We also needed to optimize for data storage cost, and object storage offers that solution given the scale of its adoption and availability. But object stores don’t always have the best latency for the kind of high-frequency queries that AI applications require.

So how do we deliver better query performance on object storage? It’s basically a lot of clever caching on local NVMe drives. The object store that holds most of the data is relatively slow, measured in MBs per second, while the NVMe storage allows queries at GBs per second. Combine that with a database modified to use a private columnar table format and Presto using Parquet, and we get efficient table scanning and indices, so we can effectively compete with traditional warehouse performance, but tailored for AI workloads.
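To make the caching idea concrete, here is a minimal sketch of a read-through cache with least-recently-used eviction, where a small fast tier sits in front of a slow fetch. It is an illustration only, not the watsonx.data implementation: the class name `ReadThroughCache`, the `fetch` callable standing in for an object-store read, and the byte-budget policy are all assumptions.

```python
from collections import OrderedDict
from typing import Callable


class ReadThroughCache:
    """LRU read-through cache: a fast local tier in front of a slow store.

    Hypothetical sketch. `fetch` stands in for a slow object-store read;
    the in-memory dict stands in for local NVMe storage.
    """

    def __init__(self, fetch: Callable[[str], bytes], capacity_bytes: int) -> None:
        self._fetch = fetch
        self._capacity = capacity_bytes
        self._used = 0
        self._blocks: "OrderedDict[str, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> bytes:
        if key in self._blocks:
            # Cache hit: mark the block as most recently used.
            self._blocks.move_to_end(key)
            self.hits += 1
            return self._blocks[key]
        # Cache miss: pay the slow read once, then keep a local copy.
        self.misses += 1
        data = self._fetch(key)
        self._store(key, data)
        return data

    def _store(self, key: str, data: bytes) -> None:
        if len(data) > self._capacity:
            return  # Larger than the whole cache: serve it but do not keep it.
        # Evict least recently used blocks until the new block fits.
        while self._used + len(data) > self._capacity:
            _, evicted = self._blocks.popitem(last=False)
            self._used -= len(evicted)
        self._blocks[key] = data
        self._used += len(data)
```

Repeated reads of hot blocks are then served from the fast tier, and only first-time or evicted blocks go back to the slow store. A production cache would also need invalidation when the underlying objects change, which this sketch omits.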

With the database design, we also had to consider the infrastructure. Performance, reliability, and data integrity are easier to manage for a platform when you own and manage the infrastructure; that’s why so many of the AI-focused database providers run either in managed clouds or as SaaS. With a SaaS product, you can tune your infrastructure so it scales well in a cost-effective manner.

But IBM has always been a hybrid company: not just SaaS, not just on-premises. Many enterprise companies don’t feel comfortable placing their mission-critical data in someone else’s datacenter. So we have to design for client-managed on-prem instances.

When we design for client-managed watsonx installs, we have a fascinating set of challenges from an engineering perspective. The instance could be a really small proof of concept, or it could scale to enterprise requirements around zero downtime, disaster recovery backups, and multi-site resiliency: all the things an enterprise-scale business needs from a reliable platform. But all of them necessarily require compatibility with an infrastructure architecture we don’t control. We have to provide the capabilities that the client wants in the form factor that they require.

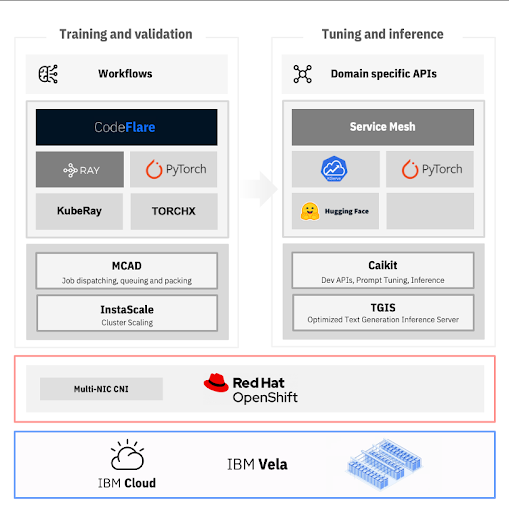

Key to providing reliability and consistency across instance types has been our support of open-source technologies. We’re all-in on PyTorch for model training and inference, and have been contributing our hardware abstraction and optimization work back into the PyTorch ecosystem. Additionally, Ray and CodeFlare have been instrumental in scaling these ML training workloads. KServe/ModelMesh/Service Mesh, Caikit, and Hugging Face Transformers helped with tuning and serving foundation models. And everything, from training to client-managed installs, runs on Red Hat OpenShift.

We were talking to a client the other day who saw our open-source stack and said, “It sounds like I could `pip install` a bunch of stuff and get to where you are.” We thought this through. Sure, you might be able to get your “Hello World” up, but are you going to cover scaling, high availability, self-serve by non-experts, access control, and address every new CVE?

We’ve been focusing on high-availability SaaS for about a decade, so we wake up in the morning, sip our coffee, and think about complex systems in network-enabled environments. Where are you storing state? Is it protected? Will it scale? How do I avoid carrying too much state around? Where are the bottlenecks? Are we exposing the right embedding endpoints without leaving open backdoors?

Another one of the design tenets was creating a paved road for clients. The notion of a paved road is that we’re bringing our multi-enterprise experience to bear here, and we’re going to create the smoothest possible path to the end result for our clients’ unique objectives.

Building LLMs, dynamic inference, and optimization

Part of our paved road philosophy involves supplying foundation models, dynamic inferencing, and optimizations that we could stand behind. You can use any model you want from our foundation model library in watsonx, including Llama 2, StarCoder, and other open models. In addition to these well-known open models, we offer a complete AI stack that we’ve built based on years of our own research.

Our model team has been developing novel architectures that advance the state of the art, as well as building models using proven architectures. They’ve come up with a number of business-focused models that are currently or will soon be available on watsonx.ai:

- The Slate family are 153-million parameter multilingual non-generative encoder-only models based on the RoBERTa approach. While not designed for language generation, they efficiently analyze language for sentiment analysis, entity extraction, relationship detection, and classification tasks.

- The Granite models are based on a decoder-only architecture and are trained on enterprise-relevant datasets from five domains: internet, academic, code, legal, and finance, all scrutinized to root out objectionable content and benchmarked against internal and external models.

- Next year, we’ll be adding Obsidian models that use a new modular architecture developed by IBM Research and designed to provide highly efficient inferencing. Our researchers are continuously working on other innovations such as modular architectures.

We say that these are models, plural, because we’re building focused models that have been trained on domain-specific data, including code, geospatial data, IT events, and molecules. For this training, we used our years of experience building AI supercomputers like Deep Blue, Watson, and Summit to create Vela, a cloud-native, AI-optimized supercomputer. Training multiple models was made easier thanks to the “LiGO” algorithm we developed in partnership with MIT. It uses multiple small models to build into larger models that take advantage of emergent LLM abilities. This method can save 40 to 70% of the time, cost, and carbon output required to train a model.

As we see how generative AI is used in the enterprise, up to 90% of use cases involve some variant of retrieval-augmented generation (RAG). We found that even in our research on models, we had to be open to a variety of embeddings and data when it came to RAG and other post-training model customization.

Once we started applying models to our clients’ use cases, we realized there was a gap in the ecosystem: there wasn’t a good inferencing server stack in the open. Inferencing is where any generative AI spends most of its time, and therefore energy, so it was important to us to have something efficient. Once we started building our own, the Text Generation Inferencing Service (TGIS), forked from Text Generation Inference, we found the hard problem around inferencing at scale is that requests come in at unpredictable times and GPU compute is expensive. You can’t have everybody line up perfectly and submit serial requests to be processed in order. No, the server would be halfway through one inferencing request doing GPU work and another request would come in. “Hello? Can I start doing some work, too?”

When we implemented batching on our end, we made it dynamic and continuous to make sure that the GPU was fully utilized at all times.

A fully utilized GPU here can feel like magic. Given intermittent arrival rates, different sizes of requests, different preemption rates, and different finishing times, the GPU can lie dormant while the rest of the system figures out which request to handle. When it takes maybe 50 milliseconds for the GPU to do something before you get your next token, we wanted the CPU to do as much smart scheduling as possible to make sure those GPUs were doing the right work at the right time. So the CPU isn’t just queueing requests up locally; it’s advancing the processing token by token.
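The token-by-token scheduling described above can be sketched as a small simulation. This is a toy model under stated assumptions, not the TGIS scheduler: the `Request` fields, the fixed `max_batch_size`, and the idea that one loop iteration equals one GPU decode step are all hypothetical simplifications, and preemption is left out.

```python
from collections import deque
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Request:
    name: str
    arrival_step: int   # decode step at which the request shows up
    tokens_needed: int  # tokens to generate before the request is done
    tokens_done: int = 0


def continuous_batching(requests: List[Request], max_batch_size: int) -> Dict[str, int]:
    """Simulate continuous batching; return the step at which each request finishes."""
    pending = deque(sorted(requests, key=lambda r: r.arrival_step))
    waiting: deque = deque()
    active: List[Request] = []
    finished: Dict[str, int] = {}
    step = 0
    while pending or waiting or active:
        # Admit requests that have arrived by this step.
        while pending and pending[0].arrival_step <= step:
            waiting.append(pending.popleft())
        # Fill free batch slots right away instead of waiting for the batch to drain.
        while waiting and len(active) < max_batch_size:
            active.append(waiting.popleft())
        # One decode step: every active request advances by one token.
        # (Stand-in for a single batched GPU forward pass.)
        for req in active:
            req.tokens_done += 1
        step += 1
        # Retire finished requests so their slots free up for the next step.
        for req in active:
            if req.tokens_done >= req.tokens_needed:
                finished[req.name] = step
        active = [r for r in active if r.tokens_done < r.tokens_needed]
    return finished
```

In this sketch a short request that arrives mid-flight joins the batch as soon as a slot opens, rather than waiting for the longest request in the current batch to complete, which is what keeps the simulated GPU busy.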

If you’ve studied how compilers work, then this magic may seem a little more grounded in engineering and math. These requests and nodes to schedule can be treated like a graph. From there you can prune the graph, collapse or combine nodes, and perform other optimizations, like speculative decoding, that reduce the amount of matrix multiplication the GPUs have to tackle.

These optimizations have given us improvements we can measure easily in the number of tokens per second. We’re working on stable quantization that reduces the size and cost of inferencing in a relatively lossless way, but the challenge has been getting all these optimizations into a single inference stack where they don’t cancel each other out. That’s happened: we’ve contributed a bunch to PyTorch optimization and then found a model quantization that gave us a great improvement.
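For readers unfamiliar with quantization, the basic trade-off can be shown with a minimal sketch of symmetric 8-bit quantization: weights are stored as small integers plus one scale factor, and the rounding error is bounded by half a quantization step. This is a generic textbook scheme chosen for illustration, not the quantization method used in our stack, and the function names are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One shared scale maps the largest magnitude onto 127.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]


weights = [0.5, -1.0, 0.25, 0.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
# Worst-case rounding error is about half of one quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Each weight shrinks from a 32-bit float to an 8-bit integer, at the cost of a small, bounded approximation error.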

We’ll continue to push the boundaries on inferencing performance, as it makes our platform a better business value. The reality is, though, that the inferencing space is going to commoditize pretty quickly. The real value to businesses will be how well they understand and control their AI products, and we think this is where watsonx.governance will make all the difference.

Governance

We’ve always strongly believed in the power of research to help ensure that any new capabilities we offer are trustworthy and business-ready. But when ChatGPT was released, the genie was out of the bottle. People were immediately using it in business situations and becoming very excited about the possibilities. We knew we had to build on our research and capabilities around ways to mitigate LLM downsides with proper governance: reducing things like hallucinations, bias, and the black box nature of the process.

With any business tool built on data, partners and clients have to be concerned about the risks involved in using these tools, not just the rewards. They’ll need to meet regulatory requirements around data use and privacy like GDPR and auditing requirements for processes like SOC 2 and ISO 27001, anticipate compliance with future AI-focused regulation, and mitigate ethical concerns like bias and legal exposure around copyright infringement and license violations.

For those of us working on watsonx, giving our clients confidence starts with the data that we use to train our foundation models. One of the things that IBM established early on with our partnership with MIT was a really large curated data set that we could train our Granite and other LLMs on while reducing the legal risk associated with using them. As a company, we stand by this: IBM provides IP indemnification for IBM-developed watsonx AI models.

One of the big use cases for generative AI is code generation. Like a lot of models, ours are trained on the GitHub Clean dataset, and we have a purpose-built Code Assistant as part of watsonx.

With such large data sets, LLMs are prone to baking human biases into their model weights. Biases and other unfair outcomes don’t show up until you start using the model. At that point, it’s very expensive to retrain a model to work out the biased training data. Our research teams have come up with a number of debiasing methods. Here are just two of the approaches we use to eliminate biases from our models.

The Fair Infinitesimal Jackknife technique improves fairness by, in principle, simply dropping carefully chosen training data points, but without refitting the model. It uses a version of the jackknife technique adapted to machine learning models, which leaves out an observation from the calculations and aggregates the remaining results. This simple statistical tool greatly increases fairness without affecting the results the model provides.
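As background, the classical leave-one-out jackknife that the technique builds on can be sketched in a few lines. To be clear, this is the textbook statistical tool applied to a plain statistic, not the Fair Infinitesimal Jackknife itself, which approximates the effect of dropping points on a trained model without recomputing anything from scratch. The function name and return shape are assumptions made for illustration.

```python
from statistics import mean
from typing import Callable, List, Sequence, Tuple


def jackknife(
    values: Sequence[float],
    statistic: Callable[[Sequence[float]], float],
) -> Tuple[List[float], List[float], float]:
    """Classical leave-one-out jackknife.

    Returns the leave-one-out replicates, each point's influence on the
    statistic (full value minus the value without that point), and the
    jackknife standard error of the statistic.
    """
    n = len(values)
    full = statistic(values)
    # Recompute the statistic n times, each time leaving one observation out.
    replicates = [statistic(list(values[:i]) + list(values[i + 1:])) for i in range(n)]
    influence = [full - r for r in replicates]
    center = mean(replicates)
    variance = (n - 1) / n * sum((r - center) ** 2 for r in replicates)
    return replicates, influence, variance ** 0.5


replicates, influence, std_error = jackknife([1.0, 2.0, 3.0, 4.0], mean)
```

The `influence` list shows how much each observation pulls the statistic, which is the intuition behind picking which training points to drop. The explicit loop here costs n recomputations, which is exactly the expense an infinitesimal approximation is meant to avoid for large models.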

The FairReprogram approach likewise doesn’t try to modify the base model; it considers the weights and parameters fixed. When the LLM produces something that trips the unfairness trigger, FairReprogram introduces false demographic information into the input that can effectively obscure demographic biases by preventing the model from using the biased demographic information to make predictions.

These interventions, while we think they make our AI and data platform more trustworthy, don’t let you audit how the AI produced a result. For that, we extended our OpenScale platform to cover generative AI. This helps provide explainability that’s been missing from a lot of generative AI tools, so you can see what’s happening in the black box. There’s a ton of information we provide: confusion and confidence scores to see if the model analyzed your transaction correctly, references to the training data, views of what this result would look like after debiasing, and more. Testing for generative AI errors isn’t always easy and can involve statistical analyses, so being able to trace errors more efficiently lets you correct them better.

What we said earlier about being a hybrid cloud company and allowing our clients to swap in models and pieces of the stack applies to governance as well. Clients may pick a bunch of disparate technologies and expect them all to work. They expect our governance tools to work with any given LLM, including custom ones. The odds of someone picking a stack entirely composed of technology we’ve tested ahead of time are pretty slim. So we had to take a look at our interfaces and make sure they were widely adoptable.

From an architecture perspective, this meant separating the pieces out and abstracting the interfaces to them: this piece does inference, this does code, and so on. We also couldn’t assume that any payloads would be forwarded to governance by default. The inference and monitoring paths have to be separated out, so if there are shortcuts available for data gathering, that’s great. But if not, there are ways to register intent. We’re working with major providers so that we know about any payload hooks in their tech. But a client using something custom or boutique may have to do some stitching to get everything working. The worst-case scenario is that you manually call governance after LLM calls to let it know what the AI is doing.
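The worst-case "manual stitching" pattern can be sketched as a thin wrapper that reports each call to a governance sink after the model responds. Everything here is hypothetical: `InMemoryGovernanceLog`, `with_governance`, and the payload fields are illustrative stand-ins, not the watsonx.governance API.

```python
import time
from typing import Callable, Dict, List


class InMemoryGovernanceLog:
    """Hypothetical governance sink; a real one would send payloads to a service."""

    def __init__(self) -> None:
        self.records: List[Dict] = []

    def record(self, payload: Dict) -> None:
        self.records.append(payload)


def with_governance(
    generate: Callable[[str], str],
    log: InMemoryGovernanceLog,
    model_id: str,
) -> Callable[[str], str]:
    """Wrap any text-generation callable so each call is reported afterwards."""

    def wrapped(prompt: str) -> str:
        started = time.perf_counter()
        output = generate(prompt)  # inference path
        payload = {
            "model_id": model_id,
            "prompt": prompt,
            "output": output,
            "latency_seconds": time.perf_counter() - started,
        }
        try:
            log.record(payload)  # monitoring path, kept separate from inference
        except Exception:
            # Assumption: a monitoring failure should not break inference.
            pass
        return output

    return wrapped


# Usage with a stand-in model (any callable from prompt to text works).
log = InMemoryGovernanceLog()
governed = with_governance(lambda prompt: prompt.upper(), log, model_id="custom-llm")
answer = governed("hello")
```

Because the wrapper only needs a callable and a sink, the same stitching works for a custom or boutique model that exposes no payload hooks of its own.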

We know that professional developers are a little wary about generative AI (and Stack Overflow does too), so we believe that any AI platform should be open and transparent in how it’s created and run. Our commitment to open source is part of that, but so is our commitment to lowering the risk of adopting these powerful new generative tools. AI you can trust doing business with.

The possibilities of generative AI are very exciting, but the potential pitfalls can keep businesses from adopting it. We at IBM built all three components of watsonx (watsonx.ai, watsonx.data, and watsonx.governance) to be something our enterprise clients could trust and that wouldn’t be more trouble than it’s worth.

Build with IBM watsonx today

Whether you’re an up-and-coming developer or a seasoned veteran, learn how you can start building with IBM watsonx today.