Samsung’s Space Zoom is once again under fire, with accusations that the company is “faking” photos of the moon captured by the Galaxy S23 Ultra. This is nothing new: Space Zoom debuted on the Galaxy S20 Ultra in 2020, and its eye-popping 100x zoom, which produces crystal-clear photos of the moon on par with (or better than) far more expensive telescopes or cameras, was immediately called into question.

The latest round of controversy started on Reddit, with a post by user “ibreakphotos” seeking to prove that Samsung’s software is adding details to these moon photos that aren’t present at the time of capture. As someone who enjoys mobile astrophotography, I wanted to take a closer look at these new claims and clear up some of the confusion around them.

That’s no moon (shot)

One problem with this controversy is defining exactly what it would mean to be “faking” the moon photos. Samsung applies AI and machine learning (ML) to Space Zoom photos in order to produce clear images of the moon. That point is not in dispute.

Input covered this extensively in a 2021 deep dive on Space Zoom. Samsung explained that the feature uses Scene Identifier to recognize the moon based on AI model training, then reduces blur and noise and applies Super Resolution processing across multiple captures, similar to HDR processing. For what it’s worth, Input ultimately concluded that Samsung wasn’t “faking” the photos of the moon.

The claim ibreakphotos makes in their recent Reddit thread is that Samsung is applying textures to the photos that the phone never actually captured. They tested this by taking a low-resolution (170 x 170 pixel) image of the moon and applying a Gaussian blur to eliminate almost all detail from it, then displaying it on a monitor, turning off all their lights and snapping a photo of it using Space Zoom. Below is the side-by-side of the source and the final result that they posted.
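For anyone who wants to try the test themselves, the image preparation boils down to two steps: shrink a moon photo to 170 x 170 pixels, then Gaussian-blur it. The Python sketch below (standard library only) shows those two steps on a grayscale image stored as a list of rows. It is an illustration under stated assumptions, not ibreakphotos’ actual workflow: we don’t know the exact blur strength used in the thread, so `sigma` is a placeholder, the nearest-neighbour resize is just one of many possible choices, and every function name here is hypothetical.

```python
import math


def gaussian_kernel(sigma):
    """1D Gaussian weights, truncated at 3 sigma and normalized to sum to 1."""
    radius = max(1, math.ceil(3 * sigma))
    weights = [math.exp(-(x * x) / (2 * sigma * sigma)) for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def downscale(image, size):
    """Nearest-neighbour resize of a grayscale image (a list of rows) to size x size."""
    h, w = len(image), len(image[0])
    return [[image[r * h // size][c * w // size] for c in range(size)] for r in range(size)]


def blur_rows(image, kernel):
    """Convolve every row with the 1D kernel, repeating edge pixels past the border."""
    radius = len(kernel) // 2
    width = len(image[0])
    out = []
    for row in image:
        new_row = []
        for c in range(width):
            acc = 0.0
            for k, weight in enumerate(kernel):
                src = min(max(c + k - radius, 0), width - 1)  # clamp to the image edge
                acc += weight * row[src]
            new_row.append(acc)
        out.append(new_row)
    return out


def transpose(image):
    return [list(column) for column in zip(*image)]


def gaussian_blur(image, sigma):
    """Separable 2D Gaussian blur: blur the rows, then blur the columns."""
    kernel = gaussian_kernel(sigma)
    horizontal = blur_rows(image, kernel)
    return transpose(blur_rows(transpose(horizontal), kernel))


# Hypothetical stand-in for a moon photo: 510 x 510 pixels of 3 x 3 light/dark
# blocks, which the resize turns into a 1-pixel checkerboard of "fine detail".
source = [[255 if (r // 3 + c // 3) % 2 else 0 for c in range(510)] for r in range(510)]
small = downscale(source, 170)
blurred = gaussian_blur(small, sigma=2.0)  # sigma is an assumed blur strength

# Measure contrast away from the borders, where edge clamping doesn't interfere.
before = max(map(max, small)) - min(map(min, small))
interior = [v for row in blurred[20:150] for v in row[20:150]]
after = max(interior) - min(interior)
print(f"contrast before blur: {before}, after blur: {after:.4f}")
```

The checkerboard stands in for fine crater detail. After the blur its contrast collapses to a tiny fraction of one gray level, which is the point of the test: any crisp surface detail in a Space Zoom shot of such an image would have to come from somewhere other than the screen.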

(Image credit: Reddit/ibreakphotos)

Clearly, this is a startling result, and it would certainly support the claims made in the thread, since it would be impossible for the phone to resolve the detail shown on the right from the image on the left. However, I’ve had no luck recreating these results using the same original image, and more than half a dozen colleagues have also tried and failed to get anything close to this result. One sample side-by-side is below; as you can see, it is at best slightly less blurry than the original.

(Image credit: ibreakphotos/Laptop Mag)

Now, this isn’t necessarily to say that they’re lying about their results, merely that we have been unable to replicate them. We don’t know every variable in their testing, so perhaps something is throwing it off. Are we on the same version of One UI and Samsung’s camera software? Are our rooms slightly brighter or darker than theirs? Did we apply precisely the same amount of Gaussian blur to the image? Could the monitor itself make a difference in the result?

I can only say that in my testing and that of my colleagues, a blurry moon stays blurry. Ultimately, I think the bigger question this raises is how much AI enhancement you consider acceptable before a photo becomes “fake.”

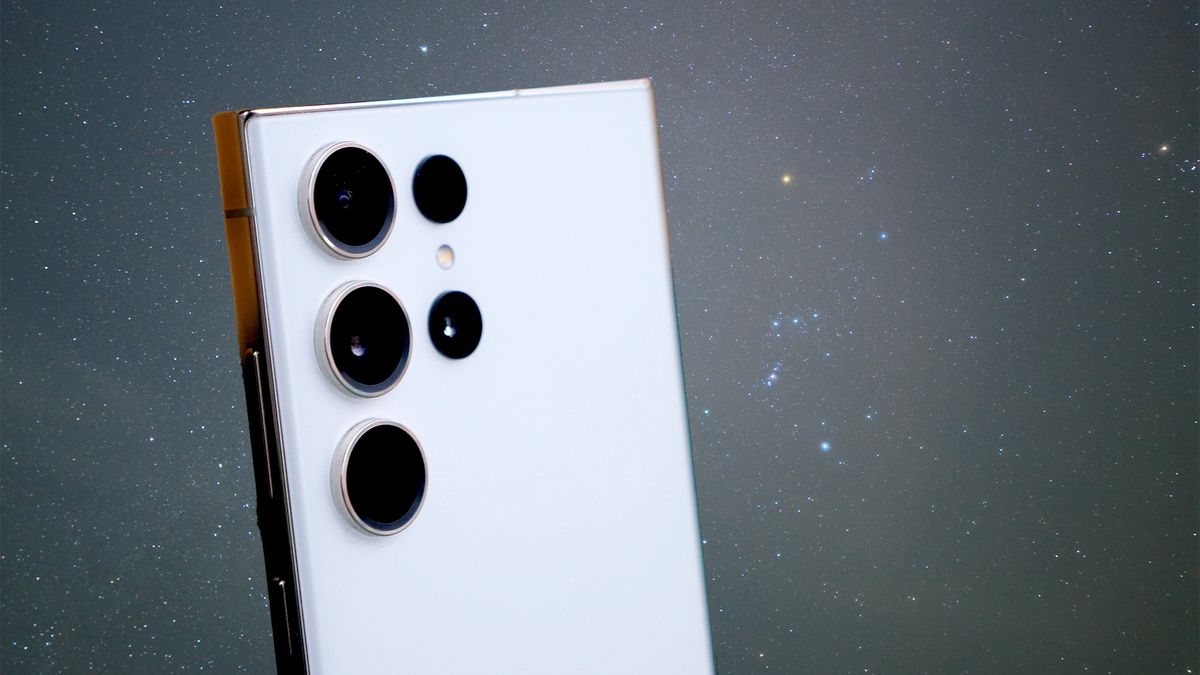

(Image credit: Laptop Mag/Sean Riley)

Where’s the line between “real” and “fake” with AI-enhanced photography?

Computational photography, enhanced by AI and ML, has completely transformed mobile photography over the last several years. Google essentially built the Pixel on it, with each phone producing photos far superior to what you’d expect from its hardware. Now every mobile chipset maker focuses heavily on AI performance to improve and speed up our phones’ ability to produce excellent photos (among numerous other AI-based tasks).

Features like portrait mode give you results that the camera’s optics aren’t actually producing: the background blur is computed rather than created by the lens. Do you care that the blur is artificial if it gives you a great photo that you want to share? Is that “fake”?

What about HDR modes or Night modes? These capture multiple photos and blend them together. Once again, if the resulting shot is closer to what you wanted to capture, isn’t that ultimately the point?
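The core multi-frame idea behind these modes can be shown with a toy simulation. The Python sketch below assumes the frames are already perfectly aligned and equally exposed, and that sensor noise is independent Gaussian noise; real pipelines from Samsung, Google and others also align frames, reject motion and merge different exposures, none of which is modelled here. Averaging N independent frames cuts random noise by roughly √N, so a 16-frame burst should end up with about a quarter of the single-frame error. The function names and numbers are illustrative assumptions.

```python
import random


def capture(scene, noise_sigma, rng):
    """Simulate one noisy exposure: the true pixel values plus Gaussian sensor noise."""
    return [p + rng.gauss(0.0, noise_sigma) for p in scene]


def stack(frames):
    """Merge already-aligned frames by averaging each pixel position."""
    return [sum(values) / len(values) for values in zip(*frames)]


def rms_error(estimate, truth):
    """Root-mean-square difference between an estimate and the true scene."""
    return (sum((e - t) ** 2 for e, t in zip(estimate, truth)) / len(truth)) ** 0.5


rng = random.Random(42)  # fixed seed so the run is repeatable
scene = [rng.uniform(0, 255) for _ in range(10_000)]  # a flattened toy "image"
noise_sigma = 20.0  # assumed per-frame noise level

single = capture(scene, noise_sigma, rng)
burst = [capture(scene, noise_sigma, rng) for _ in range(16)]
merged = stack(burst)

single_err = rms_error(single, scene)
merged_err = rms_error(merged, scene)
print(f"one frame: {single_err:.1f}, 16 frames merged: {merged_err:.1f}")
```

Nothing in the merged frame is invented: every pixel is an average of real captures, which is part of why multi-frame stacking tends to feel less “fake” than features that synthesize detail.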

(Image credit: Laptop Mag/Sean Riley)

Taking it further, what about Google’s Magic Eraser? You are completely removing elements of the photo that you actually captured, but presumably it leaves you with a result you like better.

Now, I can appreciate that some users want a photo to capture a scene exactly as they saw it, without any embellishment. If that’s you, Samsung’s Expert RAW is what you’re looking for.

However, most users are simply taking snapshots of their lives and the world around them, and the brilliance of having an excellent camera on their phone is that it’s always with them and reliably captures the best photo possible in as little time as possible.

From what I’ve seen and what I’ve been able to reproduce myself, Samsung is heavily applying AI enhancements to photos of the moon without replacing them entirely. Whether you deem that “fake” or not is up to you.