In an open letter to the AI community, a group of renowned AI researchers, joined by Elon Musk and other tech luminaries, called upon AI labs to pause training on large-scale AI projects that are more powerful than GPT-4, the tech behind ChatGPT and many other popular AI chatbots.

The Future of Life Institute doesn’t mince words in its letter, opening with the warning that “AI systems with human-competitive intelligence can pose profound risks to society and humanity.” It goes on to warn that the development of these systems requires proper planning and management, which isn’t happening in the current “out-of-control race to develop and deploy ever more powerful digital minds that no one – not even their creators – can understand, predict, or reliably control.”

Risky AI business

The researchers aren’t asking for all AI research to come to a halt, and they aren’t trying to put themselves out of jobs. Rather, they want a six-month pause on what they call the “ever-larger unpredictable black-box models with emergent capabilities.” During that time, AI labs and independent experts would work together to create safety standards for AI development that can be “audited and overseen by independent outside experts” to ensure AI advancement is properly managed.

OpenAI, the company behind ChatGPT, acknowledged some of these concerns in its recent statement about artificial general intelligence. The company said that “At some point, it may be important to get independent review before starting to train future systems, and for the most advanced efforts to agree to limit the rate of growth of compute used for creating new models.” Where they differ is that the Future of Life Institute feels we’re already at that inflection point.

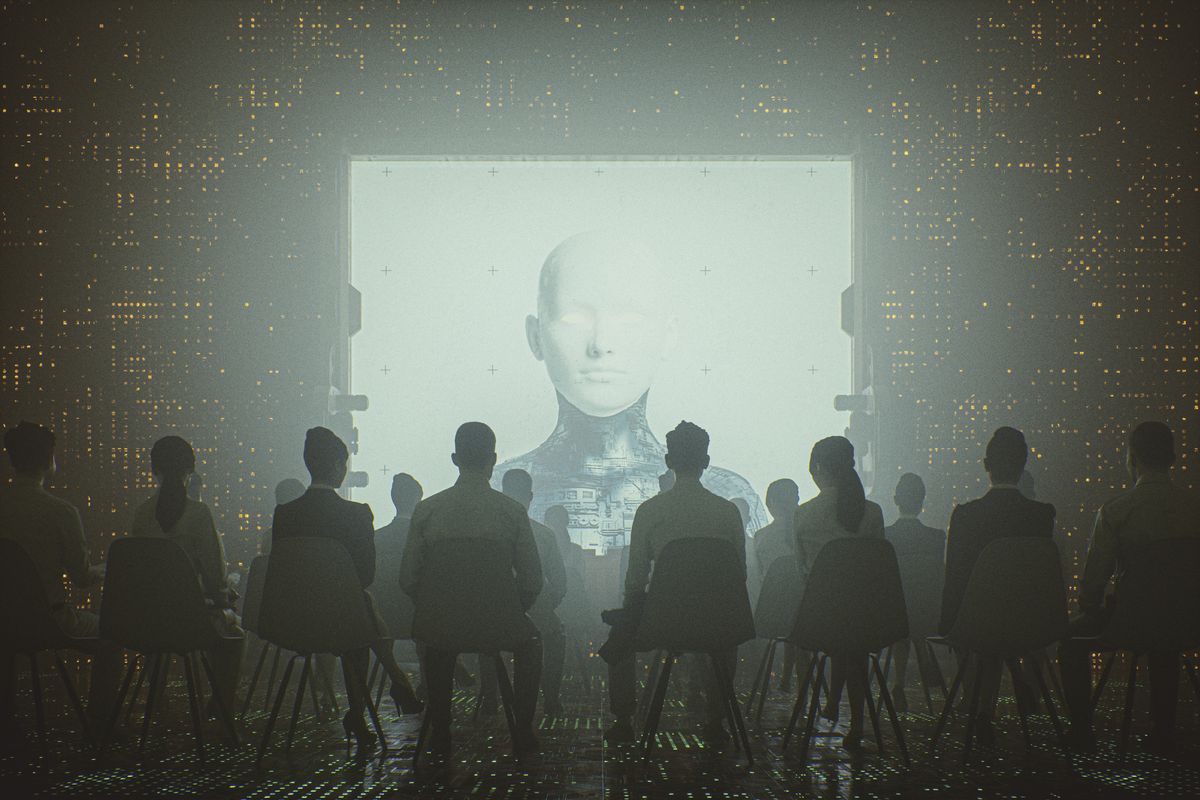

It’s hard not to summon thoughts of apocalyptic AIs from popular culture in this discussion. Whether it’s Skynet from the Terminator series or the AI from The Matrix, the idea of an AI gaining sentience and waging war on or enslaving humanity has been a popular theme of apocalyptic science fiction for decades.

We aren’t there yet, but that’s precisely the level of concern being raised here. The researchers are warning against letting this runaway train build up enough speed that we can’t possibly stop it.

These AI projects feel like fun and useful tools, and the Future of Life Institute wants them to remain exactly that. By pausing them now and stepping back to consider the long-term goals and development strategy behind them, the institute argues, we can reap the benefits of these tools without disastrous consequences.