Scaling GNNs

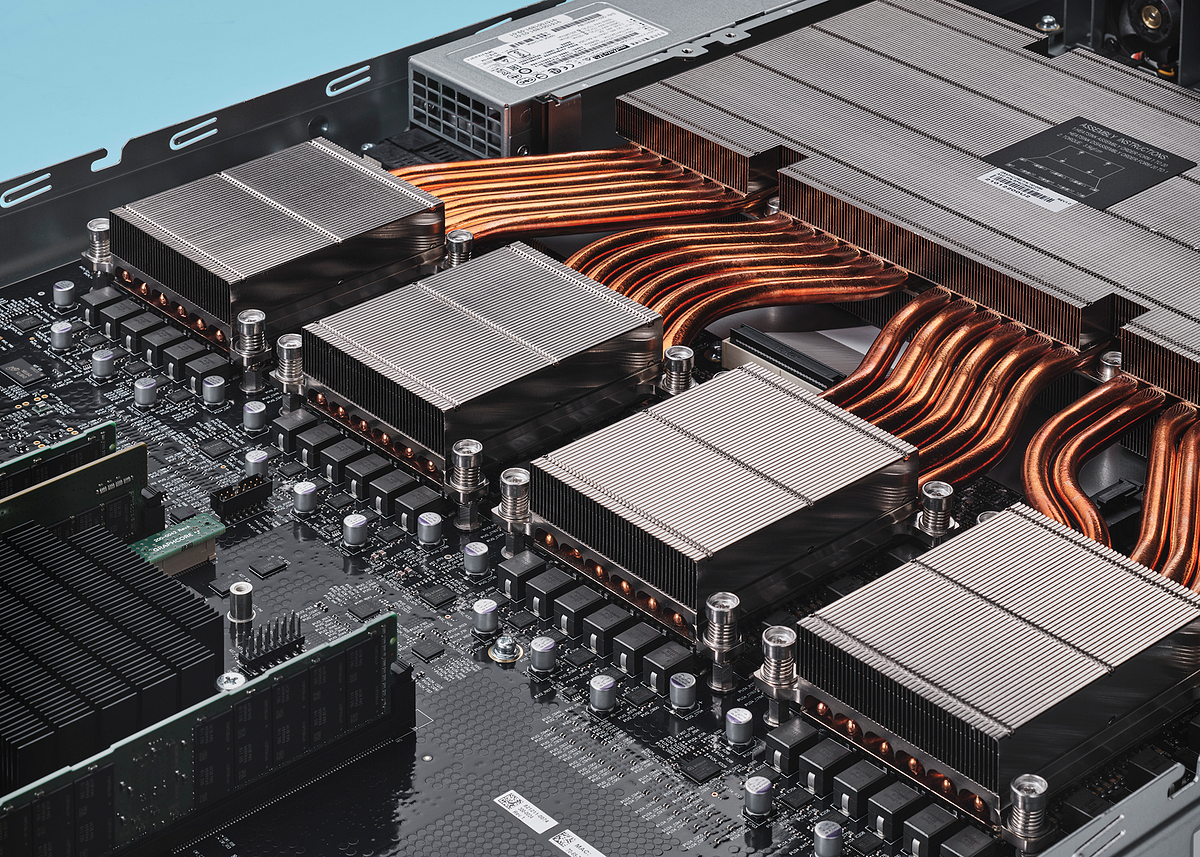

The suitability of standard hardware for Graph Neural Networks (GNNs) is an often overlooked issue in the Graph ML community. In this post, we explore the implementation of Temporal GNNs on a new hardware architecture developed by Graphcore that is tailored to graph-structured workloads.

This post was co-authored with Emanuele Rossi and Daniel Justus and is based on a collaboration with the British semiconductor company Graphcore.

Graph-structured data arise in many problems dealing with complex systems of interacting entities. In recent years, approaches that apply machine learning to graph-structured data, in particular Graph Neural Networks (GNNs), have seen a vast growth in popularity.

The majority of GNN architectures assume that the graph is fixed. However, this assumption is often too simplistic: in many applications the underlying system is dynamic, so the graph changes over time. This is the case, for example, in social networks or recommendation systems, where the graphs describing user interaction with content can change in real time. Several GNN architectures capable of dealing with dynamic graphs have recently been developed, including our own Temporal Graph Networks (TGNs) [1].

In this post, we explore the application of TGNs to dynamic graphs of different sizes and study the computational complexities of this class of models. We use Graphcore’s Bow Intelligence Processing Unit (IPU) to train TGNs and demonstrate why the IPU’s architecture is well suited to address these complexities, leading to up to an order of magnitude speedup when comparing a single IPU processor to an NVIDIA A100 GPU.

The TGN architecture, described in detail in our previous post, consists of two major components. First, node embeddings are generated via a classical graph neural network architecture, here implemented as a single-layer graph attention network [2]. Second, TGN keeps a memory summarizing all past interactions of each node. This storage is accessed by sparse read/write operations and updated with new interactions using a Gated Recurrent Unit (GRU) [3].
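The memory component can be illustrated with a minimal NumPy sketch. This is not the TGN implementation: the class name `NodeMemory`, the random weight initialisation, the message format, and the particular GRU formulation are assumptions made for illustration. It only shows the pattern the text describes, a sparse read of the rows for the nodes in a batch, a GRU update, and a sparse write back.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class NodeMemory:
    """Hypothetical per-node memory updated by a GRU cell (illustrative only)."""

    def __init__(self, num_nodes, mem_dim, msg_dim, seed=0):
        rng = np.random.default_rng(seed)
        # One state vector per node, initialised to zero.
        self.state = np.zeros((num_nodes, mem_dim))
        # GRU weights for the update gate (0), reset gate (1) and candidate (2).
        self.W = rng.normal(0.0, 0.1, (3, msg_dim, mem_dim))
        self.U = rng.normal(0.0, 0.1, (3, mem_dim, mem_dim))
        self.b = np.zeros((3, mem_dim))

    def update(self, node_ids, messages):
        """Update the memory rows of `node_ids` (assumed unique) with `messages`."""
        h = self.state[node_ids]  # sparse read (gather)
        z = sigmoid(messages @ self.W[0] + h @ self.U[0] + self.b[0])
        r = sigmoid(messages @ self.W[1] + h @ self.U[1] + self.b[1])
        n = np.tanh(messages @ self.W[2] + (r * h) @ self.U[2] + self.b[2])
        new_h = (1.0 - z) * n + z * h
        self.state[node_ids] = new_h  # sparse write (scatter)
        return new_h


memory = NodeMemory(num_nodes=5, mem_dim=4, msg_dim=3)
updated = memory.update(np.array([1, 3]), np.ones((2, 3)))
print(updated.shape)
```

Only the rows listed in `node_ids` are read and written, so the cost of one update depends on the batch size rather than on the total number of nodes.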

We focus on graphs that change over time by gaining new edges. In this case, the memory of a given node contains information on all edges that target this node as well as their respective destination nodes. Through indirect contributions, the memory of a given node can also hold information about nodes further away, which makes additional layers in the graph attention network dispensable.

We first experiment with TGN on the JODIE Wikipedia dataset [4], a bipartite graph of Wikipedia articles and users, where each edge between a user and an article represents an edit of the article by the user. The graph consists of 9,227 nodes (8,227 users and 1,000 articles) and 157,474 time-stamped edges annotated with 172-dimensional LIWC feature vectors [5] describing the edit.

During training, edges are inserted batch by batch into the initially disconnected set of nodes, while the model is trained using a contrastive loss on true edges and randomly sampled negative edges. Validation results are reported as the probability of identifying the true edge over a randomly sampled negative edge.
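A minimal sketch of this training signal and validation measure is given below. It assumes the contrastive loss is a binary cross-entropy on raw edge scores and that negatives are obtained by drawing a uniformly random destination node per true edge; both are assumptions for illustration, and all function names are hypothetical.

```python
import math
import random


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def contrastive_loss(pos_scores, neg_scores):
    """Binary cross-entropy on raw scores: true edges labelled 1, negatives 0."""
    # -log(sigmoid(s)) == softplus(-s) and -log(1 - sigmoid(s)) == softplus(s)
    losses = [softplus(-s) for s in pos_scores] + [softplus(s) for s in neg_scores]
    return sum(losses) / len(losses)


def sample_negative_destinations(num_edges, num_nodes, rng):
    """Draw one uniformly random destination node per true edge (assumed scheme)."""
    return [rng.randrange(num_nodes) for _ in range(num_edges)]


def pairwise_accuracy(pos_scores, neg_scores):
    """Share of (true, negative) pairs in which the true edge scores higher."""
    wins = sum(1 for p, n in zip(pos_scores, neg_scores) if p > n)
    return wins / len(pos_scores)


rng = random.Random(0)
negative_dst = sample_negative_destinations(num_edges=4, num_nodes=9227, rng=rng)
print(len(negative_dst))
print(contrastive_loss([2.0, 1.5], [-1.0, 0.5]))
print(pairwise_accuracy([2.0, 1.5], [-1.0, 0.5]))
```

Under this reading, a model that scores edges at random would reach a pairwise accuracy of about 0.5, which is the baseline against which the validation numbers can be judged.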

Intuitively, a large batch size has detrimental consequences for training as well as inference: the node memory and the graph connectivity are both updated only after a full batch is processed. The later events within a batch may therefore rely on outdated information, since they are not aware of earlier events in the same batch. Indeed, we observe an adverse effect of large batch sizes on task performance, as shown in the following figure.
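One rough way to see how quickly this staleness grows with batch size is to count the events that touch a node already seen earlier in the same batch. The helper below is a hypothetical diagnostic, not part of TGN, and it treats an event as stale whenever either endpoint has already appeared in the batch.

```python
def stale_fraction(events, batch_size):
    """Share of events touching a node that appeared earlier in the same batch."""
    if not events:
        return 0.0
    stale = 0
    for start in range(0, len(events), batch_size):
        seen = set()
        for src, dst in events[start:start + batch_size]:
            if src in seen or dst in seen:
                # The memory of this node does not yet reflect the earlier event.
                stale += 1
            seen.update((src, dst))
    return stale / len(events)


# Toy chronological event list of (source, destination) pairs.
events = [(0, 1), (2, 3), (0, 2), (4, 5)]
for batch_size in (1, 2, 4):
    print(batch_size, stale_fraction(events, batch_size))
```

With a batch size of 1 no event can be stale, while larger batches make it increasingly likely that an event reads memory that predates another event in the same batch.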

Nevertheless, the use of small batches emphasizes the importance of fast memory access for achieving high throughput during training and inference. The IPU, with its large In-Processor-Memory, therefore demonstrates an increasing throughput advantage over GPUs as the batch size shrinks, as shown in the following figure. In particular, with a batch size of 10, TGN can be trained about 11 times faster on the IPU, and even with a large batch size of 200, training is still about 3 times faster on the IPU.

To better understand the improved throughput of TGN training on Graphcore’s IPU, we investigate the time spent by the different hardware platforms on the key operations of TGN. We find that the time spent on the GPU is dominated by the attention module and the GRU, two operations that are performed more efficiently on the IPU. Moreover, across all operations, the IPU handles small batch sizes much more efficiently.

In particular, we observe that the IPU’s advantage grows with smaller and more fragmented memory operations. More generally, we conclude that the IPU architecture shows a significant advantage over GPUs when the compute and memory access are very heterogeneous.

While the TGN model in its default configuration is relatively lightweight, with about 260,000 parameters, most of the IPU In-Processor-Memory is used by the node memory when the model is applied to large graphs. However, since the node memory is sparsely accessed, this tensor can be moved to off-chip memory, in which case the In-Processor-Memory utilisation is independent of the size of the graph.

To test the TGN architecture on large graphs, we apply it to an anonymized graph containing 261 million follows between 15.5 million Twitter users [6]. The edges are assigned 728 different time stamps, which respect date ordering but do not provide any information about the actual date when the follows occurred. Since no node or edge features are present in this dataset, the model relies entirely on the graph topology and temporal evolution to predict new links.

Since the large amount of data makes the task of identifying a positive edge against a single negative sample too simple, we use the Mean Reciprocal Rank (MRR) of the true edge among 1000 randomly sampled negative edges as a validation metric. Moreover, we find that model performance benefits from a larger hidden size as the dataset size increases. For the given data, we identify a latent size of 256 as the sweet spot between accuracy and throughput.
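The metric can be sketched in a few lines. The function names are hypothetical, and ranking ties pessimistically (a negative with an equal score counts as ranked above the true edge) is an assumption, since the text does not specify how ties are handled.

```python
def reciprocal_rank(true_score, negative_scores):
    """1 / rank of the true edge among the negatives (rank 1 is best).

    Ties are ranked pessimistically, which is an assumption of this sketch.
    """
    rank = 1 + sum(1 for s in negative_scores if s >= true_score)
    return 1.0 / rank


def mean_reciprocal_rank(true_scores, negative_scores_per_edge):
    """Average reciprocal rank over all validation edges."""
    values = [
        reciprocal_rank(t, negs)
        for t, negs in zip(true_scores, negative_scores_per_edge)
    ]
    return sum(values) / len(values)


def chance_level_mrr(num_negatives):
    """Expected reciprocal rank of a random scorer (uniform rank, no ties)."""
    total = num_negatives + 1
    return sum(1.0 / r for r in range(1, total + 1)) / total


print(mean_reciprocal_rank([0.9, 0.3], [[0.1, 0.5], [0.1, 0.5, 0.7]]))
print(chance_level_mrr(1000))
```

With 1000 negatives, a scorer that ranks at random has an expected MRR of about 0.0075, so the metric leaves far more headroom for separating good models from poor ones than a comparison against a single negative.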

Using off-chip memory for the node memory reduces throughput by about a factor of two. However, using induced subgraphs of different sizes, as well as a synthetic dataset with 10× the number of nodes of the Twitter graph and random connectivity, we demonstrate that throughput is almost independent of the size of the graph (see table below). Using this technique on the IPU, TGN can be applied to almost arbitrary graph sizes, limited only by the amount of available host memory, while retaining very high throughput during training and inference.
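A back-of-the-envelope calculation shows why the node memory is the natural candidate for off-chip storage. The sketch below assumes that each node stores a memory vector as wide as the latent size of 256 and that values are 32-bit floats; neither is stated in the text. It also simplifies the per-batch access to two nodes per event, ignoring any neighbours read by the attention layer.

```python
def node_memory_bytes(num_nodes, memory_dim, bytes_per_value=4):
    """Size of a dense node-memory tensor of shape (num_nodes, memory_dim)."""
    return num_nodes * memory_dim * bytes_per_value


def touched_bytes(batch_size, memory_dim, nodes_per_event=2, bytes_per_value=4):
    """Upper bound on memory rows read per batch (source and destination only)."""
    return batch_size * nodes_per_event * memory_dim * bytes_per_value


TWITTER_NODES = 15_500_000  # number of users, from the text
MEMORY_DIM = 256            # assumption: memory width equals the latent size

full = node_memory_bytes(TWITTER_NODES, MEMORY_DIM)
synthetic = node_memory_bytes(10 * TWITTER_NODES, MEMORY_DIM)
per_batch = touched_bytes(batch_size=200, memory_dim=MEMORY_DIM)

print(f"Twitter graph node memory: {full / 1e9:.1f} GB")
print(f"10x synthetic graph:       {synthetic / 1e9:.1f} GB")
print(f"Rows touched per batch:    {per_batch / 1e3:.1f} kB")
```

Under these assumptions the full tensor grows linearly with the number of nodes, while the slice needed for any one batch depends only on the batch size, which is consistent with on-chip memory use being independent of graph size once the tensor lives off chip.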