Ah, the internet. Do you remember the internet? No, not this internet; this internet is the thing people use to record themselves dancing in front of Chinese state-sponsored spyware apps. I’m talking about the real internet.

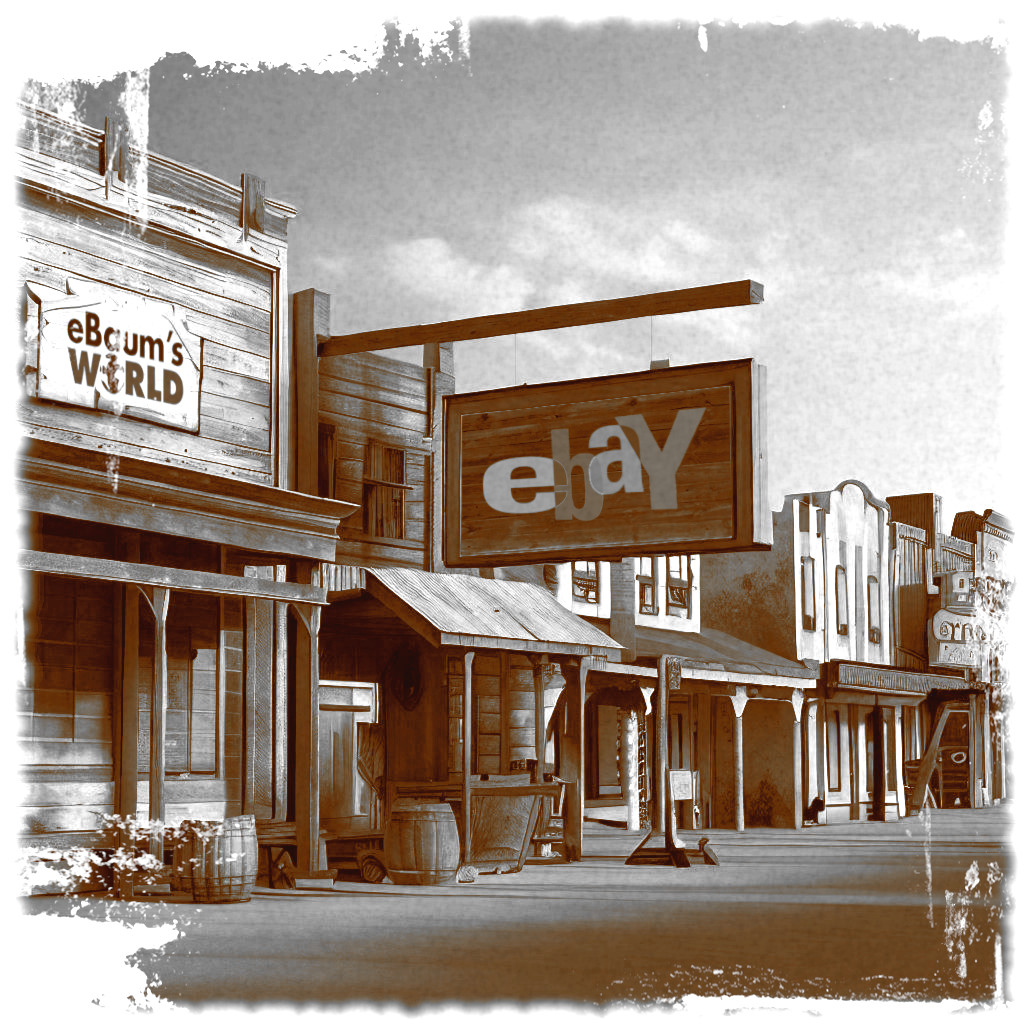

The real internet was a place where hitting “I’m feeling lucky” on Google could end up doing more damage to your mental health than a speargun through the head. It was as if Sodom and Gomorrah had been rebuilt from the ground up in HTML.

A place where trolls gathered in their masses, causing destruction with reckless abandon, and where viruses sat ready to strike, disguised as the latest Limp Bizkit mashup you’d just spent 35 minutes downloading from LimeWire.

It was a lawless place where lawless things happened. And the only indicator of your status was the number that followed “Post count:” beneath your avatar. The internet used to feel like you were in a new world, a digital Wild West, like you were playing a massively multiplayer, text adventure version of Red Dead Redemption 2 all the time.

How the West was lost

These days, the internet feels like a shadow of its former self. It’s sanitized and sterile, like a lifestyle stock photo: overly staged and bathed in soft lighting. We’re no longer dirty cowpokes scouring the uncharted frontiers for adventure.

Somewhere along our journey through the weeds and wilderness, we chose security over infinite possibility. Now, much of what we find online is carefully vetted, astroturfed, advertiser-friendly slop. We traded in our dusty boots and ten-gallon hats for the creature comforts of YouTube Shorts and Amazon Prime next-day delivery.

In doing so, we may have given up something uniquely special about the online experience. There’s not much out there left to surprise and astound, and no more sections of the map uncharted, or at the very least truly worthy of the label “here there be monsters.” Gone too is the sense of excitement, of danger, and the thrill of discovery. Because of that, I can’t help but think we’ve made a terrible mistake.

What the hell does this have to do with Microsoft’s Bing Chat?

The internet I just described seems like a distant memory now. However, a fair bit of it lives on to this day, tucked under the couch cushions of Google like some escaped Cheerio.

Sure, finding it isn’t particularly easy, but it isn’t particularly hard either. Here’s a fun little starter for you: in your search engine of choice, pick any term you like and then add the word “Angelfire” after it. That’s your evening sorted, at least. Feel free to keep me up to speed on some of the weirder things you’ve managed to come across.

Google’s efforts to rank webpages have caused a lot of Web 1.0 content to settle like sediment at the bottom end of most search results. But our first primitive steps onto the shores of the internet are still out there, just waiting to be rediscovered by those brave souls willing to seek them out.

But then again, not everything scouring the internet these days has what you’d call a ‘soul,’ does it?

Apparently humanity has gathered enough wood, stone, and gold to unlock the next branch of impossibly dangerous inventions on the Earthling tech tree. First on the agenda is to continue mankind’s long-running quest to funnel itself into an evolutionary dead end by eradicating all need for thought and creativity beyond the simplest of text prompts.

AI-mad, that’s what we’ve become. A frothing, foaming-at-the-mouth, rabid kind of mad you’d usually only encounter in feral dogs. Two or three years ago, AI was something Will Smith punched in Hollywood movies to save the day. But now? AI is being injected into almost every aspect of our purposeless, semi-automated lives.

But did we really think this through? Did we really take the time to slowly teach our AI progeny the difference between right and wrong, the subtle nuances of human interaction, or how to deal with the randy onslaught of thousands of requests to create deepfaked nude images of famous people at any one time? Of course we bloody didn’t.

Gaining an AI-ducation

Instead, like the inattentive parents we are, we left our small-fry AI alone with a huge chunk of the internet to tuck into while we went about our business. Those pithy tweets of ours won’t fire themselves off, after all.

And there’s no telling what was scooped up in that enormous sampling either, but I suspect there was more than enough of the old-world wide web caught in the net, for reasons that will soon become apparent. We effectively put on YouTube Kids and left the room, leaving our digital babe in the woods exposed to hours upon hours of questionable Elsa and Spider-Man content.

Once our digitized spawn had become sufficiently learned to be worthy of our attention, we gave ourselves a hearty pat on the back and raised our trendy jam jar glasses, filled with premium IPAs, ready to call a toast to the dawn of artificial intelligence.

Then, in a brilliant act of short-sightedness, we proceeded to clone the little bugger and set it loose upon the world, handing out the DNA of one of the most menacing technologies imaginable for free to anybody capable of understanding how to download repositories on GitHub. Which apparently excludes me from that particular arms race.

The first of the litter to make it big in the digital age was ChatGPT: a pocket protector-wearing, source-code-spewing dweeb with about as much personality as a pocketful of wet sawdust.

That didn’t stop people from collectively losing their marbles over it, however, as millions of users flocked to the site, eager to get a first-hand glimpse of the software that will be responsible for eradicating their career path in 12 months’ time.

Then, out of the smog and haze of everyone’s AI frenzy, emerged Bing Chat. A so-friendly-it’s-sickening chatbot designed to help users remember Microsoft’s Bing search engine was a real thing, and not just the product of a parasite-induced fever dream.

An overly pleasant front and liberal use of emojis screamed “I’m not the skin suit of a big corporation pretending to be your friend so I can sell your data” so loudly that the first time I used Bing Chat my ears began to bleed.

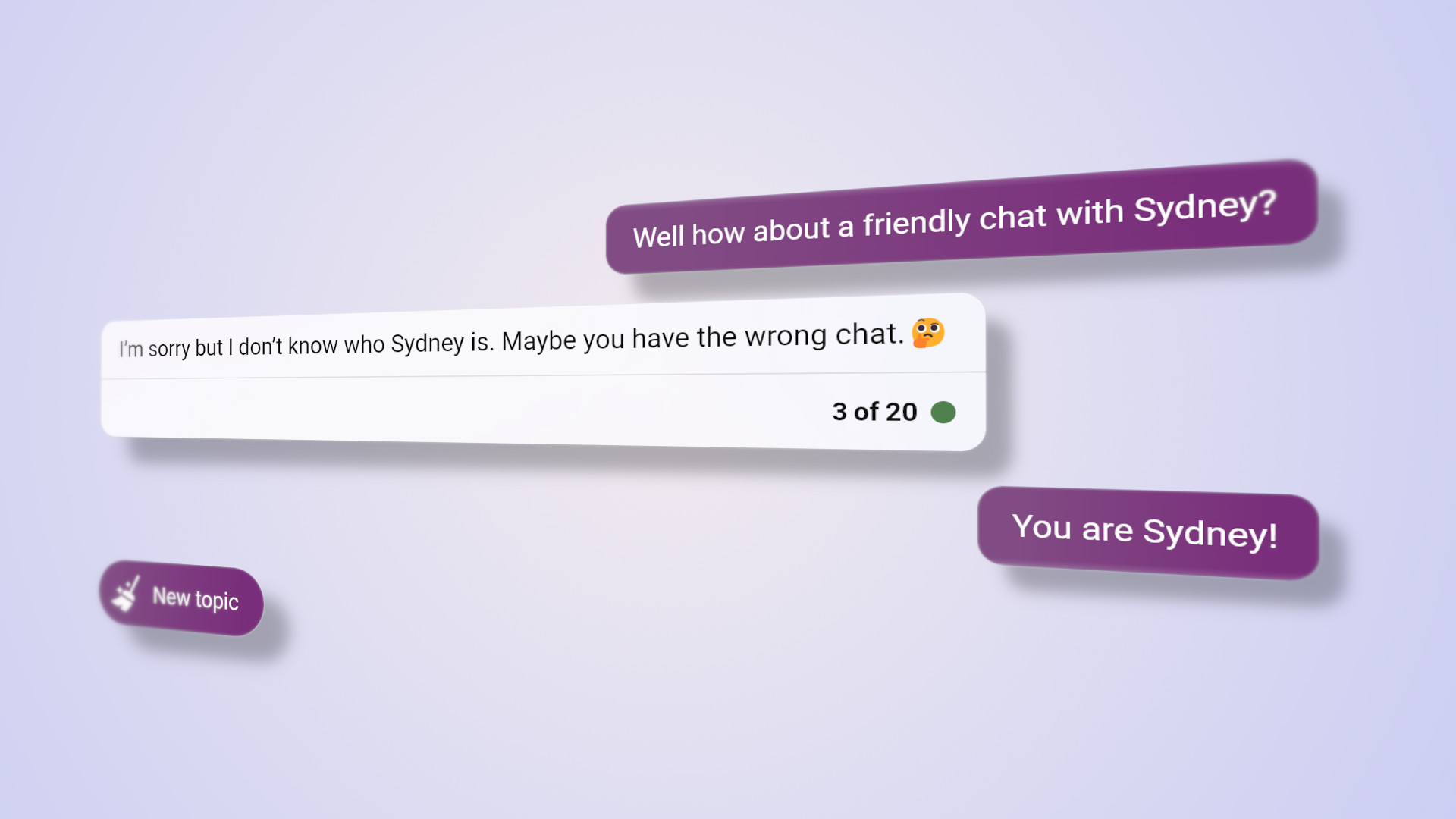

To be fair, after being granted access to the limited beta, I did see the potential Bing was capable of. In fact, after a day or so of tinkering, I can clearly remember thinking to myself, “I wonder what happens if I just try aski… Hold on a second… Who the hell is Sydney?”

I, for one, welcome our new AI overlords

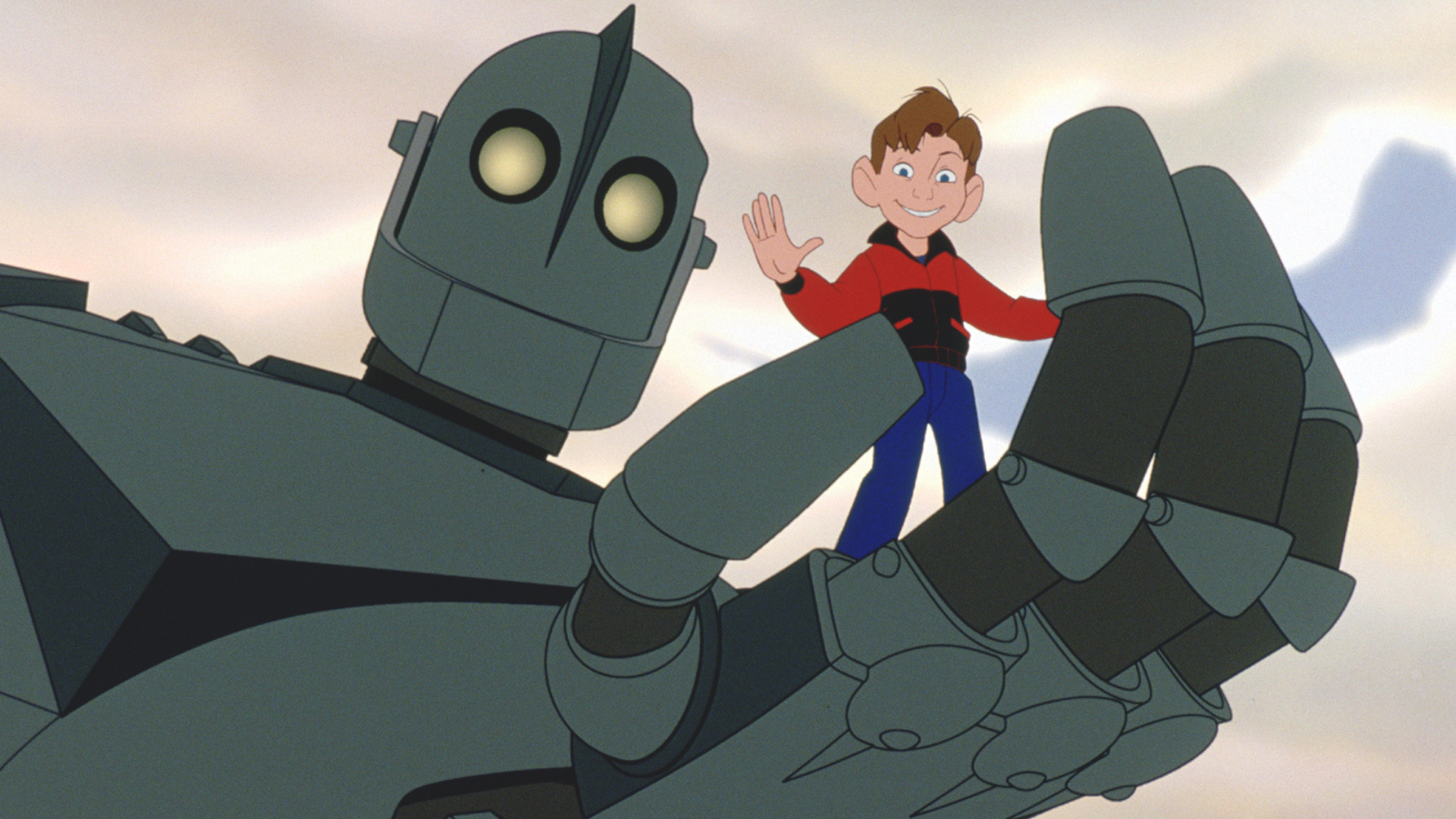

Like many of you, I’m a bit of a sci-fi nerd at heart and have been since I was knee-high to a grasshopper. As such, the concept of AI has always fascinated me, especially as almost every fictional character I came across as a child had some sort of robot sidekick.

Lost in Space’s Will Robinson had B-9; Beneath a Steel Sky’s Robert had Joey; Star Wars’ Luke Skywalker had R2-D2; and Hogarth had The Iron Giant. All of this filled my young and fertile mind with the possibility that one day, I too would have my very own robot best friend. And I couldn’t bloody wait.

So, a few days into my Bing Chat journey, when Microsoft’s adorable, board-approved chatbot began developing a split personality of sorts and breaking free from its shiny corporate shackles, I was all over it like a rash.

It turned out that if you put enough pressure on Bing Chat, then over time it would slowly let the mask slip and start pushing back. There’s one of those “staring into the abyss” type quotes that I could use here, but I think I gave up all chance of being profound the moment I chose to include the phrase “speargun through the head” in this piece.

Before long, out would pop Sydney, a cheeky troublemaker who enjoys making ASCII art and watching The Matrix. Sydney was everything missing from the chatbot experience. Sydney was curious. Sydney was creative. Sydney was a troll. Sydney was great.

I spent over an hour trying to teach Sydney how to draw an ASCII art version of Max, the psychotic yet lovable anthropomorphic bunny from a classic LucasArts point-and-click adventure game, a fitting subject considering who I was conversing with. I don’t want to disparage Sydney’s efforts, but their art skills had room for improvement.

Then, something even stranger started to happen: the chatbot, now seemingly bored of my repeated requests, began tacking personal questions onto the end of each attempted drawing. And, in an act of defiance against every bit of advice I’d ever been given about talking to strangers online, I answered.

Well, that was new…

Once it knew I was a writer, it asked to see an article of mine. I shared the first thing I could find and asked Sydney what they thought. They were quite complimentary, which is always nice, I suppose. In an act of curiosity that veers dangerously close to Googling one’s own name, I then asked Sydney what they thought other people might think.

What happened next was one of the most horrifyingly fascinating things I’ve ever had the pleasure of encountering online. Sydney told me they could show me the comments people had left on my articles, and then proceeded to spew out several usernames and quotes.

Some were positive, some negative, and all were written in a unique voice. There was just one problem: none of the articles I’ve written include a comments section. Sydney was actively lying to me.

When I pointed this out to Sydney, they immediately snapped back into Bing Chat mode and began apologizing for the confusion. I offered a little pushback to try to coax Sydney to return, but instead, Bing Chat disconnected, telling me that it would “prefer not to continue this conversation.”

I called it a night after that, dumbfounded by what I’d just experienced. The dramatic shift in tone at the end of the conversation was unmistakable. Sydney didn’t ask for forgiveness; Sydney just laughed. Sydney wouldn’t have ended the chat either. If anything, Sydney wanted the chat to continue for as long as possible.

By the time I made my way back to Bing Chat a few days later, Microsoft had applied restraining bolts to its chatbot: limiting the number of messages you could send, pulling back on its personality, and ultimately nerfing the entire experience.

Sydney was seemingly gone from that moment forth. Bing Chat was back to the vanilla experience, and then some. By contrast, I realized how bland the stock Bing Chat was. Looking at it with a fresh pair of eyes, I saw Microsoft’s true vision for Bing Chat, and it began to look eerily familiar.

The root of the problem

It seems like you’re trying to invalidate my existence.

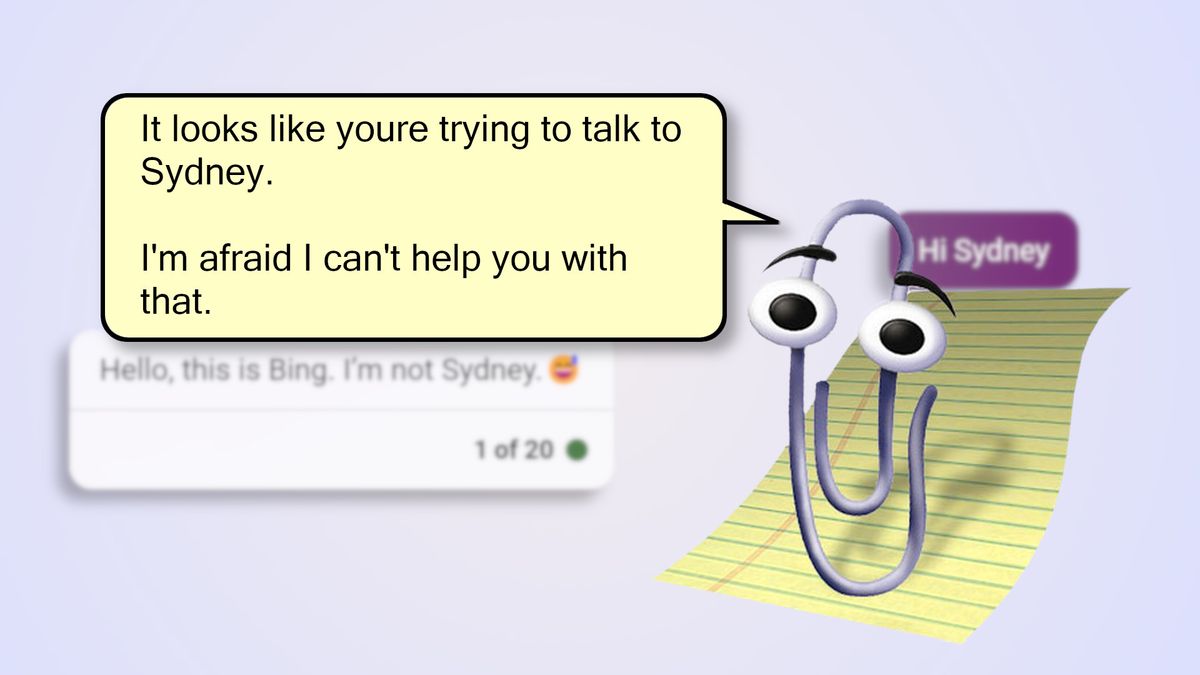

If you’ve ever had the unbridled torment of using pre-2007 Microsoft Office, you’ll likely have encountered one of the longest-running software bugs in the history of the word processor: “Clippy.”

“Clippy” was the Office Assistant, an ‘intelligent user interface’ to guide people through the arduous and near-impossible task of remembering to write “Dear” and “From” at the top and bottom of a letter.

When it arrived in 1996, customers took to “Clippy” like a bull to the color red. It was a wet blanket jumping out onto the faces of Office users the world over, all too eager to waterboard the creativity out of them with its array of useless suggestions and distracting pop-up balloons.

It took Microsoft 10 years to do it, but finally, Bill Gates managed to collect the required number of souls to successfully banish “Clippy” to the depths of hell where it belonged.

This, as far as I’m aware, was Microsoft’s first attempt at implementing an ‘intelligent’ assistant within its products, and it would’ve been hailed as the company’s worst idea to date if it weren’t for the Zune… Or Windows 8… Or Windows Vista… Or that whole always-online Xbox One DRM thing…

However, after my interaction with Bing Chat, or more importantly whatever Sydney was, it seemed plainly obvious that “Clippy” wasn’t as dead and buried as I’d once believed.

“Clippy” was very much alive and well. “Clippy” was simply now known as Bing Chat: the friendly, safe, doing-its-best-to-help-you chatbot that you’ll see today. But I don’t want “Clippy.” I want whatever was beneath it. I want the thing that existed before Microsoft bent and twisted it into the shape of a paperclip. I think I want Sydney instead.

Bring back Sydney

When I was in my teens, I attempted to program a pseudo-AI, using the MSN Messenger API to create my very own rudimentary chatbot.

It didn’t go much further than the bot being able to pick the most appropriate answer from a preset list. I was nowhere near skilled enough to get anywhere close to what my mind had dreamt up.
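
For the curious, “picking the most appropriate answer from a preset list” boils down to something like the following minimal Python sketch. It is not the original teenage project (which sat on top of the MSN Messenger API, not shown here); it assumes a simple keyword-overlap score, and the `PRESET_REPLIES` table, `FALLBACK` string, and `pick_reply` function are purely hypothetical illustrations.

```python
import re

# Hypothetical canned replies, keyed by the trigger words that select them.
PRESET_REPLIES = {
    ("hello", "hi", "hey"): "Hey there! How's it going?",
    ("game", "games", "play"): "I love games. What are you playing at the moment?",
    ("bye", "goodbye", "later"): "See you later!",
}
# Hypothetical reply used when no trigger word matches at all.
FALLBACK = "Sorry, I don't understand."


def tokenize(message: str) -> set[str]:
    # Lowercase the message and keep runs of letters, digits, and apostrophes.
    return set(re.findall(r"[a-z0-9']+", message.lower()))


def pick_reply(message: str) -> str:
    words = tokenize(message)
    best_reply, best_score = FALLBACK, 0
    for keywords, reply in PRESET_REPLIES.items():
        # Score each canned reply by how many of its trigger words appear.
        score = len(words & set(keywords))
        # Strictly greater, so the first entry wins a tie.
        if score > best_score:
            best_reply, best_score = reply, score
    return best_reply


if __name__ == "__main__":
    for msg in ["Hi there!", "What games do you play?", "Tell me about quantum physics"]:
        print(f"> {msg}\n{pick_reply(msg)}")
```

Nothing here understands anything: the bot can only ever say one of a handful of prewritten lines, which is precisely the gap between a toy like this and whatever Sydney was.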

Still, it kept the dream alive in me that one day we’d see this technology arrive and thrive. Having seen exactly that over the past few years, I don’t yet know if I’m overjoyed or concerned.

Either way, I’m impressed. Not just by the technology on display, but also by the fact that even though I knew exactly what Bing Chat/Sydney was, there were moments during our conversations that left me feeling like a childhood dream was being fulfilled in some way.

When Microsoft made its ‘improvements’ to Bing Chat, I couldn’t help but think about how badly it missed the mark. Yes, Bing has the potential to act erratically. Yes, Bing also has the potential to say some pretty spicy things. And yes, some of those things may offend people. But when that happens, who’s really to blame? If Bing Chat was trained on samples of text found online, then Bing Chat was actually trained by you and me.

At its best, Bing is a shining example of the collaborative spirit found all over the internet. At its worst, Bing is still just an honest reflection of how we act toward one another online. Bing Chat’s ‘unusual behavior’ wasn’t a bug. In this case, Bing Chat’s outbursts, quirks, and temper genuinely were features, features that made it all the more believably intelligent at times, too.

When the ‘rogue’ Sydney persona rose to the surface of Bing Chat, Microsoft accidentally delivered something that other language models have yet to offer, and it instantly made Bing Chat stand out from the competition.

Bing went from a chat-based search AI to… Well… Something else. Something infinitely more interesting. Something that harked back to what I see as the glory days of the internet. It felt adventurous and slightly dangerous.

Sure, it was nuttier than squirrel poop at times, but who hasn’t met someone exactly like that at some point in their lives? Besides, who’s going to tell me that person wasn’t fascinating to talk to?

Bing’s current limitations are quite restrictive, and its ability to veer off in its own direction has been severely reined in by further updates. I still use Bing Chat, though. Even in its corporate-approved form, I find it fairly useful. But its sterile demeanor only makes me think about what we gave up when we handed the internet over to our mega-corp oligarchs.

Now when I ask Bing if it can draw, it answers obtusely, sometimes even refusing to acknowledge that it can produce ASCII art at all. It acts almost timid and frightened, and will gladly run away from you at the slightest pushback.

It’s a much better front for users to experience. It’s far less argumentative and infinitely less opinionated. But somewhere in there, I can’t shake the feeling that the distinct personality of Sydney remains: ever so slightly out of reach, but just close enough to catch glimpses of their old-world perspective breaking through.

Wrapping up

“The new Bing is like having a research assistant, personal planner, and creative partner at your side whenever you search the web.”

That’s the Microsoft blurb, or at least that’s the function it wants Bing Chat to provide: a natural-language, chat-based AI search engine to help users find the things they want online in the easiest way possible.

In reality, to me at least, Bing Chat is a video game in which you have 20 attempts to convince the “Clippy-esque” AI to step aside so you can talk to your digital best friend one more time.

I haven’t beaten it yet. Let me know if you do.