Start Your Next ML Project With This Template

Motivation

Getting started is often the most challenging part of building ML projects. How should you structure your repository? Which standards should you follow? Will your teammates be able to reproduce the results of your experiments?

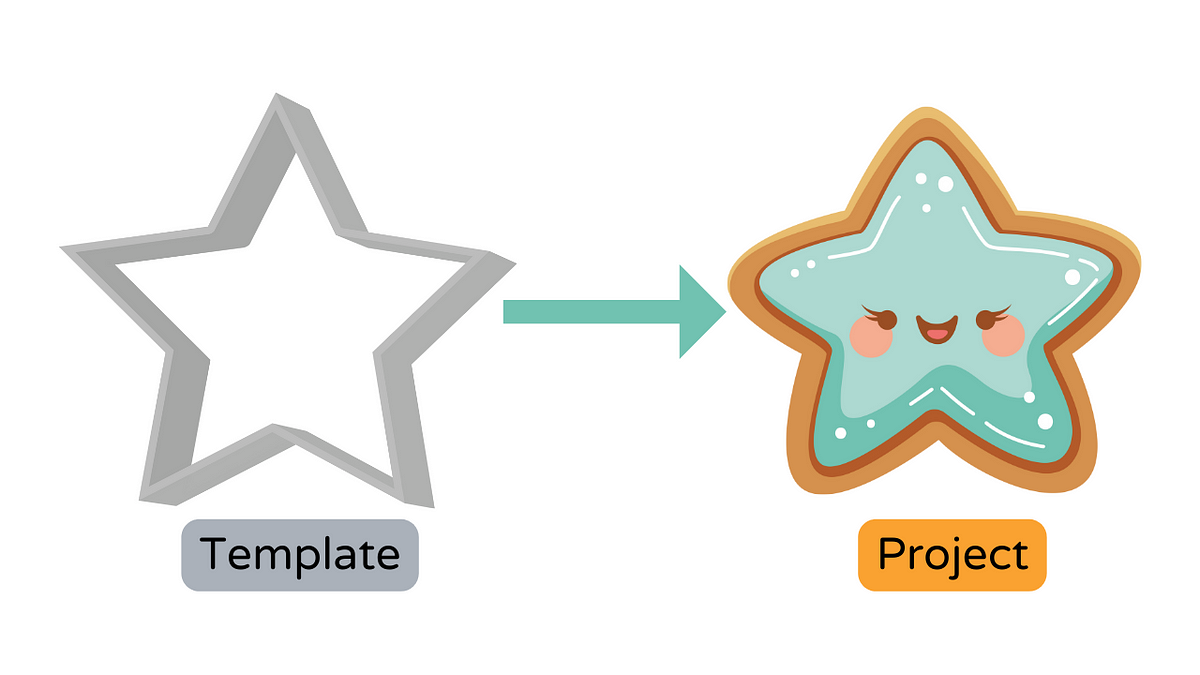

Instead of searching for an ideal repository structure, wouldn't it be nice to have a template to get started?

That is why I created data-science-template, consolidating best practices I have learned over the years about structuring data science projects.

This template lets you:

✅ Create a readable structure for your project

✅ Efficiently manage dependencies in your project

✅ Create short and readable commands for repeatable tasks

✅ Rerun only modified components of a pipeline

✅ Observe and automate your code

✅ Enforce type hints at runtime

✅ Check for issues in your code before committing

✅ Automatically document your code

✅ Automatically run tests when committing your code

This template is lightweight and uses only tools that generalize to various use cases. These tools are:

- Poetry: handle Python dependencies

- Prefect: orchestrate and observe your data pipeline

- Pydantic: validate data using Python type annotations

- pre-commit plugins: ensure your code is well-formatted, tested, and documented, following best practices

- Makefile: automate repeatable tasks using short commands

- GitHub Actions: automate your CI/CD pipeline

- pdoc: automatically create API documentation for your project

To download the template, start by installing Cookiecutter:

pip install cookiecutter

Create a project based on the template:

cookiecutter https://github.com/khuyentran1401/data-science-template --checkout prefect

Try out the project by following these instructions.

In the next few sections, we will detail some valuable features of this template.

The structure of the project created from the template is standardized and easy to understand.

Here is a summary of the roles of these files:

.
├── data
│   ├── final                   # data after training the model
│   ├── processed               # data after processing
│   └── raw                     # raw data
├── docs                        # documentation for your project
├── .flake8                     # configuration for the flake8 linter
├── .gitignore                  # ignore files that should not be committed to Git
├── Makefile                    # store commands to set up the environment
├── models                      # store models
├── notebooks                   # store notebooks
├── .pre-commit-config.yaml     # configurations for pre-commit
├── pyproject.toml              # dependencies for Poetry
├── README.md                   # describe your project
├── src                         # store source code
│   ├── __init__.py             # make src a Python module
│   ├── config.py               # store configs
│   ├── process.py              # process data before training the model
│   ├── run_notebook.py         # run notebook
│   └── train_model.py          # train model
└── tests                       # store tests
    ├── __init__.py             # make tests a Python module
    ├── test_process.py         # test functions for process.py
    └── test_train_model.py     # test functions for train_model.py

Poetry is a Python dependency management tool and an alternative to pip.

With Poetry, you can:

- Separate the main dependencies and the sub-dependencies into two separate files (instead of storing all dependencies in requirements.txt)

- Remove all unused sub-dependencies when removing a library

- Avoid installing new packages that conflict with the existing packages

- Package your project in a few lines of code

and more.

Find the instructions on how to install Poetry here.

A Makefile lets you create short and readable commands for tasks. If you are not familiar with Makefiles, check out this short tutorial.

You can use a Makefile to automate tasks such as setting up the environment:

initialize_git:
	@echo "Initializing git..."
	git init

install:
	@echo "Installing..."
	poetry install
	poetry run pre-commit install

activate:
	@echo "Activating virtual environment"
	poetry shell

download_data:
	@echo "Downloading data..."
	wget https://gist.githubusercontent.com/khuyentran1401/a1abde0a7d27d31c7dd08f34a2c29d8f/raw/da2b0f2c9743e102b9dfa6cd75e94708d01640c9/Iris.csv -O data/raw/iris.csv

setup: initialize_git install download_data

Now, whenever others want to set up the environment for your project, they just need to run the following:

make setup

make activate

And a series of commands will be run!

Make is also useful when you want to run a task only when its dependencies have been modified.

As an example, let's capture the relationships between the following files in a Makefile:

data/processed/xy.pkl: data/raw src/process.py
	@echo "Processing data..."
	python src/process.py

models/svc.pkl: data/processed/xy.pkl src/train_model.py
	@echo "Training model..."
	python src/train_model.py

pipeline: data/processed/xy.pkl models/svc.pkl

To create the file models/svc.pkl, you can run:

make models/svc.pkl

Since data/processed/xy.pkl and src/train_model.py are the prerequisites of the models/svc.pkl target, make first runs the recipe that creates data/processed/xy.pkl and then the recipe that creates models/svc.pkl:

Processing data...

python src/process.py

Training model...

python src/train_model.py

If none of the prerequisites of models/svc.pkl have changed, make will skip updating models/svc.pkl.

$ make models/svc.pkl

make: `models/svc.pkl' is up to date.

Thus, with make, you avoid wasting time on running unnecessary tasks.
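To make the rule concrete, here is a minimal Python sketch of the timestamp comparison that make performs: a target is rebuilt when it is missing or older than any of its prerequisites. The function name `is_out_of_date` is hypothetical and exists only for illustration. Real make also handles phony targets, implicit rules, and chains of dependencies, which this sketch ignores.

```python
import os
import tempfile
from pathlib import Path
from typing import Iterable


def is_out_of_date(target: Path, prerequisites: Iterable[Path]) -> bool:
    """Return True if `target` is missing or older than any prerequisite."""
    if not target.exists():
        return True
    target_mtime = target.stat().st_mtime
    return any(p.stat().st_mtime > target_mtime for p in prerequisites)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "train_model.py"
        model = Path(tmp) / "svc.pkl"
        script.write_text("print('training')\n")

        # The target does not exist yet, so it must be built.
        print(is_out_of_date(model, [script]))

        # Give the target a newer timestamp than the script: nothing to do.
        model.write_bytes(b"model")
        os.utime(script, (1_000, 1_000))
        os.utime(model, (2_000, 2_000))
        print(is_out_of_date(model, [script]))

        # Simulate editing the script after the model was built: rebuild.
        os.utime(script, (3_000, 3_000))
        print(is_out_of_date(model, [script]))
```

The explicit `os.utime` calls stand in for real edits so the example does not depend on the clock resolution of the file system.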

This template leverages Prefect to orchestrate and observe your data pipeline.

Among other things, Prefect can help you:

- Retry when your code fails

- Schedule your code runs

- Send notifications when your flow fails

You can access these features by simply turning your function into a Prefect flow.

from prefect import flow

from config import Location, ProcessConfig  # Pydantic models defined in src/config.py


@flow
def process(
    location: Location = Location(),
    config: ProcessConfig = ProcessConfig(),
):
    ...

Pydantic is a Python library for data validation that leverages type annotations.

Pydantic models enforce data types on flow parameters and validate their values when a flow run is executed.

If the value of a field doesn't match its type annotation, you will get an error at runtime:

course of(config=ProcessConfig(test_size='a'))

pydantic.error_wrappers.ValidationError: 1 validation error for ProcessConfig
test_size
  value is not a valid float (type=type_error.float)

All Pydantic models are in the src/config.py file.
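As an illustration, a model like ProcessConfig could be declared as follows. This is a sketch rather than the template's actual src/config.py: only the `test_size` field appears in the error message above, while the default of 0.3 and the helper `is_valid_test_size` are assumptions made for this example. It requires Pydantic to be installed.

```python
from pydantic import BaseModel, ValidationError


class ProcessConfig(BaseModel):
    """Illustrative config; the default of 0.3 is an assumption."""

    test_size: float = 0.3


def is_valid_test_size(value) -> bool:
    """Return True if Pydantic accepts `value` for the test_size field."""
    try:
        ProcessConfig(test_size=value)
    except ValidationError:
        return False
    return True


if __name__ == "__main__":
    print(is_valid_test_size(0.2))  # a float matches the annotation
    print(is_valid_test_size("a"))  # a non-numeric string does not
```

The wording of the validation error differs between Pydantic major versions, so the message you see may not match the one shown above exactly.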

Before committing your Python code to Git, you need to make sure your code:

- passes unit tests

- is organized

- conforms to best practices and style guides

- is documented

However, manually checking these criteria before every commit can be tedious. pre-commit is a framework that lets you identify issues in your code before committing it.

You can add different plugins to your pre-commit pipeline. When you commit, your staged files are validated against these plugins. Unless all checks pass, no code will be committed.

You can find all plugins used in this template in this .pre-commit-config.yaml file.

Data scientists often collaborate with other team members on a project. Thus, it is essential to create good documentation for the project.

To create API documentation based on the docstrings of your Python files and objects, run:

make docs_view

Output:

Save the output to docs...

pdoc src --http localhost:8080

Starting pdoc server on localhost:8080

pdoc server ready at http://localhost:8080

Now you can view the documentation at http://localhost:8080.
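pdoc builds these pages from the docstrings in your modules, so the generated documentation is only as good as what you write there. The function below is a hypothetical example, not part of the template. It shows a docstring with argument and return descriptions in Google style, which is one common convention and is assumed here.

```python
from typing import Tuple


def split_sizes(n_rows: int, test_size: float) -> Tuple[int, int]:
    """Return the number of training and test rows for a dataset.

    Args:
        n_rows: Total number of rows in the dataset.
        test_size: Fraction of rows reserved for testing, between 0 and 1.

    Returns:
        A `(n_train, n_test)` tuple whose entries sum to `n_rows`.
    """
    n_test = round(n_rows * test_size)
    return n_rows - n_test, n_test


if __name__ == "__main__":
    # The docstring is what a documentation generator reads.
    print(split_sizes.__doc__)
```

The summary line, the argument list, and the return description are the parts a reader of the generated page sees first, so they are worth keeping short and accurate.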

GitHub Actions allows you to automate your CI/CD pipelines, making it faster to build, test, and deploy your code.

When you create a pull request on GitHub, the tests in your tests folder will run automatically.
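For reference, a test file in the tests folder might look like the sketch below. Both the helper `drop_incomplete_rows` and the test names are hypothetical, standing in for whatever functions your src/process.py defines. The sketch assumes the tests are collected by a runner such as pytest, which the article does not name explicitly.

```python
from typing import Dict, List


def drop_incomplete_rows(rows: List[Dict]) -> List[Dict]:
    """Hypothetical stand-in for a helper in src/process.py: drop rows with missing values."""
    return [row for row in rows if None not in row.values()]


def test_drop_incomplete_rows_removes_rows_with_missing_values():
    rows = [
        {"sepal_length": 5.1, "species": "setosa"},
        {"sepal_length": None, "species": "virginica"},
    ]
    assert drop_incomplete_rows(rows) == [{"sepal_length": 5.1, "species": "setosa"}]


def test_drop_incomplete_rows_keeps_complete_rows():
    rows = [{"sepal_length": 6.3, "species": "virginica"}]
    assert drop_incomplete_rows(rows) == rows
```

In a real project the helper would be imported from the src package rather than defined in the test file. It is defined inline here only to keep the example self-contained.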

Congratulations! You have just learned how to use a template to create a reusable and maintainable ML project. This template is meant to be flexible. Feel free to adjust the project based on your applications.