An introduction to reading and displaying PDF files in your next dashboard.

I have recently taken an interest in using PDF files for my Natural Language Processing (NLP) projects, and you may be wondering why. PDF documents contain tons of information that can be extracted and used to build various types of machine learning models and to find patterns in different data. The problem? PDF files are tricky to work with in Python. On top of that, when I began building a dashboard for a customer in Plotly Dash, I could find little to no information on how to ingest and parse PDF files in a Plotly dashboard. That changes today: I am going to share how you can upload and work with PDF files in a Plotly dashboard!

    import pandas as pd
    from dash import dcc, Dash, html, dash_table
    import base64
    import datetime
    import io
    import PyPDF2  # the code below uses the pre-3.0 PyPDF2 names (PdfFileReader, getPage, ...)
    from dash.dependencies import Input, Output, State
    import re
    import dash_bootstrap_components as dbc
    import spacy
    from spacy.lang.en.stop_words import STOP_WORDS
    from string import punctuation
    from heapq import nlargest

Most of the packages above are what you will typically find when deploying a Dash app. For example, dash is the main Plotly package we will use: Dash is the app class, and dcc, html, and dash_table are the component modules we need for adding functionality. When reading PDFs in Python, I tend to use PyPDF2, but there are other libraries you can explore, and you should always use what works best for your project.

The supporting functions help with adding interactivity to the Dash app. I created two classes for this app that allow a PDF to be parsed apart. The first is the pdfReader class. The functions in this class are all related to reading a PDF into Python and transforming its contents into a usable form. Additionally, some of the functions can extract the metadata stored within a PDF (i.e., creation date, author, etc.).

    class pdfReader:

        def __init__(self, file_path: str) -> None:
            self.file_path = file_path

        def PDF_one_pager(self) -> str:
            """A function that accepts a file path to a pdf
            as input and returns a one line string of the
            pdf.

            Parameters:
            file_path(str): The file path to the pdf.

            Returns:
            one_page_pdf (str): A one line string of the pdf.
            """
            content = ""
            p = open(self.file_path, "rb")
            pdf = PyPDF2.PdfFileReader(p)
            num_pages = pdf.numPages
            for i in range(0, num_pages):
                content += pdf.getPage(i).extractText() + "\n"
            # Collapse non-breaking spaces and all runs of whitespace into single spaces
            content = " ".join(content.replace(u"\xa0", " ").strip().split())
            # Remove page markers such as "3 of 12"
            page_number_removal = r"\d{1,3} of \d{1,3}"
            page_number_removal_pattern = re.compile(page_number_removal, re.IGNORECASE)
            content = re.sub(page_number_removal_pattern, '', content)
            return content

        def pdf_reader(self) -> PyPDF2.PdfFileReader:
            """A function that can read .pdf formatted files
            and returns a python readable pdf.

            Parameters:
            self (obj): An object of the class pdfReader

            Returns:
            read_pdf: A python readable .pdf file.
            """
            opener = open(self.file_path, 'rb')
            read_pdf = PyPDF2.PdfFileReader(opener)
            return read_pdf

        def pdf_info(self) -> dict:
            """A function which returns an information dictionary
            of an object.

            Parameters:
            self (obj): An object of the pdfReader class.

            Returns:
            dict(pdf_info_dict): A dictionary containing the meta
            data of the object.
            """
            opener = open(self.file_path, 'rb')
            read_pdf = PyPDF2.PdfFileReader(opener)
            pdf_info_dict = {}
            for key, value in read_pdf.documentInfo.items():
                # Metadata keys look like "/CreationDate"; drop the slash
                pdf_info_dict[re.sub('/', "", key)] = value
            return pdf_info_dict

        def pdf_dictionary(self) -> dict:
            """A function which returns a dictionary of
            the object where the keys are the pages
            and the text within the pages are the
            values.

            Parameters:
            obj (self): An object of the pdfReader class.

            Returns:
            dict(pdf_dict): A dictionary of the object within the
            pdfReader class.
            """
            opener = open(self.file_path, 'rb')
            #try:
            #    file_path = os.path.exists(self.file_path)
            #    file_path = True
            #break
            #except ValueError:
            #    print('Unidentifiable file path')
            read_pdf = PyPDF2.PdfFileReader(opener)
            length = read_pdf.numPages
            pdf_dict = {}
            for i in range(length):
                page = read_pdf.getPage(i)
                text = page.extract_text()
                pdf_dict[i] = text
            return pdf_dict

        def get_publish_date(self) -> str:
            """A function that reads the information dictionary of an object
            in the pdfReader class and returns the creation date of the
            object (if applicable).

            Parameters:
            self (obj): An object of the pdfReader class

            Returns:
            pub_date (str): The publication date which is assumed to be the
            creation date (if applicable).
            """
            info_dict_pdf = self.pdf_info()
            pub_date = 'None'
            try:
                publication_date = info_dict_pdf['CreationDate']
                # PDF dates look like D:20170524123456-04'00'
                publication_date = datetime.datetime.strptime(publication_date.replace("'", ""), "D:%Y%m%d%H%M%S%z")
                pub_date = publication_date.isoformat()[0:10]
            except Exception:
                # No creation date, or a date in an unexpected format
                pass
            return str(pub_date)
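To make the date handling in get_publish_date() easier to follow, here is a minimal standalone sketch of just that step, using only the standard library. The function name parse_pdf_creation_date and the sample strings are my own illustrations, not part of the dashboard code, and it assumes the metadata value follows the common D:YYYYMMDDHHmmSS plus offset layout.

```python
from datetime import datetime
from typing import Optional


def parse_pdf_creation_date(raw: str) -> Optional[str]:
    """Return the YYYY-MM-DD part of a PDF date string, or None if it does not parse.

    Hypothetical helper that mirrors the strptime call in get_publish_date().
    """
    # PDF offsets are written as -04'00'; removing the apostrophes leaves
    # -0400, which is the form the %z directive understands.
    cleaned = raw.replace("'", "")
    try:
        parsed = datetime.strptime(cleaned, "D:%Y%m%d%H%M%S%z")
    except ValueError:
        return None
    return parsed.date().isoformat()


print(parse_pdf_creation_date("D:20170524123456-04'00'"))  # 2017-05-24
print(parse_pdf_creation_date("not a date"))               # None
```

Returning None instead of the string 'None' is a design choice for this sketch only. The class above keeps the string so the value can go straight into a table cell.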

The second class is pdfParser, and it performs the main parsing operations we wish to run on the PDF in Python. These functions include:

- get_emails(): a function that finds all of the email addresses within a string of text.

- get_dates(): a function that locates dates within a string of text. For this dashboard, the date we display is the one stored with the PDF itself (its creation date), shown in the "Download Date" column.

- get_summary(): a function that creates a summary of a body of text based on the importance of words and the percentage of the original text the user wants to keep in the summary.

- get_urls_domains(): a function that finds all of the URLs and domain names within a string of text.
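As a rough illustration of the kind of pattern matching get_emails() relies on, here is a small standalone sketch. The helper name find_emails and the sample addresses are assumptions made for this example. It also trims trailing punctuation, which the dashboard's own function does not do.

```python
import re

# Local part, "@", first domain label, a literal dot, then the rest of the domain.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def find_emails(text: str) -> set:
    """Return the unique email-like strings found in text (illustrative helper)."""
    # The last character class also accepts ".", so an address at the end of a
    # sentence would keep the full stop; strip common trailing punctuation.
    return {match.rstrip(".,;") for match in EMAIL_PATTERN.findall(text)}


sample = "Contact alice@example.com or bob.smith@mail.example.org. Thanks!"
print(sorted(find_emails(sample)))
```

A regular expression like this is a heuristic: it finds most ordinary addresses, but it is not a full validator.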

    class pdfParser:

        def __init__(self):
            return

        @staticmethod
        def get_emails(text: str) -> str:
            """A function that accepts a string of text and
            returns any email addresses located within the
            text

            Parameters:
            text (str): A string of text

            Returns:
            str(email_set): The set of emails located within
            the string of text, rendered as a string.
            """
            email_pattern = re.compile(r'[\w.+-]+@[\w-]+\.[\w.-]+')
            email_set = set()
            email_set.update(email_pattern.findall(text))
            return str(email_set)

        @staticmethod
        def get_dates(text: str, info_dict_pdf: dict) -> str:
            date_label = ['DATE']
            nlp = spacy.load('en_core_web_lg')
            doc = nlp(text)
            # Dotted number groups (for example, IP addresses) are not dates
            dates_pattern = re.compile(r'(\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3})')
            dates = set((ent.text) for ent in doc.ents if ent.label_ in date_label)
            filtered_dates = set(date for date in dates if not dates_pattern.match(date))
            return str(filtered_dates)

        @staticmethod
        def get_summary(text: str, per: float) -> str:
            nlp = spacy.load('en_core_web_sm')
            doc = nlp(text)
            # Count every word that is neither a stop word nor punctuation
            word_frequencies = {}
            for word in doc:
                if word.text.lower() not in list(STOP_WORDS):
                    if word.text.lower() not in punctuation:
                        if word.text not in word_frequencies.keys():
                            word_frequencies[word.text] = 1
                        else:
                            word_frequencies[word.text] += 1
            # Normalize the counts by the most frequent word
            max_frequency = max(word_frequencies.values())
            for word in word_frequencies.keys():
                word_frequencies[word] = word_frequencies[word] / max_frequency
            # Score each sentence by the weights of the words it contains
            sentence_tokens = [sent for sent in doc.sents]
            sentence_scores = {}
            for sent in sentence_tokens:
                for word in sent:
                    if word.text.lower() in word_frequencies.keys():
                        if sent not in sentence_scores.keys():
                            sentence_scores[sent] = word_frequencies[word.text.lower()]
                        else:
                            sentence_scores[sent] += word_frequencies[word.text.lower()]
            # Keep the top `per` fraction of sentences
            select_length = int(len(sentence_tokens) * per)
            summary = nlargest(select_length, sentence_scores, key=sentence_scores.get)
            final_summary = [word.text for word in summary]
            summary = ' '.join(final_summary)
            return summary

        @staticmethod
        def get_urls_domains(text: str) -> tuple:
            """A function that accepts a string of text and
            returns any urls and domain names located within
            the text.

            Parameters:
            text (str): A string of text.

            Returns:
            str(url_set): The set of urls located within the text, as a string.
            str(domain_set): The set of domain names located within the text, as a string.
            """
            #f = open('/Users/benmccloskey/Desktop/pdf_dashboard/cfg.json')
            #data = json.load(f)
            #url_endings = [end for end in data['REGEX_URL_ENDINGS']['url_endings'].split(',')]
            url_end = 'com,gov,edu,org,mil,net,au,in,ca,br,it,mx,ai,fr,tw,il,uk,int,arpa,co,us,info,xyz,ly,site,biz,bz'
            url_endings = [end for end in url_end.split(',')]
            url_regex = r'(?:(?:https?|ftp)://)?[\w/\-?=%.]+\.(?:' + '|'.join(url_endings) + r')[^\s]+'
            url_reg_pattern = re.compile(url_regex, re.IGNORECASE)
            url_reg_list = url_reg_pattern.findall(text)
            url_set = set()
            url_set.update(url_reg_list)
            domain_set = set()
            domain_regex = r'^(?:https?://)?(?:[^@/\n]+@)?(?:www\.)?([^:/\n]+)'
            domain_pattern = re.compile(domain_regex, re.IGNORECASE)
            for url in url_set:
                domain_set.update(domain_pattern.findall(url))
            return str(url_set), str(domain_set)
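The scoring idea behind get_summary() can be tried without spaCy. The sketch below is a simplified stand-in under stated assumptions: it splits sentences with a regular expression, uses a tiny hand-written stop-word list, always keeps at least one sentence, and returns the chosen sentences in their original order. The name summarize and the sample text are hypothetical.

```python
import re
from collections import Counter
from heapq import nlargest

# Tiny illustrative stop-word list (an assumption; spaCy's STOP_WORDS is far larger).
STOP_WORDS = {"a", "an", "and", "are", "in", "is", "it", "of", "the", "to"}


def summarize(text: str, per: float) -> str:
    """Keep roughly the top `per` fraction of sentences, ranked by word frequency."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    words = [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOP_WORDS]
    if not sentences or not words:
        return ""
    # Normalize each word count by the count of the most frequent word.
    counts = Counter(words)
    top = max(counts.values())
    weights = {word: count / top for word, count in counts.items()}
    # A sentence's score is the sum of the weights of the words it contains.
    scores = {
        index: sum(weights.get(w, 0.0) for w in re.findall(r"[a-z']+", sentence.lower()))
        for index, sentence in enumerate(sentences)
    }
    keep = max(1, int(len(sentences) * per))
    best = nlargest(keep, scores, key=scores.get)
    # Re-sort the winners by position so the summary reads in document order.
    return " ".join(sentences[i] for i in sorted(best))


sample = "Cats chase mice. Dogs chase cats and mice. Birds sing."
print(summarize(sample, 0.34))  # Dogs chase cats and mice.
```

The pdfParser version differs in two ways worth knowing: it returns sentences in score order, and with a small per value on a short text it can select zero sentences.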

Throughout today's example, we will parse apart the paper ImageNet Classification with Deep Convolutional Neural Networks, written by Alex Krizhevsky, Ilya Sutskever, and Geoffrey E. Hinton [1].

Setup

You will need to create a directory that holds the PDF files you wish to analyze in your dashboard. You will then want to initialize your Dash app.

    directory = '/Users/benmccloskey/Desktop/pdf_dashboard/files'

    app = Dash(__name__, external_stylesheets=[dbc.themes.CYBORG], suppress_callback_exceptions=True)

I made a folder called "files" and simply placed the PDF I wanted to upload inside it.

When it is first initialized, the dashboard is very basic, and this is expected! No information has been provided to the app yet, and I did not want the app to be too busy at the start.

The Skeleton

The next step is to create the skeleton of the Dash app. This is the scaffolding onto which we place the different pieces that ultimately make up the final layout. First, we will add a title and style it the way we want. Colors are given as hex codes, such as '#7FDBFF' below.

    app.layout = html.Div(children=[
        html.Div(children=[
            html.H1(children='PDF Parser',
                    style={'textAlign': 'center',
                           'color': '#7FDBFF'})
        ])
    ])

Second, we can use dcc.Upload, which allows us to actually upload files into our Dash app.

This is where I met my first challenge.

The example provided by Plotly Dash did not show how to read in PDF files. I wrote the following code block and appended it to the ingestion code provided by Plotly.

    if 'csv' in filename:
        # Assume that the user uploaded a CSV file
        df = pd.read_csv(
            io.StringIO(decoded.decode('utf-8')))
    elif 'xls' in filename:
        # Assume that the user uploaded an excel file
        df = pd.read_excel(io.BytesIO(decoded))
    elif 'pdf' in filename:
        pdf = pdfReader(directory + '/' + filename)
        text = pdf.PDF_one_pager()
        emails = pdfParser.get_emails(text)
        ddate = pdf.get_publish_date()
        summary = pdfParser.get_summary(text, 0.1)
        df = pd.DataFrame({'Text': [text], 'Summary': [summary],
                           'Download Date': [ddate], 'Emails': [emails]})

The main change needed was to make the function check whether a file name contains "pdf". Then I was able to pull the PDF from the directory I had set up earlier. Please note: with the current state of the code, any PDF file uploaded to the dashboard that is not in the directory folder cannot be read and parsed by the dashboard. This is a future update! If you have any functions that work with text, this is where you can add them.
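One caveat with the `'pdf' in filename` style of check is that it matches the substring anywhere in the name, so a file called csv_export.pdf would be sent down the CSV branch first. A minimal sketch of a stricter check based on the file extension is shown here. The helper name detect_kind and the returned labels are assumptions for illustration, not part of the dashboard.

```python
from pathlib import Path


def detect_kind(filename: str) -> str:
    """Classify an uploaded file by its extension (hypothetical helper)."""
    suffix = Path(filename).suffix.lower()
    if suffix == ".csv":
        return "csv"
    if suffix in {".xls", ".xlsx"}:
        return "excel"
    if suffix == ".pdf":
        return "pdf"
    return "unknown"


for name in ["paper.PDF", "csv_export.pdf", "pdf_notes.csv", "README"]:
    print(name, "->", detect_kind(name))
```

Only the final extension is inspected, so the rest of the name cannot trigger the wrong branch.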

As previously stated, the package I used for reading the PDF files was PyPDF2, and there are many others out there that one can use. Defining the classes at the beginning of the dashboard greatly reduced the clutter within the skeleton. Another trick that I found helpful was to parse the PDF right when it is uploaded and store the data frame further down in the code. For this example, we are going to display the text, summary, download date, and email addresses associated with a PDF file.

Once you click the upload button, a popup window of your file directory will open and you can select the PDF file you wish to parse (do not forget that for this setup, the PDF must be in the directory you set up for the dashboard!).

Once the file is uploaded, we can finish the function by returning the file name with the DateTime, and create a Plotly Dash checklist of the different features within the PDF that we want our dashboard to parse out and display. Shown below is the full body of the dashboard before the callbacks are defined.

    app.layout = html.Div(children=[
        html.Div(children=[
            html.H1(children='PDF Parser',
                    style={'textAlign': 'center',
                           'color': '#7FDBFF'})
        ]),
        html.Div([
            dcc.Upload(
                id='upload-data',
                children=html.Div([
                    'Drag and Drop or ',
                    html.A('Select Files')
                ]),
                style={
                    'width': '100%',
                    'height': '60px',
                    'lineHeight': '60px',
                    'borderWidth': '1px',
                    'borderStyle': 'dashed',
                    'borderRadius': '5px',
                    'textAlign': 'center',
                    'margin': '10px'
                },
                # Allow multiple files to be uploaded
                multiple=True
            ),
            # Returns info, above the datatable
            html.Div(id='output-datatable'),
            html.Div(id='output-data-upload'),  # output for the datatable
        ]),
    ])

    def parse_contents(contents, filename, date):
        content_type, content_string = contents.split(',')
        decoded = base64.b64decode(content_string)
        try:
            if 'csv' in filename:
                # Assume that the user uploaded a CSV file
                df = pd.read_csv(
                    io.StringIO(decoded.decode('utf-8')))
            elif 'xls' in filename:
                # Assume that the user uploaded an excel file
                df = pd.read_excel(io.BytesIO(decoded))
            elif 'pdf' in filename:
                # The PDF is read from the local directory, not from the uploaded bytes
                pdf = pdfReader(directory + '/' + filename)
                text = pdf.PDF_one_pager()
                emails = pdfParser.get_emails(text)
                ddate = pdf.get_publish_date()
                summary = pdfParser.get_summary(text, 0.1)
                urls, domains = pdfParser.get_urls_domains(text)
                df = pd.DataFrame({'Text': [text], 'Summary': [summary],
                                   'Download Date': [ddate], 'Emails': [emails],
                                   'URLs': [urls], 'Domain Names': [domains]})
        except Exception as e:
            print(e)
            return html.Div([
                'There was an error processing this file.'
            ])
        return html.Div([
            html.H5(filename),  # return the filename
            html.H6(datetime.datetime.fromtimestamp(date)),  # edit date
            dcc.Checklist(id='checklist', options=[
                {"label": "Text", "value": "Text"},
                {"label": "Summary", "value": "Summary"},
                {"label": "Download Date", "value": "Download Date"},
                {"label": "Email Addresses", "value": "Email Addresses"},
                {"label": "URLs", "value": "URLs"},
                {"label": "Domain Names", "value": "Domain Names"}
            ],
                value=[]),
            html.Hr(),
            dcc.Store(id='stored-data', data=df.to_dict('records')),
            html.Hr(),  # horizontal line
        ])
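In parse_contents, the upload arrives as a base64 string with a content-type prefix, and the function already decodes it into bytes. The sketch below isolates that step with a stand-in payload so it can be run without Dash. The helpers decode_upload and looks_like_pdf and the fake payload are assumptions made for illustration.

```python
import base64
import io


def decode_upload(contents: str) -> bytes:
    """Split 'data:<type>;base64,<payload>' and return the decoded bytes."""
    # maxsplit=1 keeps the unpacking safe even if a comma appears later on.
    content_type, content_string = contents.split(",", 1)
    return base64.b64decode(content_string)


def looks_like_pdf(raw: bytes) -> bool:
    """PDF files begin with the marker %PDF- followed by a version number."""
    return raw.startswith(b"%PDF-")


# Stand-in for what the upload component would deliver; not a real document.
fake_pdf = b"%PDF-1.4\n% minimal stand-in\n"
contents = "data:application/pdf;base64," + base64.b64encode(fake_pdf).decode("ascii")

raw = decode_upload(contents)
stream = io.BytesIO(raw)  # file-like object holding the uploaded bytes
print(looks_like_pdf(raw), stream.read(5))
```

A file-like object such as stream could presumably be handed to the PDF reader in place of a file opened from the local directory, which would remove the requirement that the PDF already sit in that folder. Treat that as an idea to verify against the PyPDF2 version you use, not as something the dashboard does today.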

Dashboard Checklist

The checklist. This is where we begin adding some functionality to the dashboard. Using the dcc.Checklist component, we can create a list that lets a user select which features of the PDF they want displayed. For example, maybe the user just wants to look at one feature at a time so they are not overwhelmed and can examine one specific pattern. Or maybe the user wants to compare the summary with the entire body of the text to see whether the summary is missing any information that should be added. What is convenient about the checklist is that you can look at different batches of features found within a PDF, which helps reduce sensory overload.

Once the PDF is uploaded, a checklist is displayed with none of the items selected. The next section shows what happens when we select the different options in the checklist!

Callbacks

Callbacks are extremely important in Plotly dashboards. They add functionality to the dashboard and make it interactive for the user. The first callback handles the upload and ensures that our files can be uploaded.

The second callback displays the different data in our data table. It does this by calling upon the stored data frame created when a PDF is uploaded. Which boxes of the checklist are selected determines what information is displayed in the data table.

    @app.callback(Output('output-datatable', 'children'),
                  Input('upload-data', 'contents'),
                  State('upload-data', 'filename'),
                  State('upload-data', 'last_modified'))
    def update_output(list_of_contents, list_of_names, list_of_dates):
        if list_of_contents is not None:
            children = [
                parse_contents(c, n, d) for c, n, d in
                zip(list_of_contents, list_of_names, list_of_dates)]
            return children

    @app.callback(Output('output-data-upload', 'children'),
                  Input('checklist', 'value'),
                  Input('stored-data', 'data'))
    def table_update(options_chosen, df_dict):
        if options_chosen == []:
            # Nothing is selected, so there is no table to draw
            return None
        df_copy = pd.DataFrame(df_dict)
        text = df_copy['Text']
        emails = df_copy['Emails']
        ddate = df_copy['Download Date']
        summary = df_copy['Summary']
        value_dct = {}
        for val in options_chosen:
            if val == 'Text':
                value_dct[val] = text
            if val == 'Summary':
                value_dct['Summary'] = summary
            if val == 'Download Date':
                value_dct['Download Date'] = ddate
            if val == 'Email Addresses':
                value_dct['Email'] = emails
            if val == 'URLs':
                value_dct['URLs'] = df_copy['URLs']
            if val == 'Domain Names':
                value_dct['Domain Names'] = df_copy['Domain Names']
        dff = pd.DataFrame(value_dct)
        return dash_table.DataTable(
            dff.to_dict('records'),
            [{'name': i, 'id': i} for i in dff.columns],
            export_format="csv",
            style_data={
                'whiteSpace': 'normal',
                'height': 'auto',
                'textAlign': 'left',
                'backgroundColor': 'rgb(50, 50, 50)',
                'color': 'white'},
            style_header={'textAlign': 'left',
                          'backgroundColor': 'rgb(30, 30, 30)',
                          'color': 'white'
                          })
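The heart of the second callback is a lookup from the ticked checklist values to columns of the stored record. Stripped of Dash and pandas, that logic can be sketched as below. The mapping reuses the option and column names from the code above, while select_columns and the sample record are hypothetical.

```python
from typing import Dict, List

# Checklist value -> column name in the stored record.
COLUMN_FOR_OPTION = {
    "Text": "Text",
    "Summary": "Summary",
    "Download Date": "Download Date",
    "Email Addresses": "Emails",
}


def select_columns(record: Dict[str, str], options_chosen: List[str]) -> Dict[str, str]:
    """Return only the fields of record that correspond to ticked options."""
    return {
        option: record[COLUMN_FOR_OPTION[option]]
        for option in options_chosen
        if option in COLUMN_FOR_OPTION and COLUMN_FOR_OPTION[option] in record
    }


record = {
    "Text": "full text of the document",
    "Summary": "short summary",
    "Download Date": "2017-05-24",
    "Emails": "{'alice@example.com'}",
}
print(select_columns(record, ["Summary", "Email Addresses"]))
```

Keeping the mapping in one dictionary means a new feature only needs one new entry, instead of another if statement in the callback.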

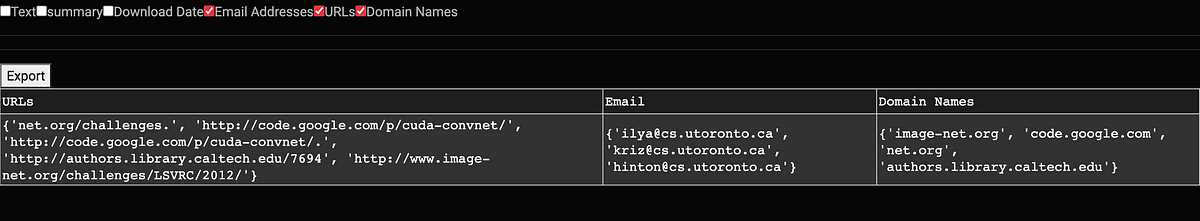

For example, what if we want to see the emails, URLs, and domain names located within the PDF? By checking those three selections in the checklist, that data will populate the table and display on the dashboard.

Lastly, while we could easily copy and paste the entries into another spreadsheet or database of our choice, the "export" button in the top left allows us to download and save the table to a CSV file!

And that's it! These few blocks of code are all it takes to perform basic parsing functions on a PDF in a Plotly dashboard.

Today we looked at one route a data scientist can take to upload a PDF to a Plotly dashboard and display certain content to a user. This can be very useful for someone who has to pull specific information from PDFs (e.g., a customer's email, name, and phone number) and needs to analyze that information. While this dashboard is basic, it can be tweaked for different styles of PDFs. For example, maybe you want to create a parsing dashboard that displays the citations in a research paper PDF you are reading, so you can either save those citations or use them to find other papers. Try this code out today, and if you add any cool functions, let me know!

Thanks for reading!

The full code for the dashboard is shown below.

    import pandas as pd
    from dash import dcc, Dash, html, dash_table
    import base64
    import datetime
    import io
    import PyPDF2  # the code below uses the pre-3.0 PyPDF2 names (PdfFileReader, getPage, ...)
    from dash.dependencies import Input, Output, State
    import re
    import dash_bootstrap_components as dbc
    import spacy
    from spacy.lang.en.stop_words import STOP_WORDS
    from string import punctuation
    from heapq import nlargest

    external_stylesheets = ['https://codepen.io/chriddyp/pen/bWLwgP.css']

    app = Dash(__name__, external_stylesheets=[dbc.themes.CYBORG], suppress_callback_exceptions=True)

    class pdfReader:

        def __init__(self, file_path: str) -> None:
            self.file_path = file_path

        def PDF_one_pager(self) -> str:
            """A function that accepts a file path to a pdf
            as input and returns a one line string of the
            pdf.

            Parameters:
            file_path(str): The file path to the pdf.

            Returns:
            one_page_pdf (str): A one line string of the pdf.
            """
            content = ""
            p = open(self.file_path, "rb")
            pdf = PyPDF2.PdfFileReader(p)
            num_pages = pdf.numPages
            for i in range(0, num_pages):
                content += pdf.getPage(i).extractText() + "\n"
            content = " ".join(content.replace(u"\xa0", " ").strip().split())
            page_number_removal = r"\d{1,3} of \d{1,3}"
            page_number_removal_pattern = re.compile(page_number_removal, re.IGNORECASE)
            content = re.sub(page_number_removal_pattern, '', content)
            return content

        def pdf_reader(self) -> PyPDF2.PdfFileReader:
            """A function that can read .pdf formatted files
            and returns a python readable pdf.

            Parameters:
            self (obj): An object of the class pdfReader

            Returns:
            read_pdf: A python readable .pdf file.
            """
            opener = open(self.file_path, 'rb')
            read_pdf = PyPDF2.PdfFileReader(opener)
            return read_pdf

        def pdf_info(self) -> dict:
            """A function which returns an information dictionary
            of an object associated with the pdfReader class.

            Parameters:
            self (obj): An object of the pdfReader class.

            Returns:
            dict(pdf_info_dict): A dictionary containing the meta
            data of the object.
            """
            opener = open(self.file_path, 'rb')
            read_pdf = PyPDF2.PdfFileReader(opener)
            pdf_info_dict = {}
            for key, value in read_pdf.documentInfo.items():
                pdf_info_dict[re.sub('/', "", key)] = value
            return pdf_info_dict

        def pdf_dictionary(self) -> dict:
            """A function which returns a dictionary of
            the object where the keys are the pages
            and the text within the pages are the
            values.

            Parameters:
            obj (self): An object of the pdfReader class.

            Returns:
            dict(pdf_dict): A dictionary of the object within the
            pdfReader class.
            """
            opener = open(self.file_path, 'rb')
            read_pdf = PyPDF2.PdfFileReader(opener)
            length = read_pdf.numPages
            pdf_dict = {}
            for i in range(length):
                page = read_pdf.getPage(i)
                text = page.extract_text()
                pdf_dict[i] = text
            return pdf_dict

        def get_publish_date(self) -> str:
            """A function that reads the information dictionary of an object
            in the pdfReader class and returns the creation date of the
            object (if applicable).

            Parameters:
            self (obj): An object of the pdfReader class

            Returns:
            pub_date (str): The publication date which is assumed to be the
            creation date (if applicable).
            """
            info_dict_pdf = self.pdf_info()
            pub_date = 'None'
            try:
                publication_date = info_dict_pdf['CreationDate']
                publication_date = datetime.datetime.strptime(publication_date.replace("'", ""), "D:%Y%m%d%H%M%S%z")
                pub_date = publication_date.isoformat()[0:10]
            except Exception:
                # No creation date, or a date in an unexpected format
                pass
            return str(pub_date)

    class pdfParser:

        def __init__(self):
            return

        @staticmethod
        def get_emails(text: str) -> str:
            """A function that accepts a string of text and
            returns any email addresses located within the
            text

            Parameters:
            text (str): A string of text

            Returns:
            str(email_set): The set of emails located within
            the string of text, rendered as a string.
            """
            email_pattern = re.compile(r'[\w.+-]+@[\w-]+\.[\w.-]+')
            email_set = set()
            email_set.update(email_pattern.findall(text))
            return str(email_set)

        @staticmethod
        def get_dates(text: str, info_dict_pdf: dict) -> str:
            date_label = ['DATE']
            nlp = spacy.load('en_core_web_lg')
            doc = nlp(text)
            dates_pattern = re.compile(r'(\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3})')
            dates = set((ent.text) for ent in doc.ents if ent.label_ in date_label)
            filtered_dates = set(date for date in dates if not dates_pattern.match(date))
            return str(filtered_dates)

        @staticmethod
        def get_summary(text, per):
            nlp = spacy.load('en_core_web_sm')
            doc = nlp(text)
            word_frequencies = {}
            for word in doc:
                if word.text.lower() not in list(STOP_WORDS):
                    if word.text.lower() not in punctuation:
                        if word.text not in word_frequencies.keys():
                            word_frequencies[word.text] = 1
                        else:
                            word_frequencies[word.text] += 1
            max_frequency = max(word_frequencies.values())
            for word in word_frequencies.keys():
                word_frequencies[word] = word_frequencies[word] / max_frequency
            sentence_tokens = [sent for sent in doc.sents]
            sentence_scores = {}
            for sent in sentence_tokens:
                for word in sent:
                    if word.text.lower() in word_frequencies.keys():
                        if sent not in sentence_scores.keys():
                            sentence_scores[sent] = word_frequencies[word.text.lower()]
                        else:
                            sentence_scores[sent] += word_frequencies[word.text.lower()]
            select_length = int(len(sentence_tokens) * per)
            summary = nlargest(select_length, sentence_scores, key=sentence_scores.get)
            final_summary = [word.text for word in summary]
            summary = ' '.join(final_summary)
            return summary

    directory = '/Users/benmccloskey/Desktop/pdf_dashboard/files'

    app.layout = html.Div(children=[
        html.Div(children=[
            html.H1(children='PDF Parser',
                    style={'textAlign': 'center',
                           'color': '#7FDBFF'})
        ]),
        html.Div([
            dcc.Upload(
                id='upload-data',
                children=html.Div([
                    'Drag and Drop or ',
                    html.A('Select Files')
                ]),
                style={
                    'width': '100%',
                    'height': '60px',
                    'lineHeight': '60px',
                    'borderWidth': '1px',
                    'borderStyle': 'dashed',
                    'borderRadius': '5px',
                    'textAlign': 'center',
                    'margin': '10px'
                },
                # Allow multiple files to be uploaded
                multiple=True
            ),
            # Returns info, above the datatable
            html.Div(id='output-datatable'),
            html.Div(id='output-data-upload'),  # output for the datatable
        ]),
    ])

    def parse_contents(contents, filename, date):
        content_type, content_string = contents.split(',')
        decoded = base64.b64decode(content_string)
        try:
            if 'csv' in filename:
                # Assume that the user uploaded a CSV file
                df = pd.read_csv(
                    io.StringIO(decoded.decode('utf-8')))
            elif 'xls' in filename:
                # Assume that the user uploaded an excel file
                df = pd.read_excel(io.BytesIO(decoded))
            elif 'pdf' in filename:
                pdf = pdfReader(directory + '/' + filename)
                text = pdf.PDF_one_pager()
                emails = pdfParser.get_emails(text)
                ddate = pdf.get_publish_date()
                summary = pdfParser.get_summary(text, 0.1)
                df = pd.DataFrame({'Text': [text], 'Summary': [summary],
                                   'Download Date': [ddate], 'Emails': [emails]})
        except Exception as e:
            print(e)
            return html.Div([
                'There was an error processing this file.'
            ])
        return html.Div([
            html.H5(filename),  # return the filename
            html.H6(datetime.datetime.fromtimestamp(date)),  # edit date
            dcc.Checklist(id='checklist', options=[
                {"label": "Text", "value": "Text"},
                {"label": "Summary", "value": "Summary"},
                {"label": "Download Date", "value": "Download Date"},
                {"label": "Email Addresses", "value": "Email Addresses"}
            ],
                value=[]),
            html.Hr(),
            dcc.Store(id='stored-data', data=df.to_dict('records')),
            html.Hr(),  # horizontal line
        ])

    @app.callback(Output('output-datatable', 'children'),
                  Input('upload-data', 'contents'),
                  State('upload-data', 'filename'),
                  State('upload-data', 'last_modified'))
    def update_output(list_of_contents, list_of_names, list_of_dates):
        if list_of_contents is not None:
            children = [
                parse_contents(c, n, d) for c, n, d in
                zip(list_of_contents, list_of_names, list_of_dates)]
            return children

    @app.callback(Output('output-data-upload', 'children'),
                  Input('checklist', 'value'),
                  Input('stored-data', 'data'))
    def table_update(options_chosen, df_dict):
        if options_chosen == []:
            # Nothing is selected, so there is no table to draw
            return None
        df_copy = pd.DataFrame(df_dict)
        text = df_copy['Text']
        emails = df_copy['Emails']
        ddate = df_copy['Download Date']
        summary = df_copy['Summary']
        value_dct = {}
        for val in options_chosen:
            if val == 'Text':
                value_dct[val] = text
            if val == 'Summary':
                value_dct['Summary'] = summary
            if val == 'Download Date':
                value_dct['Download Date'] = ddate
            if val == 'Email Addresses':
                value_dct['Email'] = emails
        dff = pd.DataFrame(value_dct)
        return dash_table.DataTable(
            dff.to_dict('records'),
            [{'name': i, 'id': i} for i in dff.columns],
            style_data={
                'whiteSpace': 'normal',
                'height': 'auto',
                'textAlign': 'left',
                'backgroundColor': 'rgb(50, 50, 50)',
                'color': 'white'},
            style_header={'textAlign': 'left',
                          'backgroundColor': 'rgb(30, 30, 30)',
                          'color': 'white'
                          })

    if __name__ == '__main__':
        app.run_server(debug=True)

[1] Krizhevsky, Alex, Ilya Sutskever, and Geoffrey E. Hinton. "ImageNet classification with deep convolutional neural networks." Communications of the ACM 60.6 (2017): 84–90.