With Kubernetes, the ability to dynamically scale infrastructure based on demand is a significant benefit. It provides multiple layers of autoscaling functionality: the Horizontal Pod Autoscaler (HPA) and the Vertical Pod Autoscaler (VPA) at the pod level, and the Cluster Autoscaler at the node level.

However, setting up cluster autoscaling with existing Kubernetes solutions can be difficult and restrictive. For example, in an AWS EKS cluster, you cannot manage nodes directly. Instead, we need to use additional orchestration mechanisms such as node groups. Suppose we have defined t3.large as the instance type of a node group. When a new node needs to be provisioned for the cluster, Kubernetes Cluster Autoscaler creates a new instance of type t3.large, regardless of the pending pods' resource requirements. Although we can use mixed instances in node groups, it's not always possible to meet resource demands and be cost effective.

Kubernetes Autoscaling

Kubernetes autoscaling helps us scale our applications out or in. Pod-based scaling with the HPA is a great first step. The problem comes when we need more K8S nodes to hold our pods.

Karpenter

Karpenter is a node-based scaling solution created for K8S that aims to improve efficiency and cost. It's a great solution because we don't have to configure instance types or create node pools, which drastically simplifies configuration. In addition, integration with Spot Instances is painless and allows us to reduce our costs (up to 90% less than On-Demand Instances).

Karpenter is an open source node provisioning project designed for Kubernetes. Adding Karpenter to a Kubernetes cluster can significantly improve the efficiency and cost of running workloads on that cluster.

Features of Karpenter

- Watches for pods that the Kubernetes scheduler has marked as unschedulable.

- Evaluates the scheduling constraints requested by the pods (resource requests, node selectors, affinities, tolerations, and topology spread constraints).

- Provisions nodes that meet the requirements of the pods.

- Schedules the pods to run on the new nodes.

- Removes nodes when they are no longer needed.

Control Loops of Karpenter

Karpenter has two control loops that maximize the availability and efficiency of your cluster.

Allocator

A fast-acting controller that ensures pods are scheduled as quickly as possible.

Reallocator

A slow-acting controller that replaces nodes as pod capacity shifts over time.

Cluster Autoscaler

Cluster Autoscaler is a Kubernetes tool that increases or decreases the size of a Kubernetes cluster (by adding or removing nodes), based on the presence of pending pods and node utilization metrics.

It automatically resizes the Kubernetes cluster when one of the following conditions is met:

- There are pods that cannot run on the cluster due to insufficient resources.

- There are nodes in the cluster that have been underutilized for an extended period of time and their pods can be placed on other existing nodes.
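The first condition can be observed from the command line. The sketch below is only an approximation: it counts pods in the Pending phase, which also includes pods that are scheduled but still pulling images, so it can over-count truly unschedulable pods. The function names are hypothetical helpers for this example and assume `kubectl` is pointed at the cluster.

```shell
# List every pod in the Pending phase, across all namespaces.
# Pending is used here as a rough stand-in for "cannot be scheduled".
pending_pods() {
  kubectl get pods --all-namespaces \
    --field-selector=status.phase=Pending --no-headers
}

# Count the pending pods (one pod per output line).
count_pending_pods() {
  pending_pods | wc -l | tr -d ' '
}

# Usage (requires access to a cluster):
#   echo "Pending pods: $(count_pending_pods)"
```

A count that stays above zero for a while is the kind of signal a node autoscaler reacts to.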

A Kubernetes node autoscaling solution is a tool that automatically scales the Kubernetes cluster based on the demands of our workloads. Therefore, there is no need to manually create (or delete) a Kubernetes node every time we need one.

Karpenter automatically provisions new nodes in response to unschedulable pods. It does this by observing events within the Kubernetes cluster and then sending commands to the underlying cloud provider. It is designed to work with any Kubernetes cluster in any environment.

Architecture of Cluster Autoscaler

- The Cluster Autoscaler looks for pods that cannot be scheduled and nodes that are underutilized.

- It then simulates adding or removing nodes before applying the change to your cluster.

- The AWS cloud provider implementation in Cluster Autoscaler controls the .DesiredReplicas field of your EC2 Auto Scaling groups.

- The Kubernetes Cluster Autoscaler automatically adjusts the number of nodes in your cluster when pods fail or are rescheduled onto other nodes.

- The Cluster Autoscaler is typically installed as a Deployment in your cluster.

Architecture of Karpenter

Karpenter works with the Kubernetes scheduler, observing incoming pods throughout the lifetime of the cluster. It launches or terminates nodes to optimize application availability and cluster utilization. When there is enough capacity in the cluster, the Kubernetes scheduler places the incoming pods as usual.

When pods are launched that cannot be scheduled using existing cluster capacity, Karpenter bypasses the Kubernetes scheduler and works directly with your provider's compute service to launch the minimal compute resources needed for those pods, and binds the pods to the nodes it provisions.

When pods are removed, Karpenter looks for opportunities to terminate underutilized nodes.

Karpenter claims to offer the following improvements:

Designed to handle the full flexibility of the cloud

Karpenter can efficiently address the full range of instance types available through AWS. Cluster Autoscaler was not originally built with the flexibility to handle hundreds of instance types, zones, and purchase options.

Group-less node provisioning

Karpenter manages each instance directly, without using additional orchestration mechanisms such as node groups. This allows it to retry in milliseconds instead of minutes when capacity is unavailable. It also allows Karpenter to take advantage of diverse instance types, Availability Zones, and purchase options without creating hundreds of node groups.

Scheduling enforcement

Cluster Autoscaler doesn't bind pods to the nodes it creates. Instead, it relies on the kube-scheduler to make the same scheduling decision after the node has come online. Karpenter, by contrast, binds the pods to a node as soon as it launches that node, so the kubelet doesn't have to wait for the scheduler or for the node to become ready. It can start preparing the container runtime immediately, including pre-pulling the image. This can shave several seconds off node startup latency.

In this tutorial you will learn how to:

- Create an EKS cluster for Karpenter.

- Configure AWS roles.

- Install Karpenter.

- Configure a Karpenter Provisioner.

- Test Karpenter node autoscaling.

Requirements

- AWS CLI

- eksctl

- kubectl

- helm
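Before starting, it can help to confirm that all four tools are on the PATH. The following is a minimal sketch; `missing_tools` is a hypothetical helper name, not part of any of these CLIs.

```shell
# Print the name of every tool passed as an argument that is not on the PATH.
missing_tools() {
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool"
    fi
  done
}

# Report anything from the requirements list that still needs installing.
missing=$(missing_tools aws eksctl kubectl helm)
if [ -n "$missing" ]; then
  echo "Missing tools:" $missing
fi
```

The check only verifies that the binaries exist; it does not check their versions.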

Create EKS cluster for Karpenter

Before we continue, we need to configure some environment variables:

export CLUSTER_NAME=YOUR-CLUSTER-NAME

export AWS_ACCOUNT_ID=YOUR-ACCOUNT-ID
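Instead of typing the account ID by hand, it can be read from the credentials currently in use. This is a sketch that assumes the AWS CLI is already configured; `is_account_id` and `lookup_account_id` are hypothetical helper names introduced for this example.

```shell
# Succeed only if the argument looks like an AWS account ID (exactly 12 digits).
is_account_id() {
  case "$1" in
    "" | *[!0-9]*) return 1 ;;
  esac
  [ "${#1}" -eq 12 ]
}

# Ask AWS STS which account the current credentials belong to.
lookup_account_id() {
  aws sts get-caller-identity --query Account --output text
}

# Usage (requires configured AWS credentials):
#   export AWS_ACCOUNT_ID=$(lookup_account_id)
#   is_account_id "$AWS_ACCOUNT_ID" || echo "unexpected account ID: $AWS_ACCOUNT_ID" >&2
```

Validating the value early avoids building malformed IAM ARNs in the later steps.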

Creating a cluster with eksctl is the easiest way to do this on AWS. First we need to create a YAML file, for example test-demo.yaml:

cat <<EOF > test-demo.yaml
---
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: ${CLUSTER_NAME}
  region: us-east-1
  version: "1.21"
  tags:
    karpenter.sh/discovery: ${CLUSTER_NAME}
managedNodeGroups:
  - instanceType: t3.medium
    amiFamily: AmazonLinux2
    name: ${CLUSTER_NAME}-ng
    desiredCapacity: 1
    minSize: 1
    maxSize: 3
iam:
  withOIDC: true
EOF

Create the cluster using the generated file:

eksctl create cluster -f test-demo.yaml

Configure AWS Roles

To use Karpenter on AWS we need to configure 3 permissions:

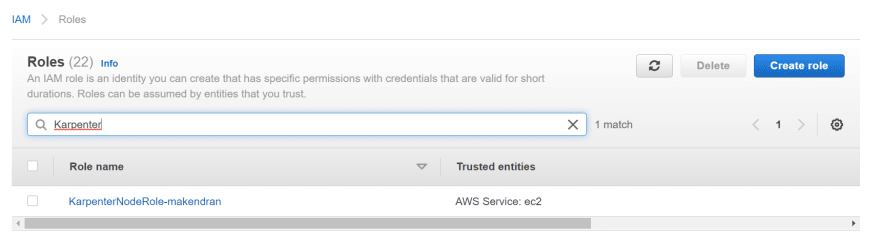

KarpenterNode IAM role

Instance profile with permissions to run containers and configure networking

Creating the KarpenterNode IAM Role

- We need to create the IAM resources using AWS CloudFormation. Download the CloudFormation stack from the Karpenter website and deploy it with our cluster name.

curl -fsSL https://karpenter.sh/v0.5.5/getting-started/cloudformation.yaml > cloudformation.tmp

[ec2-user@ip-*-*-*-* ~]$ aws cloudformation deploy --stack-name ${CLUSTER_NAME} --template-file cloudformation.tmp --capabilities CAPABILITY_NAMED_IAM --parameter-overrides ClusterName=${CLUSTER_NAME}
Waiting for changeset to be created..
Waiting for stack create/update to complete
Successfully created/updated stack - makendran

- We need to grant the instances that use this profile access to connect to the cluster. This command adds the Karpenter node role to the aws-auth ConfigMap so that nodes with this role can connect to the cluster.

[ec2-user@ip-*-*-*-* ~]$ eksctl create iamidentitymapping --username system:node:{{EC2PrivateDNSName}} --cluster ${CLUSTER_NAME} --arn arn:aws:iam::${AWS_ACCOUNT_ID}:role/KarpenterNodeRole-${CLUSTER_NAME} --group system:bootstrappers --group system:nodes
2022-08-10 07:57:55 [ℹ] adding identity "arn:aws:iam::262211057611:role/KarpenterNodeRole-makendran" to auth ConfigMap

Karpenter can now launch new EC2 instances, and those instances can connect to your cluster.

KarpenterController IAM role

Permission to launch instances

Create the KarpenterController IAM role

Create an AWS IAM role and a Kubernetes service account, and associate them using IAM Roles for Service Accounts (IRSA).

[ec2-user@ip-*-*-*-* ~]$ eksctl create iamserviceaccount --cluster $CLUSTER_NAME --name karpenter --namespace karpenter --attach-policy-arn arn:aws:iam::$AWS_ACCOUNT_ID:policy/KarpenterControllerPolicy-$CLUSTER_NAME --approve
2022-08-10 07:59:39 [ℹ] 1 existing iamserviceaccount(s) (kube-system/aws-node) will be excluded
2022-08-10 07:59:39 [ℹ] 1 iamserviceaccount (karpenter/karpenter) was included (based on the include/exclude rules)
2022-08-10 07:59:39 [!] serviceaccounts that exist in Kubernetes will be excluded, use --override-existing-serviceaccounts to override
2022-08-10 07:59:39 [ℹ] 1 task: {
    2 sequential sub-tasks: {
        create IAM role for serviceaccount "karpenter/karpenter",
        create serviceaccount "karpenter/karpenter",
    } }
2022-08-10 07:59:39 [ℹ] building iamserviceaccount stack "eksctl-makendran-addon-iamserviceaccount-karpenter-karpenter"
2022-08-10 07:59:39 [ℹ] deploying stack "eksctl-makendran-addon-iamserviceaccount-karpenter-karpenter"
2022-08-10 07:59:39 [ℹ] waiting for CloudFormation stack "eksctl-makendran-addon-iamserviceaccount-karpenter-karpenter"
2022-08-10 08:00:09 [ℹ] waiting for CloudFormation stack "eksctl-makendran-addon-iamserviceaccount-karpenter-karpenter"
2022-08-10 08:00:09 [ℹ] created namespace "karpenter"
2022-08-10 08:00:09 [ℹ] created serviceaccount "karpenter/karpenter"

EC2 Spot service-linked role

This role allows us to run EC2 Spot Instances in our account. An EC2 Spot Instance is an unused EC2 instance that is available for less than the On-Demand price.

Create the EC2 Spot service-linked role

This step is only required if this is your first time using EC2 Spot in this account.

[ec2-user@ip-*-*-*-* ~]$ aws iam create-service-linked-role --aws-service-name spot.amazonaws.com

{
    "Role": {
        "Path": "/aws-service-role/spot.amazonaws.com/",
        "RoleName": "AWSServiceRoleForEC2Spot",
        "RoleId": "AROAT2DH7G7F6JRN6LIPO",
        "Arn": "arn:aws:iam::262211057611:role/aws-service-role/spot.amazonaws.com/AWSServiceRoleForEC2Spot",
        "CreateDate": "2022-08-10T08:00:58+00:00",
        "AssumeRolePolicyDocument": {
            "Version": "2012-10-17",
            "Statement": [
                {
                    "Action": [
                        "sts:AssumeRole"
                    ],
                    "Effect": "Allow",
                    "Principal": {
                        "Service": [
                            "spot.amazonaws.com"
                        ]
                    }
                }
            ]
        }
    }
}

Install Karpenter

We can use Helm to install Karpenter.

helm repo add karpenter https://charts.karpenter.sh

helm repo update

helm upgrade --install karpenter karpenter/karpenter --namespace karpenter \
  --create-namespace --set serviceAccount.create=false --version v0.5.5 \
  --set controller.clusterName=${CLUSTER_NAME} \
  --set controller.clusterEndpoint=$(aws eks describe-cluster --name ${CLUSTER_NAME} --query "cluster.endpoint" --output json) \
  --wait # for the defaulting webhook to install before creating a Provisioner

Check Karpenter's resources on K8S.

[ec2-user@ip-*-*-*-* ~]$ kubectl get all -n karpenter

NAME                                        READY   STATUS    RESTARTS   AGE
pod/karpenter-controller-6474d8f77b-sntgb   1/1     Running   0          2m24s
pod/karpenter-webhook-7797fd8b6d-7tcd6      1/1     Running   0          2m24s

NAME                        TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
service/karpenter-metrics   ClusterIP   10.100.122.232   <none>        8080/TCP   8m37s
service/karpenter-webhook   ClusterIP   10.100.197.109   <none>        443/TCP    8m37s

NAME                                   READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/karpenter-controller   1/1     1            1           8m37s
deployment.apps/karpenter-webhook      1/1     1            1           8m37s

NAME                                              DESIRED   CURRENT   READY   AGE
replicaset.apps/karpenter-controller-54d76d6f98   0         0         0       8m37s
replicaset.apps/karpenter-controller-6474d8f77b   1         1         1       2m24s
replicaset.apps/karpenter-webhook-7797fd8b6d      1         1         1       2m24s
replicaset.apps/karpenter-webhook-95d6dd84        0         0         0       8m37s
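Rather than reading the listing by eye, the rollout of the two Deployments shown above can be waited on explicitly. This is a sketch: `wait_for_karpenter` is a hypothetical helper name, and the 120-second timeout is an arbitrary choice for illustration.

```shell
# Block until both Karpenter Deployments report a completed rollout.
# Returns non-zero as soon as one of them fails or times out.
wait_for_karpenter() {
  for deployment in karpenter-controller karpenter-webhook; do
    kubectl rollout status "deployment/$deployment" \
      --namespace karpenter --timeout=120s || return 1
  done
}

# Usage (requires access to the cluster):
#   wait_for_karpenter && echo "Karpenter is ready"
```

This is handy in scripts, where the next step (creating the Provisioner) should not run until the webhook is available.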

Configure Karpenter provisioner

A Karpenter Provisioner manages different provisioning decisions based on pod attributes such as labels and affinities.

cat <<EOF > provisioner.yaml
---
apiVersion: karpenter.sh/v1alpha5
kind: Provisioner
metadata:
  name: default
spec:
  requirements:
    - key: karpenter.sh/capacity-type
      operator: In
      values: ["spot"]
  limits:
    resources:
      cpu: 1000
  provider:
    subnetSelector:
      karpenter.sh/discovery: ${CLUSTER_NAME}
    securityGroupSelector:
      karpenter.sh/discovery: ${CLUSTER_NAME}
    instanceProfile: KarpenterNodeInstanceProfile-${CLUSTER_NAME}
  ttlSecondsAfterEmpty: 30
EOF

kubectl apply -f provisioner.yaml

Karpenter is ready to start provisioning nodes.

Karpenter Node Autoscaling Test

This deployment uses the pause image and starts with zero replicas.

cat <<EOF > deployment.yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: inflate
spec:
  replicas: 0
  selector:
    matchLabels:
      app: inflate
  template:
    metadata:
      labels:
        app: inflate
    spec:
      terminationGracePeriodSeconds: 0
      containers:
        - name: inflate
          image: public.ecr.aws/eks-distro/kubernetes/pause:3.2
          resources:
            requests:
              cpu: 1
EOF

kubectl apply -f deployment.yaml

Now we can scale the deployment to 4 replicas:

kubectl scale deployment inflate --replicas 4

Now we can check Karpenter's logs.

kubectl logs -f -n karpenter $(kubectl get pods -n karpenter -l karpenter=controller -o name)
2022-08-10T08:32:46.737Z INFO controller.provisioning Launched instance: i-0d1406393eaee0b4c, hostname: ip-192-168-121-175.ec2.internal, type: t3.2xlarge, zone: us-east-1c, capacityType: spot {"commit": "723b1b7", "provisioner": "default"}
2022-08-10T08:32:46.780Z INFO controller.provisioning Bound 4 pod(s) to node ip-192-168-121-175.ec2.internal {"commit": "723b1b7", "provisioner": "default"}
2022-08-10T08:32:46.780Z INFO controller.provisioning Waiting for unschedulable pods {"commit": "723b1b7", "provisioner": "default"}

Karpenter created a new instance.

- Instance: i-0d1406393eaee0b4c

- Hostname: ip-192-168-121-175.ec2.internal

- Type: t3.2xlarge

- Zone: us-east-1c
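One plausible reading of why a t3.2xlarge was chosen: the four replicas each request 1 CPU, and a node never offers its full vCPU count to pods because some CPU is reserved for the system and for daemon pods. The sketch below illustrates that arithmetic only. It is not Karpenter's actual selection logic, the 100m reserved figure is a made-up placeholder, and `smallest_fit` is a hypothetical helper; only the vCPU counts of the t3 sizes are taken from the EC2 instance type tables.

```shell
# vCPU capacity of a few t3 sizes, in millicores, ordered smallest first.
T3_SIZES="t3.medium:2000 t3.large:2000 t3.xlarge:4000 t3.2xlarge:8000"

# smallest_fit REQUESTED_MILLICORES RESERVED_MILLICORES
# Print the first size whose capacity minus the reserved CPU covers the
# request. Returns non-zero if nothing in the table is large enough.
smallest_fit() {
  requested=$1
  reserved=$2
  for entry in $T3_SIZES; do
    name=${entry%%:*}
    capacity=${entry##*:}
    if [ $((capacity - reserved)) -ge "$requested" ]; then
      echo "$name"
      return 0
    fi
  done
  return 1
}

# 4 replicas x 1000m each, with an assumed 100m reserved per node.
smallest_fit 4000 100   # prints t3.2xlarge
```

With any non-zero reservation, a 4 vCPU t3.xlarge cannot hold four 1-CPU pods, so the next size up is the smallest candidate in this table.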

We can check the nodes:

[ec2-user@ip-*-*-*-* ~]$ kubectl get nodes
NAME                              STATUS   ROLES    AGE     VERSION
ip-192-168-121-175.ec2.internal   Ready    <none>   8m34s   v1.21.12-eks-5308cf7
ip-192-168-39-32.ec2.internal     Ready    <none>   52m     v1.21.12-eks-5308cf7

We now have a new worker node. The node is an EC2 Spot Instance. We can check the SpotPrice.

[ec2-user@ip-*-*-*-* ~]$ aws ec2 describe-spot-instance-requests | grep -E "InstanceType|InstanceId|SpotPrice"

"InstanceId": "i-0d1406393eaee0b4c",

"InstanceType": "t3.2xlarge",

"SpotPrice": "0.332800",

Finally, we can scale the deployment to 0 to check whether the node is removed:

kubectl scale deployment inflate --replicas 0

Karpenter cordoned the node and then removed it.

kubectl logs -f -n karpenter $(kubectl get pods -n karpenter -l karpenter=controller -o name)
2022-08-10T08:32:46.780Z INFO controller.provisioning Waiting for unschedulable pods {"commit": "723b1b7", "provisioner": "default"}
2022-08-10T08:47:14.742Z INFO controller.node Added TTL to empty node {"commit": "723b1b7", "node": "ip-192-168-121-175.ec2.internal"}
2022-08-10T08:47:44.763Z INFO controller.node Triggering termination after 30s for empty node {"commit": "723b1b7", "node": "ip-192-168-121-175.ec2.internal"}
2022-08-10T08:47:44.790Z INFO controller.termination Cordoned node {"commit": "723b1b7", "node": "ip-192-168-121-175.ec2.internal"}
2022-08-10T08:47:45.038Z INFO controller.termination Deleted node {"commit": "723b1b7", "node": "ip-192-168-121-175.ec2.internal"}
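The gap between the two controller.node log lines can be checked against the ttlSecondsAfterEmpty value of 30 set in the Provisioner. The sketch below does the arithmetic in plain shell; `seconds_of_day` is a hypothetical helper, and the fractional seconds from the log are dropped.

```shell
# Convert an HH:MM:SS time of day to seconds since midnight.
seconds_of_day() {
  h=${1%%:*}
  rest=${1#*:}
  m=${rest%%:*}
  s=${rest#*:}
  # Strip one leading zero so values such as "08" are not parsed as octal.
  echo $(( ${h#0} * 3600 + ${m#0} * 60 + ${s#0} ))
}

# Times taken from the "Added TTL" and "Triggering termination" log lines.
ttl_added="08:47:14"
termination="08:47:44"

# Prints 30, matching ttlSecondsAfterEmpty in the Provisioner.
echo $(( $(seconds_of_day "$termination") - $(seconds_of_day "$ttl_added") ))
```

Raising ttlSecondsAfterEmpty would keep empty nodes around longer, which trades cost for faster placement if new pods arrive shortly after a scale-down.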

Check the nodes again. The second node has been removed.

[ec2-user@ip-*-*-*-* ~]$ kubectl get nodes
NAME                            STATUS   ROLES    AGE   VERSION
ip-192-168-39-32.ec2.internal   Ready    <none>   60m   v1.21.12-eks-5308cf7

Cleanup

To avoid additional charges, remove the demo infrastructure from your AWS account.

helm uninstall karpenter --namespace karpenter

eksctl delete iamserviceaccount --cluster ${CLUSTER_NAME} --name karpenter --namespace karpenter

aws cloudformation delete-stack --stack-name ${CLUSTER_NAME}

aws ec2 describe-launch-templates \
    | jq -r ".LaunchTemplates[].LaunchTemplateName" \
    | grep -i Karpenter-${CLUSTER_NAME} \
    | xargs -I{} aws ec2 delete-launch-template --launch-template-name {}

eksctl delete cluster --name ${CLUSTER_NAME}

Conclusion

Karpenter is a great tool for configuring the autoscaling of Kubernetes nodes, although it is quite new. It integrates with Spot and Fargate (serverless) instances and has other great features. The best part is that you don't need to configure node pools or choose the size of the instances. It is also very fast: it takes about 1 minute to deploy the pods to the new node.

Thank you for reading my article to the end. I hope you learned something new today. If you enjoyed this article, please share it with your friends, and if you have suggestions or thoughts to share with me, please write them in the comment box.