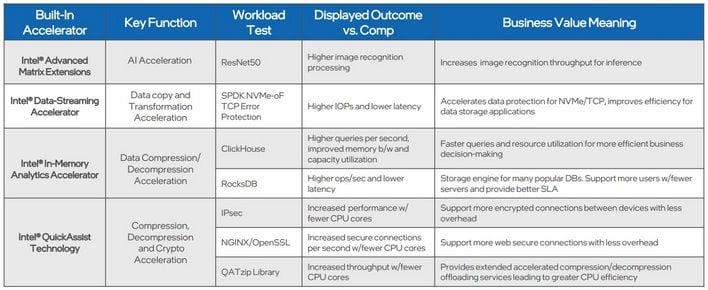

The workloads employed comprised common cloud data center tasks like data compression/decompression, IPsec encryption throughput, AI image classification, secure web server SSL handshaking, and database query performance. However, before we dig into the details, let’s quickly recap what we’ve covered previously about Intel’s 4th Gen Xeons.

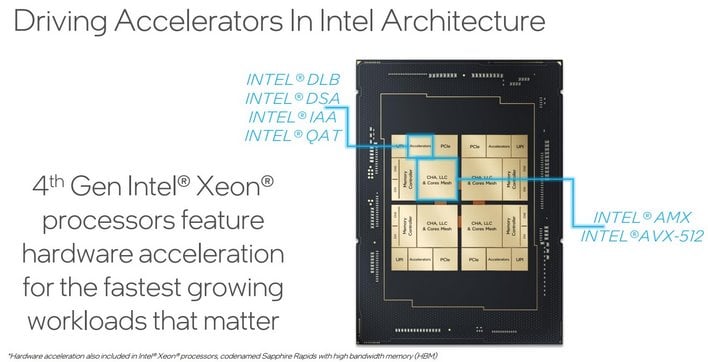

Intel 4th Gen Xeon Scalable Accelerators Detailed

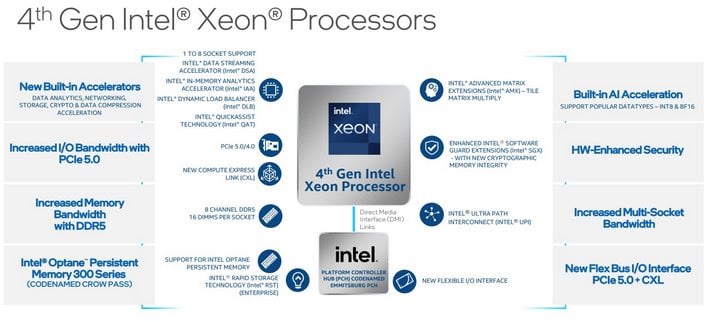

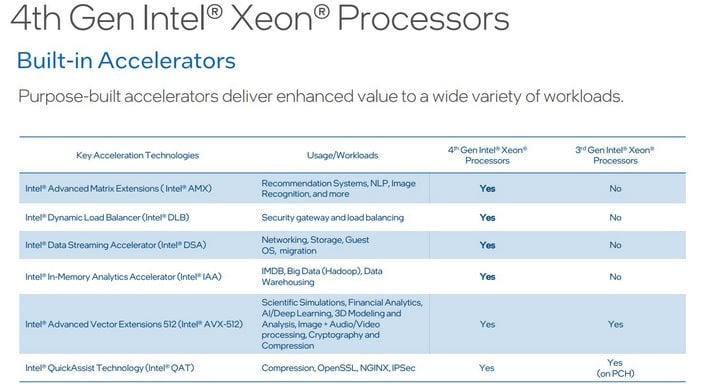

With Sapphire Rapids, it’s not just about the chips’ enhanced Golden Cove CPU cores, built on the Intel 7 process. This new Intel 4th Gen Xeon Scalable server CPU microarchitecture has dedicated hardware accelerators on board that can provide significant performance uplifts in an array of common data center workloads and applications.

Intel 4th Gen Xeon Scalable Sapphire Rapids 2P Server

- Node 1: 2x pre-production 4th Gen Intel Xeon Scalable processors (60 core) with Intel Advanced Matrix Extensions (Intel AMX), on a pre-production Intel platform and software with 1024GB DDR5 memory (16x64GB), microcode 0xf000380, HT On, Turbo On, SNC Off.

- Node 2: 2x AMD EPYC 7763 processors (64 core) on a GIGABYTE R282-Z92 with 1024GB DDR4 memory (16x64GB), microcode 0xa001144, SMT On, Boost On.

All test results below were obtained in September 2022 on systems Intel configured. With that backdrop and those configuration details set, let’s get to some benchmark data.

Intel 4th Gen Xeon Scalable Accelerator Benchmarked

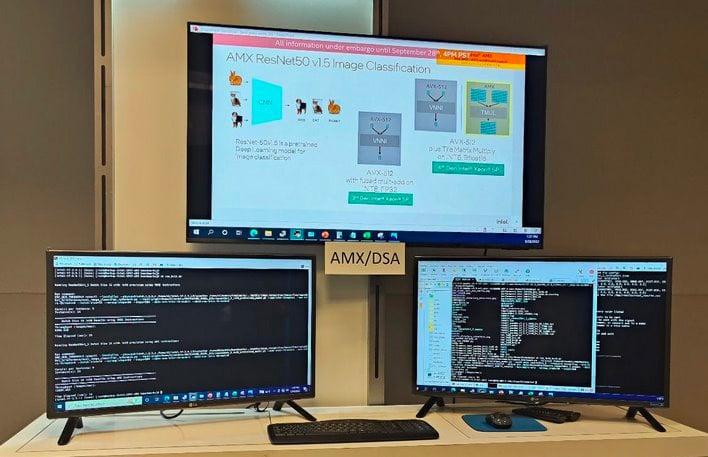

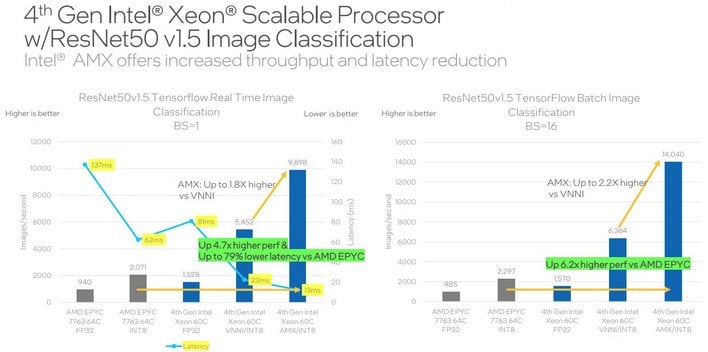

Using a pretrained deep learning AI model for image classification, Intel demonstrated performance gains with just AVX-512 and Intel VNNI (Vector Neural Network Instructions) AVX-2 acceleration, and then again with AVX-512 and Intel AMX (Advanced Matrix Extensions) performing tile matrix multiplies to accelerate things further. Here are the results…

ResNet50v1.5 TensorFlow AI Image Classification Performance With Intel AMX

As you can see, there’s a dramatic speed-up in the number of images processed per second, and a huge reduction in latency, with Intel VNNI employed alone at INT8 precision. However, kick in the 4th Gen Xeon’s AMX matrix multiply array and the test showed an approximate 6X lift in performance. We watched these tests running live and can in fact verify these results, at least for the servers in the rack that were being tested in front of us.
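To make the INT8 point a little more concrete, here is a minimal pure-Python sketch of the arithmetic that VNNI-style INT8 inference performs: floating-point values are quantized to 8-bit integers with a per-tensor scale, multiplied, accumulated as integers, and then rescaled back to floats. The function names and the symmetric per-tensor quantization scheme are our own illustrative assumptions; frameworks like TensorFlow use more elaborate schemes, and AMX performs this multiply-accumulate on whole tiles in hardware rather than element by element.

```python
def quantize(values, scale):
    """Symmetric per-tensor quantization of floats to signed INT8 (assumed scheme)."""
    return [max(-128, min(127, round(v / scale))) for v in values]


def int8_matmul(a, b):
    """Multiply INT8 matrices a (m x k) and b (k x n), accumulating in integers.

    Python ints are unbounded; the hardware accumulates into 32-bit lanes.
    """
    m, k, n = len(a), len(b), len(b[0])
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
            for i in range(m)]


def quantized_matmul(a_fp, b_fp, scale_a, scale_b):
    """Approximate a_fp @ b_fp using INT8 inputs and integer accumulation."""
    qa = [quantize(row, scale_a) for row in a_fp]
    qb = [quantize(row, scale_b) for row in b_fp]
    acc = int8_matmul(qa, qb)
    # Dequantize: the product of the two scales maps integer sums back to floats.
    return [[v * scale_a * scale_b for v in row] for row in acc]


if __name__ == "__main__":
    a = [[0.5, -1.0], [0.25, 2.0]]
    b = [[1.0, 0.0], [0.5, -0.5]]
    # Scales chosen so the largest magnitude in each matrix maps to 127.
    print(quantized_matmul(a, b, 2.0 / 127, 1.0 / 127))
```

The result lands close to the exact FP32 product while every multiply runs on 8-bit operands, which is why INT8 paths can process far more images per second than FP32.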

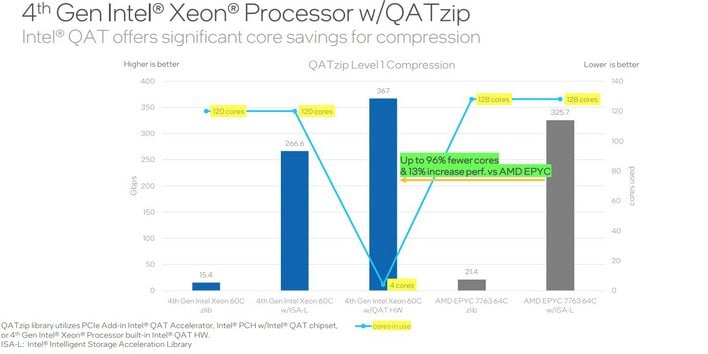

QATzip Level 1 Compression Acceleration Benchmarks

QATzip is a user space library that can leverage Intel QuickAssist Technology to accelerate file compression and decompression services by offloading the actual compression and decompression requests to the dedicated accelerator in Intel’s 4th Gen Xeon Scalable processors.

At first glance, the accelerated QATzip compression workload appears to be only marginally faster than the AMD EPYC configuration utilizing 120 cores with ISA-L (Intelligent Storage Acceleration Library). But what the numbers show is that when utilizing Intel QuickAssist Technology (QAT), the workload is largely offloaded from the CPU cores. Only 4 cores and the QAT accelerator are utilized in the fastest 4th Gen Xeon Scalable configuration, freeing up 116 of the CPU cores for other tasks and VM availability.
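For a sense of what is being offloaded, the sketch below is a software-only stand-in: it compresses a buffer at level 1 with Python’s standard zlib (deflate) module, checks the round trip, and reports the compression ratio. It does not use QAT or QATzip at all; the assumption is simply that a level-1 deflate pass like this is the kind of per-request CPU work QATzip moves onto the accelerator. The sample buffer is made up for illustration, and the core arithmetic restates the figures above.

```python
import zlib

# Node 1 has two 60-core Xeons; the fastest QAT run used only 4 cores.
XEON_CORES = 2 * 60
QAT_RUN_CORES = 4
FREED_CORES = XEON_CORES - QAT_RUN_CORES  # the 116 cores cited above


def compress_level1(data: bytes) -> bytes:
    # Level 1 favors speed over ratio, like the "Level 1" setting in the chart.
    return zlib.compress(data, level=1)


def round_trip_ratio(data: bytes) -> float:
    """Compress, verify decompression restores the input, return the ratio."""
    compressed = compress_level1(data)
    if zlib.decompress(compressed) != data:
        raise ValueError("round trip failed")
    return len(data) / len(compressed)


if __name__ == "__main__":
    sample = b"sensor=42;status=OK;" * 5000  # hypothetical, highly repetitive data
    print(f"ratio: {round_trip_ratio(sample):.1f}x, cores freed: {FREED_CORES}")
```

On a CPU-only system every one of these calls burns core time; with QAT, the same requests are handed to the accelerator instead.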

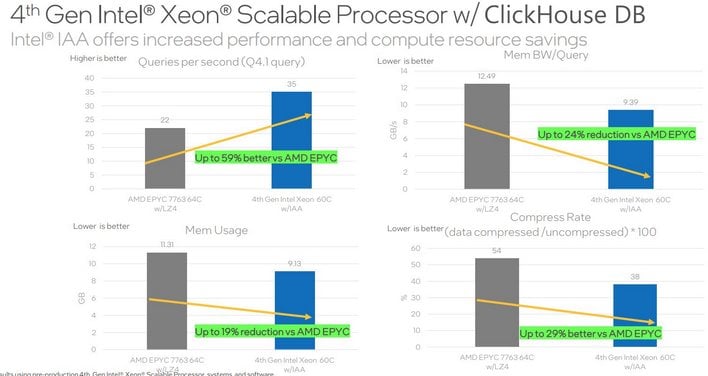

ClickHouse Big Data Analytics Benchmarks On Sapphire Rapids

ClickHouse is an open-source column-oriented database management system for online analytical processing (OLAP). It can run on bare metal, in the cloud (AWS, AliCloud, Azure, etc.), or containerized in Kubernetes. It’s linearly scalable and can be scaled up to store and process huge amounts of data. These results were generated using the Star Schema Benchmark, focused on Query Q4.1, which has the highest CPU utilization…

There are several results represented here, showing the 60-core 4th Gen Xeon Scalable system utilizing IAA (In-Memory Analytics Accelerator) outperforming the 64-core EPYC configuration in terms of queries per second and compression rate. The 4th Gen Xeon Scalable system also used less memory and less memory bandwidth during the benchmark, freeing up those resources for other tasks.

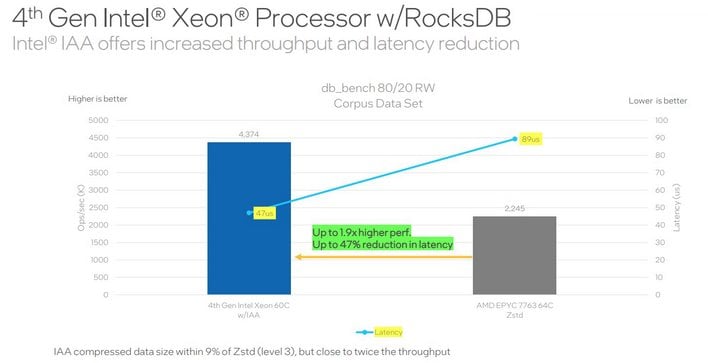

Intel 4th Gen Xeon IAA Accelerating RocksDB

RocksDB is an embedded persistent key-value store used as the storage engine in many popular databases (MySQL, MariaDB, MongoDB, Redis, etc.). It is also used in Facebook’s hosting environment, for example. For these benchmarks, RocksDB was modified to support compressors as plugins. Although not publicly available just yet, the code will be upstreamed to the RocksDB project…

In this benchmark, the 60-core 4th Gen Xeon Scalable configuration utilizing IAA nearly doubles the performance of the 64-core EPYC system, while simultaneously offering much lower latency.
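Intel’s modified RocksDB code isn’t public yet, so the Python sketch below only illustrates the general idea of pluggable compressors: a registry maps a name to a compress/decompress pair, and the storage layer looks the compressor up by name, so a hardware-backed implementation (an IAA plugin, say) could be registered alongside or in place of a software one. `Compressor`, `register`, `write_block`, and `read_block` are hypothetical names, not RocksDB’s actual API; only the zlib software compressor is real.

```python
import zlib
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class Compressor:
    """Hypothetical plugin interface: a named compress/decompress pair."""
    name: str
    compress: Callable[[bytes], bytes]
    decompress: Callable[[bytes], bytes]


_REGISTRY: Dict[str, Compressor] = {}


def register(compressor: Compressor) -> None:
    """Make a compressor available to the storage layer under its name."""
    _REGISTRY[compressor.name] = compressor


def get_compressor(name: str) -> Compressor:
    # Callers depend only on the interface, so a hardware-backed plugin
    # registered under its own name needs no changes to this code.
    return _REGISTRY[name]


# Software baseline built on the standard library's deflate implementation.
register(Compressor("zlib", zlib.compress, zlib.decompress))


def write_block(value: bytes, compressor_name: str) -> bytes:
    """Compress a value before it is written to a (simulated) data block."""
    return get_compressor(compressor_name).compress(value)


def read_block(stored: bytes, compressor_name: str) -> bytes:
    """Decompress a (simulated) stored block with the named compressor."""
    return get_compressor(compressor_name).decompress(stored)
```

The design choice being illustrated is the indirection: the database code never calls a specific compression library directly, which is what lets an accelerator be slotted in without touching the read and write paths.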

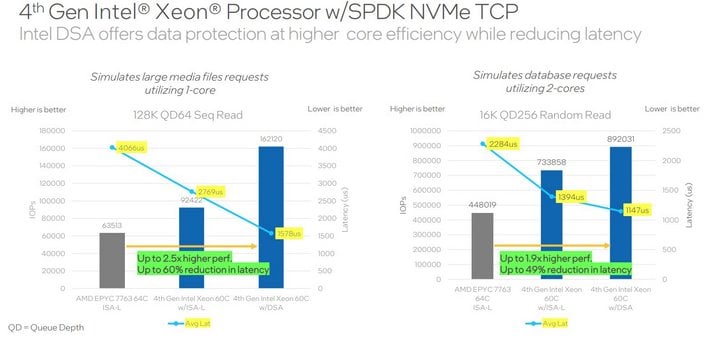

SPDK NVMe/TCP Storage Performance With Data Protection

This next benchmark illustrates error protection of NVMe/TCP data at 200Gbps. For this test, FIO submits I/O requests to an SPDK (Storage Performance Development Kit) NVMe/TCP target. The target reads data from NVMe SSDs and uses DSA (Data Streaming Accelerator) or ISA-L on the Intel processor, and ISA-L on the AMD processor, to calculate the CRC32C data digest. Then the target sends the I/O and CRC32C data digest to FIO…

Whether utilizing a single core with 128K sequential reads (QD64) or two cores with 16K random reads (QD256), the Intel 4th Gen Xeon server offers significantly higher throughput at much lower latencies than the EPYC server.
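The digest in this test is CRC32C, the Castagnoli variant of CRC-32 that NVMe/TCP uses for its optional header and data digests. DSA and ISA-L compute it with hardware or heavily vectorized code; the bitwise Python version below is only a slow reference sketch of what is being computed (reflected polynomial 0x82F63B78, with an initial value and final XOR of 0xFFFFFFFF). The function name and the optional running-CRC parameter are our own choices.

```python
def crc32c(data: bytes, crc: int = 0) -> int:
    """Bitwise CRC-32C (Castagnoli), reflected polynomial 0x82F63B78.

    Pass a previous result as `crc` to continue a digest across buffers.
    """
    crc ^= 0xFFFFFFFF  # undo the final XOR of the previous call (or init to all ones)
    for byte in data:
        crc ^= byte
        for _ in range(8):
            # Shift right one bit, folding in the polynomial when the low bit is set.
            if crc & 1:
                crc = (crc >> 1) ^ 0x82F63B78
            else:
                crc >>= 1
    return crc ^ 0xFFFFFFFF


if __name__ == "__main__":
    # Standard CRC-32C check value for the ASCII string "123456789".
    print(hex(crc32c(b"123456789")))
```

Running this loop per byte on every 16K or 128K read is exactly the kind of per-I/O work that benefits from being handed to DSA or to ISA-L’s optimized routines.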

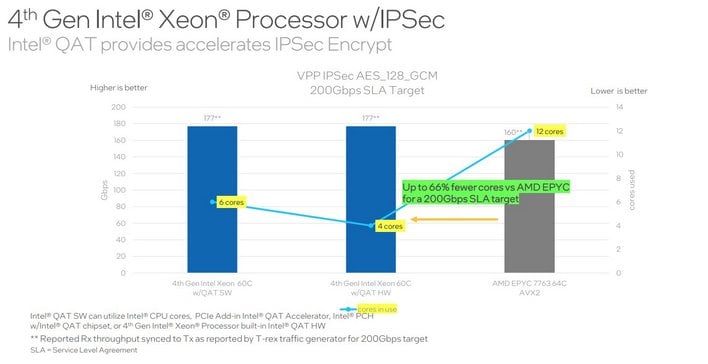

Intel 4th Gen Xeon Scalable IPsec Encryption Benchmarks

The DPDK IPsec-GW (security gateway) benchmark measures how much traffic the server can process per second using the IPsec protocol. Encryption is handled in software using the Intel Multi-Buffer Crypto for IPsec library or offloaded to the Intel QAT accelerator. The Intel IPsec library implements optimized encryption/decryption operations using AES or VAES instructions, but note that VAES instructions can’t be utilized on the AMD system because AVX-512 isn’t available on 3rd Gen EPYC processors…

At first glance, the bars in this chart don’t show big disparities between the different configurations. However, the Xeon system is able to achieve significantly higher performance while utilizing far fewer cores.

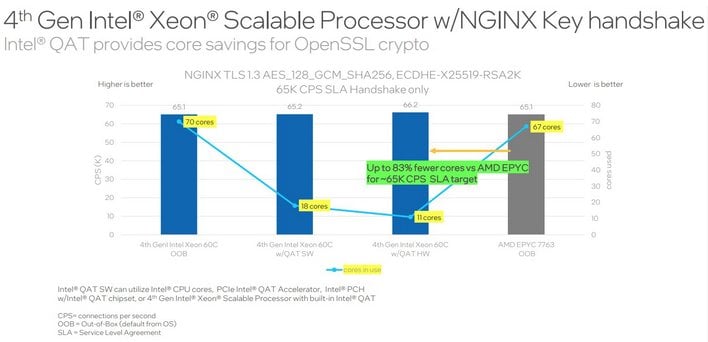

Intel Xeon NGINX Key Handshake OpenSSL Crypto Acceleration Tests

This NGINX TLS Key Handshake benchmark measures encrypted web server connections per second. No packets are requested by the clients, so only a TLS handshake is completed. The benchmark stresses the server’s compute, memory, and IO resources…

Once again the testing shows the 4th Gen Xeon Scalable system offering comparable performance to the EPYC system, but when utilizing Intel QAT (SW or HW), the Xeon configuration is able to achieve that performance while taxing far fewer CPU cores.

All of these benchmarks were devised by Intel to clearly demonstrate the potential performance and efficiency benefits of the various accelerators available in its upcoming 4th Gen Xeon Scalable processors, otherwise known as Sapphire Rapids. The tests were run under controlled conditions, and many of the details regarding the 4th Gen Xeon Scalable processors employed in the test servers were not divulged. With all of that in mind, we obviously have to temper our analysis of these numbers, but assuming everything shown holds up in the real world when we do get to independently verify these results, they certainly bode very well for Intel.

AMD will retain a per-socket CPU core density advantage with its EPYC processors, but if these dedicated accelerators in 4th Gen Xeon Scalable processors negate that advantage and free up Intel CPU core resources for other tasks while accelerating these common workloads, this new Intel Data Center Group product offering could have major implications for tomorrow’s data centers.