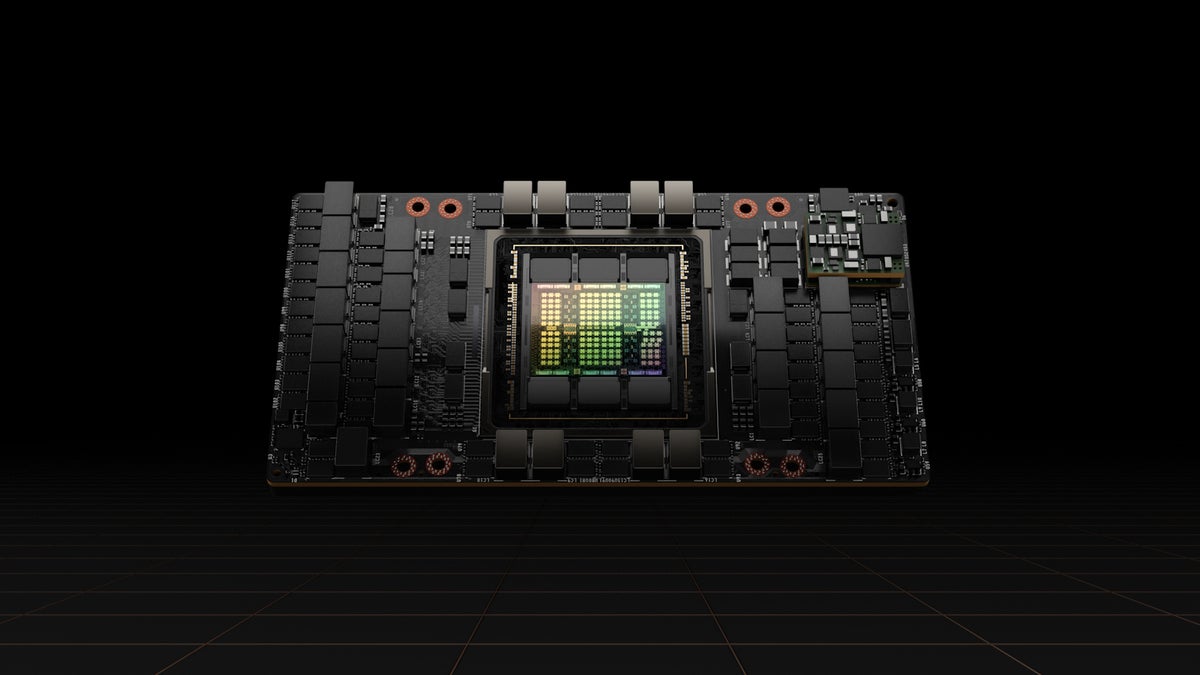

Nvidia kicked off its second GTC conference of the year with news that its H100 “Hopper” generation of GPUs is in full production, with global partners planning to roll out services in October and wide availability in the first quarter of 2023.

Hopper features a number of innovations over Ampere, its predecessor architecture introduced in 2020. Most significant is the new Transformer Engine. Transformers are widely used deep learning models and the standard model of choice for natural language processing. Nvidia claims the H100 Transformer Engine can speed up neural networks by as much as six-fold over Ampere without losing accuracy.

Hopper also comes with the second generation of Nvidia’s Secure Multi-Instance GPU (MIG) technology, allowing a single GPU to be divided into several secured partitions that operate independently and in isolation.

Also new is a function called confidential computing, which protects AI models and customer data while they are being processed, in addition to protecting them when at rest and in transit over the network. Finally, Hopper has the fourth-generation NVLink, Nvidia’s high-speed interconnect technology that can connect up to 256 H100 GPUs at nine times higher bandwidth than the previous generation.

And while GPUs are not known for power efficiency, Nvidia says the H100 enables companies to deliver the same AI performance with 3.5x greater energy efficiency and 3x lower total cost of ownership than the prior generation, because enterprises need 5x fewer server nodes.

“Our customers are looking to deploy data centers that are basically AI factories, producing AIs for production use cases. And we’re very excited to see what H100 is going to be doing for those customers, delivering more throughput, more capabilities and [continuing] to democratize AI everywhere,” said Ian Buck, vice president of hyperscale and HPC at Nvidia, on a media call with journalists.

Buck, who invented the CUDA language used to program Nvidia GPUs for HPC and other uses, said large language models (LLMs) will be one of the most important AI use cases for the H100.

Language models are tools trained to predict the next word in a sentence, such as autocomplete on a phone or browser. LLMs, as the name implies, can predict entire sentences and do much more, such as write essays, create charts, and generate computer code.
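To make the "predict the next word" idea concrete, here is a minimal sketch of a toy autocomplete in Python. It is only an illustration under simple assumptions: it counts which word most often follows another in a tiny made-up sentence, whereas LLMs use transformer neural networks trained on vast amounts of text. The function names `train_bigram` and `predict_next` and the sample corpus are hypothetical and are not drawn from any Nvidia software.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Made-up training sentence, for illustration only.
corpus = "the gpu trains the model and the model predicts the next word"
model = train_bigram(corpus)

print(predict_next(model, "the"))   # "model" follows "the" most often in this corpus
print(predict_next(model, "next"))  # the only word seen after "next" is "word"
```

A model this small can only repeat word pairs it has already seen, which is why it cannot write essays or code the way an LLM can.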

“We see large language models being used for things outside of human language like coding, and helping software developers write software faster, more efficiently with fewer errors,” said Buck.

H100-powered systems from hardware makers are expected to ship in the coming weeks, with more than 50 server models available by the end of the year and dozens more in the first half of 2023. Partners include Atos, Cisco, Dell, Fujitsu, Gigabyte, HPE, Lenovo and Supermicro.

Additionally, Amazon Web Services, Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure say they will be among the first to deploy H100-based instances in the cloud starting next year.

If you want to give the H100 a test drive, it will be available through Nvidia’s Launchpad, its try-before-you-buy service where users can log in and test Nvidia hardware.

Copyright © 2022 IDG Communications, Inc.