Deploy an Ingress Controller on AWS EKS the Right Way

The feature image highlights the importance of orchestration at scale, which is the promise of Kubernetes and one of the many reasons for choosing it!

The guidance in this article is for readers with considerable knowledge of Kubernetes, Helm, and AWS cloud solutions. Also, keep in mind that the approach presented here is my attempt at setting up a Kubernetes cluster for my company, and you may decide to deviate at any time to suit your use case or preference.

With that out of the way, let’s jump right into it.

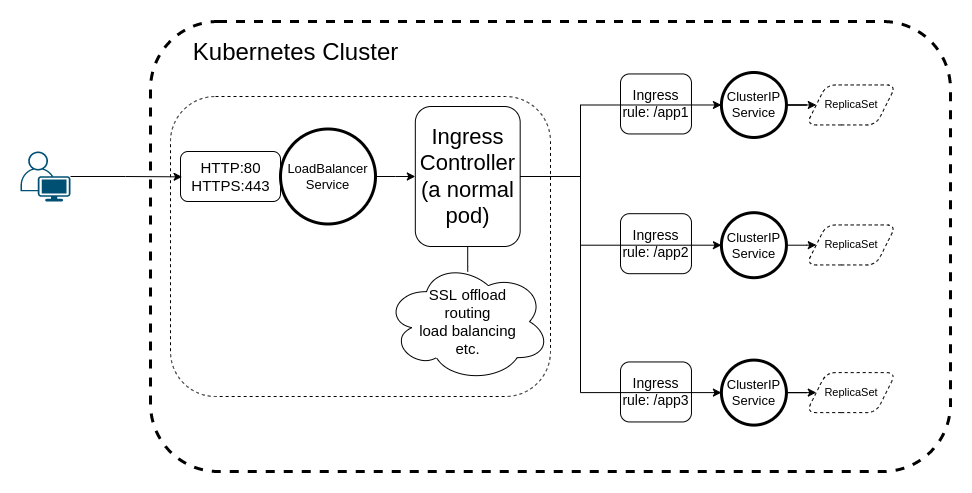

Like other “controllers” in Kubernetes, which are responsible for bringing the current state to the desired state, the Ingress Controller is responsible for creating an entry point to the cluster so that Ingress rules can route traffic to the defined Services.

Simply put, when you send network traffic to port 80 or 443, the Ingress Controller determines the destination of that traffic based on the Ingress rules.
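To make that routing decision concrete, here is a deliberately simplified Python model of how a controller could match a request’s host and path against Ingress rules. It follows the Kubernetes matching rules for the Exact and Prefix path types: the longest matching path wins, and Exact beats Prefix on a tie. The tuple-based rule format and the function names are hypothetical. Real controllers handle far more, including wildcard hosts, default backends, and ImplementationSpecific paths.

```python
def _segments(path):
    # "/foo/bar/" -> ["foo", "bar"]; "/" -> []
    return [s for s in path.split("/") if s]


def path_matches(rule_path, path_type, request_path):
    if path_type == "Exact":
        return rule_path == request_path
    if path_type == "Prefix":
        rule_segs = _segments(rule_path)
        # Compare "/"-separated elements, so "/foo" matches "/foo/bar"
        # but not "/foobar".
        return _segments(request_path)[: len(rule_segs)] == rule_segs
    raise ValueError(f"unsupported pathType: {path_type}")


def route(rules, host, request_path):
    """Pick (service, port) for a request.

    `rules` is a list of hypothetical (host, path, path_type, service, port)
    tuples. Returns None when no rule matches.
    """
    candidates = [
        r for r in rules
        if r[0] == host and path_matches(r[1], r[2], request_path)
    ]
    if not candidates:
        return None  # a real controller would fall back to a default backend
    # Longest matching path wins; on a tie, Exact beats Prefix.
    best = max(candidates, key=lambda r: (len(r[1]), r[2] == "Exact"))
    return best[3], best[4]
```

Suppose one rule sends example.com/ to an nginx Service and another sends example.com/api to an api Service. A request for /api/users then lands on api, while /apix falls through to nginx, because Prefix matching works per path segment and not per character.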

You must have an Ingress controller to satisfy an Ingress. Creating an Ingress resource on its own has no effect [source].

For a practical example, suppose you define an Ingress rule that routes all traffic coming to the example.com host with a prefix of /. This rule will have no effect unless you have an Ingress Controller that can identify the traffic’s destination and route it according to the defined Ingress rule.
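Expressed as a manifest, that example.com rule looks roughly like the following. The sketch builds it as a Python dict in the networking.k8s.io/v1 format and prints it as JSON, which kubectl accepts just as it accepts YAML. The Service name nginx, port 80, and IngressClass name alb are placeholders.

```python
import json


def example_ingress(host="example.com", service="nginx", port=80, ingress_class="alb"):
    # Route every request for `host` whose path starts with "/" to `service`.
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": f"{service}-ingress"},
        "spec": {
            "ingressClassName": ingress_class,
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": service, "port": {"number": port}}},
                }]},
            }],
        },
    }


if __name__ == "__main__":
    # e.g. python ingress.py | kubectl apply -f -
    print(json.dumps(example_ingress(), indent=2))
```

Applying this output with kubectl creates the Ingress object. As noted above, though, nothing routes traffic for it until an Ingress Controller is running.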

Now, let’s see how Ingress Controllers work outside AWS.

All the other Ingress Controllers I’m aware of work this way: you expose the controller as a LoadBalancer Service, which becomes the frontier responsible for handling traffic (SSL offload, routing, etc.).

As a result, once the LoadBalancer Service is created, you’ll have the following in your cluster:

Here’s a command to verify that your LoadBalancer Service is in place.

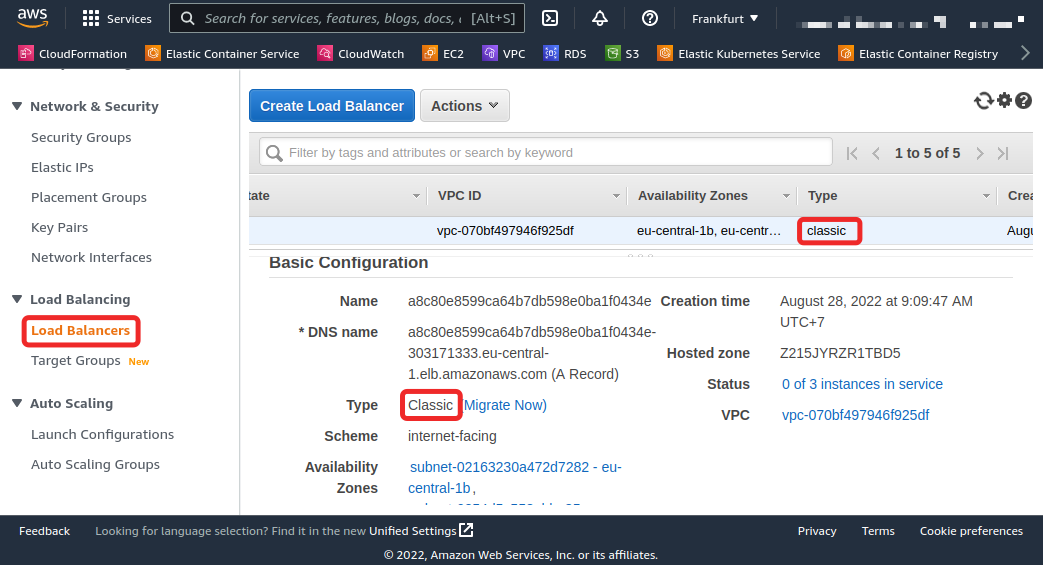
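If you’d rather script that check, the following Python sketch does roughly what kubectl get svc -A does, filtered down to LoadBalancer Services. It assumes kubectl is on your PATH and pointed at the right cluster. The function names are my own.

```python
import json
import subprocess


def loadbalancer_services(svc_list):
    """Return (namespace, name, address) for every LoadBalancer Service.

    `svc_list` is the parsed output of `kubectl get svc -A -o json`.
    The address is None while the cloud provider is still provisioning.
    """
    result = []
    for svc in svc_list.get("items", []):
        if svc.get("spec", {}).get("type") != "LoadBalancer":
            continue
        lb_ingress = svc.get("status", {}).get("loadBalancer", {}).get("ingress") or []
        address = None
        if lb_ingress:
            # AWS load balancers report a hostname; other clouds often report an IP.
            address = lb_ingress[0].get("hostname") or lb_ingress[0].get("ip")
        meta = svc["metadata"]
        result.append((meta["namespace"], meta["name"], address))
    return result


def fetch_services():
    # Shell out to kubectl and parse its JSON output.
    out = subprocess.run(
        ["kubectl", "get", "svc", "--all-namespaces", "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return json.loads(out)
```

Call loadbalancer_services(fetch_services()) and print the tuples. An address of None means the load balancer hasn’t been provisioned yet.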

A LoadBalancer Service might be ideal on other cloud providers, but not on AWS, for one key reason. Once you create that Service, head over to the Load Balancers tab of your EC2 Console. You’ll see that the load balancer is of type “classic” (AWS calls it a “previous generation” load balancer), which you should migrate away from for the reason described below.

AWS retired EC2-Classic networking on August 15, 2022, and treats the Classic Load Balancer as a previous-generation product, so you shouldn’t be using it on the AWS platform. For that reason, avoid exposing Services as type LoadBalancer: by default, any Service of that type results in a “classic” load balancer. AWS recommends one of the newer generations of load balancers (Application, Network, or Gateway) instead.

Avoiding a “classic” load balancer is the main reason we’re choosing the alternative solution supported by AWS. It still lets us leverage our Kubernetes workloads.

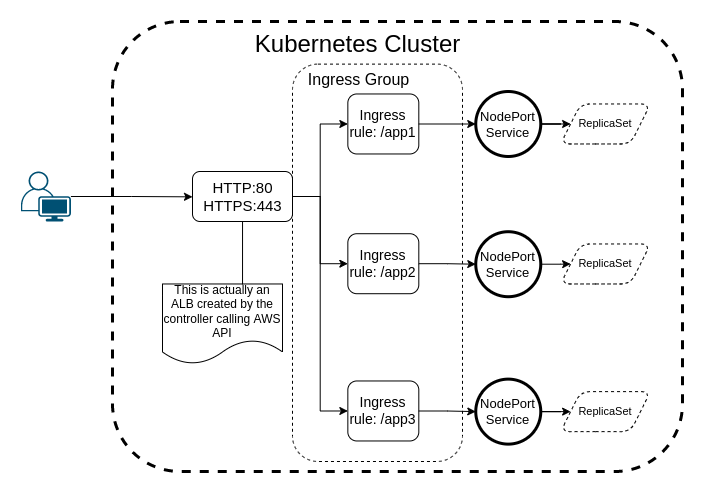

I was surprised when I first saw that, unlike other Ingress controllers (HAProxy, Nginx, etc.), the AWS Load Balancer Controller doesn’t expose a LoadBalancer Service inside the Kubernetes cluster. The Ingress controller is one of the important topics on the CKA exam [source].

However, just because AWS provides poor support for other ingress controllers doesn’t mean you want to give up Kubernetes’ rich features, jump straight into vendor-specific products, and become vendor-locked-in. At least, I know I don’t.

My personal preference is a managed Kubernetes control plane with my own self-managed nodes, but of course, you’re free to choose your own design here!

The alternative is to use the AWS ingress controller (the AWS Load Balancer Controller). It lets you keep the same workloads and resources you’d have if you ran Kubernetes elsewhere, with only a tiny configuration difference, explained below.

Inside AWS EKS, the ingress controller works by “grouping” Ingress resources under a single name, which makes them accessible and routable from a single AWS Application Load Balancer. You specify the “grouping” either through IngressClassParams or through annotations on every Ingress you create in your cluster. An Ingress that belongs to no group gets an ALB of its own. I don’t know about you, but I prefer the former!
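Here is a minimal sketch of the IngressClassParams approach, built as Python dicts for the same JSON-to-kubectl workflow. It assumes the AWS Load Balancer Controller and its CRDs are already installed. The class name alb and the group name my-company-alb are hypothetical. The group_annotation helper shows the per-Ingress alternative, which uses the alb.ingress.kubernetes.io/group.name annotation.

```python
import json

GROUP = "my-company-alb"  # hypothetical group name


def ingress_class_with_group(class_name="alb", group=GROUP):
    # Cluster-scoped parameters object carrying the group name.
    params = {
        "apiVersion": "elbv2.k8s.aws/v1beta1",
        "kind": "IngressClassParams",
        "metadata": {"name": f"{class_name}-params"},
        "spec": {"group": {"name": group}},
    }
    # IngressClass handled by the AWS Load Balancer Controller, pointing at the params.
    ingress_class = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "IngressClass",
        "metadata": {"name": class_name},
        "spec": {
            "controller": "ingress.k8s.aws/alb",
            "parameters": {
                "apiGroup": "elbv2.k8s.aws",
                "kind": "IngressClassParams",
                "name": params["metadata"]["name"],
            },
        },
    }
    return [params, ingress_class]


def group_annotation(group=GROUP):
    # Per-Ingress alternative: merge this into each Ingress's metadata.annotations.
    return {"alb.ingress.kubernetes.io/group.name": group}


if __name__ == "__main__":
    manifest = {"apiVersion": "v1", "kind": "List", "items": ingress_class_with_group()}
    print(json.dumps(manifest, indent=2))
```

With the class-level approach, every Ingress that sets ingressClassName to alb joins the group without carrying any grouping annotation itself.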

The “Ingress Group” is not a tangible object, and it has no special features. It’s just a set of Ingress resources annotated the same way. (As mentioned before, you can skip annotating each Ingress by setting the group name in the IngressClassParams that your IngressClass references.) These Ingress resources share a group name and can be treated as a unit, sharing the same ALB as the boundary for handling traffic, routing, etc.
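To illustrate the idea, the following Python sketch models which group each Ingress ends up in, and therefore which ALB it uses. This is a simplified mental model, not the controller’s code. The precedence it assumes is my reading of the controller documentation: a group set in IngressClassParams comes first, then the group.name annotation, and otherwise the Ingress forms an implicit group on its own. Verify this against the controller version you run.

```python
from collections import defaultdict

GROUP_ANNOTATION = "alb.ingress.kubernetes.io/group.name"


def group_key(ingress, class_groups):
    """Return the (assumed) IngressGroup an Ingress belongs to.

    `class_groups` maps an IngressClass name to the group name set in its
    IngressClassParams; classes without a group are simply absent.
    """
    meta = ingress["metadata"]
    cls = ingress.get("spec", {}).get("ingressClassName")
    if cls in class_groups:
        return class_groups[cls]
    annotated = meta.get("annotations", {}).get(GROUP_ANNOTATION)
    if annotated:
        return annotated
    # No group at all: the Ingress gets an implicit group (and ALB) of its own.
    return f"implicit:{meta.get('namespace', 'default')}/{meta['name']}"


def albs(ingresses, class_groups):
    # One ALB per group key; each entry lists the Ingresses sharing it.
    groups = defaultdict(list)
    for ing in ingresses:
        groups[group_key(ing, class_groups)].append(ing["metadata"]["name"])
    return dict(groups)
```

Feeding it a handful of Ingresses shows the point of grouping. Two Ingresses using a grouped class collapse onto one ALB, while an ungrouped Ingress gets a load balancer of its own.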

This last point is the difference between Ingress resources inside and outside AWS. In the rest of the Kubernetes world you don’t have to deal with these groupings, but if you’re using AWS EKS, this approach is the way to go!

I’m comfortable implementing this and managing the configuration, especially since Nginx, HAProxy, and other ingress controllers all have their own sets of annotations for tuning and configuration. For example, to rewrite the path in the Nginx Ingress Controller, you’d use the nginx.ingress.kubernetes.io/rewrite-target annotation [source].

One last important note: with the default instance target type, your Services are no longer ClusterIPs but NodePorts. A NodePort publishes the Service on every worker node in the cluster, and if the pod isn’t on that node, iptables redirects the traffic [source]. You’ll be responsible for managing Security Groups to ensure nodes can communicate on the ephemeral port range [source]. However, if you use eksctl to create your cluster, it takes care of all this and much more.
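Here is a short Python sketch of such a NodePort Service, plus a helper that spells out where the Service becomes reachable. It assumes the cluster uses Kubernetes’ default NodePort range of 30000-32767. The Service name, labels, and node IPs are placeholders.

```python
NODEPORT_RANGE = range(30000, 32768)  # Kubernetes default service-node-port-range


def nodeport_service(name, app_label, port, target_port, node_port=None):
    if node_port is not None and node_port not in NODEPORT_RANGE:
        raise ValueError(f"nodePort {node_port} is outside the default 30000-32767 range")
    port_spec = {"port": port, "targetPort": target_port, "protocol": "TCP"}
    if node_port is not None:
        port_spec["nodePort"] = node_port  # otherwise Kubernetes allocates one
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "NodePort",
            "selector": {"app": app_label},
            "ports": [port_spec],
        },
    }


def reachable_endpoints(node_ips, node_port):
    # Every worker node listens on the NodePort. kube-proxy forwards the traffic
    # to a matching pod even when that pod runs on a different node.
    return [f"{ip}:{node_port}" for ip in node_ips]
```

These node_ip:node_port pairs are what an instance-mode Target Group ends up pointing at. This is also why the Security Groups must allow that traffic between the load balancer and the nodes.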

Now, let’s jump into deploying the controller so we can create some Ingress resources.

First, you need to install the controller in the cluster for any of your Ingress resources to work.

This “controller” is the component responsible for talking to AWS’s API to create your load balancers and Target Groups.

1. To install the controller, you can use Helm or apply it directly with kubectl.

NOTE: It’s always a best practice to pin your installations to a specific version and upgrade only after testing and QA.

2. The second step is installing the controller itself, which is again possible with Helm. I’m providing my custom values, so you can see both the installation and values files below.
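In the spirit of the NOTE about pinning versions, here is a Python sketch that assembles and runs the Helm commands with a mandatory chart version. It uses the public eks-charts repository (https://aws.github.io/eks-charts) and its aws-load-balancer-controller chart, and passes clusterName, which the chart requires. Everything else, including the service account and IAM settings, is assumed to live in your own values file. The helper names are hypothetical.

```python
import shlex
import subprocess

CHART_REPO = "https://aws.github.io/eks-charts"
CHART = "eks/aws-load-balancer-controller"


def helm_install_cmd(cluster_name, chart_version, values_file=None, namespace="kube-system"):
    # Refuse unpinned installs: upgrade only after test & QA.
    if not chart_version:
        raise ValueError("pin a chart version before installing")
    cmd = [
        "helm", "upgrade", "--install", "aws-load-balancer-controller", CHART,
        "--namespace", namespace,
        "--version", chart_version,
        "--set", f"clusterName={cluster_name}",
    ]
    if values_file:
        cmd += ["--values", values_file]
    return cmd


def install(cluster_name, chart_version, values_file=None, dry_run=True):
    # Register the chart repository, refresh it, then run the pinned install.
    subprocess.run(["helm", "repo", "add", "eks", CHART_REPO], check=True)
    subprocess.run(["helm", "repo", "update"], check=True)
    cmd = helm_install_cmd(cluster_name, chart_version, values_file)
    if dry_run:
        cmd.append("--dry-run")
    print(shlex.join(cmd))
    subprocess.run(cmd, check=True)
```

Calling install("my-cluster", "<your pinned chart version>", "values.yaml") renders a dry run first. Pass dry_run=False once the rendered output looks right. Using upgrade --install keeps the command idempotent, so the same script works for both the first install and later version bumps.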

Now that the controller installation is out of the way, let’s create an Ingress resource that will receive traffic from the world wide web!

NOTE: When the controller is first installed, no LoadBalancer Service is created, nor an AWS load balancer of any kind. The load balancer is only created when the first Ingress resource is created.
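Because the ALB only appears after the first Ingress exists, a deployment script may need to wait for it. This Python sketch polls kubectl get ingress until status.loadBalancer.ingress carries a hostname. The timeout and polling interval are arbitrary defaults, and kubectl is assumed to be configured for the cluster.

```python
import json
import subprocess
import time


def alb_hostname(ingress):
    """Hostname of the ALB backing an Ingress, or None if not provisioned yet."""
    lb_ingress = ingress.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    return lb_ingress[0].get("hostname") if lb_ingress else None


def wait_for_alb(name, namespace="default", timeout_s=300, poll_s=10):
    # Poll the Ingress until the controller writes the ALB address into its status.
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        out = subprocess.run(
            ["kubectl", "get", "ingress", name, "-n", namespace, "-o", "json"],
            check=True, capture_output=True, text=True,
        ).stdout
        host = alb_hostname(json.loads(out))
        if host:
            return host
        time.sleep(poll_s)
    raise TimeoutError(f"no ALB address on ingress {namespace}/{name} after {timeout_s}s")
```

The returned hostname is the ALB’s DNS name, which you can then point your domain at, for example with a CNAME or a Route 53 alias record.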

Alright, alright! With all of the above steps completed, here is the complete Nginx stack.
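For reference, here is a minimal sketch of a comparable stack (not my exact manifests): a Deployment, a NodePort Service, and an Ingress with a few AWS Load Balancer Controller annotations. It is generated as Python dicts and printed as a JSON List that kubectl apply -f - accepts. The image tag, the host example.com, and the IngressClass name alb are assumptions.

```python
import json

APP, HOST = "nginx", "example.com"  # hypothetical names


def nginx_stack(image="nginx:1.25", replicas=2):
    labels = {"app": APP}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": APP},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{
                    "name": APP, "image": image,
                    "ports": [{"containerPort": 80}],
                }]},
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": APP},
        # NodePort because the Ingress below uses target-type "instance".
        "spec": {"type": "NodePort", "selector": labels,
                 "ports": [{"port": 80, "targetPort": 80}]},
    }
    ingress = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": APP,
            "annotations": {
                "alb.ingress.kubernetes.io/scheme": "internet-facing",
                "alb.ingress.kubernetes.io/target-type": "instance",
                "alb.ingress.kubernetes.io/listen-ports": '[{"HTTP": 80}, {"HTTPS": 443}]',
                "alb.ingress.kubernetes.io/healthcheck-path": "/",
            },
        },
        "spec": {
            "ingressClassName": "alb",
            # No secretName: the certificate for these hosts is looked up in ACM.
            "tls": [{"hosts": [HOST]}],
            "rules": [{"host": HOST, "http": {"paths": [{
                "path": "/", "pathType": "Prefix",
                "backend": {"service": {"name": APP, "port": {"number": 80}}},
            }]}}],
        },
    }
    return [deployment, service, ingress]


if __name__ == "__main__":
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": nginx_stack()}, indent=2))
```

Leaving secretName out of the tls section is deliberate. The ALB takes its certificate from ACM rather than from a Kubernetes Secret.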

A few notes are worth mentioning here:

- If your target-type is instance, you must have a Service of type NodePort, and the load balancer sends the traffic to the instance’s exposed NodePort. If you’d rather send the traffic directly to your pods (target-type ip), your VPC CNI must support that [source].
- The AWS Load Balancer Controller uses the annotations you see on the Ingress resource to set up the Target Group, Listener Rules, Certificates, etc. You can specify, among many other things, the health checks (performed by Target Groups) and the priority of the load balancer listener rule. Read the reference for a complete overview of what’s possible.
- The ALB picks up the certificate from ACM based on the configuration defined under the tls section. All you need to do is ensure those certificates are present and not expired.
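These rules are easy to trip over, so a small pre-flight check can help. The Python sketch below flags Ingress backends that aren’t NodePort Services when the target type is instance, which is the controller’s default. It also lists the hosts under tls that need a valid ACM certificate. The helper names and input shapes are hypothetical, and the sketch talks to neither AWS nor the cluster.

```python
TARGET_TYPE = "alb.ingress.kubernetes.io/target-type"


def backend_problems(ingress, services):
    """List backend issues for one Ingress.

    `services` maps Service name -> Service manifest (same namespace assumed).
    """
    problems = []
    annotations = ingress["metadata"].get("annotations", {})
    # "instance" is the controller's default when the annotation is absent.
    target_type = annotations.get(TARGET_TYPE, "instance")
    for rule in ingress["spec"].get("rules", []):
        for path in rule.get("http", {}).get("paths", []):
            name = path["backend"]["service"]["name"]
            svc = services.get(name)
            if svc is None:
                problems.append(f"Service {name!r} not found")
            elif target_type == "instance" and svc["spec"].get("type") != "NodePort":
                problems.append(
                    f"Service {name!r} must be NodePort for target-type instance"
                )
    return problems


def acm_hosts(ingress):
    """Hosts listed under spec.tls, i.e. the names ACM needs a valid cert for."""
    hosts = set()
    for entry in ingress["spec"].get("tls", []):
        hosts.update(entry.get("hosts", []))
    return sorted(hosts)
```

Running both checks in CI before kubectl apply catches a ClusterIP backend or a forgotten certificate before the ALB starts returning errors.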

After applying the above definition, you’d get the following in your cluster:

Managing Kubernetes on a cloud platform such as AWS has its challenges. I believe the provider has a marketing reason for these challenges: they want you to buy as many of their products as possible, and a cloud-agnostic tool such as Kubernetes doesn’t help that cause much!

In this article, I explained how to set up the AWS Ingress Controller so you can create Ingress resources inside AWS EKS.

As a final suggestion, if you’re going with AWS EKS for your managed Kubernetes cluster, I highly recommend creating it with eksctl, since it makes your life much easier.

Have a wonderful rest of the day, stay tuned, and take care!