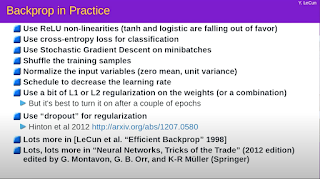

I’m collaborating in a weekly meetup with a TWIML (This Week in Machine Studying) group the place we undergo video lectures of the NYU (New York College) course Deep Studying (with Pytorch). Every week we cowl one of many lectures in an “inverted classroom” method — we watch the video ourselves earlier than attending, and one individual leads the dialogue, masking the details of the lecture and moderating the dialogue. Regardless that it begins from the fundamentals, I’ve discovered the discussions to be very insightful to this point. Subsequent week’s lecture is about Stochastic Gradient Descent and Backpropagation (Lecture 3), delivered by Yann LeCun. In direction of the top of the lecture, he lists out some methods for coaching neural networks effectively utilizing backpropagation.

To be truthful, none of those methods must be new data for people who’ve been coaching neural networks. Certainly, in Keras, most if not all these methods may be activated by setting a parameter someplace in your pipeline. Nevertheless, this was the primary time I had seen them listed down in a single place, and I figured that it will be fascinating to place them to the check on a easy community. That approach, one may examine the consequences of every of those methods, and extra importantly for me, educate me easy methods to do it utilizing Pytorch.

The community I selected to do that with is a CIFAR-10 classifier, applied as a 3 layer CNN (Convolutional Neural Community), an identical in construction to the one described in Tensorflow CNN Tutorial. The CIFAR-10 dataset is a dataset of round a thousand low decision (32, 32) RGB pictures. The great factor about CIFAR-10 is that it’s obtainable as a canned dataset by way of the torchvision package deal. We discover the situations listed beneath. In all circumstances, we prepare the community utilizing the coaching pictures, and validate on the finish of every epoch utilizing the check pictures. Lastly, we consider the educated community in every case utilizing the check pictures. We examine the educated community utilizing micro F1-scores (identical as accuracy) on the check set. All fashions had been educated utilizing the Adam optimizer, the primary two used a hard and fast studying fee of 1e-3, whereas the remainder used an preliminary studying fee of 2e-3 and an exponential decay of about 20% per epoch. All fashions had been educated for 10 epochs, with a batch dimension of 64.

- baseline — we incorporate among the recommendations within the slide, reminiscent of utilizing ReLU activation perform over tanh and logistic, utilizing the Cross Entropy loss perform (coupled with Log Softmax as the ultimate activation perform), doing Stochastic Gradient Descent on minibatches, and shuffling the coaching examples, within the baseline already, since they’re fairly primary and their usefulness just isn’t actually in query. We additionally use the Adam optimizer, primarily based on a remark by LeCun through the lecture to want adaptive optimizers over the unique SGD optimizer.

- norm_inputs — right here we discover the imply and normal deviation of the coaching set pictures, then scale the photographs in each coaching and check set by subtracting the imply and dividing by the usual deviation.

- lr_schedule — within the earlier two circumstances, we used a hard and fast studying fee of 1e-3. Whereas we’re already utilizing the Adam optimizer, which is able to give every weight its personal studying fee primarily based on the gradient, right here we additionally create an Exponential Studying Charge scheduler that exponentially decays the educational fee on the finish of every epoch. It is a built-in scheduler offered by Pytorch, amongst a number of different built-in schedulers.

- weight_decay — weight decay is healthier often known as L2 regularization. The concept is so as to add a fraction of the sum of the squared weights to the loss, and have the community decrease that. The web impact is to maintain the weights small and keep away from exploding the gradient. L2 regularization is offered to set straight because the weight_decay parameter within the optimizer. One other associated regularization technique is the L1 regularization, which makes use of absolutely the worth of the weights as a substitute of squared weights. It’s attainable to implement L1 regularization as properly utilizing code, however just isn’t straight supported (i.e., within the type of an optimizer parameter) as L2 regularization is.

- init_weights — this doesn’t seem within the checklist within the slides, however is referenced in LeCun’s Environment friendly Backprop paper (which is listed). Whereas by default, module weights are initialized to random values, some random values are higher than others for convergence. For ReLU activations, Kaimeng (or He) activtions are preferable, which is what we used (Kaimeng Uniform) in our experiment.

- dropout_dense — dropouts may be positioned after activation capabilities, and in our community, they are often positioned after the activation perform following a Linear (or Dense) module, or a convolutional module. Our first experiment locations a Dropout module with dropout likelihood 0.2 after the primary Linear module.

- dropout_conv — dropout modules with dropout likelihood 0.2 are positioned after every convolution module on this experiment.

- dropout_both — dropout modules with dropout likelihood 0.2 are positioned after each convolution and the primary linear module on this experiment.

The code for this train is accessible on the hyperlink beneath. It was run on Colab (Google Colaboratory) on a (free) GPU occasion. The Open in Colab button on the highest of the pocket book means that you can to run it your self if you want to discover the code.

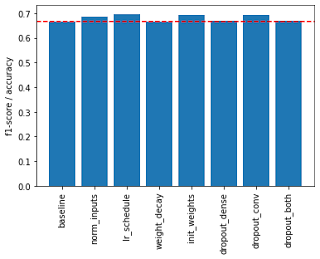

The pocket book evaluates and stories the accuracy, confusion matrix, and the classification report (with per class precision, recall, and F1-scores) for every mannequin listed above. As well as, the bar chart beneath compares the micro F1-scores throughout the totally different fashions. As you may see, normalizing (scaling) the inputs does end in higher efficiency, and one of the best outcomes are achieved utilizing the Studying Charge Schedule, Weight Initialization, and introducing Dropout for the Convolutional Layers.

That is principally all I had for immediately. The primary advantage of the train for me was discovering out easy methods to implement these methods in Pytorch. I hope you discover this handy as properly.