And what you can do about it

Meadowrun is the no-ceremony way to run your Python code in the cloud, by automating the boring stuff for you.

Meadowrun checks whether suitable EC2 machines are already running, and starts some if not. It packages up your code and environment, logs into the EC2 machine via SSH and runs your code. Finally, it gets the results back, along with logs and output.

This takes anywhere from a few seconds in the warmest of warm starts to a few minutes in the coldest of cold starts. For a warm start, a machine is already running, we already have a container built, and local code has been uploaded to S3. During a cold start, all these things need to happen before you get to see your Python code actually run.

Nobody likes waiting, so we were keen to reduce the wait time. This is the story of what we discovered along the way.

You don’t know what you don’t measure

At the risk of sounding like an advertisement for telemetry services, the first rule when you’re trying to optimize something is: measure where you’re spending time.

It sounds completely trivial, but if I had a dollar for every time I didn’t follow my own advice, I’d have... well, at least 10 dollars.

If you’re optimizing a process that runs locally and has just one thread, this is all pretty easy to do, and there are lots of tools for it:

- log timings

- attach a debugger and break every now and then

- profiling

Most people jump straight to profiling, but personally I like the hands-on approaches, especially when the total runtime of whatever you’re optimizing is long.
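For comparison, here is roughly what the “log timings” approach looks like when done by hand. This is a minimal sketch; `StepTimer` and its methods are hypothetical names, not part of Meadowrun or Eliot. It records nested steps in the order they start, so the report reads like a small tree.

```python
import time
from contextlib import contextmanager


class StepTimer:
    """Hypothetical helper (not Meadowrun's or Eliot's API): times named,
    nested steps within a single thread and keeps them in start order."""

    def __init__(self) -> None:
        self.steps: list[list] = []  # each entry: [depth, name, seconds]
        self._depth = 0

    @contextmanager
    def step(self, name: str):
        entry = [self._depth, name, 0.0]
        self.steps.append(entry)  # recorded on entry, so parents precede children
        self._depth += 1
        start = time.perf_counter()
        try:
            yield
        finally:
            entry[2] = time.perf_counter() - start
            self._depth -= 1

    def report(self) -> str:
        # Indent each step by its nesting depth.
        return "\n".join(f"{'  ' * d}{name}: {secs:.3f}s" for d, name, secs in self.steps)


timer = StepTimer()
with timer.step("run_function"):
    with timer.step("choose_instances"):
        time.sleep(0.01)
print(timer.report())
```

This only works within a single thread of a single process. It knows nothing about async tasks, other threads or remote processes, which is exactly the gap described next.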

In any case, measuring what Meadowrun does is a bit trickier. First, it starts processes on remote machines. Ideally, we’d have some kind of overview in which actions running on one machine that start actions on another machine are linked correctly.

Second, a lot of Meadowrun’s activity consists of calls to AWS APIs, connection requests or other kinds of I/O.

For both of these reasons, Meadowrun is not particularly well-suited to profiling. So we’re left with print statements. But surely something better must already exist?

Indeed it does. After some research online, I came across Eliot, a library that lets you log what it calls actions. Crucially, actions have a beginning and an end, and Eliot keeps track of which actions are part of other, higher-level actions. Moreover, it can do this across async, thread and process boundaries.

The way it works is pretty simple: you annotate functions or methods with a decorator, which turns them into Eliot-tracked actions. For finer-grained actions, you can also wrap any code block in a context manager.

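```python
import eliot


@eliot.log_call
def choose_instances():
    ...
    # A finer-grained sub-step, logged as a child action of choose_instances
    with eliot.start_action(action_type="subaction of select"):
        ...
```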

To make this work across processes, you also need to pass an Eliot identifier to the other process and put it in context there. That’s just two extra lines of code.

```python
import eliot

# Parent process (must be running inside an Eliot action)
task_id = eliot.current_action().serialize_task_id()

# Child process receives task_id somehow,
# e.g. via a command line argument, and continues the task under it
with eliot.Action.continue_task(task_id=task_id):
    ...
```

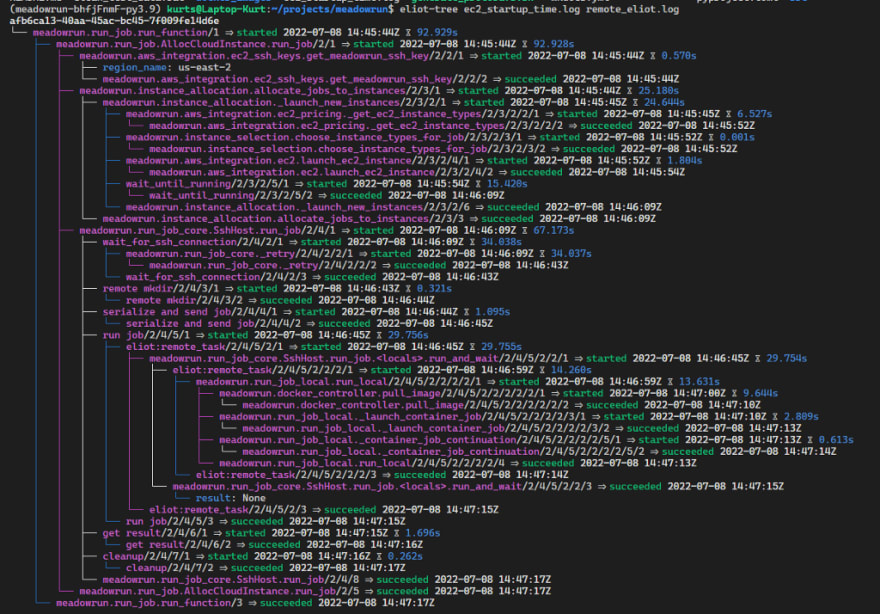

After including the Eliot actions, Eliot logs data to a JSON file. You’ll be able to then visualize it utilizing a useful command line program referred to as eliot-tree. Right here’s the results of eliot-tree displaying what Meadowrun does when calling run_function:

This is a great breakdown of how long everything takes, and it almost immediately led to all the investigations we’re about to discuss. For example, the whole run took 93 seconds, of which the job itself used only a handful of seconds; this was a cold start. In this instance, it took about 15 seconds for AWS to report that our new instance was running and had an IP address (wait_until_running), but then we had to wait another 34 seconds before we could actually SSH into the machine (wait_for_ssh_connection).
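eliot-tree renders the whole tree. If you also want totals per action type (for example, summed over many tasks in a run_map), you can read the JSON log directly. Below is a minimal sketch. It assumes Eliot’s standard message fields (`task_uuid`, `task_level`, `action_type`, `action_status`, `timestamp`). It also assumes an action’s start and end messages share `task_uuid` and the same `task_level` prefix. `action_durations` is a hypothetical helper, not part of Eliot.

```python
import json
from collections import defaultdict


def action_durations(lines) -> dict:
    """Sum wall-clock seconds per action_type from Eliot JSON log lines.

    Assumption: an action's "started" message and its "succeeded"/"failed"
    message share task_uuid and task_level[:-1].
    """
    starts = {}
    totals = defaultdict(float)
    for line in lines:
        if not line.strip():
            continue
        msg = json.loads(line)
        status = msg.get("action_status")
        if status is None:
            continue  # plain log message, not an action boundary
        key = (msg["task_uuid"], tuple(msg["task_level"][:-1]))
        if status == "started":
            starts[key] = (msg["action_type"], msg["timestamp"])
        elif key in starts:
            action_type, begin = starts.pop(key)
            totals[action_type] += msg["timestamp"] - begin
    return dict(totals)
```

For instance, `action_durations(open("eliot.log"))` (file name assumed) would map each action type, such as wait_for_ssh_connection, to its total seconds.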

Based on Eliot’s measurements, we tried (and didn’t always succeed!) to make improvements in a number of areas.

Cache all the things, some of the time

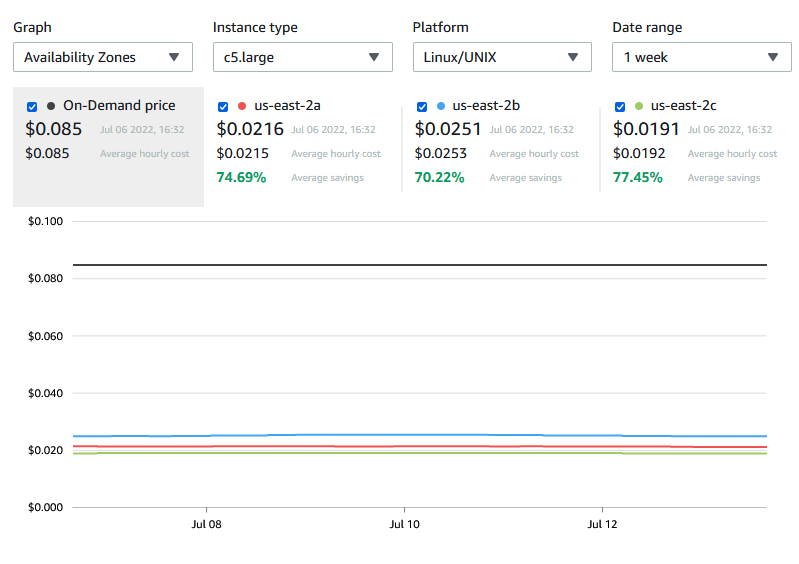

One of many quickest wins: meadowrun was downloading EC2 occasion costs earlier than each run. In follow spot and on-demand costs don’t change incessantly:

So it’s more reasonable to cache the download locally for up to 4 hours. That saves us 5–10 seconds on every run.
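A time-based cache like this can be as simple as checking the cache file’s modification time. Below is a minimal sketch, assuming a local JSON cache file. `cached_prices` and the `fetch` callable are hypothetical stand-ins, not Meadowrun’s actual code.

```python
import json
import time
from pathlib import Path
from typing import Callable

CACHE_TTL_SECONDS = 4 * 60 * 60  # 4 hours, as in the text


def cached_prices(cache_file: Path, fetch: Callable[[], dict],
                  ttl: float = CACHE_TTL_SECONDS) -> dict:
    """Return prices from cache_file if it is younger than ttl, else re-fetch.

    `fetch` is a hypothetical stand-in for whatever downloads EC2 prices.
    """
    try:
        if time.time() - cache_file.stat().st_mtime < ttl:
            return json.loads(cache_file.read_text())
    except (FileNotFoundError, json.JSONDecodeError):
        pass  # no usable cache: fall through and download
    prices = fetch()
    cache_file.parent.mkdir(parents=True, exist_ok=True)
    cache_file.write_text(json.dumps(prices))
    return prices
```

A call might look like `cached_prices(Path.home() / ".cache" / "ec2_prices.json", download_prices)`, where both the path and `download_prices` are assumptions. Using the file’s mtime means the cache survives across separate runs without any extra bookkeeping.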

All Linuxes are fast but some are faster than others

We can’t influence how long it takes AWS to start a virtual machine, but perhaps some Linux distributions boot faster than others. In particular, it would be nice if they started sshd sooner rather than later, which could cut down on waiting for the SSH connection.

Meadowrun currently uses an Ubuntu-based image, which according to our measurements takes about 30–40 seconds from the time AWS says the machine is running to when we can actually SSH into it. (This was measured while running from London to Ohio/us-east-2; if you run Meadowrun closer to home, you’ll see better times.)
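One way to measure the gap between “AWS says running” and “sshd reachable” yourself is to poll port 22 with a plain TCP connection. Below is a minimal asyncio sketch; `wait_for_port` is a hypothetical helper. Note that a successful TCP connect only shows that sshd is listening. It does not show that the SSH handshake or login will succeed.

```python
import asyncio
import time


async def wait_for_port(host: str, port: int = 22, timeout: float = 300.0,
                        interval: float = 1.0) -> float:
    """Poll until a TCP connection to host:port succeeds; return seconds waited."""
    start = time.monotonic()
    while True:
        try:
            # Each attempt gets at most `interval` seconds to connect.
            _reader, writer = await asyncio.wait_for(
                asyncio.open_connection(host, port), timeout=interval
            )
        except (OSError, asyncio.TimeoutError):
            if time.monotonic() - start >= timeout:
                raise TimeoutError(f"{host}:{port} not reachable after {timeout}s")
            await asyncio.sleep(interval)
            continue
        writer.close()
        try:
            await writer.wait_closed()
        except OSError:
            pass  # the peer may already have dropped the connection
        return time.monotonic() - start
```

Calling `await wait_for_port(public_ip)` right after the instance reports running should give a rough counterpart to the 30–40 seconds above. Here `public_ip` is assumed to come from the EC2 API.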

In any case, according to EC2 boot time benchmarking, some of which we independently verified, Clear Linux is the clear winner here. This is borne out in practice: it reduces the “wait until SSH” time from 10 seconds to a few seconds when connecting to a nearby region, and from 30 seconds to 5 seconds when connecting across the Atlantic.

Despite these results, so far we haven’t been able to switch Meadowrun to Clear Linux. First, it took a long time to figure out how to configure Clear so that it picks up the AWS user-data. At the moment we’re struggling to install Nvidia drivers on it, which are important for machine learning workloads.

We’ll see what happens with this one, but if Nvidia drivers are not a blocker and startup times are important to you, do consider using Clear Linux as the base AMI for your EC2 machines.

EBS volumes are slow at start-up

The next issue we looked at was Python startup time. To run a function on the EC2 machine, Meadowrun starts a Python process that reads the function to run and its pickled arguments from disk, and then runs it. However, just starting that Python process, without actually running anything, took about 7–8 seconds on first startup.

This seems high even for Python (yes, we still cracked the inevitable “we should rewrite it in Rust” jokes).

A run with -X importtime revealed the worst offenders, but unfortunately it’s mostly boto3, which we need in order to talk to the AWS API, for example to pull Docker images.
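`-X importtime` writes one line per import to stderr, giving self and cumulative times in microseconds. Below is a minimal sketch that runs a fresh interpreter with that flag and returns the slowest imports by cumulative time. `slowest_imports` is a hypothetical helper name.

```python
import subprocess
import sys


def slowest_imports(module: str, top: int = 5) -> list[tuple[int, str]]:
    """Run a fresh interpreter with -X importtime and return the `top`
    imports by cumulative time in microseconds, slowest first."""
    proc = subprocess.run(
        [sys.executable, "-X", "importtime", "-c", f"import {module}"],
        capture_output=True, text=True, check=True,
    )
    rows = []
    for line in proc.stderr.splitlines():
        if not line.startswith("import time:"):
            continue
        # Format: "import time: <self us> | <cumulative us> | <module>"
        parts = line[len("import time:"):].split("|")
        if len(parts) != 3 or not parts[1].strip().isdigit():
            continue  # skip the header row
        rows.append((int(parts[1]), parts[2].strip()))
    rows.sort(reverse=True)
    return rows[:top]
```

On the EC2 machine, `slowest_imports("boto3")` would be the natural call to try.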

Also, subsequent process startups on the same machine take a more reasonable 1–2 seconds. So what’s going on? Our first thought was file system caching, which was close but ultimately the wrong guess.
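Comparing the first startup with subsequent ones is easy to script. Below is a minimal sketch with `interpreter_startup_times` as a hypothetical helper. It launches the same interpreter several times in a row and returns the wall-clock durations. On a freshly booted machine, the pattern described above would show up as a slow first number followed by faster ones. Passing `code="import boto3"` should bring the measurement closer to a process that imports boto3, though that is our assumption about Meadowrun’s process.

```python
import subprocess
import sys
import time


def interpreter_startup_times(runs: int = 3, code: str = "pass") -> list[float]:
    """Time `runs` back-to-back launches of a fresh Python process running `code`."""
    times = []
    for _ in range(runs):
        start = time.perf_counter()
        subprocess.run([sys.executable, "-c", code], check=True)
        times.append(time.perf_counter() - start)
    return times
```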

It turned out that AWS’s EBS (Elastic Block Store), which provides the actual storage for the EC2 machines we were measuring, has a warmup time. AWS recommends warming up an EBS volume by reading the entire device with dd. That works, in the sense that after the warmup Python’s startup time drops to 1–2 seconds. Unfortunately, reading even a 16 GB volume takes over 100 seconds. Reading only the files in the Meadowrun environment instead still takes about 20 seconds. So this solution won’t work for us: the warmup takes longer than the cold start.
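The file-level variant can be sketched as follows: read every file in an environment once so that EBS fetches those blocks. `warm_up_files` is a hypothetical helper, and the 1 MiB chunk size is an arbitrary choice, not an AWS recommendation.

```python
import os


def warm_up_files(root: str, chunk_size: int = 1 << 20) -> int:
    """Read every regular file under `root` once, discarding the data,
    so the underlying blocks get fetched; return the number of bytes read."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path) or not os.path.isfile(path):
                continue  # skip symlinks, sockets and other special files
            try:
                with open(path, "rb") as f:
                    while chunk := f.read(chunk_size):
                        total += len(chunk)
            except OSError:
                continue  # unreadable file: skip it
    return total
```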

You can pay for “hot” EBS snapshots, which give warmed-up performance from the get-go, but they’re crazy expensive: you’re billed per hour, per availability zone, per snapshot, for as long as fast snapshot restore is enabled. This comes to $540/month for a single snapshot in a single availability zone, and a single region like us-east-1 has 6 availability zones!
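The $540 figure follows from hourly billing. Below is a small sketch of the arithmetic. It assumes a fast snapshot restore price of $0.75 per DSU-hour (our assumption for us-east-1; check current AWS pricing) and a 720-hour month.

```python
HOURS_PER_MONTH = 720  # 30 days x 24 hours, an approximation


def fsr_monthly_cost(snapshots: int, availability_zones: int,
                     price_per_dsu_hour: float = 0.75) -> float:
    """Fast snapshot restore cost per month: billed per snapshot, per AZ, per hour.

    The default price is an assumed us-east-1 list price, not an authoritative figure.
    """
    return snapshots * availability_zones * price_per_dsu_hour * HOURS_PER_MONTH


print(fsr_monthly_cost(1, 1))  # one snapshot, one availability zone
print(fsr_monthly_cost(1, 6))  # one snapshot, all six us-east-1 availability zones
```

Under these assumptions, `fsr_monthly_cost(1, 6)` gives 3240.0, or about $3,240/month to cover every us-east-1 availability zone with a single snapshot.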

At the end of the day, it looks like we’ll just have to suck this one up. If you’re an enterprise that’s richer than God, consider enabling hot EBS snapshots. For some use cases, reading the entire disk up front is a more appropriate and certainly cheaper alternative.

If your code is async, use async libraries

From the start, Meadowrun used Fabric to execute SSH commands on EC2 machines. Fabric isn’t natively async, though, whereas Meadowrun is. Meadowrun’s work is almost entirely I/O-bound, such as calling AWS APIs and SSH’ing into remote machines, so async makes sense for us.

The friction with Fabric caused some headaches, like having to start a thread to make Fabric appear async to the rest of the code. This was not only ugly but also slow: when executing a parallel distributed computation using Meadowrun’s run_map, Meadowrun spent a significant amount of time waiting for Fabric.
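The difference between bridging a blocking client through threads and using a native async client can be illustrated with stand-ins. In this minimal sketch, `blocking_ssh_command` and `native_ssh_command` are hypothetical placeholders (sleeps, not real SSH). The thread pool size is an assumption chosen to make the concurrency cap visible. This is not how Meadowrun actually wrapped Fabric, and the text doesn’t say pool size was the cause of the slowdown.

```python
import asyncio
import time
from concurrent.futures import ThreadPoolExecutor


def blocking_ssh_command(seconds: float) -> None:
    """Hypothetical stand-in for a synchronous (Fabric-style) SSH call."""
    time.sleep(seconds)


async def native_ssh_command(seconds: float) -> None:
    """Hypothetical stand-in for a natively async (asyncssh-style) SSH call."""
    await asyncio.sleep(seconds)


async def run_via_threads(n: int, seconds: float, workers: int) -> float:
    # The workaround: push each blocking call onto a thread pool so the event
    # loop stays responsive. Concurrency is capped at `workers` threads.
    loop = asyncio.get_running_loop()
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        await asyncio.gather(
            *(loop.run_in_executor(pool, blocking_ssh_command, seconds) for _ in range(n))
        )
    return time.perf_counter() - start


async def run_natively(n: int, seconds: float) -> float:
    # Native coroutines: all n waits overlap on the event loop's single thread.
    start = time.perf_counter()
    await asyncio.gather(*(native_ssh_command(seconds) for _ in range(n)))
    return time.perf_counter() - start
```

With 8 calls of 0.1 seconds and 2 worker threads, the threaded version needs about four rounds (roughly 0.4 seconds), while the native version overlaps all eight calls.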

We’ve now fully switched to a natively async SSH client, asyncssh. Performance for run_map has improved considerably. With Fabric, the median execution time over 10 runs of a map with 20 concurrent tasks was about 110 seconds. With asyncssh, this dropped to 15 seconds, a 7x speedup.

Conclusion

Not everything you discover while optimizing is actionable, and that’s okay: at the very least, you’ll have learnt something!

Takeaways you could apply in your own adventures with AWS:

- If startup time is important, try using Clear Linux.

- EBS volumes need some warmup to reach full I/O speed; you can avoid this if you have lots of money to spend.